NVIDIA’s GPU Technology Conference (GTC, held March 25-29) is this editor’s favorite annual tech event. We have attended eight out of nine GTCs since NVISION 08, and GTC 2018 was the best. The GTC is an important trade event held at the San Jose Convention Center where every major auto maker, most major labs, and every major technology company are represented, yet GeForce gaming is absolutely not the focus. However, participating cooperatively in amazing VR demos, connecting with major manufacturers, catching up with friends and making new ones, and visiting NVIDIA’s new headquarters made this event especially memorable.

Although a one-day pass to this 5-day conference costs $650, it gets more crowded with each successive year, and over 8,000 professionals attended this year’s event. In fact, Jensen’s keynote address was so crowded this year that the media tables were removed to accommodate more attendees! The GTC has almost outgrown its venue, and next year there may be a need for overflow rooms or for a larger keynote auditorium. However, little attention was given to the GPU for gaming, which is about half of NVIDIA’s business.

The GeForce consumer press was not invited to GTC, but VR is prominently featured there, and BTR was invited as a top VR benching site. In fact, we got to meet with VIVE and we privately auditioned the very impressive and soon-to-be-released VIVE Pro, which we will feature and use in our upcoming FCAT-VR benchmarking reviews.

The GTC is all about the GPU and harnessing its massive parallel power to significantly improve the way humans compute and play games. This year, the emphasis continues to be on AI and on deep learning, which affects every industry, including automotive. Analyzing massive data sets for deep learning requires progressively faster GPUs, and NVIDIA is delivering in spades. These GPUs are co-processors with the CPU, and they basically use the same architecture whether used for AI or for playing PC games.

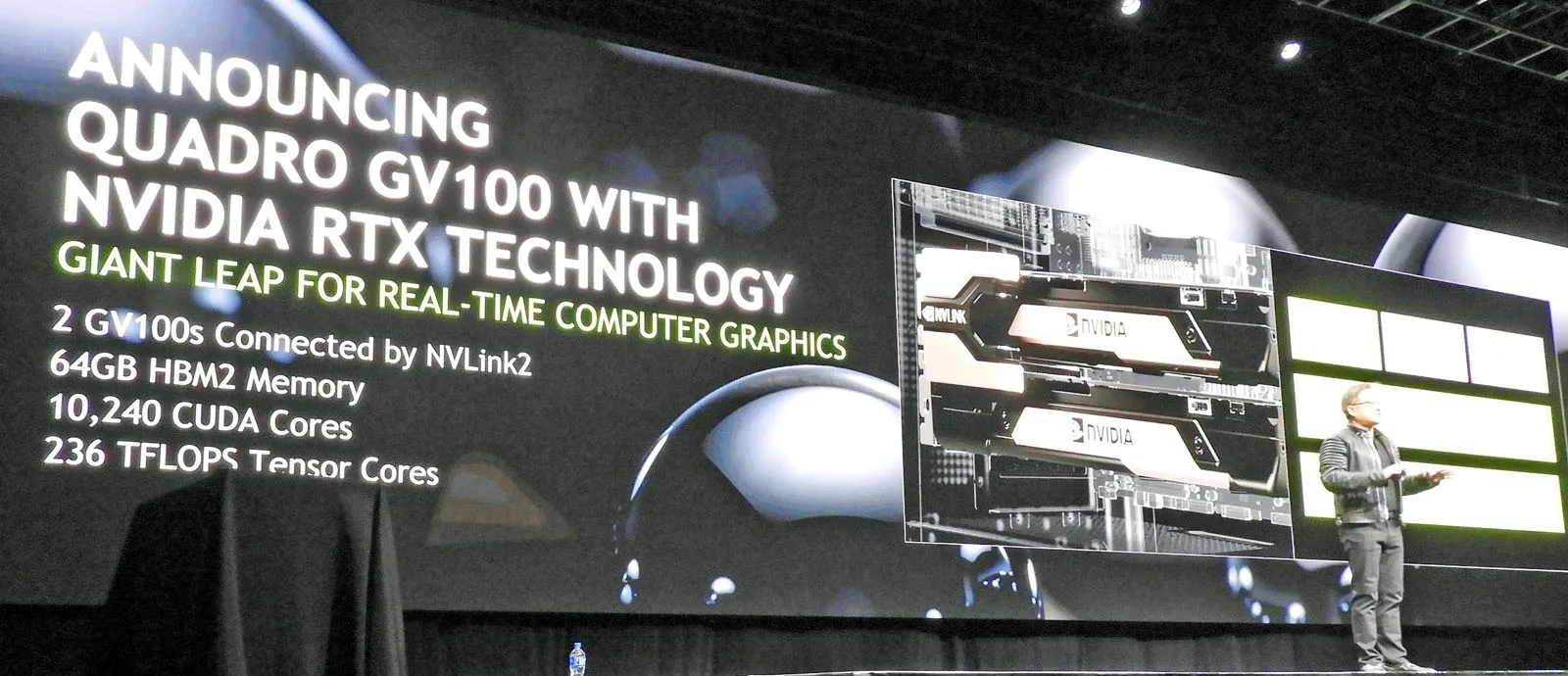

Last year, a new Volta architecture was announced by NVIDIA’s CEO Jensen during the 2017 keynote featuring the GV100 Tesla for high performance computing (HPC). Now, a year later, the GV100 has been upgraded to 32 GB of HBM2 from 16 GB, the Quadro GV100 has been launched with two cards able to pool 64 GB of HBM2 memory over NVLINK2, the world’s largest linked GPU was launched at $399,000, and gamers are still waiting for Volta GeForce video cards.

NVIDIA’s Project Holodeck was another big announcement made at last year’s GTC that interested gamers. This year’s keynote followed up with a demonstration of a driver inside the auditorium (in VR inside the Holodeck) piloting a car outside in the parking lot as though he were actually inside the car, with the same view that a driver would have (but from VR). And three Ready Player One Escape Room team members, stationed in three different rooms, played cooperatively in VR as though they were together in the same (virtual) room! It’s a taste of what is possible for gamers using a simplified Holodeck and what may be coming soon to VR arcades.

Project Holodeck was introduced as a concept at last year’s GTC, and today it can be used in practice as a driving simulator as well as for a gaming-oriented project that could eventually become massively multiplayer once VR streaming becomes possible over the Internet. We also experienced VIVE’s own impressive version of a holodeck centering around a supercar when we previewed the upcoming Pro.

The GTC at a Glance

We made the eight-hour drive to San Jose from our home near Palm Springs in heavy traffic to arrive on Monday evening. We want to thank NVIDIA for inviting us to GTC 2018 as Press, as well as for our hotel accommodation. The Marriott’s wireless broadband was sufficiently fast at 7 MB/s to play Kingdom Come: Deliverance and Borderlands: The Pre-Sequel both with completely maxed-out settings using NVIDIA’s GeForce NOW beta on our 12.6″ notebook with absolutely fluid results. We are totally impressed with this amazing low latency cloud service and will use it whenever we are away from home.

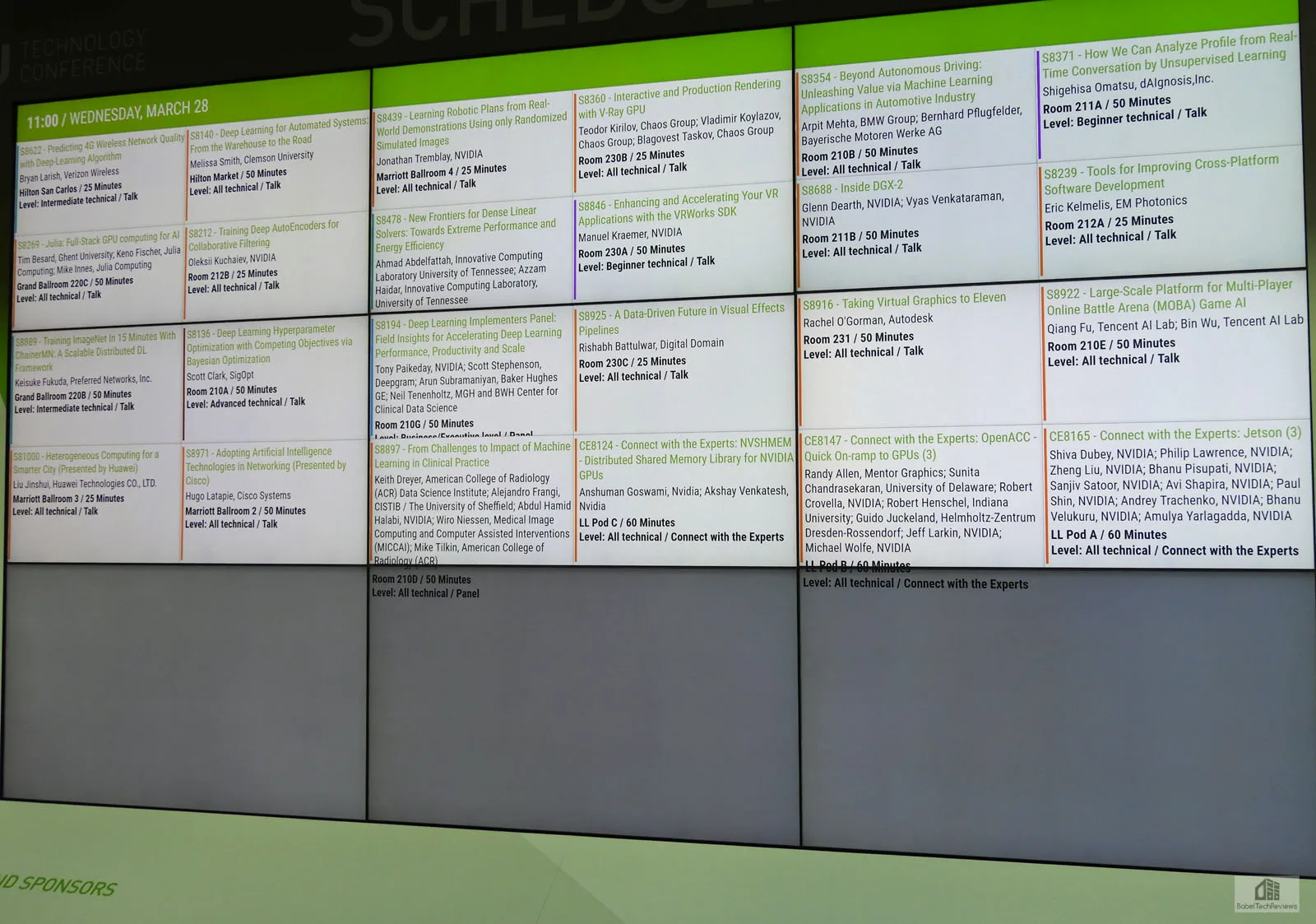

To help the GTC attendees keep on schedule, electronic signs post the sessions’ details. Here is Wednesday’s GTC schedule “at a glance” in a rare moment captured with no one around. NVIDIA also provides a customizable GTC app that is very helpful to keep attendees on track as there are more events available to attend than anyone can hope to see in person. Fortunately, the sessions are recorded and attendees can browse the recorded GTC session library after the conference.

Posters and books

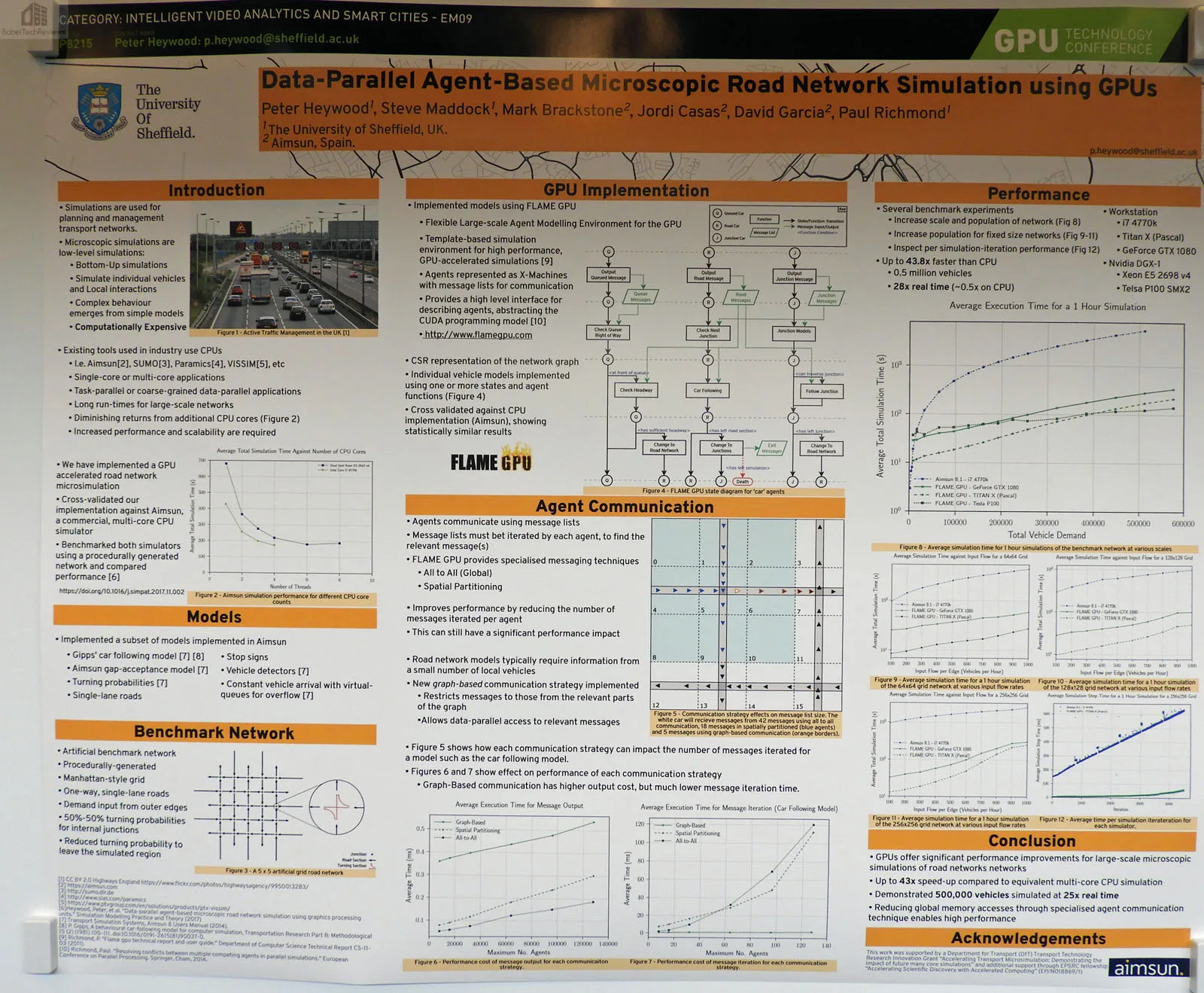

Hundreds of posters demonstrating the myriad of uses for the GPU were again featured. It would be interesting to see them digitized for future GTCs so paper and room could be conserved.

There was also a competition in which attendees voted for the best poster, and the above poster is one of the five finalists. And believe it or not, some attendees still actually buy and read text in a traditional ink-on-paper book format!

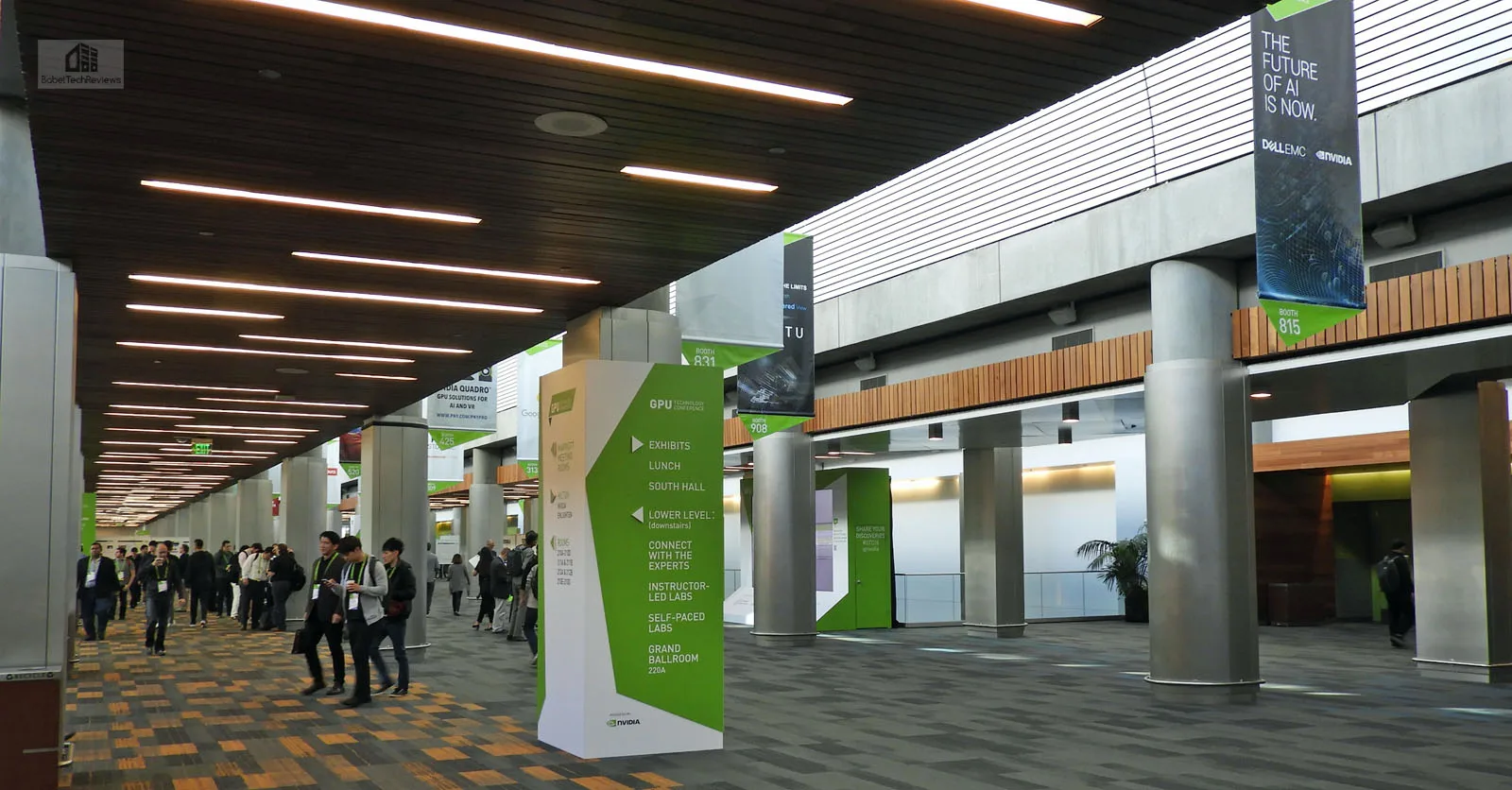

After years of experience, NVIDIA has got the logistics of the GTC completely right even as the attendance grows year-over-year. It runs very smoothly considering that they also make sure that lunch is provided for each of the thousands of full-pass attendees daily, and they offer custom dietary choices, including vegan and “gluten free”, for which this editor is personally grateful. The NVIDIA employees who staff the GTC are extraordinarily friendly and helpful.

We spent all day Tuesday and a half day on Wednesday at GTC 2018, and will focus on the keynote as well as our experiences with VR and with some of the other exhibits which included cars, RTX, Robotics, “growing” metal parts like a plant and printing them, and more. Of course, the highlight of any GTC is the Keynote address by NVIDIA’s CEO, Jen-Hsun (“Jensen”) Huang.

Tuesday, March 27

Opening Keynote

The Keynote hall was beyond packed and the press had to line up at 8 AM to be assured of a seat by the 9 AM start time. This time, there were no tables for the press, and our notebooks became laptops. I found a seat in the front row and to the left of the stage. Here are some of the important highlights from Jensen’s 2-1/2 hour Keynote which set the stage for the rest of the conference.

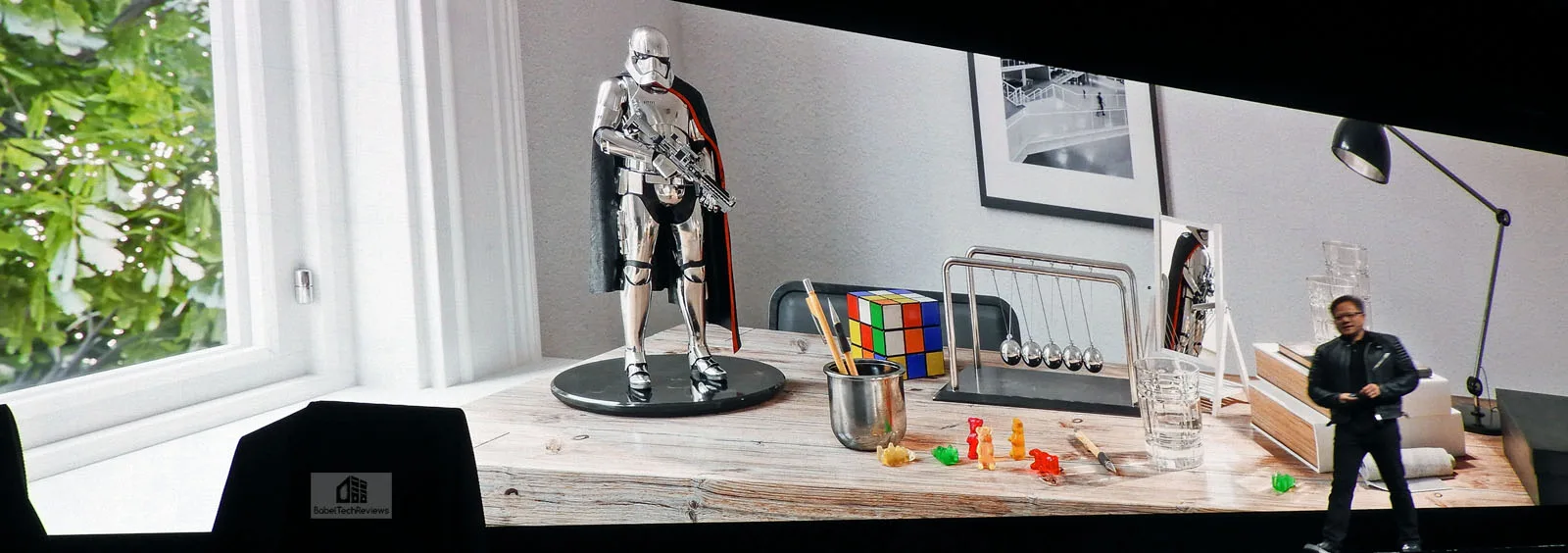

Jensen began by pointing out that photorealistic graphics use ray tracing (RT), which requires the kind of extreme calculations used by the film industry. RT in real time has been the holy grail for GPU scientists for the past 40 years. An impressive Unreal Engine 4 RTX demo running in real time on a single $68,000 DGX using 4 Volta GPUs was shown. It was noted that without using deep learning algorithms, RTX in real time is impossible.

Media and entertainment professionals can now see and interact with their creations with accurate lighting and shadows, and do complex renders up to 10 times faster than with a CPU alone by using NVIDIA’s RTX when combined with the new Quadro GV100 GPU. NVIDIA RTX technology was introduced last week at the annual Game Developers Conference and it is supported by 25 of the world’s leading professional design and creative applications with a combined user base of more than 25 million customers.

The Quadro GV100 GPU, with 32 GB of memory that is scalable to 64 GB with multiple Quadro GPUs using NVIDIA NVLink interconnect technology, is the highest-performance platform available for RTX applications. Based on NVIDIA’s Volta GPU architecture, the GV100 provides 7.4 teraflops of double-precision, 14.8 teraflops of single-precision and 118.5 teraflops of deep learning performance. And the NVIDIA OptiX AI-denoiser built into NVIDIA RTX delivers almost 100x the performance of CPUs for real-time, noise-free rendering. Check out the RTX video under ‘Wednesday’ which shows this very quick RT denoising.

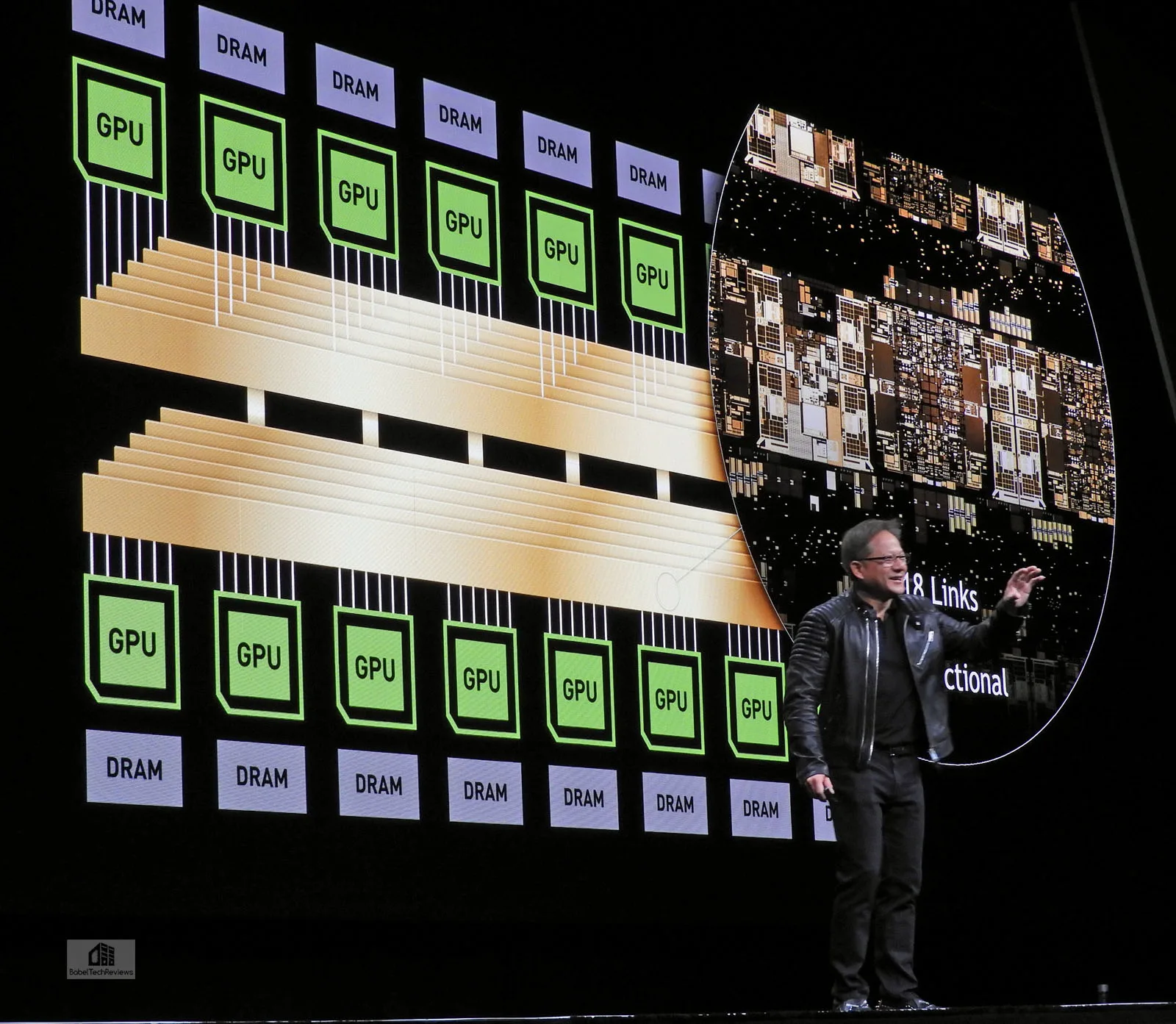

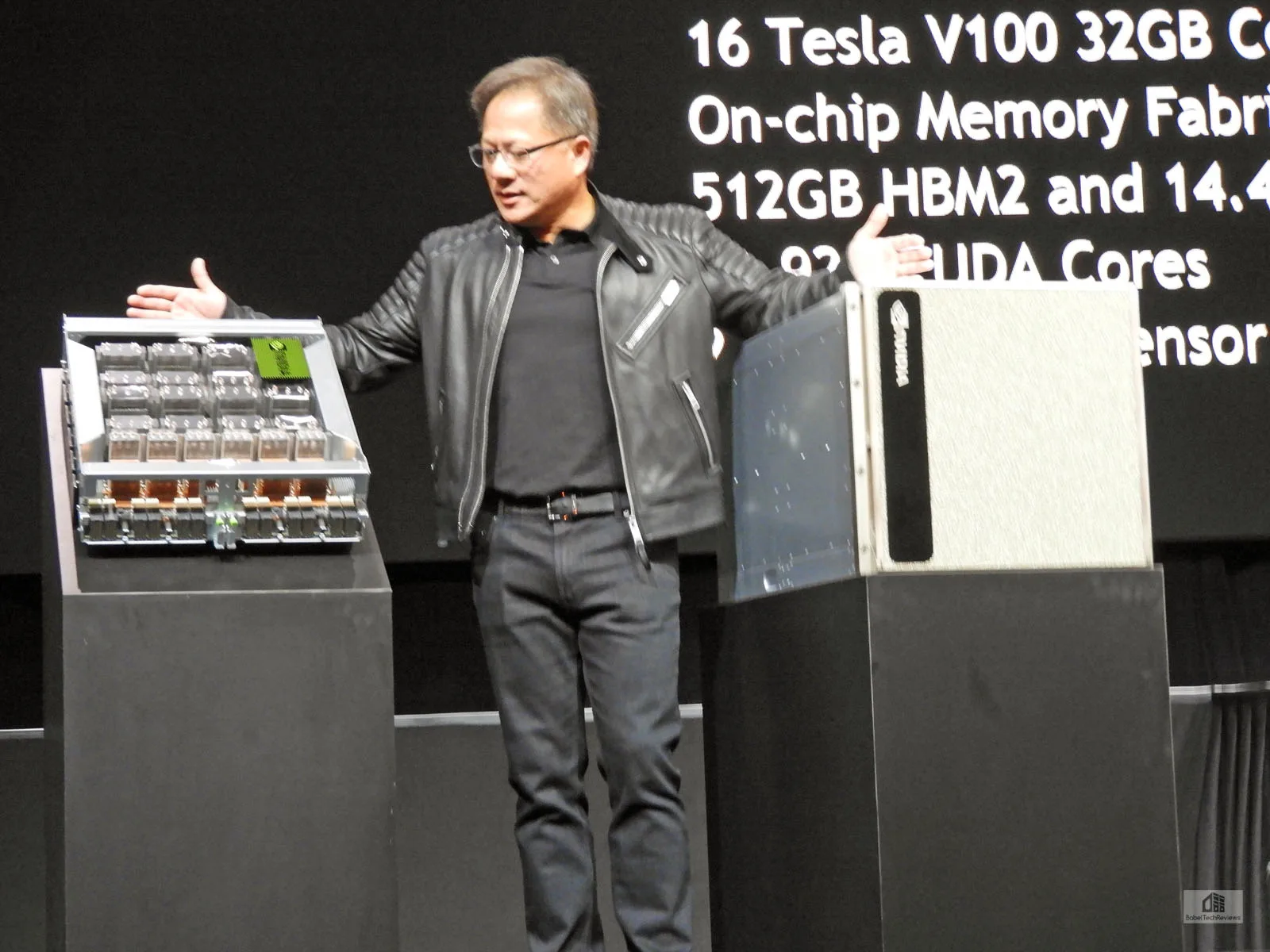

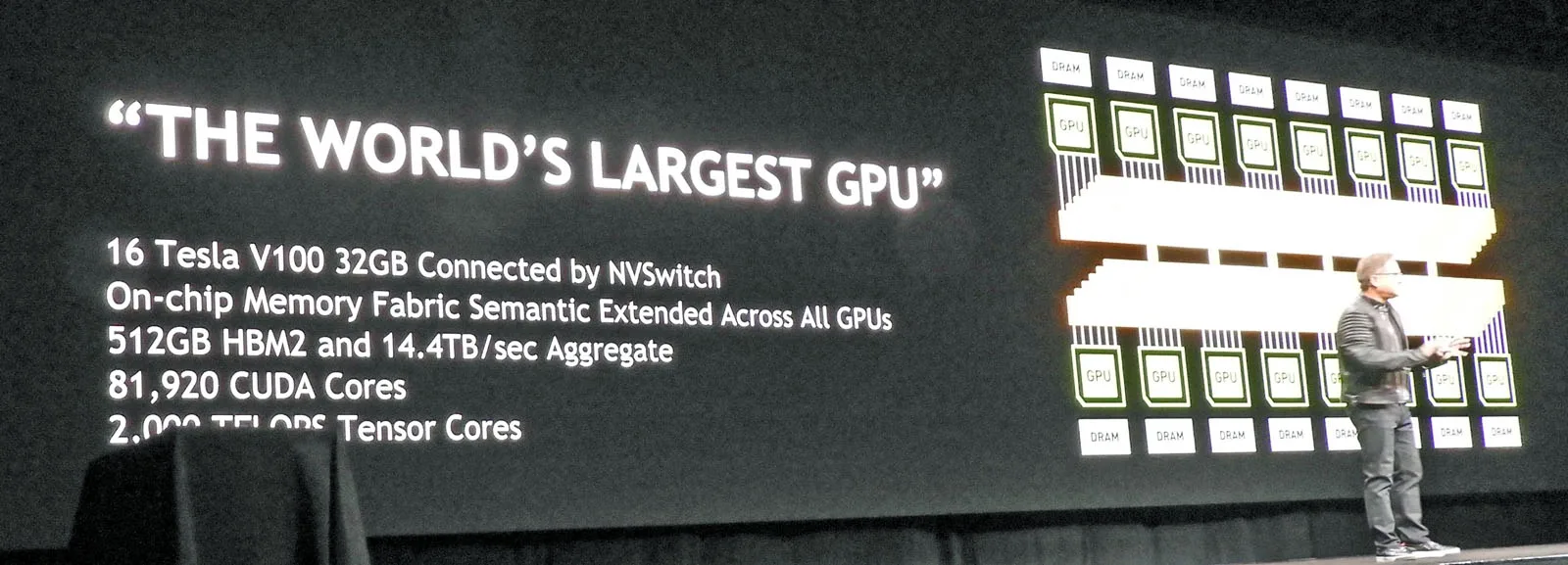

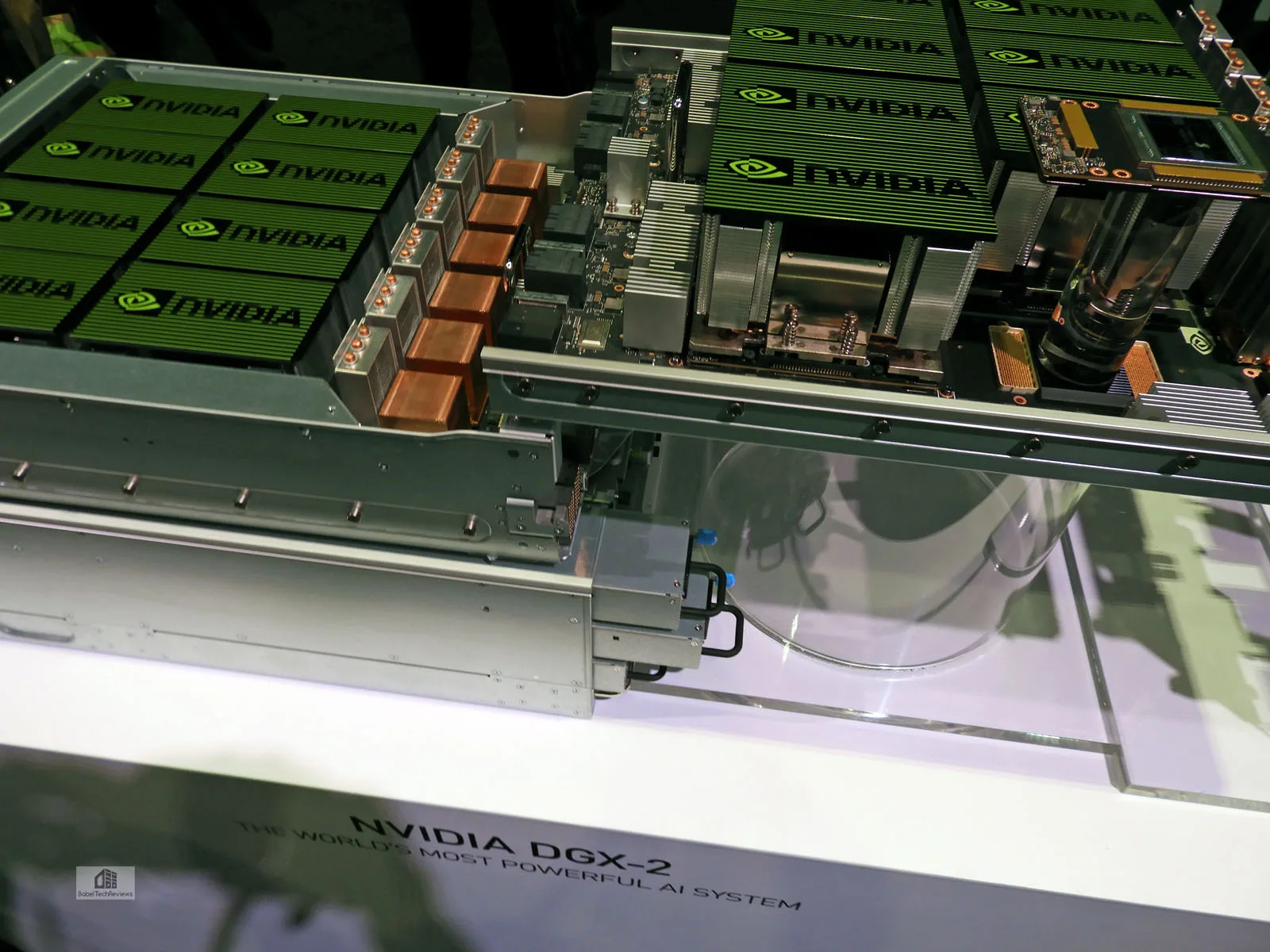

NVIDIA unveiled a series of advances to boost performance on deep learning workloads by a factor of ten compared with the generation introduced just six months ago. Advancements include a doubling of the Tesla V100’s memory and a new GPU interconnect fabric called NVSwitch, which enables up to 16 Tesla V100 GPUs to simultaneously communicate at a record speed of 2.4 terabytes per second using 512 GB of interconnected memory.
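The 512 GB figure follows directly from the numbers quoted in the keynote: 16 GPUs, each with the upgraded 32 GB of HBM2. Here is a minimal Python sketch of that arithmetic; the function and constant names are our own for illustration and do not come from any NVIDIA tool.

```python
# Back-of-the-envelope check of the pooled memory figure quoted above.
# The names below are illustrative only, not part of any NVIDIA API.

def pooled_memory_gb(gpu_count: int, memory_per_gpu_gb: int) -> int:
    """Total memory visible when every GPU's memory is pooled together."""
    return gpu_count * memory_per_gpu_gb

GPU_COUNT = 16           # Tesla V100 GPUs linked through NVSwitch (from the keynote)
MEMORY_PER_GPU_GB = 32   # upgraded V100 memory, doubled from 16 GB

total_gb = pooled_memory_gb(GPU_COUNT, MEMORY_PER_GPU_GB)
print(f"{GPU_COUNT} GPUs x {MEMORY_PER_GPU_GB} GB = {total_gb} GB pooled")
```

With the original 16 GB V100, the same 16 GPUs would have pooled only 256 GB, so the memory doubling matters as much as the new switch.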

Every GPU can communicate with every other GPU at 20x the bandwidth of PCIe 3.0 using a non-blocking switch, not a network, with low latency. Excellent thermal management keeps the 10 kW of GPUs and electronics cool in a chassis weighing 350 pounds, all for $399,000, and multiple systems can be connected.

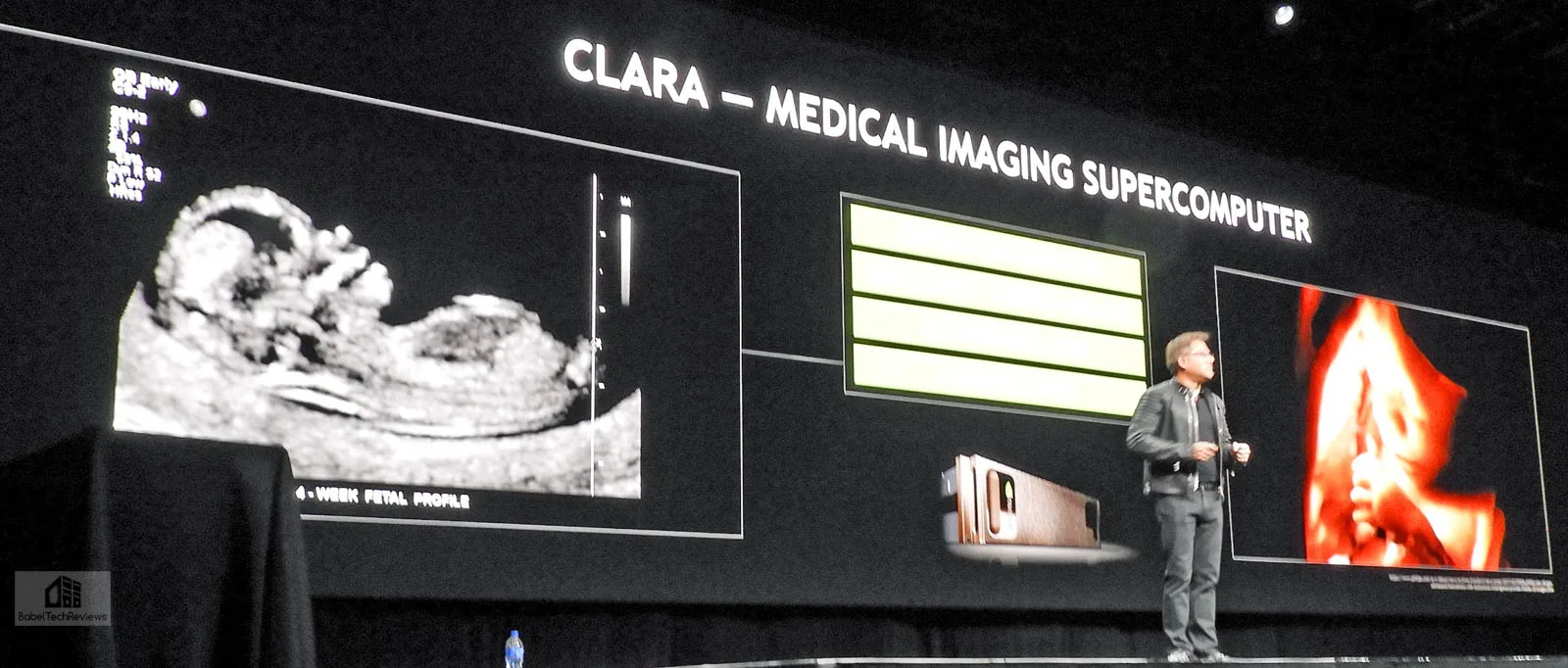

Jensen pointed out that a comparable CPU render farm costs 5 times more and uses 7 times the power while taking 7 times the space.

Jensen then moved on to the medical field, noting that there are currently 3 million instruments in use with only 100K new ones each year. Cloud data centers using Project CLARA can take advantage of a medical imaging supercomputer in a remote data center so any hospital can upgrade their instruments virtually.

For example, a medical team can stream their black and white ultrasound 2D image into the CLARA supercomputer to make it 3D and then improve it further using deep learning to accurately extrapolate a color 3D segmented image that provides a lot more information than the original.

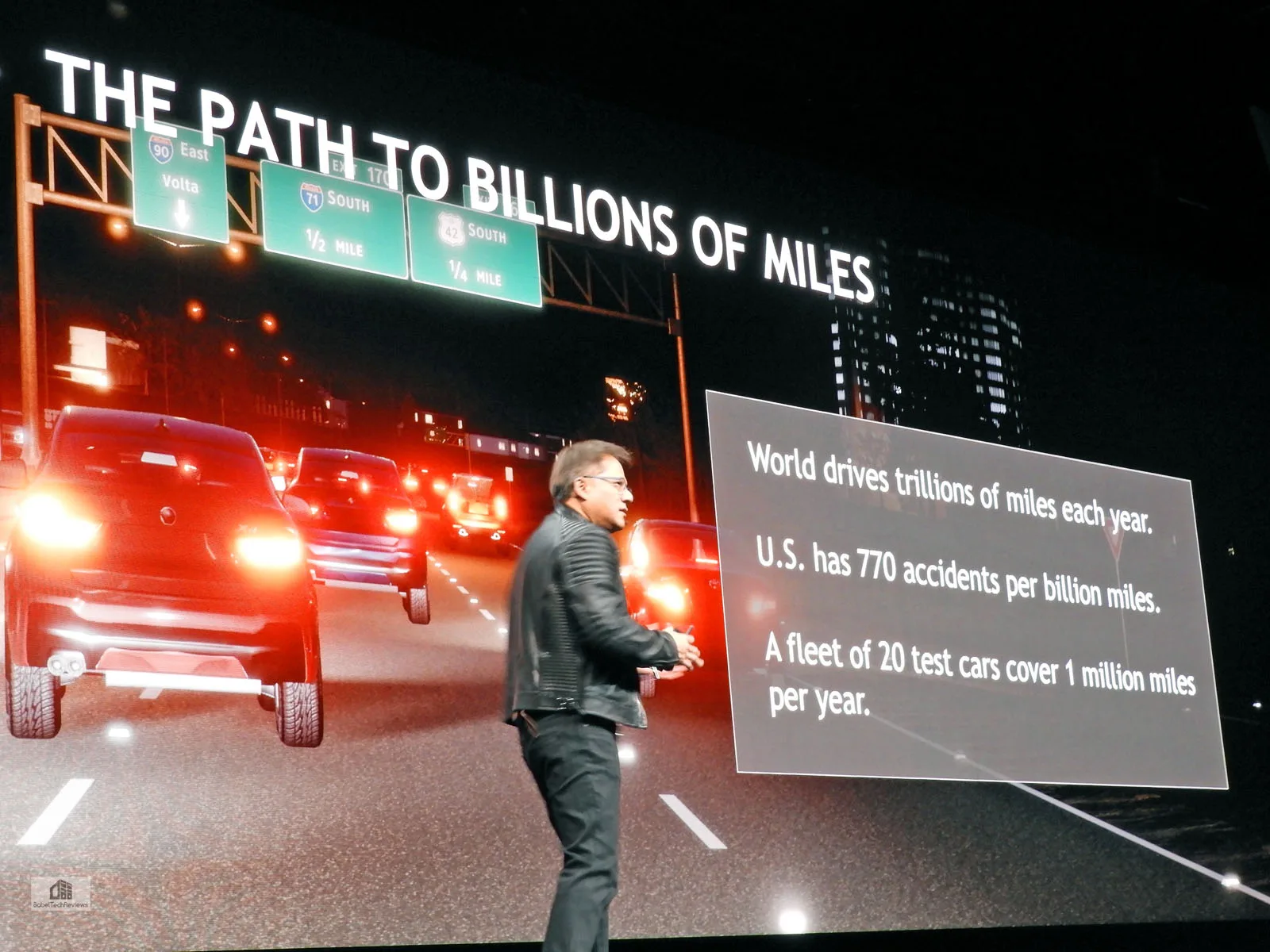

Jensen then moved on to automotive to show that deep learning is essential to that trillion dollar a year mega industry. NVIDIA believes that self driving will ultimately benefit humankind and save lives. But creating a completely autonomous vehicle is one of the most difficult challenges ever, and NVIDIA believes they can solve it using the next generation of drive platform called Orin, which puts 2 Drive Pegasus in 1 SoC.

Humans drive 10 trillion miles a year. But 20 autonomous test cars drive only 1,000,000 miles in a year which is not enough to train these cars. So enter Project Holodeck which allows for the training of autonomous cars’ deep learning in a simulator.
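To put that gap in perspective, here is a quick Python sketch using only the two figures Jensen quoted. The variable and function names are ours, and the assumption that the fleet’s mileage stays constant from year to year is a simplification for illustration.

```python
# Rough scale comparison using the keynote's figures.
# Assumes (for illustration only) that the test fleet's yearly mileage never changes.

HUMAN_MILES_PER_YEAR = 10_000_000_000_000  # 10 trillion miles driven by humans
FLEET_MILES_PER_YEAR = 1_000_000           # miles driven by the test fleet
FLEET_SIZE = 20                            # autonomous test cars

def years_to_match(target_miles: int, miles_per_year: int) -> float:
    """Years of driving needed at a constant yearly rate to reach target_miles."""
    return target_miles / miles_per_year

miles_per_car = FLEET_MILES_PER_YEAR // FLEET_SIZE
years_needed = years_to_match(HUMAN_MILES_PER_YEAR, FLEET_MILES_PER_YEAR)

print(f"Each test car drives about {miles_per_car:,} miles a year")
print(f"The fleet would need {years_needed:,.0f} years to match one year of human driving")
```

At that rate the test fleet would need ten million years to cover what humans drive in one, which is why simulated miles are so attractive.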

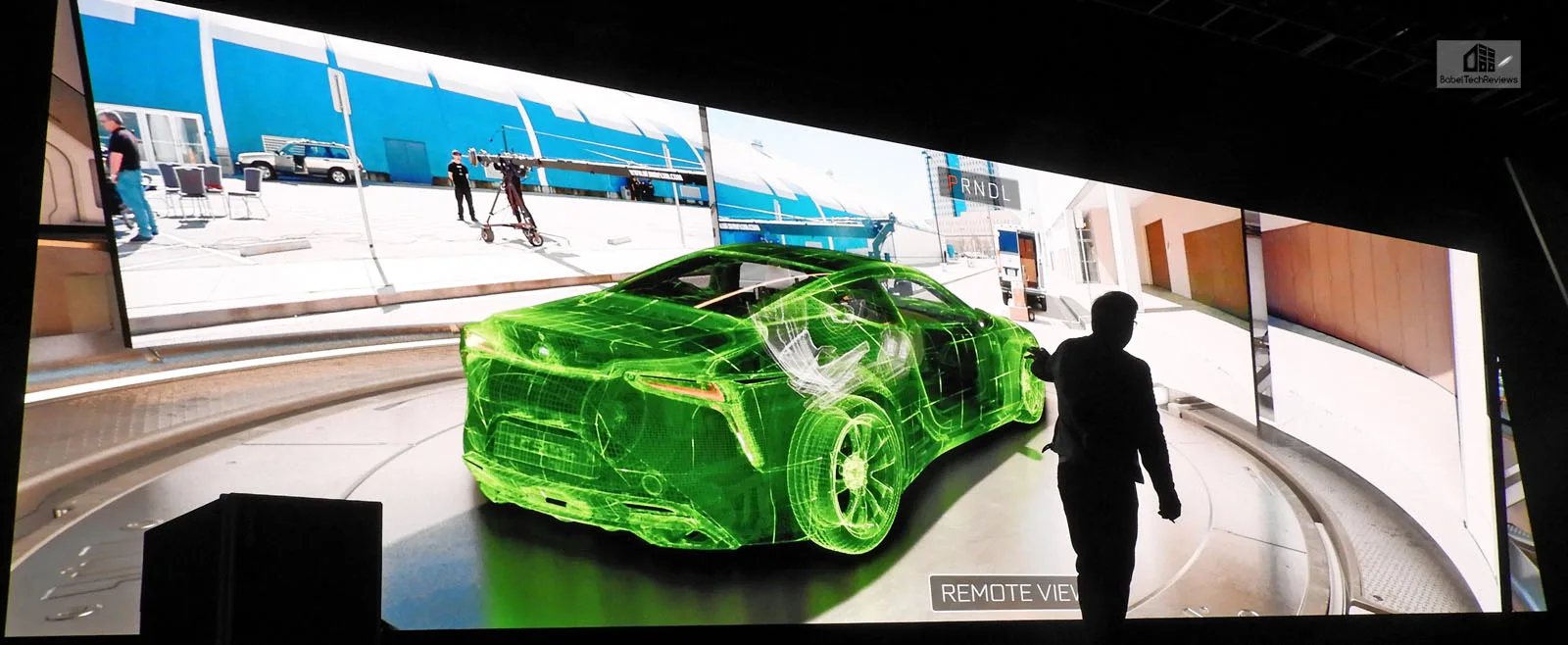

The Holodeck allows for 3 layers of inception, with a driver in VR piloting a real car in the real world from the Holodeck using Remote Drive and Remote View. The same approach can be applied to a robot that goes into dangerous places: the operator can teleport into the robot via VR and control it as easily as driving a car.

Jensen’s keynote speech was inspiring although it ran about one-half hour over.

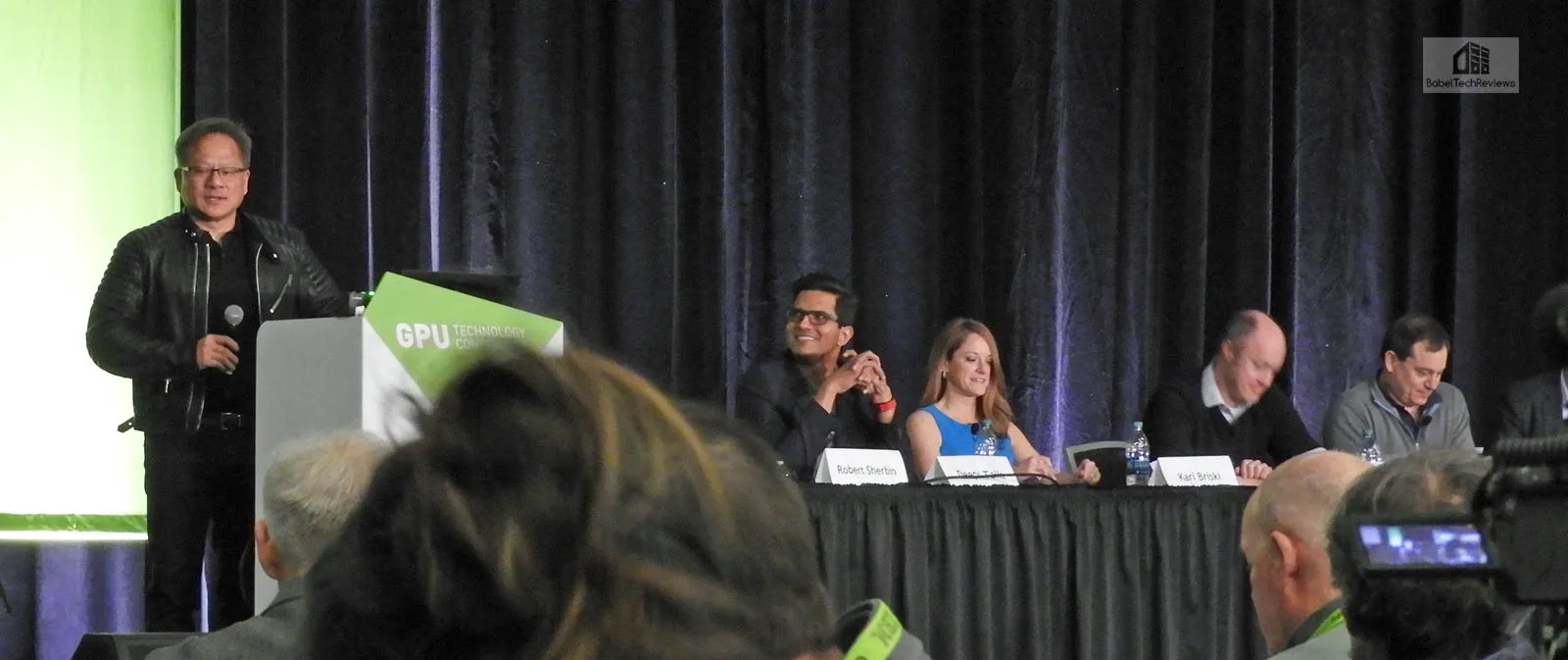

Press Q&A

After Jensen’s keynote ended, the press moved to the Q&A where lunch was ready. Jensen was there for Q&A at a GTC for the first time since Fermi, and he covered the press questions about the fatal driving accident that Uber had the week before. Jensen revealed that NVIDIA was going to be cautious and suspend real-world autonomous testing until the results of the investigation are revealed, even though Uber wasn’t using NVIDIA’s platform.

The rest of the Q&A was unfortunately limited to one or two questions, and the press were directed to ask their NVIDIA contacts. Of course, we did not expect any answers about upcoming GeForce products and we did not ask.

Next we headed off to a private meeting with VIVE.

Networking/Exhibits/VR

VIVE and the VIVE Pro

We got to spend more time in VR with the VIVE Pro than we spent at NVIDIA’s private exhibit at CES. We noted that the new HMD is significantly lighter and more comfortable than the current HMD, and best of all for us, it is adjustable to easily fit over our regular eyeglasses. We are uncomfortable using our contact lenses just for VR, and we had to buy a pediatric pair of glasses just to wear our current HMD.

We think that the best thing about the VIVE Pro is the increased resolution that makes reading text no longer a difficult task. This is great for RPG gamers, but especially useful for developers and those who share text in virtual reality for their work. That is why the VIVE Pro is a prosumer device. It will naturally be adopted by gamers who are at the cutting edge, but it is arguably more important and useful for professionals with applications for developers as well as for the medical field, government, and aviation industries who use VR for training and for simulations.

We got to check out VIVE’s version of a holodeck using a supercar that could be viewed from all angles including from inside the engine. It was very impressive and it leveraged the higher resolution of the Pro to demonstrate what could be accomplished today in VR using consumer hardware.

We look forward to reviewing the VIVE Pro and we will benchmark VR games using its more demanding resolution. We just purchased and downloaded Skyrim VR, and we look forward to playing it and benchmarking it today for an upcoming 20-game VR showdown between AMD’s and NVIDIA’s top GPUs.

Other Exhibits

NVIDIA had several large sections in the main exhibit hall including one for the BFGD 4K HDR 65″ gaming displays, but instead we concentrated on VR and some other unusual cutting edge exhibits. Below we see a VR HMD that uses 5K resolution with a 170 degree field of view. However, unlike the VIVE Pro, HMDs like this generally require workstations to power them and far more graphics power than consumer or prosumer graphics card set-ups can currently provide.

Wireless is absolutely crucial for mass VR adoption, and we already see several solutions for the VIVE consumer and Pro VR HMDs.

Many of NVIDIA’s major partners in professional GPU computing were represented with their servers, including Dell, HP, IBM, and Super Micro, major sponsors of the GTC. And this year, there was a jam-packed VR Village where you had to make an appointment, or miss out! We made an appointment for the next day for the Ready Player One Escape Room demo and we had no idea what to expect.

Our hotel room had a rare private balcony and the weather cooperated as friends we hadn’t seen in a long time stopped by to chat and catch up on news and rumor.

Wednesday, March 28

The Ready Player One ESCAPE ROOM VR demo

We demo’d Ready Player One using the Vive Pro and were matched up with two programmers who were much younger. The Holodeck software is based on NVIDIA’s existing technology including VRWorks, DesignWorks, GameWorks, and it requires NVIDIA’s fastest GPUs.

NVIDIA partnered with Warner Bros and HTC VIVE using assets from Ready Player One. Players are transported to the year 2045 and to Aech’s basement for an escape room-style experience. To exit, players must cooperatively solve one puzzle which triggers the next. Teams that work together to complete the challenge within the set time are rewarded with success.

The three of us were stationed in three separate rooms and each of us put on an HMD to be instantly transported into VR so that we could interact as though we were sharing the same large virtual room that was populated with a lot of items. Unfortunately, my own controller had some difficulty with interaction, but after searching the room together for clues, I found the key, a coin, which was used to operate a retro Joust arcade console so we could play against each other to escape from the room. I was the only one present who had actually played Joust on an arcade machine in the 1980s.

It was a lot of fun playing cooperatively in VR in a type of Holodeck, and it shows what can be accomplished now in VR arcades and eventually cooperatively over the Internet. But the Holodeck is also a powerful platform for content creation. Designers can create virtual worlds and import models directly from their applications into Holodeck without any compromises.

In addition to VR and Project Holodeck, Isaac advances training and testing of robots by using simulations to model all possible interactions between a robot and its environment. The Isaac robot simulator provides an AI-based software solution for training robots in highly realistic virtual environments and then transferring what is learned to physical units. Isaac is built on Epic Games’ Unreal Engine 4.

Attendees also got to interact with Isaac in VR by teaching it how a human would make pretzels with sticky dough as if he only had two digits like a robot does. I doubt that Isaac learned anything other than what not to do from my failed attempts, but I am glad to have contributed a little to his database.

Engineering and testing that would normally take months can be done in minutes. Once a simulation is complete, the trained system can be transferred to physical robots.

These industrial robots are taking over the repetitive and sometimes dangerous tasks that humans perform.

It was impressive to see 360 video streaming using a notebook and an external enclosure containing a GV100. Below is Times Square in New York City.

Other Demos – RTX, Growing metal, Robotics, VR & motion capture

Time is of the essence for most developers, and Ray Tracing (RT) takes a lot of time. By coupling deep learning with denoising similar to what was presented at last year’s SIGGRAPH, RTX and the GV100 speed up production rendering so much that the developer is nearly working in real time instead of waiting seconds or even minutes to preview their changes!

One of the most interesting demos used FLEX to design and “grow” suitable metal parts much like a plant grows subject to forces of wind and gravity to make them stronger. After the part is finalized, the design can be sent to a 3D metal printer for immediate printing and use.

Cars with varying levels of autonomy were also featured, including NVIDIA’s Robo race car.

We are reminded that Google and just about every other major cloud platform use NVIDIA’s GPUs for inferencing and deep learning.

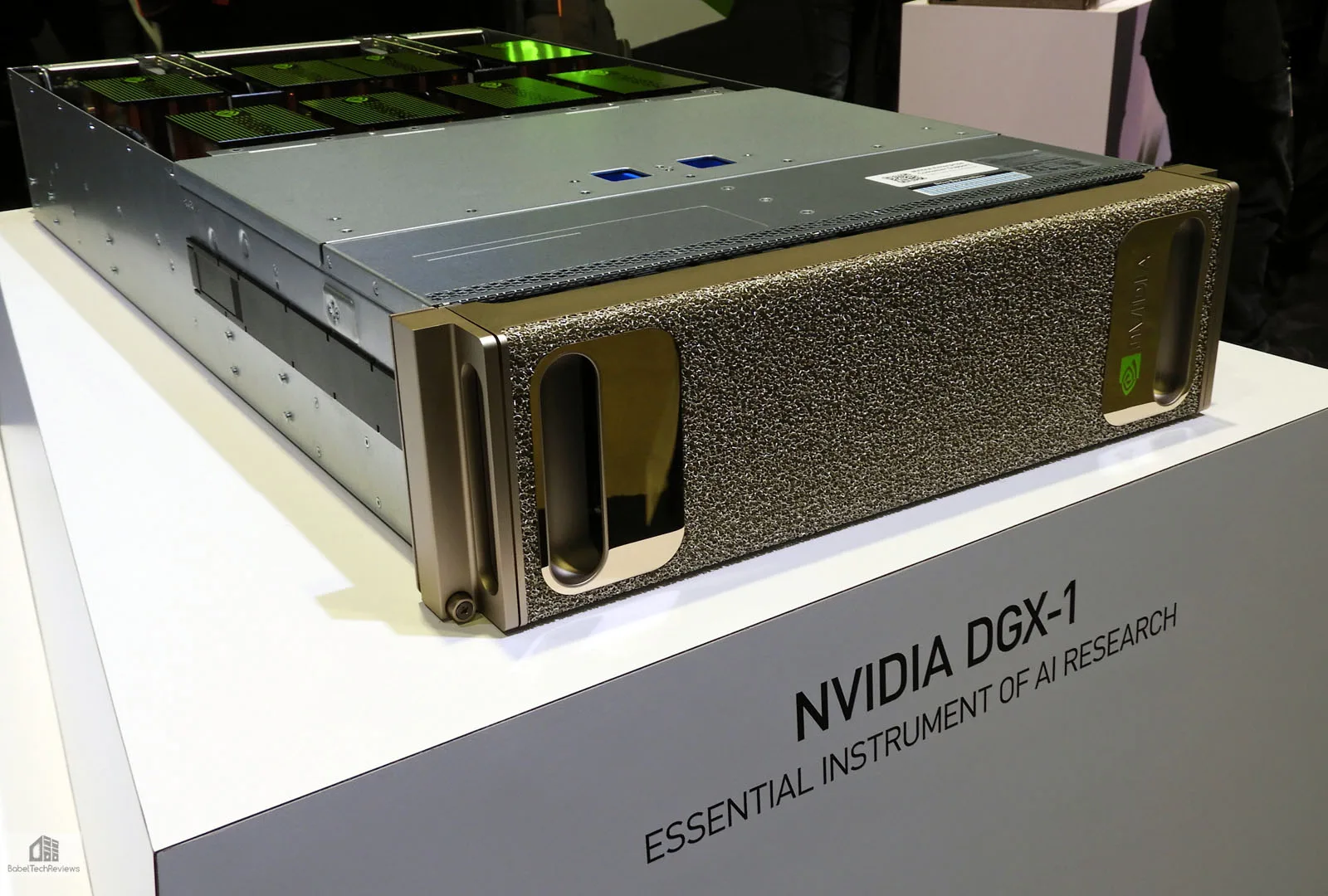

NVIDIA showed off the DGX-1 in gold. And NVIDIA also displayed their DGX-2 and we are reminded of Jensen’s keynote slogan, “the more you buy, the more you save”, referring to the time element as money for those who require their calculations be done faster and faster.

And some of GTC 2018 was for fun, even though the implications of using a webcam for motion capture and for facial emotion recognition are obvious for game development. OPTIX presented this interactive demo for all to see right in the main hallway of the GTC.

We are reminded that the GTC is all about people. Helpful people. People with passion for GPU computing and desire to share and learn. The GPU and/or VR cannot yet replace face-to-face human contact. Everywhere we saw people networking with each other. We also engaged in it and our readers may ultimately see improvements to this tech site because of knowledge gained from GTC 2018.

NVIDIA’s Headquarters

NVIDIA’s headquarters are only about a 15 to 20 minute drive from the San Jose Convention Center, depending on traffic, of course. We had asked for a tour of NVIDIA’s HQ months ago and were met by NVIDIA’s top GeForce representative, and we were also joined by an industry insider whom we both knew from years ago.

One is struck immediately by the impressive industrial design and the symmetry of the architecture, and the triangle motif is repeated almost endlessly.

There are a lot of stairs and it is very easy to get confused inside so as to lose direction. But there’s an app for that although there is no internal GPS or AR for guidance. Security is tight and current badges are required for everyone to pass checkpoints. Soon, it will become more efficient as retinal scans and other security measures are implemented, but implants are evidently not planned.

The industry rep, who has also visited Google’s headquarters, says that NVIDIA’s new building and facilities are nicer(!). Although the building is beyond ultra-modern, there is an emphasis on wood, and below is a beautiful table made of thick redwood slabs. Our lunch was excellent, and NVIDIA employees have a choice of about 7 or 8 ethnic cuisines whose menus are changed weekly, and a choice of two different huge salad bars, one of which was vegan.

Below is as close to NVIDIA’s secrets as we got. The working areas have no private cubicles, so there are other areas in this building which are set aside for privacy and to think without interruption. It looks like a really great place to work.

It’s an amazing building and it is one apparently designed for younger workers as there are a lot of stairs – besides triangles.

The tour was over after our lunch and we said our thank-yous and good-byes to our host. I headed for home on Highway 101 South about 2:30 PM so as to just miss the San Jose rush hour evening traffic, and I arrived home before midnight without incident.

Conclusion

The experience at the GTC is always amazing as it makes one feel part of an ongoing revolution for GPU programming that started just a few years ago. It is NVIDIA’s disruptive revolution to make the GPU “all purpose” and at least as important as the CPU in computing. Over and over, their stated goal is to put the massively parallel processing capabilities of the GPU into the hands of smart people.

This time we believe that GTC 2018 was our best GTC yet, and it gets another solid “A+” from this editor. NVIDIA has made the conference much larger and the schedule way more hectic, yet it still runs very smoothly. Next year, attendees can look forward to another 5-day conference from March 18-22, 2019. And if you cannot wait, several GTCs are now held around the world.

Our hope for GTC 2020 is that NVIDIA will make it more spectacular, as they did with NVISION 08, and open it to the public. It might be time to bring the public awareness of GPU computing to the fore by again highlighting the video gaming side of what NVIDIA’s GPUs can do, as well as diverse projects including the progress made with VR, with deep learning, and in automotive and robotics.

Each attendee will have their own unique experience and memories of this amazing event. We have many gigabytes of untapped raw video plus hundreds of pictures that did not make it into this wrap-up and we still plan to check out key sessions online that we missed. However, we shall continue to reflect back on GTC 2018 until the next year’s GPU Technology Conference.

Happy Gaming!
