A Gamer’s View of GTC 2021: Jensen’s Keynote and Its Major Implications for VR

NVIDIA’s GPU Technology Conference (GTC, held virtually April 12-16) was always this editor’s favorite in-person annual tech event before the COVID-19 pandemic; this editor has attended eight of the nine GTCs since NVISION 08. This year’s virtual GTC 2021 keynote by NVIDIA’s CEO and co-founder, Jen-Hsun Huang (AKA “Jensen”), did not disappoint. Some of the announcements that set the stage for GTC 2021 were among the most exciting of any GTC, with a few bordering on science fiction.

GTC is an important trade event, formerly held at the San Jose Convention Center and now held virtually. Every major automaker, most major labs, and every major technology company are represented, and GeForce gaming is absolutely not the focus. However, some of these announcements have strong implications for gamers, VR, and the future of gaming, and those are what we will focus on. All images, except as noted, are from Jensen’s GTC keynote speech yesterday.

At GTC 2018, Jensen began his keynote by pointing out that photorealistic graphics use ray tracing (RT), which requires the kind of extreme computation used by the film industry. Real-time ray tracing had been the holy grail for GPU scientists for 40 years. An impressive Unreal Engine 4 RTX demo was shown running in real time on a single $68,000 DGX with four Volta GPUs, and it was noted that without deep learning algorithms, real-time ray tracing would be impossible.

Just three years later, NVIDIA can run the same demo on its second-generation GeForce RTX video cards. Amazing progress has been made, and ray tracing has now been adopted by Intel, AMD, Microsoft, and all of the major console platforms. RTX was mentioned and some amazing demos running on current RTX cards were shown, but this time the emphasis was on AI and industry.

That said, NVIDIA did announce eight new Ampere architecture GPUs for next-generation professional laptops, desktops and servers. They include the new NVIDIA RTX A5000 and NVIDIA RTX A4000 desktop GPUs, which feature new RT Cores, Tensor Cores and CUDA cores that speed up AI, graphics and real-time rendering by up to 2x over previous generations.

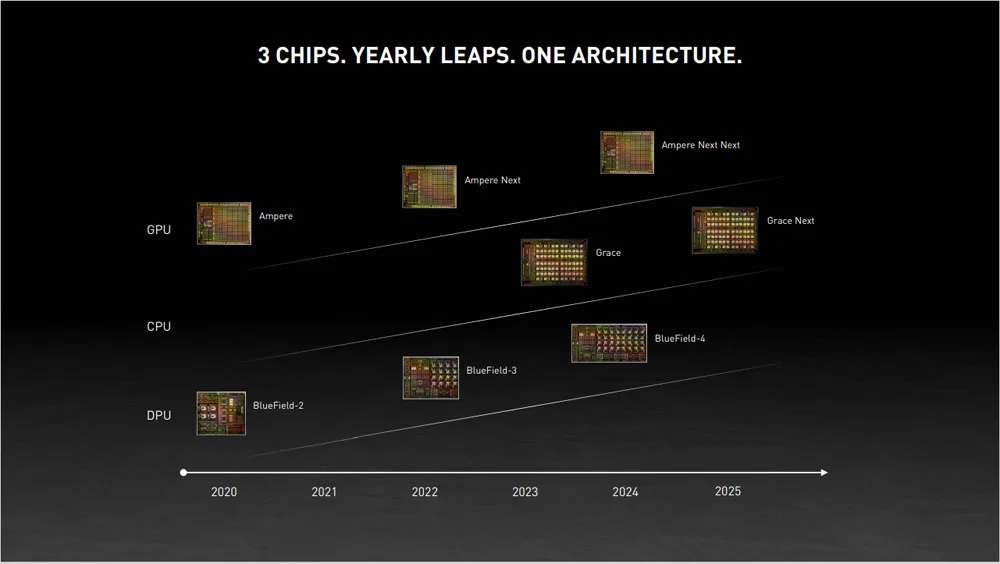

NVIDIA is in the process of acquiring Arm, and it has announced a new CPU that will first be used in servers. The CPU is codenamed “Grace” after the American computer scientist and US Navy Rear Admiral Grace Hopper. NVIDIA has also committed to a two-year release cadence for its major products, with a refresh in between. That suggests we can expect new GeForce RTX cards on the same cycle: a new series every two years, and a refresh in between. There were no codenames given in the roadmap pictured above.

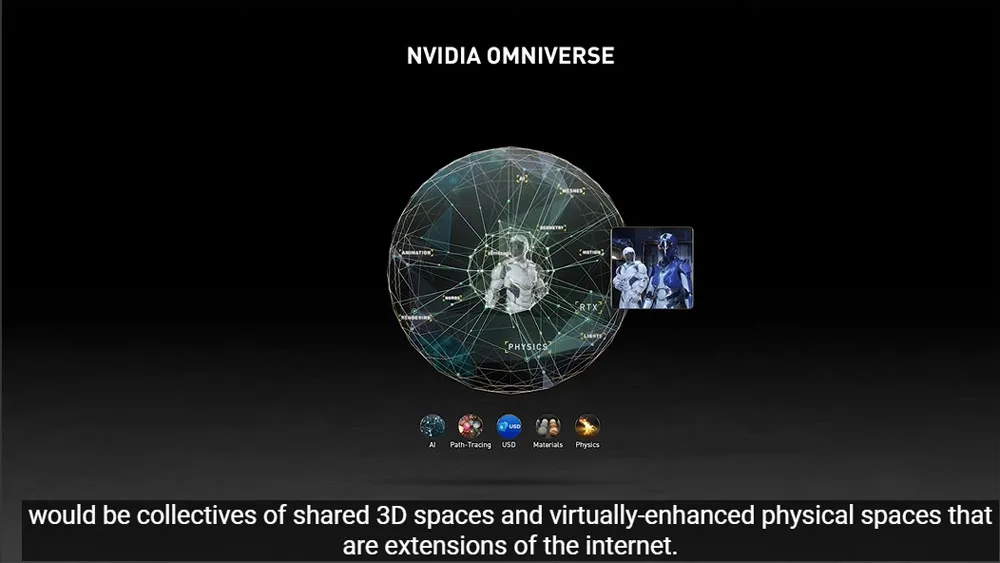

Another major development has grown out of Project Holodeck, which was first announced at GTC 2017. It has evolved into NVIDIA Omniverse, which is primarily used by industry professionals for real-time collaboration in virtual reality (VR) across the Internet. It enables global 3D design teams working across multiple software suites to collaborate in real time in a shared virtual space.

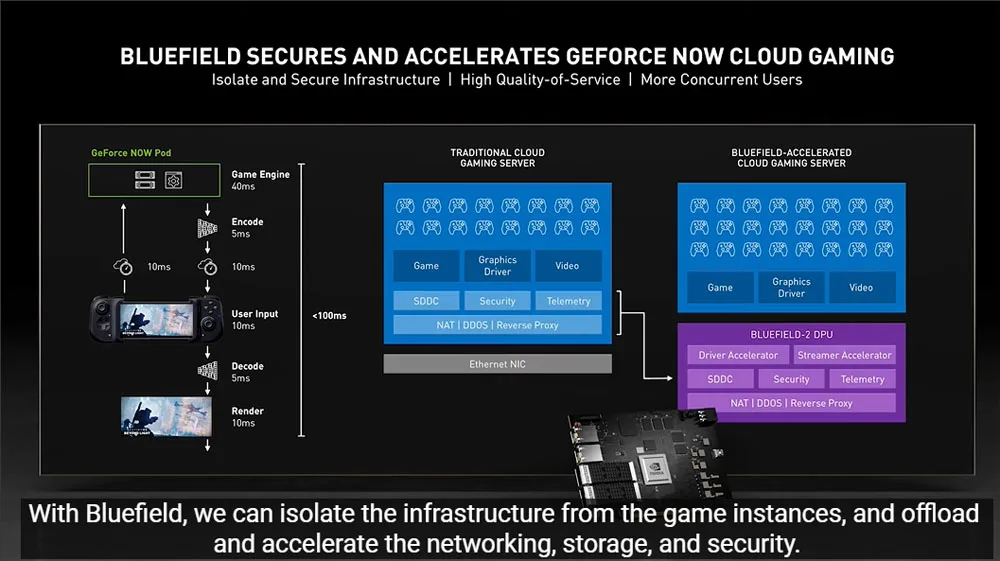

From our interview with NVIDIA earlier this year, we already know that the company is “working to make high fidelity experiences possible untethered, from anywhere, with our CloudXR technology” for VR, no doubt using its advanced servers, BlueField, and 5G.

Another NVIDIA-developed app is NVIDIA Broadcast. To supplement it, Jensen announced a new GPU-accelerated software development kit for NVIDIA Maxine, the cloud-AI video-streaming platform behind the Broadcast app. The SDK allows developers to create real-time, video-based experiences that can be deployed to PCs, in data centers, or in the cloud. Maxine will also support translation into multiple languages. Touchcast, which combines AI and mixed reality to reimagine virtual events, uses Maxine’s super-resolution features to convert 1080p streams into 4K.

Notch creates tools for real-time visual effects and motion graphics at live events. Maxine provides it with real-time, AI-driven face and body tracking along with background removal, so artists can already track and mask performers in a live setting using a standard camera feed instead of expensive hardware-tracking solutions. Think of the applications for creating player avatars in VR or in a traditional online game.

Of course, this is speculative, but we might well ask: how much longer before we see all of this technology combined, applied to VR, and used for an “Oasis” like the one envisioned in “Ready Player One”? That will also depend on a fast, secure Internet made possible by BlueField and GeForce NOW technology coupled with 5G.

GeForce NOW is NVIDIA’s cloud game-streaming service, now available in 70 countries with 10 million members. Many gamers who cannot buy a GPU at a reasonable price because of the dual pandemics (COVID-19 and the cryptocurrency pandemic) are using the service, and it is very good if you live in an area it serves and have a fast Internet connection.

With BlueField 3, it will get even better, and it will no doubt eventually be used to stream VR. Double the Arm core count, double the network speed, and PCIe 5 support will allow the third generation of BlueField hardware to deliver a 5X speedup over the second generation. This lets NVIDIA position BlueField 3 for accelerated computing and AI, freeing traditional x86 servers to handle application processing instead. This has been possible since NVIDIA agreed in 2019 to acquire Mellanox, the developer of the BlueField SoC; BlueField 2 was released late last year.

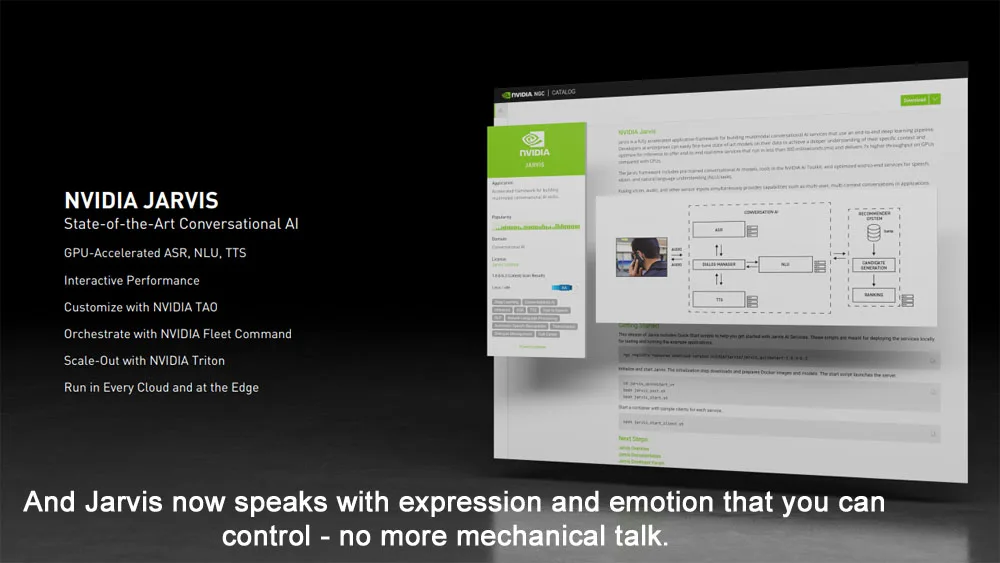

Finally, NVIDIA Jarvis is essentially an advanced chatbot that interacts with business and enterprise customers, and it can currently translate into five languages with better than 90% accuracy out of the box. Think of the time this could save game developers who have to translate their games into multiple languages! And since it can be used in the cloud, future RPGs may let their NPCs literally talk to gamers instead of making them click through multiple-choice responses!

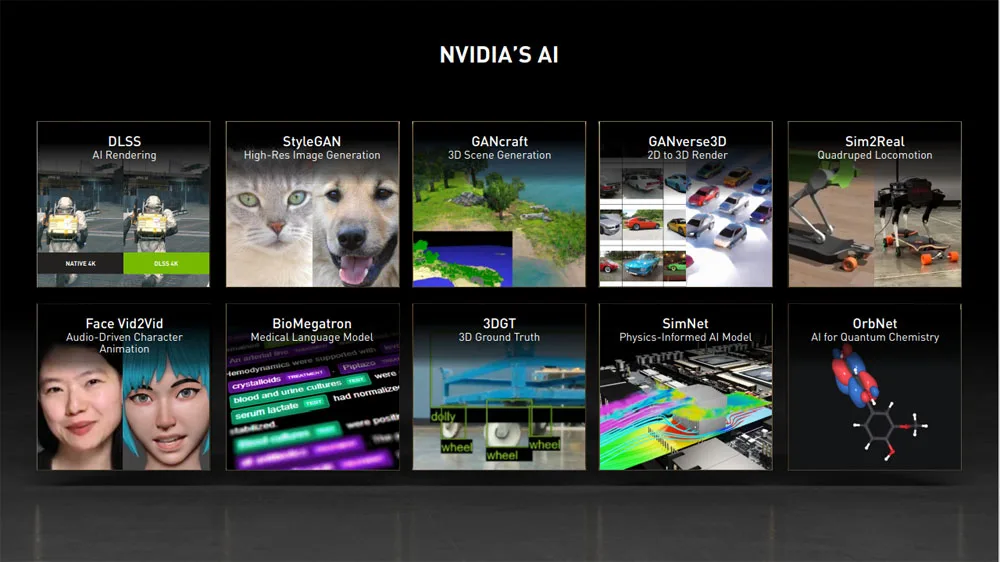

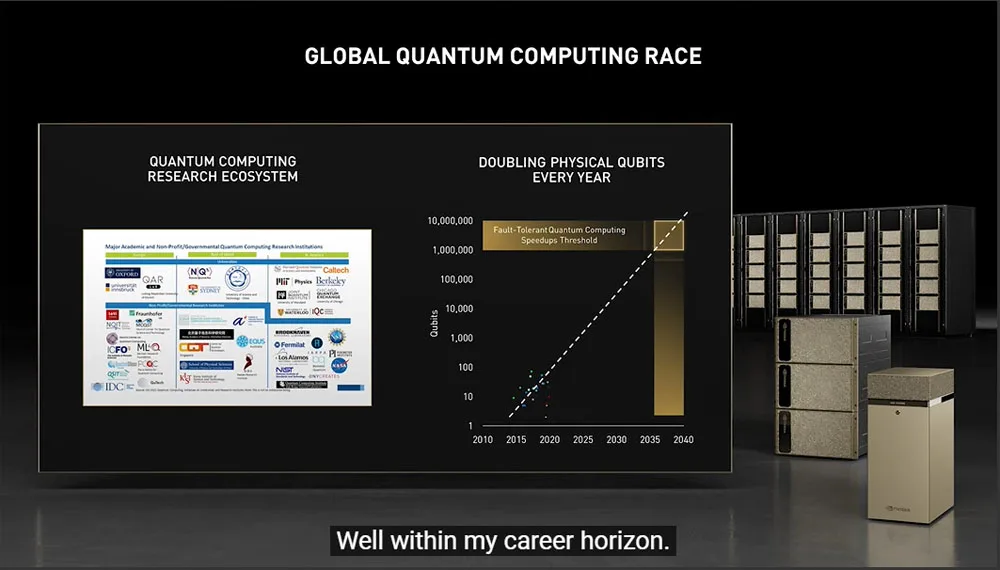

NVIDIA’s AI has already transformed gaming and industry. We are looking forward to seeing it applied to VR in ways that could only be imagined as sci-fi a few years ago. Jensen even predicted that quantum computing would reach its goal by 2035 to 2040, “well within my career horizon”, so don’t expect him to retire before he is 80 years old.

This is just a short overview of Jensen’s keynote, delivered yesterday. You can watch it in full on YouTube.

Exciting times!

Happy gaming and future gaming!