15 Premium VR Oculus Rift benchmarks

BTR benchmarked 10 VR games in late August and concluded that AMD had delivered on its promise for entry-level VR with Polaris, but that the RX Vega 64 needed serious driver improvement before its performance could be called “premium”. Four months later, we have another premium AMD VR card to test – the PowerColor Red Devil RX Vega 56 – along with our liquid-cooled edition of the RX Vega 64, AMD’s top card. This time, we are benchmarking 15 VR games on the Oculus Rift, including Fallout 4 VR, using FCAT VR, pitting the RX Vega 56 and 64 against the GTX 1070 Ti, the GTX 1080, and the GTX 1080 Ti.

We have been playing more than 50 VR games on the Oculus Rift over the last year using midrange and high-end NVIDIA and AMD video cards. After posting our original VR evaluation last January, we benchmarked 6 VR games in our follow-up using FCAT VR, followed by 3 more VR games. We have favorably compared FCAT VR with our own video benchmarks, which use a camera to capture images directly from a Rift HMD lens. For BTR’s VR testing methodology, please refer to this evaluation. Currently, we are benching 15 VR games, and we will continue to expand our VR benchmarking suite for 2018.

We are going to test 15 VR games using the GTX 1080 Ti FE, the GTX 1080 FE, the GTX 1070 Ti FE, a PowerColor Red Devil RX Vega 56, and a Gigabyte RX Vega 64 liquid cooled edition on a Core i7-8700K where all 6 cores turbo to 4.6GHz, an EVGA Z370 FTW motherboard and 16GB of HyperX DDR4 at 3333MHz on Windows 10 64-bit Home Edition. Here are the fifteen VR games we are benchmarking:

- Alice VR

- Batman VR

- Battlezone

- Chronos

- DiRT: Rally

- EVE: Valkyrie

- Fallout 4

- Landfall

- The Mage’s Tale

- Obduction

- Project CARS 2

- Robinson: The Journey

- Serious Sam: The Last Hope

- The Unspoken

- The Vanishing of Ethan Carter

Until FCAT VR was released in March, there was no universally acknowledged way to accurately benchmark the Oculus Rift, as there are no SDK logging tools available. Benchmarking the Rift is further complicated by its use of a type of frame reprojection called Asynchronous Spacewarp (ASW) to keep framerates steady at either 90 FPS or 45 FPS. It is important to be aware of VR performance since poorly delivered frames will make a VR experience quite unpleasant, and the user can even become VR sick.
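To make the 90 FPS and 45 FPS figures concrete, here is a minimal Python sketch of the frame budget arithmetic. It assumes the Rift’s 90 Hz refresh rate and a deliberately simplified model in which ASW drops the application to half rate whenever a frame takes longer than one refresh interval. The function names are ours, for illustration only, and are not part of any Oculus or FCAT VR tool; the real ASW logic is more involved than this.

```python
REFRESH_HZ = 90.0  # Rift refresh rate


def frame_budget_ms(refresh_hz: float = REFRESH_HZ) -> float:
    """Time available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def delivered_fps(render_time_ms: float, refresh_hz: float = REFRESH_HZ) -> float:
    """Simplified ASW model (an assumption, not the actual Oculus algorithm):
    full rate if the frame fits the budget, otherwise half rate with
    every other frame synthesized."""
    if render_time_ms <= frame_budget_ms(refresh_hz):
        return refresh_hz
    return refresh_hz / 2.0


print(round(frame_budget_ms(), 2))  # 11.11 ms per frame at 90 Hz
print(delivered_fps(9.5))           # 90.0
print(delivered_fps(13.0))          # 45.0
```

In other words, a card has roughly 11.1 ms to finish each frame; once it misses that window consistently, the game is delivered at 45 FPS with synthesized frames filling the gaps.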

It is very important to understand how NVIDIA’s VRWorks and AMD’s LiquidVR each work to deliver a premium VR experience, and it is also important to understand how we can accurately benchmark VR as explained here. And before we benchmark our 15 VR games, let’s take a look at our Test Configuration on the next page.

Test Configuration – Hardware

- Intel Core i7-8700K (HyperThreading and Turbo boost are on to 4.6GHz for all 6 cores; DX11 CPU graphics)

- EVGA Z370 FTW motherboard (Intel Z370 chipset, latest BIOS, PCIe 3.0/3.1 specification, CrossFire/SLI 8x+8x), supplied by EVGA

- HyperX 16GB DDR4 (2x8GB, dual channel at 3333MHz), supplied by Kingston

- Oculus Rift, 360 degree set-up with 3 sensors, including Touch Controllers.

- GTX 1080 Ti, reference clocks, supplied by NVIDIA

- GTX 1080, 8GB, Founders Edition, reference clocks, supplied by NVIDIA

- Gigabyte GTX 1070 Ti, 8GB, Founders Edition, reference clocks, supplied by NVIDIA

- PowerColor Red Devil RX Vega 56, 8GB, reference clocks, supplied by PowerColor

- Gigabyte RX Vega 64 Liquid Cooled Edition, 8GB, reference clocks.

- 1.92TB Micron Enterprise class SSD, game storage

- 2 x 240 GB HyperX SSDs for Windows 10, one for AMD and one for NVIDIA, supplied by HyperX

- 2 TB Seagate FireCuda SSHD for game storage

- EVGA 1000G 1000W power supply unit

- EVGA CLC 280 – 280mm CPU watercooler, supplied by EVGA

- Onboard Realtek Audio

- Genius SP-D150 speakers, supplied by Genius

- EVGA DG-77 mid-tower case, supplied by EVGA

- Monoprice Crystal Pro 4K display

- Nikon B700 digital camera

Test Configuration – Software

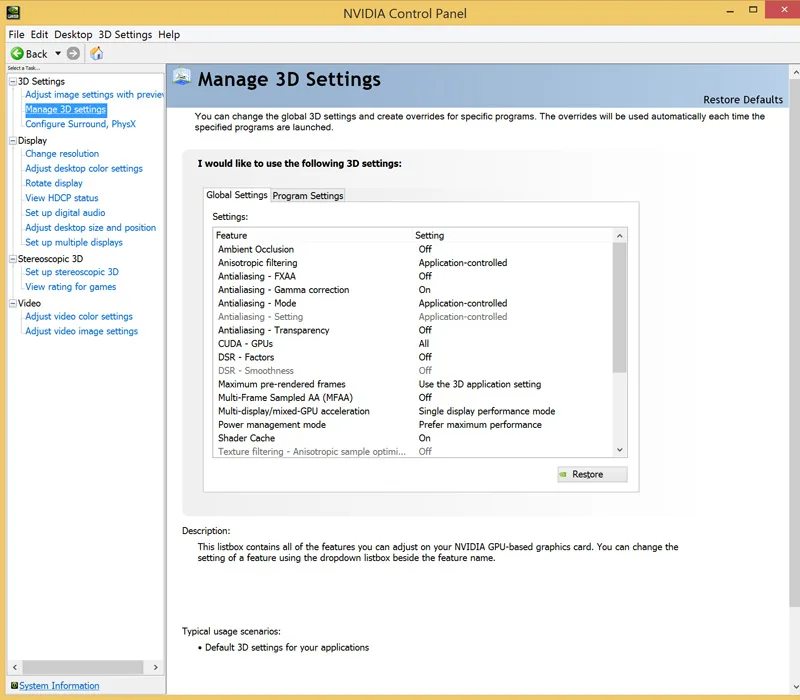

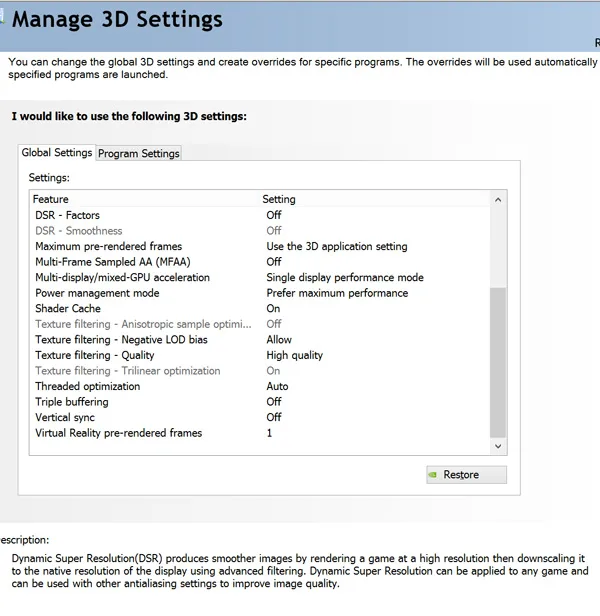

- NVIDIA’s GeForce WHQL 388.71. High Quality, prefer maximum performance, single display.

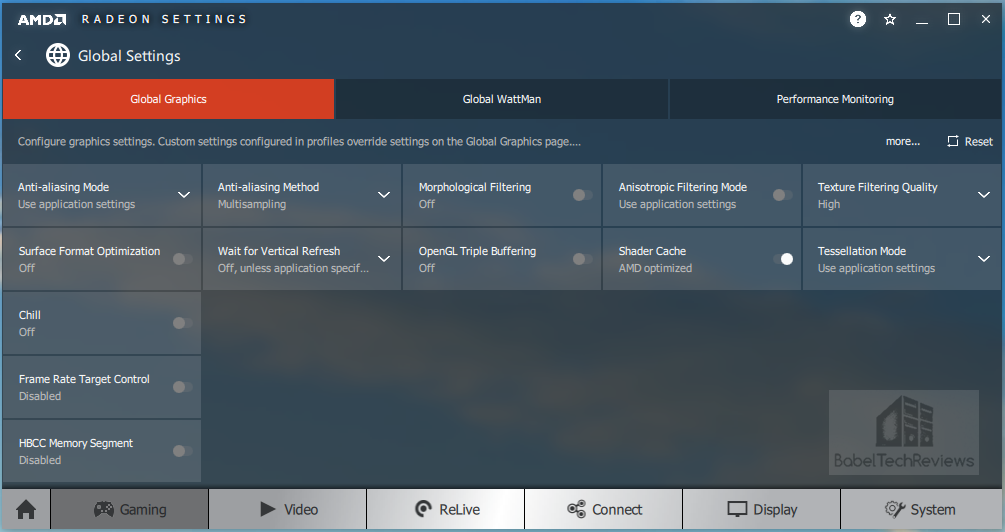

- AMD Adrenalin Software 17.12.2

- FCAT VR benchmarking tools

- Oculus Rift Diagnostic tools

- Highest quality sound (stereo) used in all games.

- Windows 10 64-bit Home edition; all VR hardware was run under Oculus Rift software. Latest DirectX.

- All applications are patched to their latest versions at time of publication.

- WattMan was used for AMD cards.

- MSI’s Afterburner, latest beta, was used for NVIDIA cards.

VR Games & Apps

- Alice VR

- Batman VR

- Battlezone

- Chronos

- DiRT: Rally

- EVE: Valkyrie

- Fallout 4

- Landfall

- The Mage’s Tale

- Obduction

- Project CARS 2

- Robinson: The Journey

- Serious Sam: The Last Hope

- The Unspoken

- The Vanishing of Ethan Carter

NVIDIA Control Panel settings

We used MSI’s Afterburner to set the Power and temp limits to their maximums.

No longer is there an option to set Single Display Performance mode.

AMD Adrenalin Driver Settings

Here are the Global settings that we use in the Radeon Control Panel for the RX Vegas:

Power Limit/Temperature/Fan targets are set to automatic maximum by WattMan.

Let’s look individually at the games that we benchmark, starting with Alice VR, Batman VR, Battlezone and Chronos.

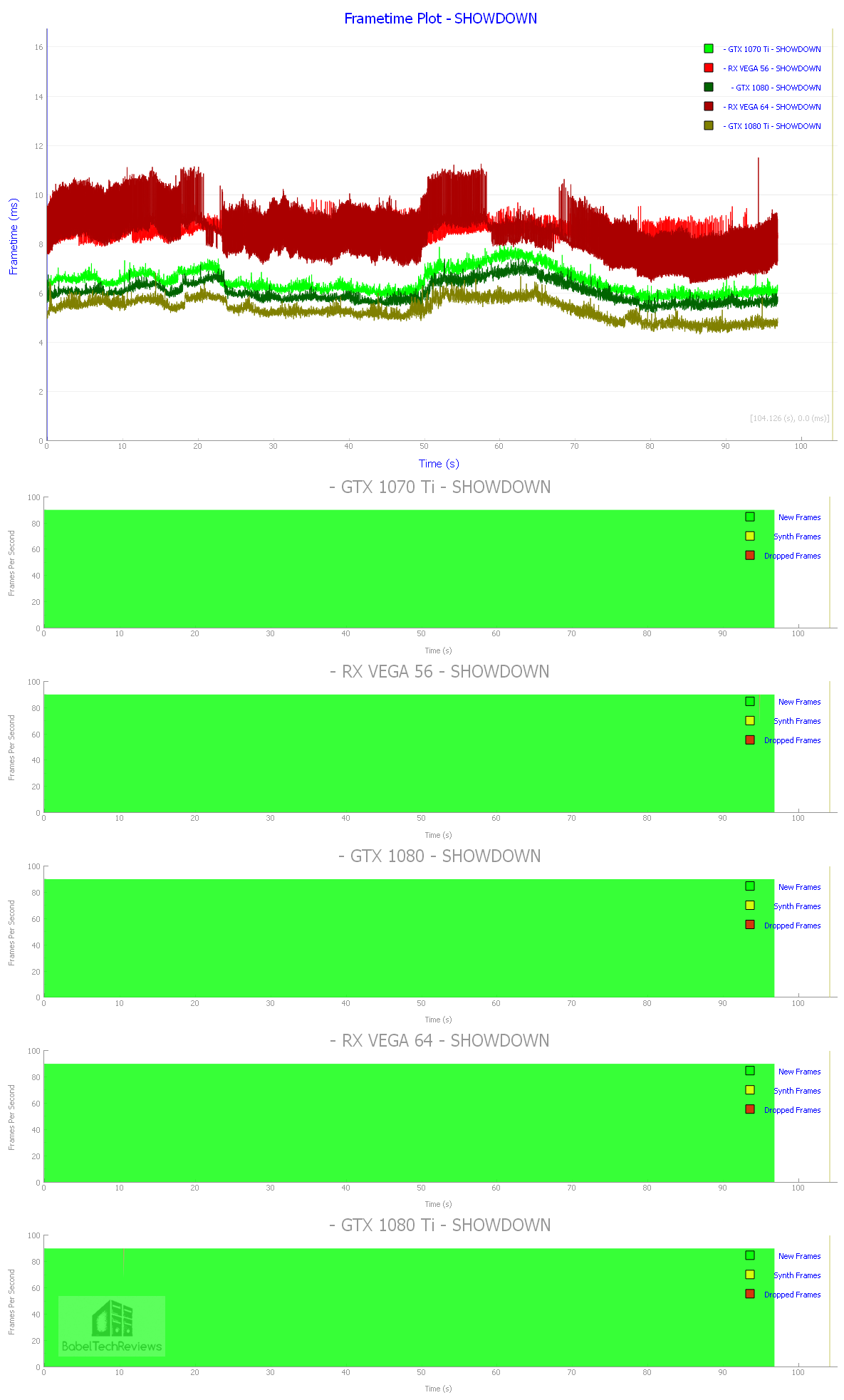

Showdown

Showdown is not a game, but rather a 2014 Unreal Engine 4 demo by Epic that was the genesis for BulletTrain and finally Robo Recall, one of this editor’s favorite VR games. Unfortunately, Robo Recall is not stable with our beta build of FCAT VR, so we will include it in our next VR review. This demo was incredibly popular at trade shows and is still used for demonstrating what VR can do as there are a lot of effects and interactivity. However, this demo is no longer considered demanding as some of the particle effects appear to be 2D sprites instead of actual 3D geometries, and there are no shadow casting lights (i.e. lighting is “faked”) even though the anti-aliasing is at 4xMSAA. It has recently been patched to improve the lighting but it is no more demanding than previously.

Although Showdown may be trivial for even a GPU like GTX 1060 or an RX 580 now, it will still show differences in the amount of performance headroom that each GPU has. Here is the professionally captured demo of Showdown in VR as uploaded by Epic.

Here is our own video capture of Showdown through the lens of the Rift using a GTX 1080 Ti:

Here are our frametime and interval plots:

As expected, all of the cards breeze through the Showdown demo. Let’s check out our first VR game, Alice VR.

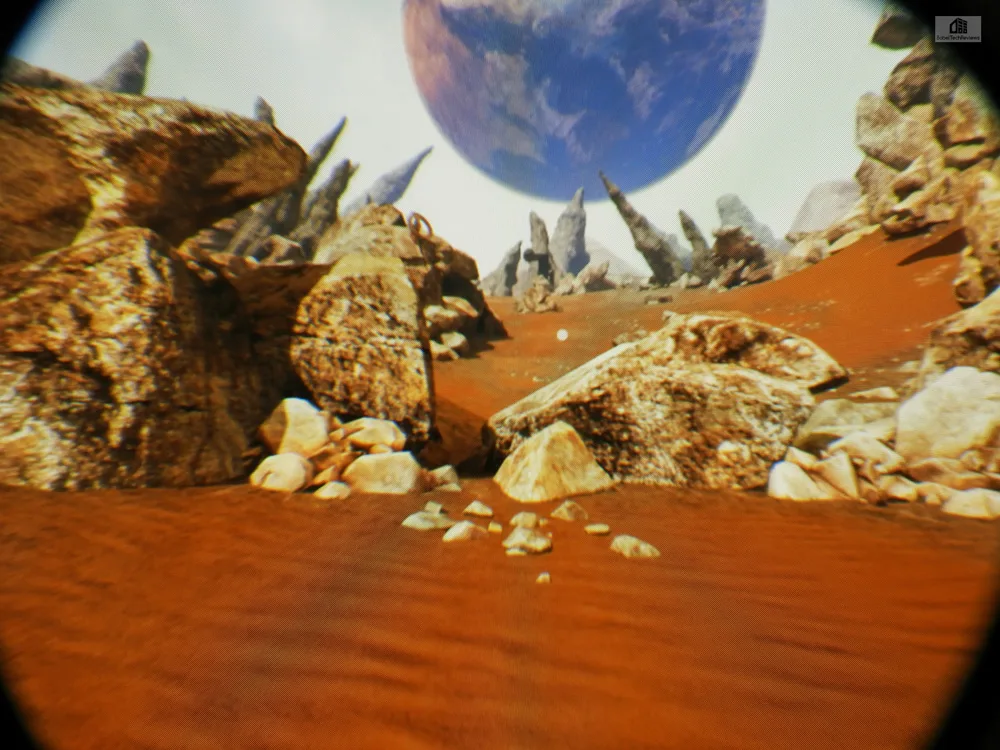

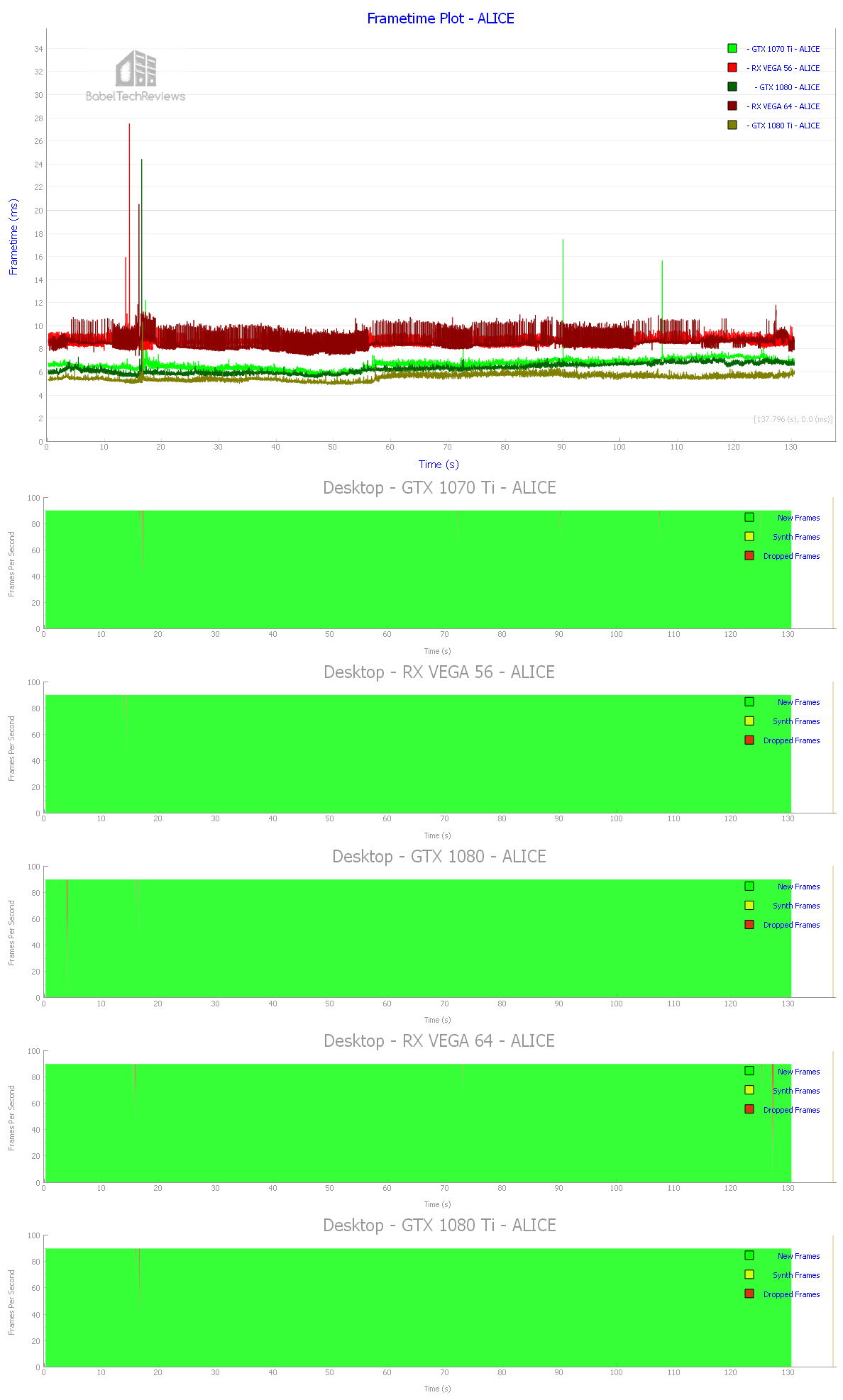

Alice VR

Alice VR is a science fiction space adventure game that is very loosely based on Lewis Carroll’s “Alice in Wonderland”. You are awakened from cryo-sleep and directed by your ship’s AI to fix some malfunctions that require going to a nearby planet’s surface to acquire supplies. It’s a short game of perhaps 5 or 6 hours that has simple puzzles, and it might be worth playing except for the awful way that locomotion is implemented.

Although Alice VR uses the Unreal 4 engine, the outdoor graphics are weak and the interactivity is poor. Even when you get to drive a vehicle on a planet’s surface, the ground is mostly flat and the rocks you drive over only occasionally cause you to feel like you are really driving. The indoor environments are considerably better but the game is at best, average.

Here is the FCAT generated chart for Alice VR with all graphics settings set to their highest. We’d recommend increasing the pixel density to improve the overall visuals as there is plenty of performance headroom for all 5 cards we tested. Here are the frametime and interval plots:

All of the cards have plenty of performance headroom to maintain a steady 90 FPS. There are some occasional dropped frames, but they don’t affect the gameplay. It’s pretty clear that Alice VR is not demanding at all. Let’s look at Batman VR next.

Batman Arkham VR

Batman Arkham VR is an unusual game that immerses you into Batman’s world but doesn’t really involve fighting. It is a beautiful-looking game with a lot of interactivity and an emphasis on “detective work” and puzzle solving. It’s short, but it really shows one what VR is capable of right now on the Unreal Engine.

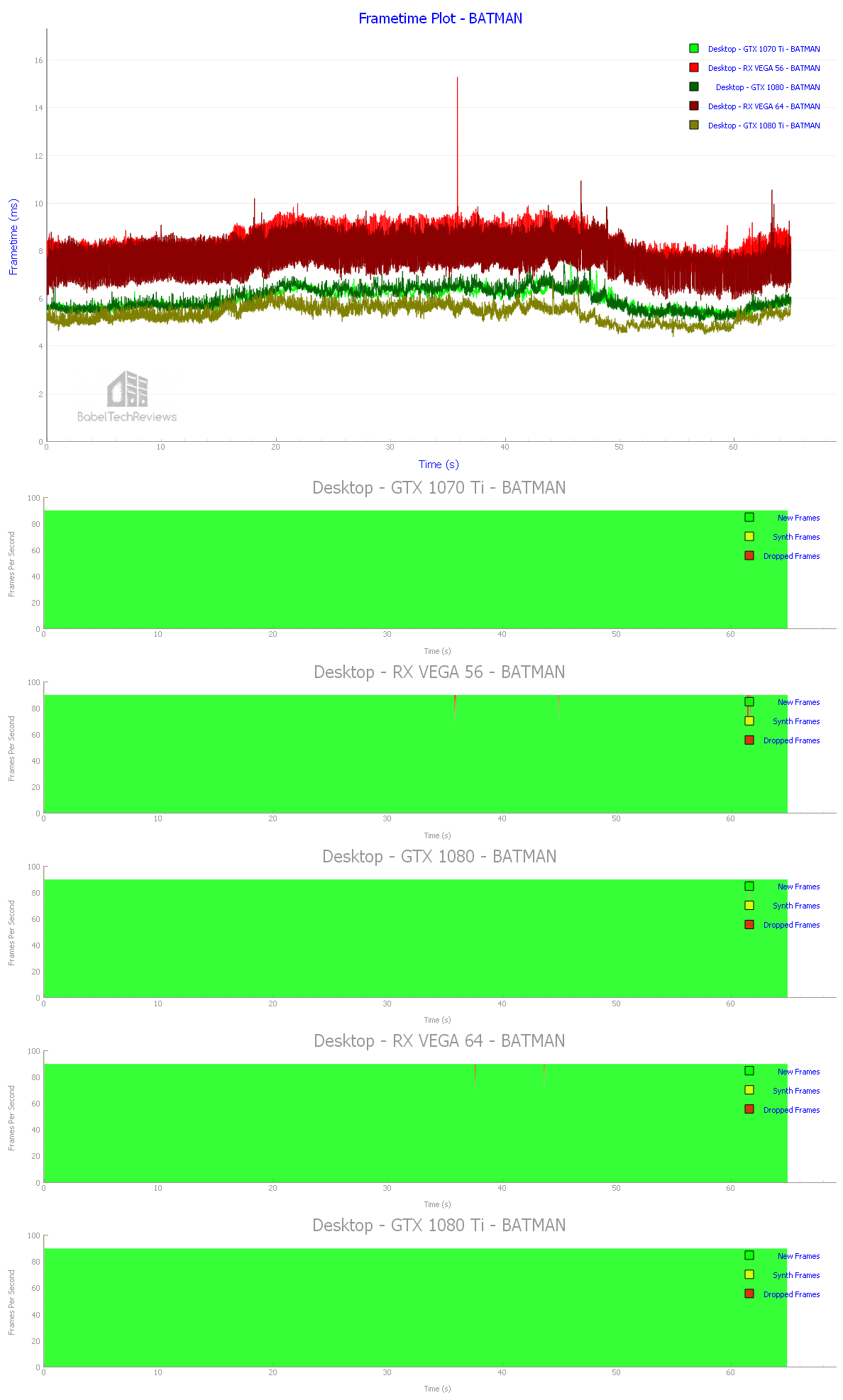

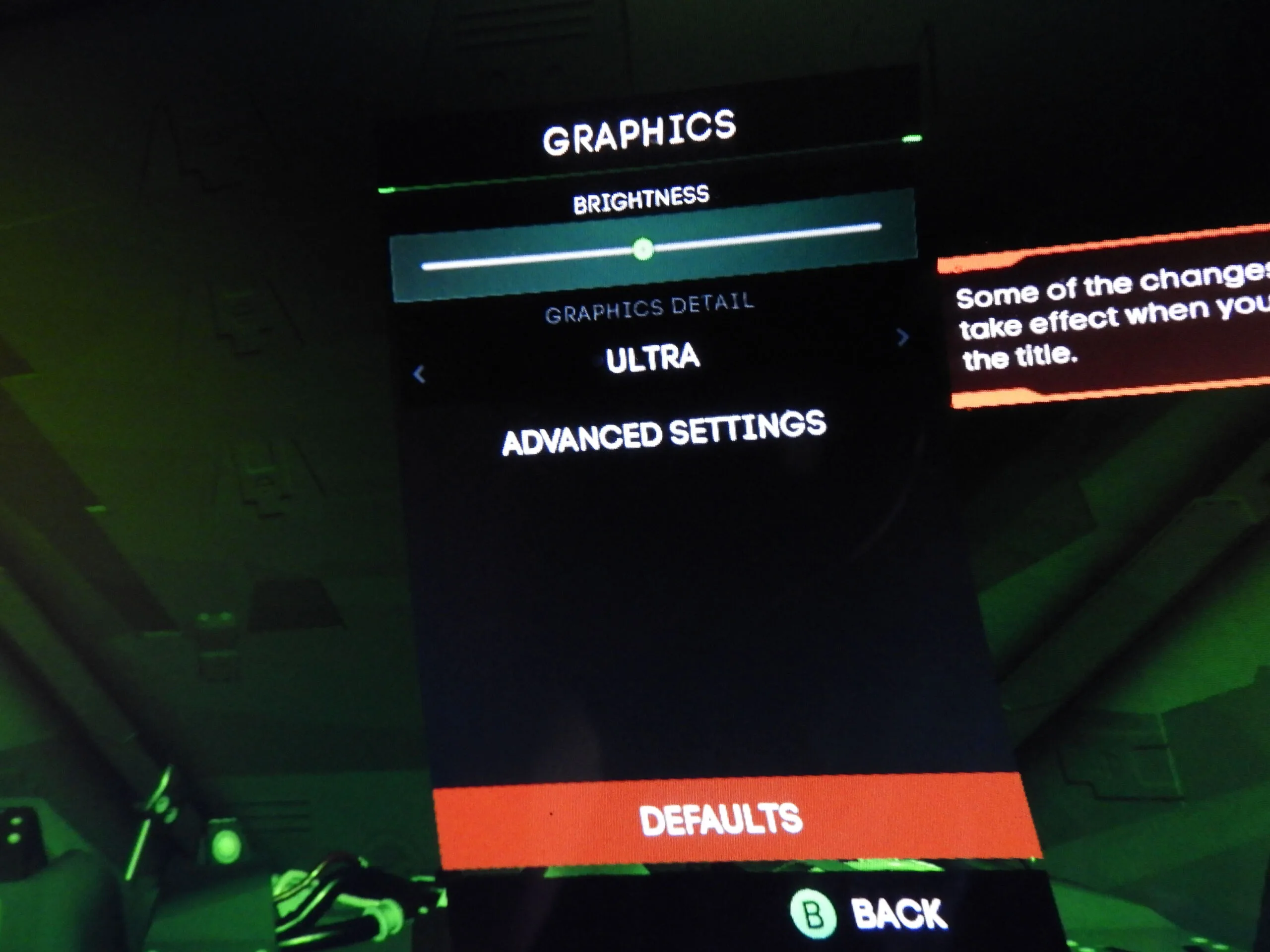

Batman Arkham VR has multiple settings, but we benchmark at the stock full resolution with 100% pixel density.

Here is the performance across our 5 cards from the FCAT generated charts at 100% Pixel Density.

The frames are generally delivered well with the exception of a couple of stutters. All five of our test cards can play at the highest settings, but even with 100% pixel density, the scenes are slightly blurred and there is a minor screendoor effect visible. The holy grail of VR image quality (IQ) would be to increase the Pixel Density.

Let’s look at Battlezone next.

Battlezone

Battlezone is a reboot and VR reimagining of an arcade classic that we remember well. The VR game features the Cobra which is the most powerful tank ever developed. As the player, you experience first-person VR combat across a neon-lighted science fiction inspired landscape in solo mode or online with 1-4 players. The landscape is procedurally generated so each campaign is slightly different from the other.

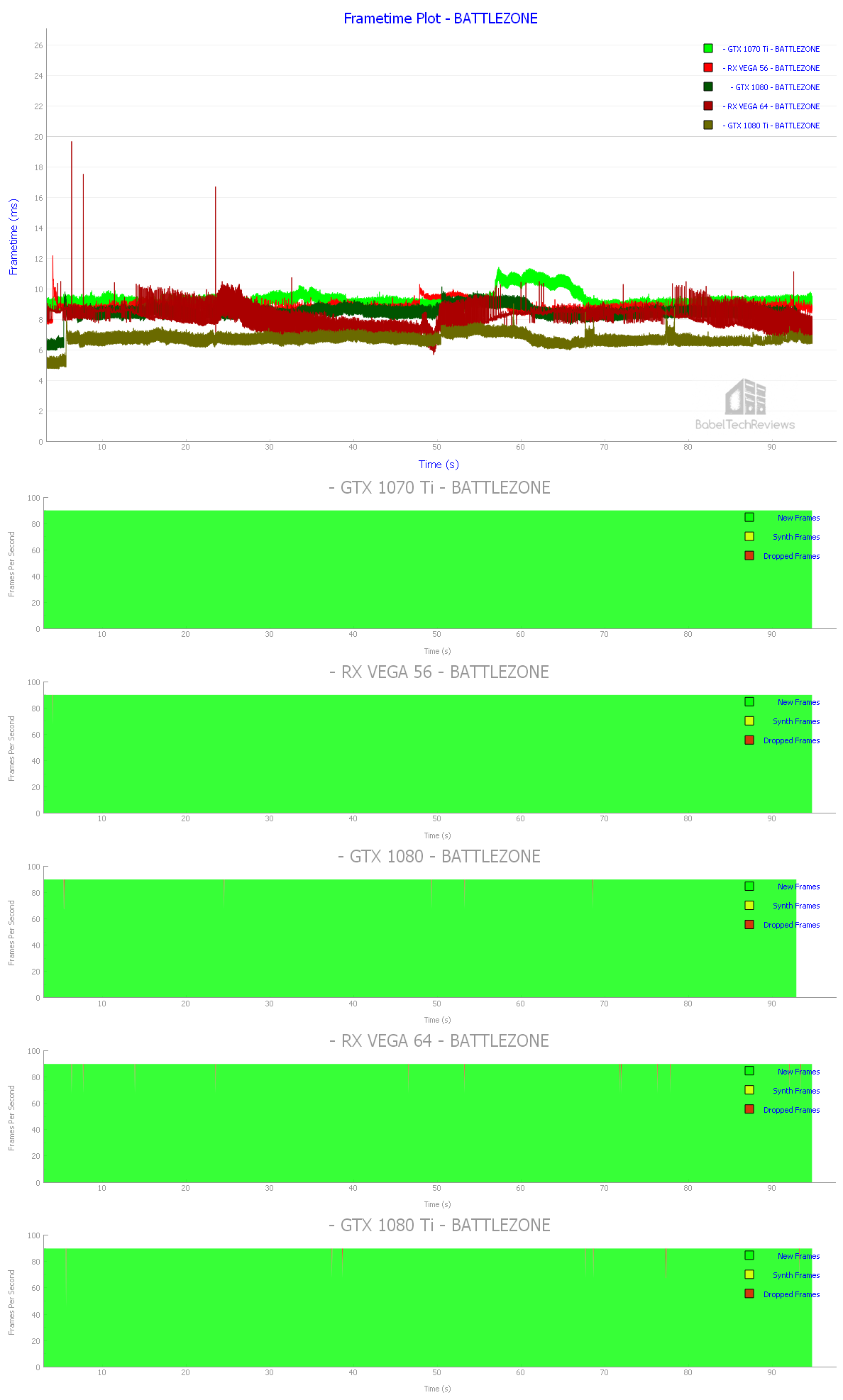

Originally, we got a DX12 error on launch for many weeks, but the game has since been patched. It is a lot of fun, but multiplayer is pretty sparse and it may take a while to get an online match depending on the time of day. We use default Ultra settings for all of our cards and runs.

It is not a very demanding game, and even mid-range video cards should be able to deliver at least 90 FPS. Here is the frametime chart and interval plots of our five competing cards.

All of our cards deliver a satisfactory premium VR experience above 90 FPS with few frame drops that don’t really affect the gameplay. However, Battlezone is our first of three games where the Vegas edge the corresponding GTXes although the GTX 1080 Ti is the fastest card by a significant margin.

Let’s look at Chronos next, which has become a Rift classic.

Chronos

Chronos is an exclusive Rift launch title with graphics options that are good for GPU testing. It is an amazing RPG for the Rift and it is also very hard. It is about 15 hours long, has many difficult puzzles to solve, and requires a player to be very good in combat – especially with defense and attack timing. It uses the Xbox One controller although the Touch controllers can also be used, and since it has a 3rd person view, there is little chance of getting VR sick unless you push the settings too high. Chronos is an excellent VR game.

There are 4 settings and we picked Epic.

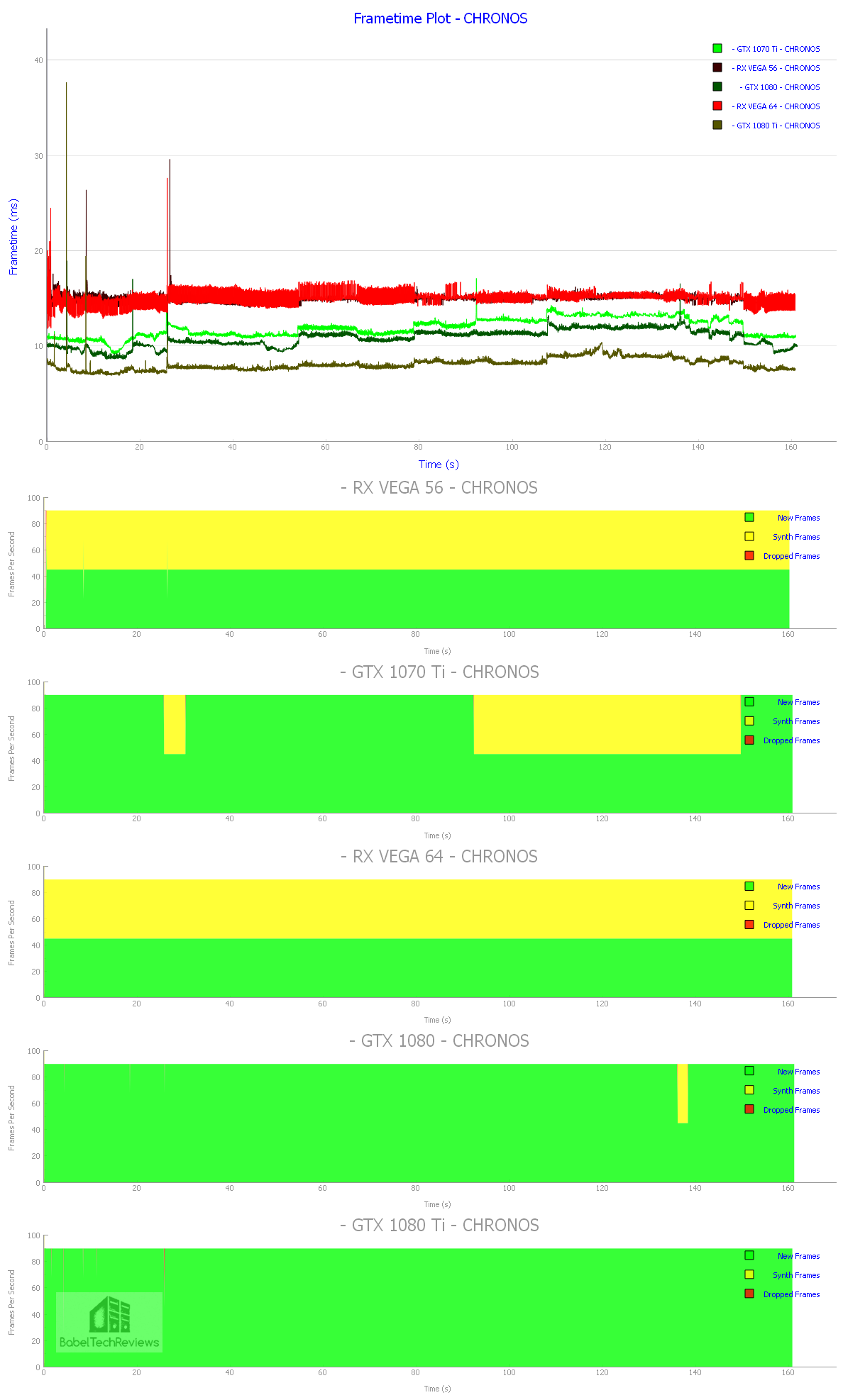

Here are the FCAT generated charts of Chronos with Epic settings:

The GTX 1080 Ti and the GTX 1080 can both play Chronos on Epic without needing to drop to 45 FPS with ASW reprojection like the rest of the cards. When you are fighting, you really want 90 FPS without any ASW or you may encounter some artifacting. However, the RX Vegas and the GTX 1070 Ti cannot play on Epic very well for any length of time as half of the frames are ASW simulated. For those cards, we’d recommend dropping settings to High or lower as needed.

Let’s check out DiRT: Rally, EVE: Valkyrie, Fallout 4 and Landfall next.

DiRT: Rally

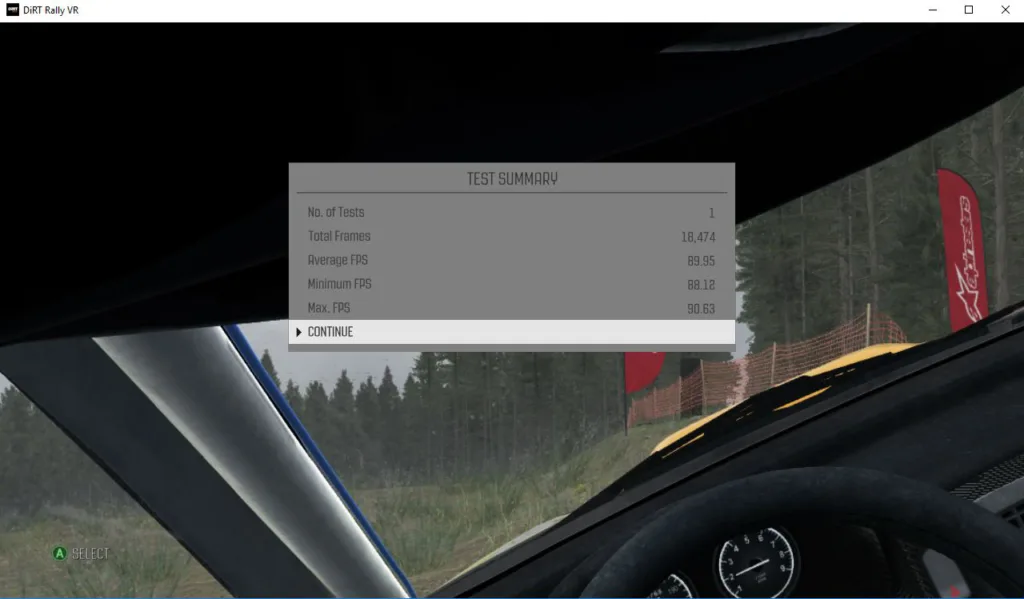

DiRT: Rally has a built-in benchmark that is 100% repeatable. It is an excellent VR driving game that is both difficult and demanding. If the framerate drops below 90 FPS, the Test Summary of the built-in benchmark accurately shows the minimum as 45 FPS (with ASW reprojection). Unfortunately, it does not display unconstrained FPS, but you can check out our Unconstrained Framerate chart in the conclusion.

DiRT: Rally is a very fun and demanding game for either PC gaming or for VR that requires the player to really learn the challenging road conditions. Best of all, DiRT: Rally has a lot of settings that can be customized, although we benchmarked only the “Ultra” preset.

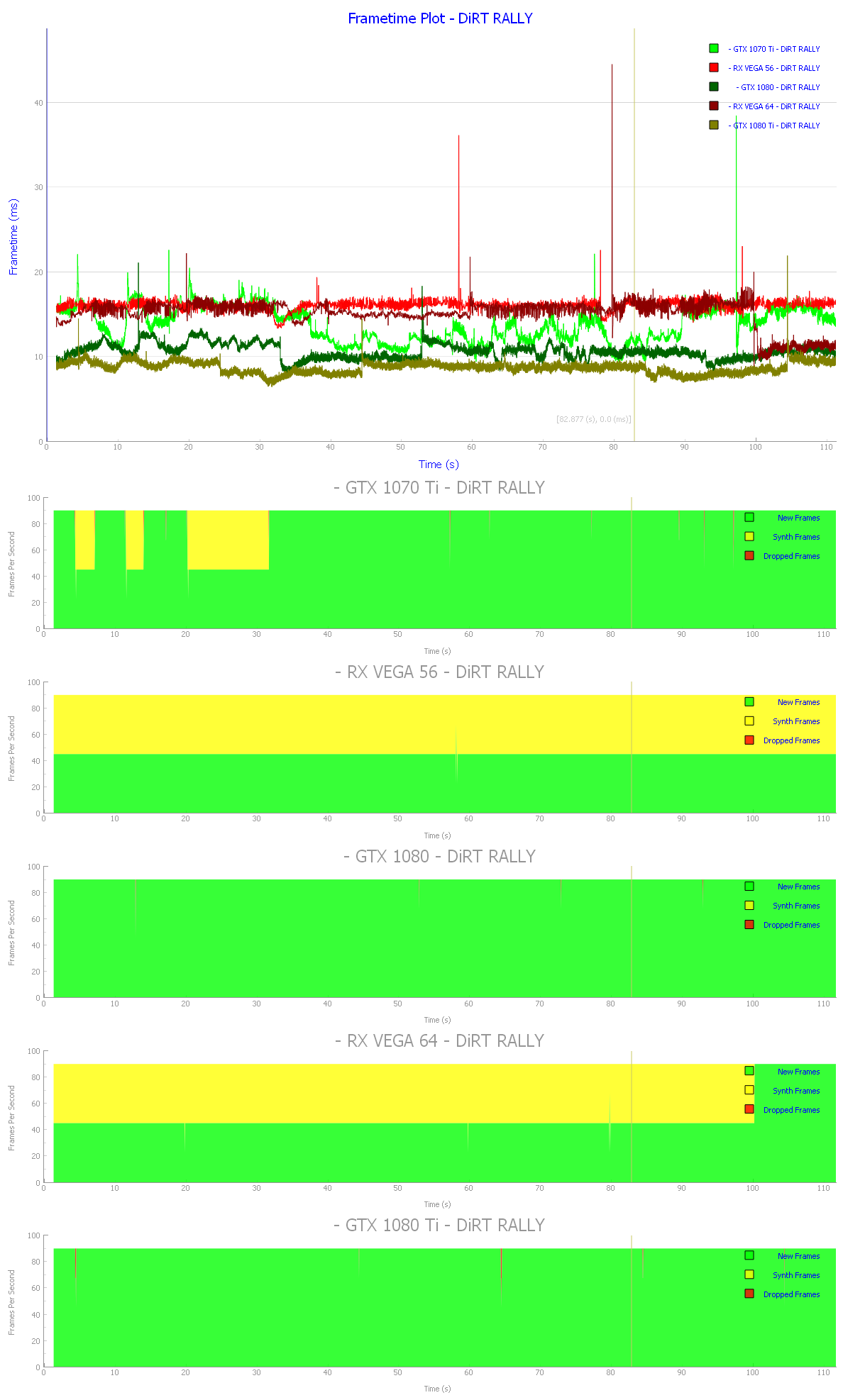

Here is the DiRT: Rally benchmark run by our 5 test cards at Ultra settings.

Just like with Chronos, only the GTX 1080 and the GTX 1080 Ti can play on ultra settings. The other 3 cards cannot maintain 90 FPS and detail settings should be lowered for the best VR experience.

Let’s look at EVE: Valkyrie performance next.

EVE: Valkyrie

EVE Valkyrie is set in space and there is often a lot of empty space outside of fighting and exploration. Unfortunately, it is also hard to find a repeatable benchmark outside of the training experiences. You play as a cloned pilot who is resurrected and is now back to work fighting enemies. You get to experience barrel rolls, loops, and shooting in zero gravity. There is plenty of feedback from the Xbox controller but VR beginners need to beware because of the VR-induced stomach churning some of the maneuvers cause.

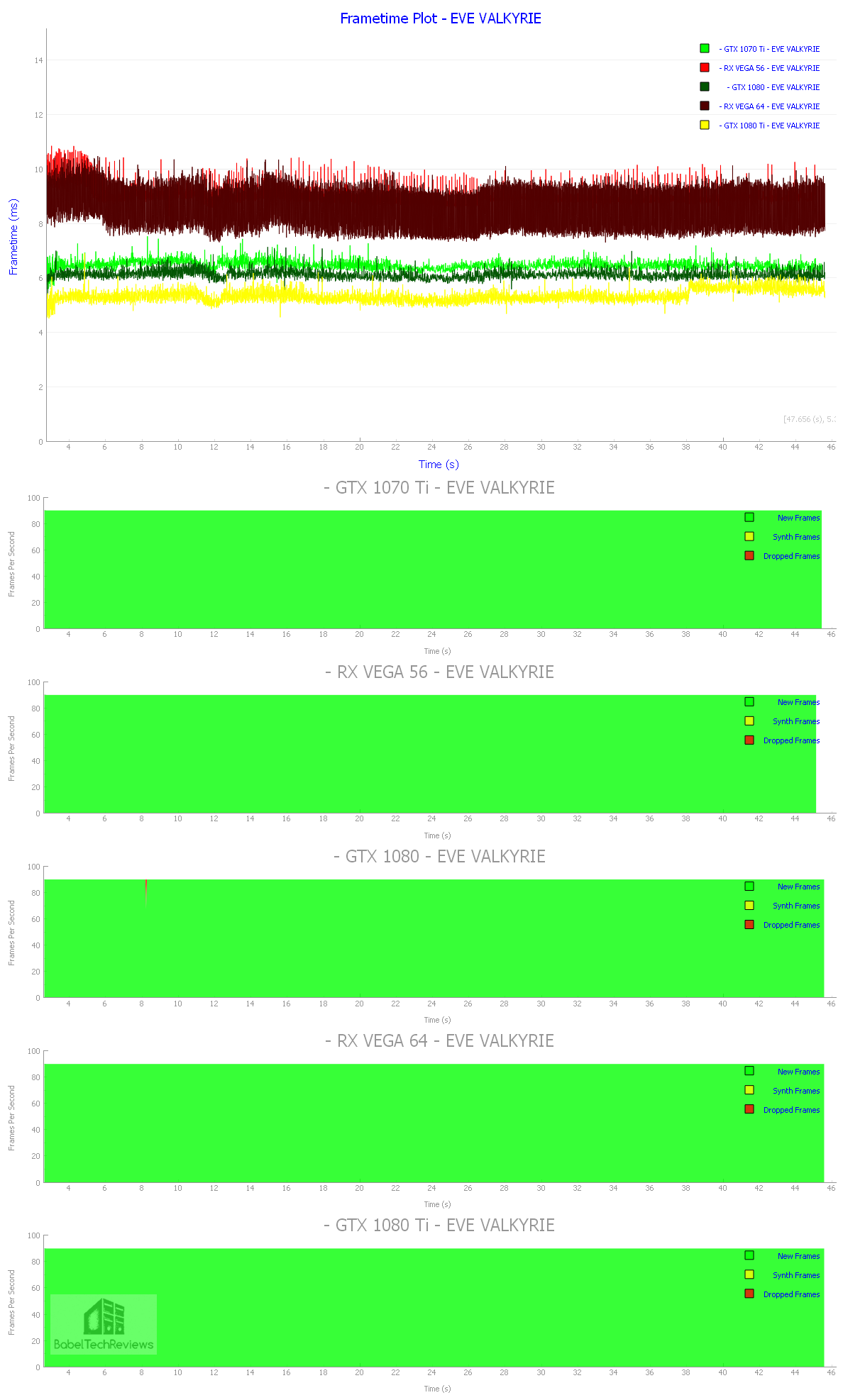

Although the graphics are very good and were recently improved by a large patch, all five cards can play EVE: Valkyrie on Ultra settings as shown by the frametime graph.

There is sufficient performance headroom for all cards and the player can easily increase the Pixel Density for improved visuals.

Let’s look at Fallout 4 VR next.

Fallout 4 VR

Fallout 4 VR is a Vive exclusive that was released last month by Bethesda. Don’t expect it to be officially released for the Rift anytime soon, as Bethesda’s parent company Zenimax has been embroiled in legal disputes with Oculus/Facebook. However, it can be played on the Rift if you are willing to put some (read: a lot of) work into it. One of the biggest issues is navigating the Pip Boy’s menus easily, and using a gamepad in addition to the Touch controllers tends to break immersion.

Fallout 4 VR is not a great implementation of VR. There are mods for DOOM 3 and for Skyrim that are more impressive. However, it is a really full length game, and Fallout 4 is a classic on the desktop. Playing in VR adds depth and immersion that is not possible in the PC game, and a Rift player may want to pick it up – especially after it has been patched and modded more.

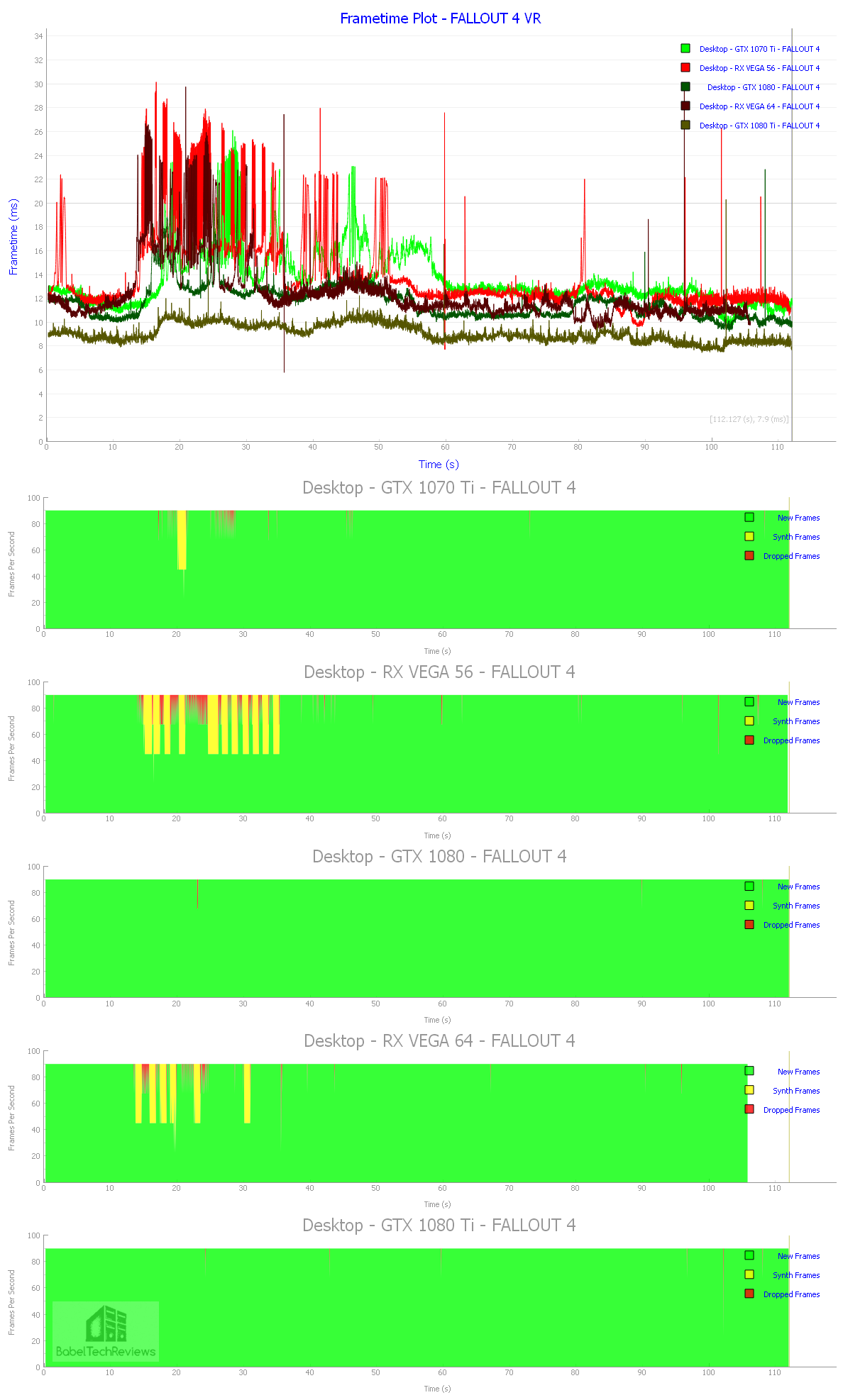

Here are our Frametime Plots and interval plots for our 5 competing cards.

The GTX 1080 and the GTX 1080 Ti are the only two cards to manage this game without reprojecting frames. We are hoping that further patching and optimization will improve performance and we are looking forward to playing this game (easily) on the Rift when the modders fix the controls.

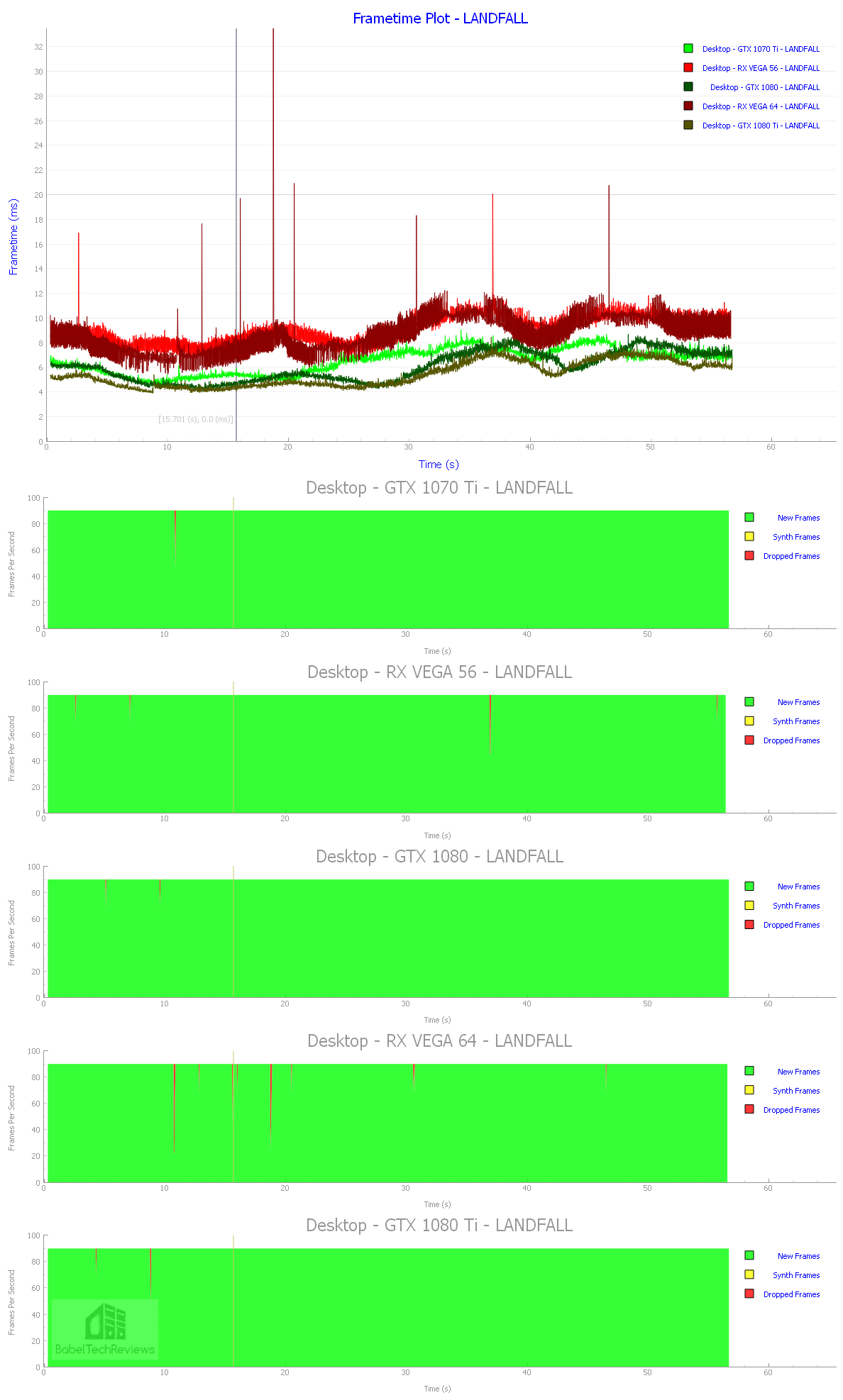

Let’s check out Landfall next.

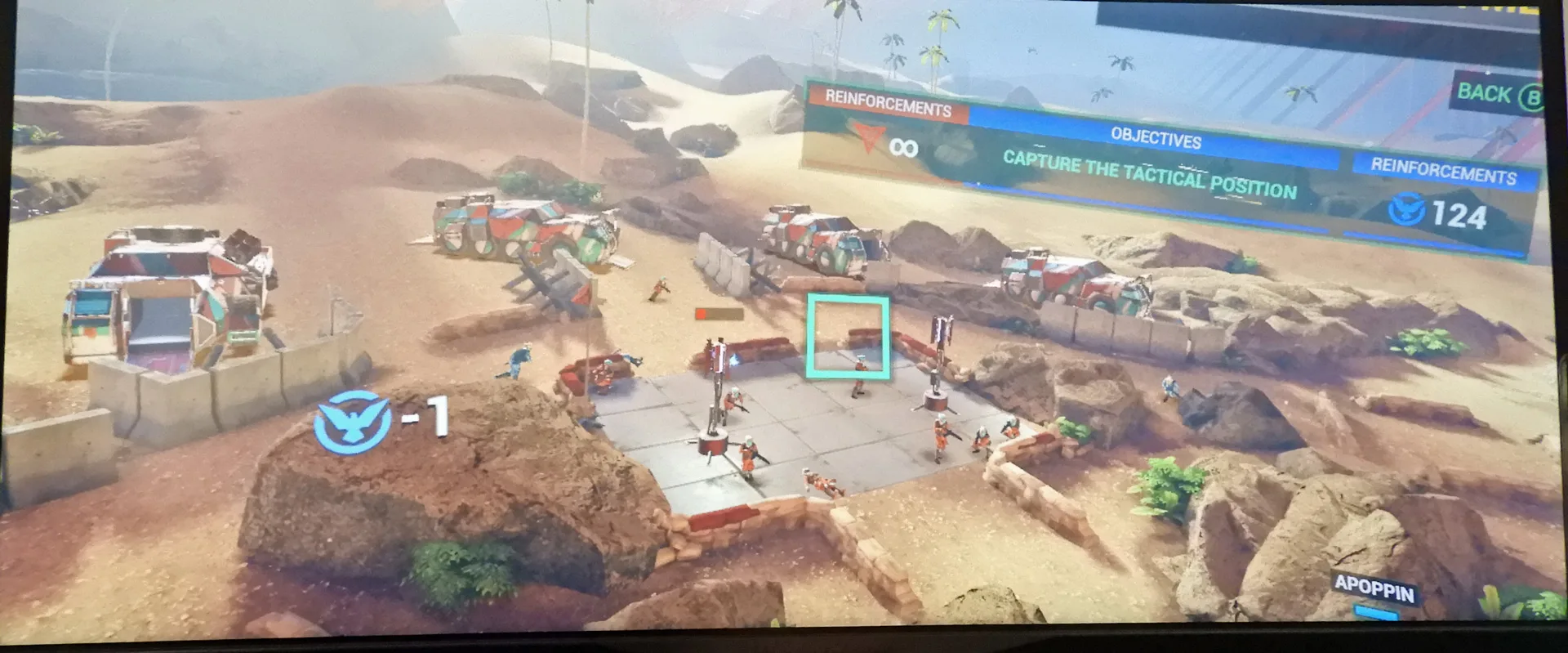

Landfall

Landfall is an interesting game which is basically a top-down 3rd person view tactical shooter with a panoramic view of the changing battlefields. Your objective is to lead a squad of soldiers in capturing objectives. When you are actually fighting, the view will switch to a first person view. There is a single player campaign as well as co-op with a friend for 1v1 or 2v2 action. The usual weapons unlocks and ability loadouts make for some strategy elements also, but the ability to shoot using both sticks with your controller from cover makes for a really enjoyable game.

Landfall is another game that is well-suited for midrange video cards with all settings completely maxed-out. Higher performance cards can easily handle increasing the pixel density for a sharper image. Here are the performance results of our 5 video cards.

There are some dropped frames but nothing significantly affecting gameplay.

Let’s check out The Mage’s Tale next.

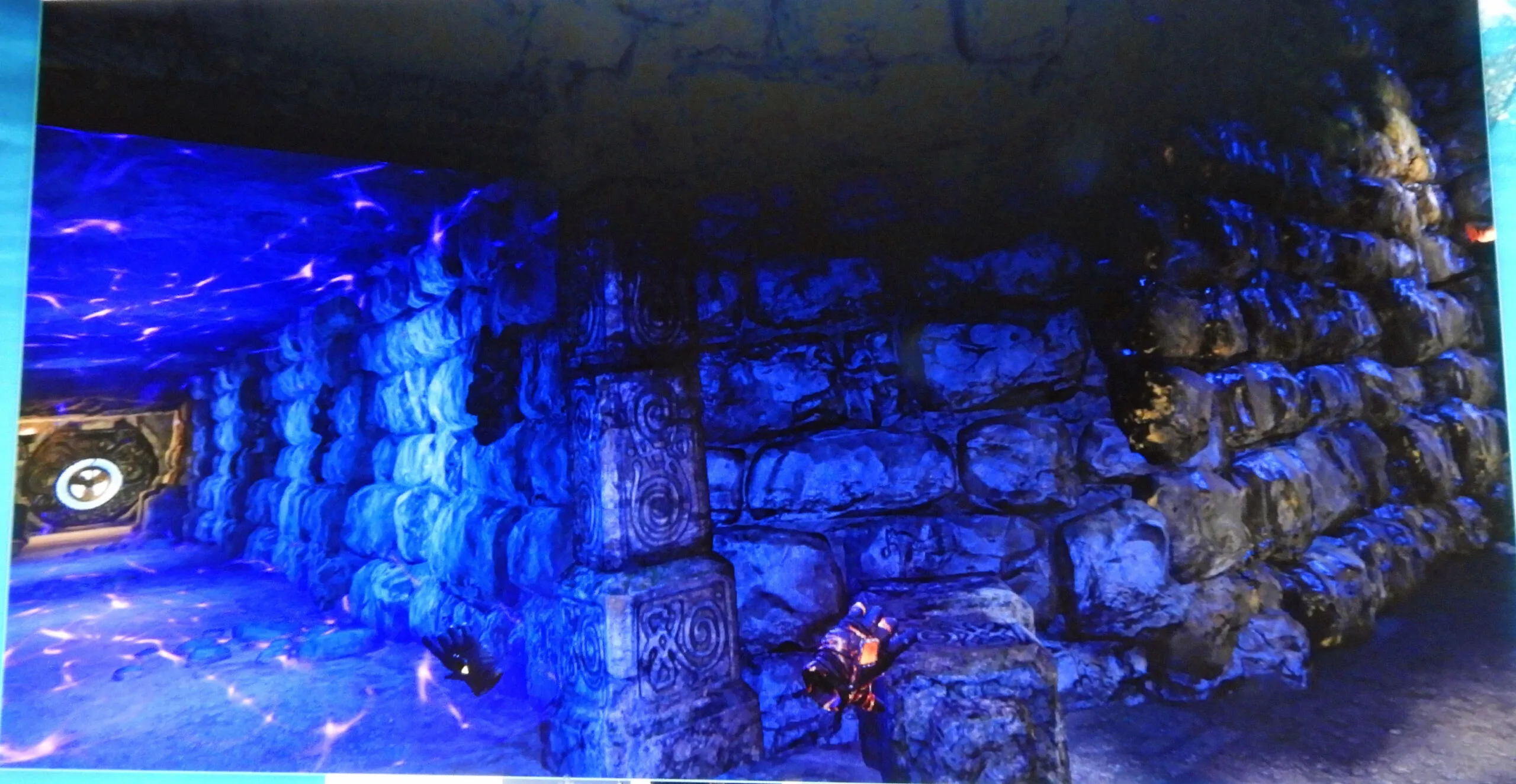

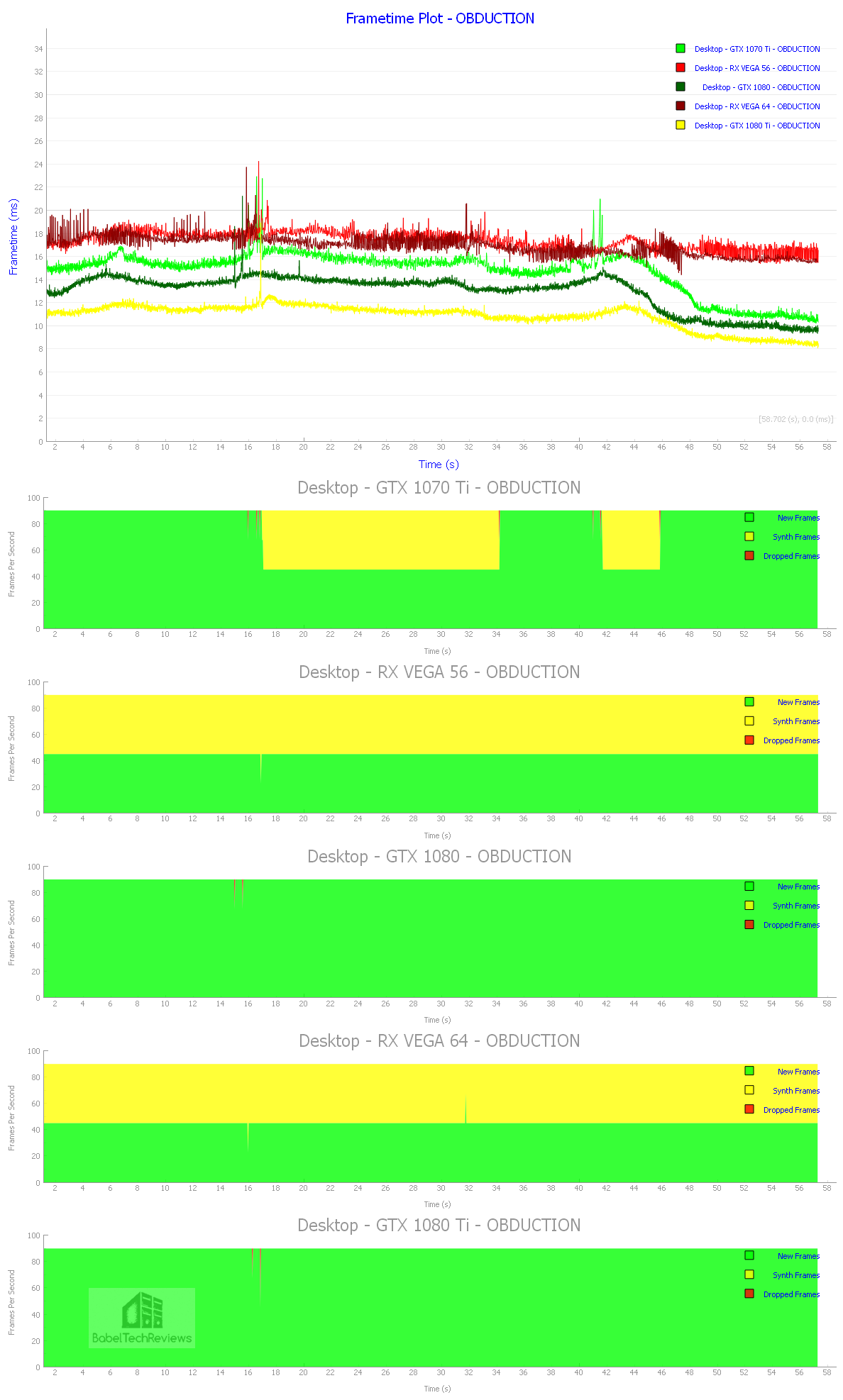

The Mage’s Tale

The Mage’s Tale is a lot of fun and the visuals are excellent. It is a VR RPG dungeon crawler with enough camp to make it really enjoyable. It is a story-driven title where the player is a mage’s apprentice who is on a quest to save his kidnapped master. It is a puzzler with tons of hidden objects and interactions. For example, very early in the game, you can cast fireballs from your fingertips, and if you stand at the edge of an abyss and fire downward (for no reason), you will summon a dragon briefly. It’s a lot of fun discovering the many hidden secrets of this game, and you will also have to use your shield and magic weapons to fight the creatures and monsters you will encounter.

We left the game on default settings, but we could have increased the pixel density to sharpen the scene and limit its screendoor effect. The game delivered well on our 5 cards but there were occasional hitches. Here are the frametime and interval plots.

Although there are dropped frames, the performance is very good and we would recommend increasing the pixel density as appropriate for your graphics card.

Let’s look at Obduction next.

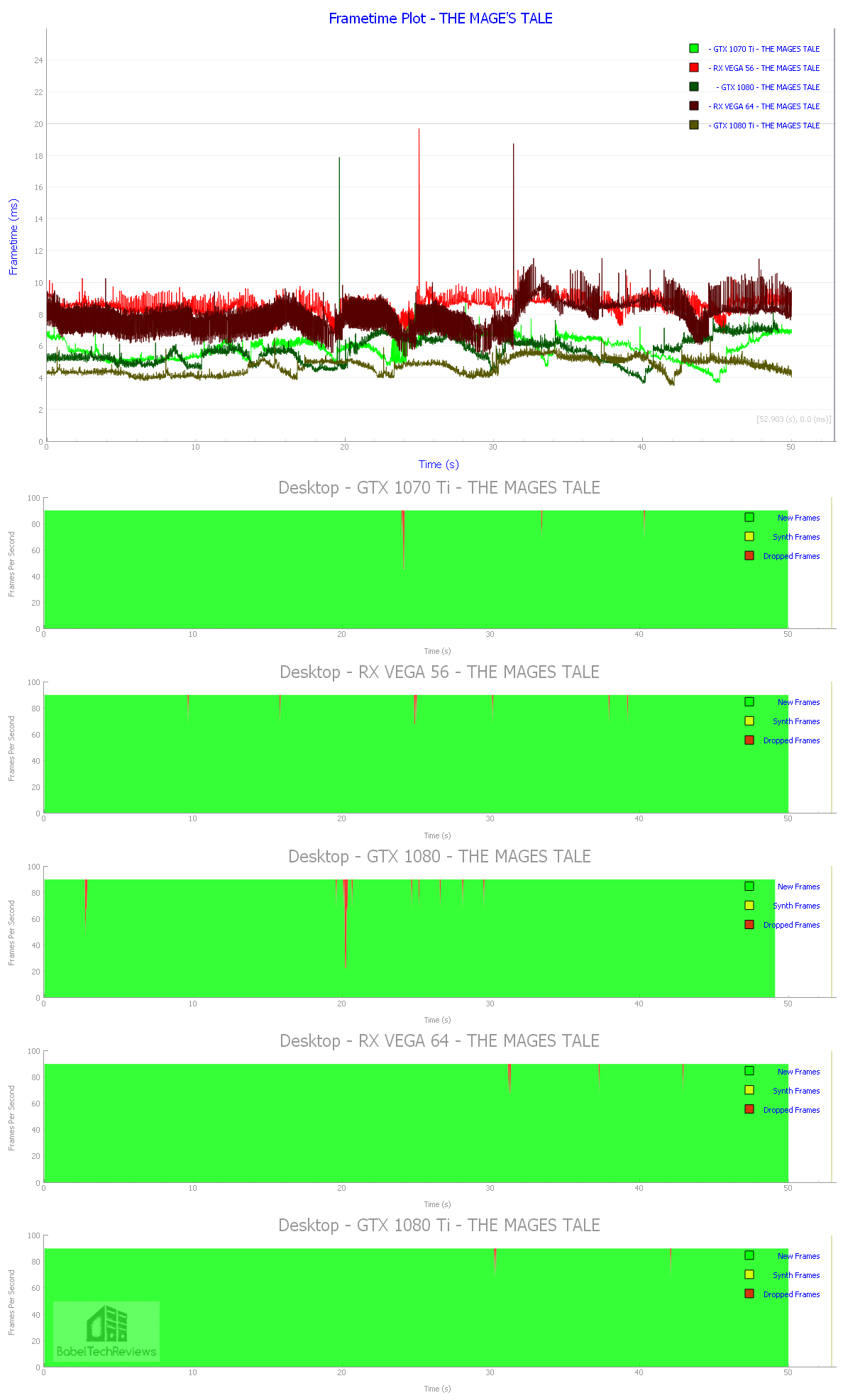

Obduction

Obduction is an adventure video game developed by Cyan Worlds that is considered the spiritual successor to Myst and Riven. A player finds himself in a strangely familiar alien world that he must explore to return home, and there is a big emphasis on puzzle solving which gets more difficult as the player progresses. There are 4 main areas of the game which may take anywhere from 5 to 15 hours to complete.

The game may be played seated or standing and the comfort level is reasonable as locomotion is provided by either teleportation or by a smooth forward movement with snap turn options. Unfortunately, there are still issues with the way that Touch is implemented and it is often difficult to interact with objects which definitely can break immersion. It is also possible to play with a gamepad.

Let’s look at FCAT VR frametimes of Obduction running at Epic settings:

Obduction is still a mess performance-wise. It is playable and some configurations will work better than others. However, it is such a beautiful game with great puzzles and such a good story that one may even forgive the difficulty of interacting with the objects in the world. It’s a flawed masterpiece that doesn’t look like it will be fully patched for VR.

Let’s check out Project CARS 2.

Project CARS 2

Racing games are among the very best VR experiences to be had. Project CARS 2 is a sim, and although it can be dumbed-down into an arcade-style racer, it is best played with a racing wheel and pedals. Although the original game was ground-breaking in many regards, the sequel is a solid improvement over Project CARS, especially with regard to having more of everything – improved weather and changing track conditions, as well as better handling and greatly improved tire physics. We use the same track, California’s Highway 1 as in the original, but we now use Stage 3 as our benchmark run in Project CARS 2 for both our desktop and VR runs.

We originally reviewed Project CARS 2 when it released at the end of September, but our runs cannot be compared as we have picked a more demanding part of the track to test with. We use the highest settings – except for low Motion Blur plus SMAA Ultra – which force most of our cards into reprojection.

Here are the frametimes and intervals plots:

All of the cards go into major reprojection except for the GTX 1080 Ti which just manages 90 FPS at our extreme settings. Project CARS 2 continues to be our very favorite racing game for VR or for desktop play. Let’s check out Robinson: The Journey.

Robinson: The Journey

Robinson: The Journey is a first-person adventure/puzzle game developed by Crytek using the CryEngine. Just like with Crytek’s PC games, the visuals are among the very best to be found in any VR game. Robinson: The Journey is a blast to play in VR, but it’s short and can be completed in about 6 hours.

We benchmarked at the highest settings as below, but with the resolution scale set to 1.0x.

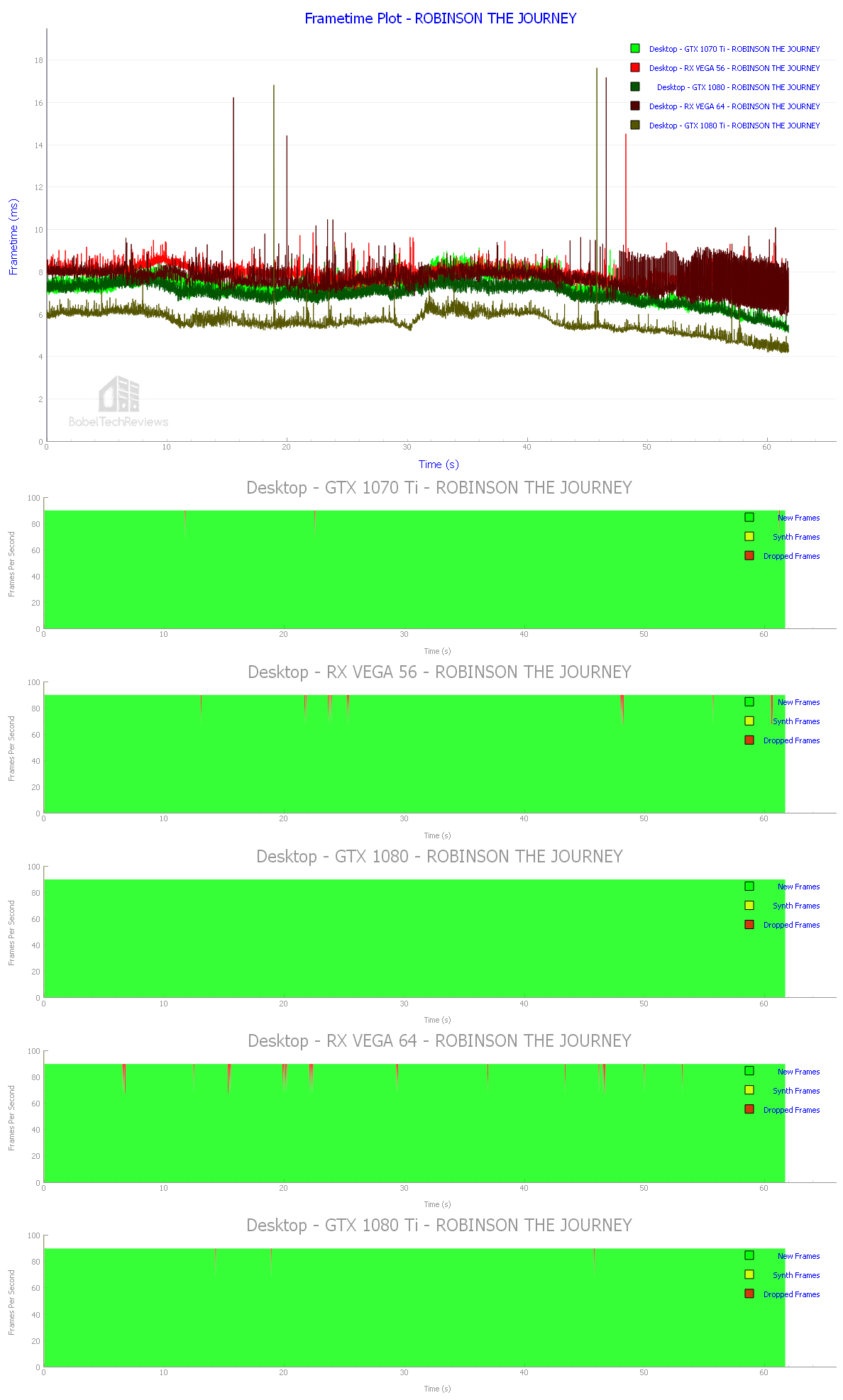

Here are the frametimes of our 5 competing video cards:

All five cards can handle increasing the resolution scale or the pixel density to add to the immersion by sharpening up the visuals and decreasing the screendoor effect. Overall, Robinson: The Journey is a visually impressive game that appears well-optimized and it gives a satisfactory experience across all of our cards at the highest settings.

Let’s look at Serious Sam: The Last Hope, The Unspoken, and The Vanishing of Ethan Carter.

Serious Sam: The Last Hope

Serious Sam: The Last Hope is a lot of fun and is also very good for benching. A large patch released last summer completed the number of planets that were promised, and the game gets more challenging as one continues to play, which is typical of the series.

Serious Sam: The Last Hope is a lot different from the Serious Sam PC games as VR places the player into a theater-like setting facing forward in a mostly fixed position while you are rushed by many waves of enemies from the front, left and right sides. Unlike the PC version, you have real 2-handed gunplay and can use vastly different strategies.

There are a plethora of options that can be customized individually, but we picked default Ultra for the four basic performance settings.

Here are the graphs using all-Ultra settings:

All of our cards can manage Ultra settings with no reprojection. This is the second game where the Vega 56 edges out the GTX 1070 Ti and the Vega 64 edges the GTX 1080 while the GTX 1080 Ti is still King. Let’s check out The Unspoken.

The Unspoken

The Unspoken is a multiplayer urban magic fight club set in contemporary Chicago whose name is so secret that it is unspoken. There is now a single player mode, although it is still primarily a multiplayer game that makes one of the best and most intuitive uses of the Touch controllers that we have found to date. Since it has been patched to include a very decent single player campaign, we highly recommend this game!

You must choose a wizard class and artifacts before you begin. Artifacts are powerful spells that can be used on occasion that require a more complex set of gestures than the usual attack or defense spell. The spells are simple and intuitive and the gesture motions are quite natural.

The Unspoken appears well-optimized and we picked the highest Ultra settings for our five cards.

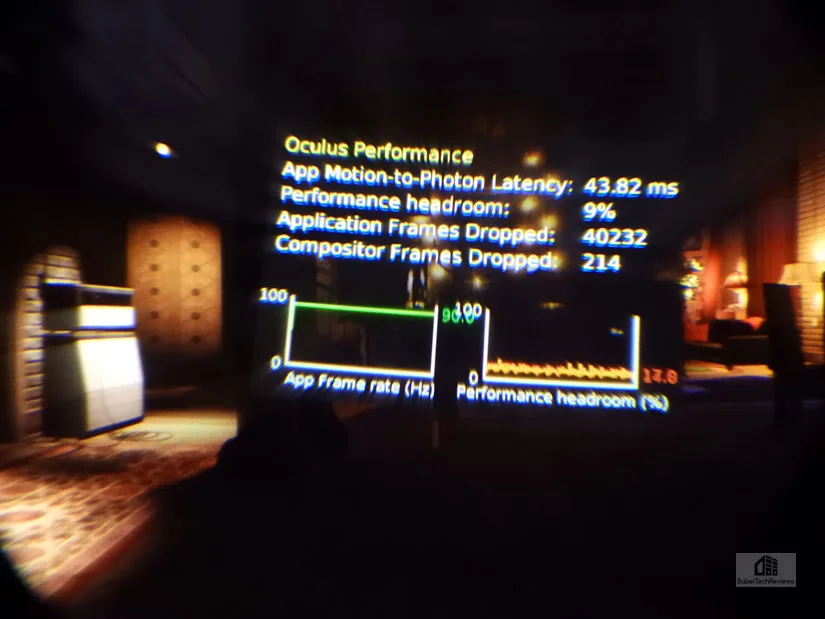

We performed our benchmark on the Midway Pier by casting one skull spell after another. We used the very highest settings and the frametime graph shows the comparative performance of all five cards. The dips and rises in the charts tend to match up with the more demanding special effects of each spell being cast.

This is the third game where the Vegas edge out the competing GTXes with the exception of the GTX 1080 Ti. However, all of our cards can handle ultra settings with no reprojection and few dropped frames. Let’s finally check out The Vanishing of Ethan Carter as our 15th VR game benchmark.

The Vanishing of Ethan Carter

The Vanishing of Ethan Carter is extraordinarily well-adapted for VR with the exception that the locomotion method employed can lead to intense VR sickness if the player does not take care to move and turn very slowly. The visuals are extraordinary and among the best that the Unreal Engine 4 can provide – all with reasonable performance.

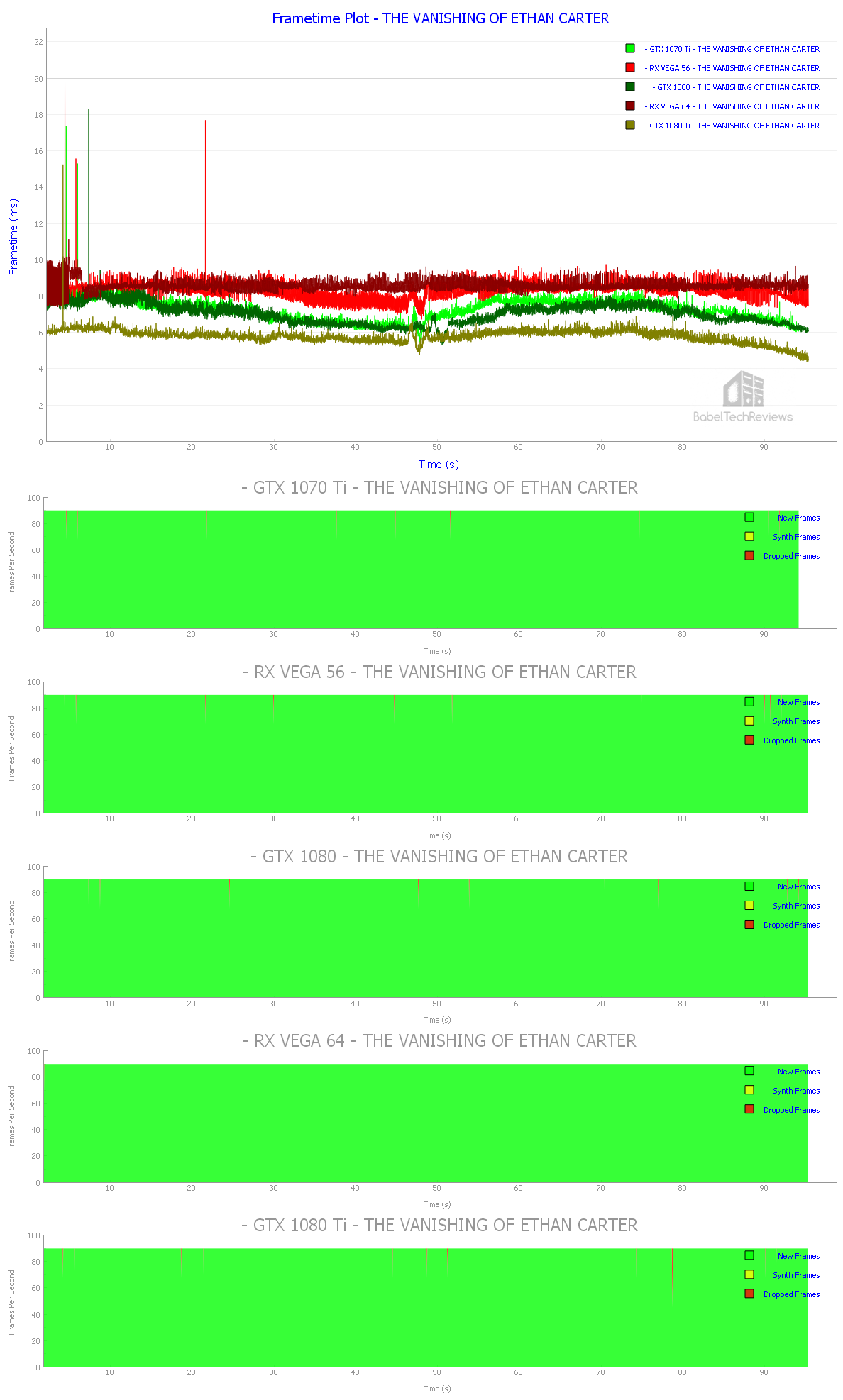

In The Vanishing of Ethan Carter, a player can move among the trees of a realistic forest and see them from any perspective – you can even “stoop down” to look under objects and closely examine them from their underside, which is perfect for this kind of walking simulator supernatural detective story. It is recommended only if you can handle the motion. Here are our frametime and interval plots for our 5 video cards:

All of our cards can easily manage 90 FPS and we would highly recommend setting the pixel density to 1.20 or higher. The few dropped frames do not affect the gameplay noticeably.
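As a rough guide to what a pixel density increase costs, the sketch below assumes that the pixel density setting scales both the width and the height of the render target, so the number of pixels rendered grows with the square of the setting. The helper name is ours and purely illustrative; actual performance will not scale exactly with pixel count.

```python
def pixel_multiplier(pixel_density: float) -> float:
    """Relative number of pixels rendered versus a pixel density of 1.0,
    assuming the setting scales both axes of the render target."""
    return pixel_density ** 2


for pd in (1.0, 1.2, 1.5):
    print(f"pixel density {pd:.2f} -> {pixel_multiplier(pd):.2f}x the pixels")
```

Under that assumption, a pixel density of 1.20 asks the GPU to render about 1.44 times as many pixels, which is why it is only worth raising when a card has headroom to spare.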

Let’s head for our conclusion.

Unconstrained Framerate Leaderboard

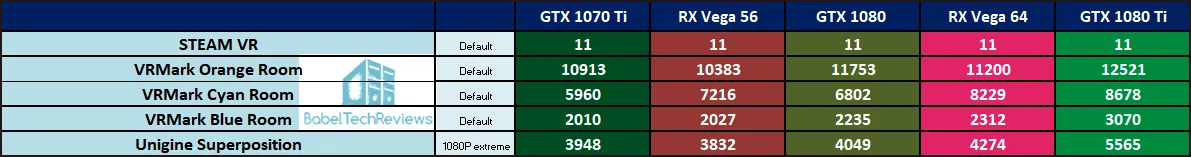

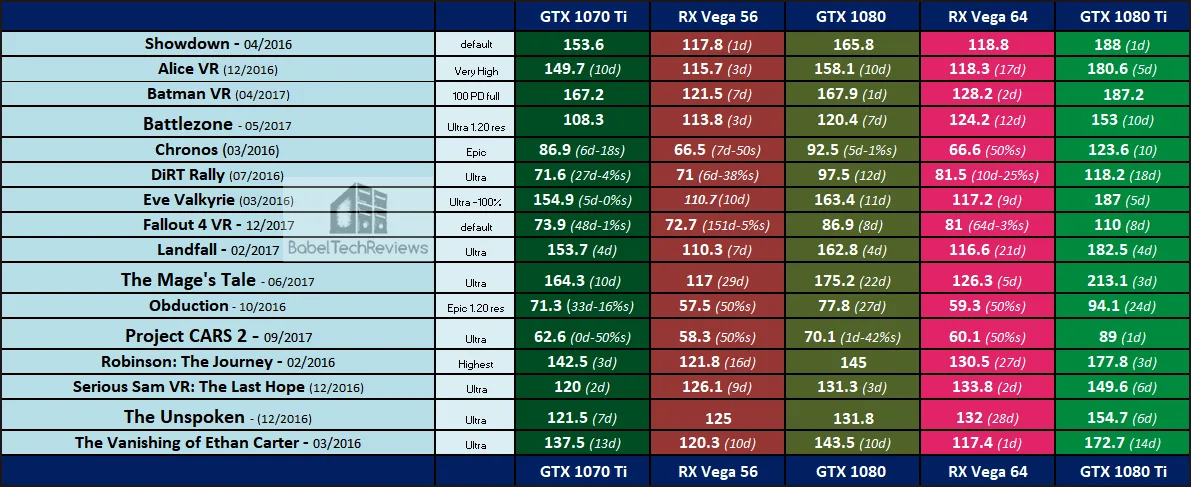

Here is the unconstrained FPS leaderboard summary for our 15 VR games, which allows us to see how quickly the system could have displayed the frames if not for the fixed 90 Hz refresh cadence. All cards were benched on the latest drivers. The first 5 benchmarks are synthetic and are expressed by “scores” where higher is better. Showdown is a demo using the Unreal 4 engine and the other 15 applications are VR games.

The bold number represents the unconstrained FPS and higher is better. Numbers in parentheses show the number of frames dropped as “d” and the number of synthetic frames as “s”. In these cases, lower is better, and if there is neither d nor s, then no frames were dropped and no synthetic frames were produced, which is ideal.
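For readers who want to see how such a summary line could be put together, here is a small Python sketch. It assumes that unconstrained FPS is simply 1000 divided by the average render time of the frames the game actually rendered, and that each frame carries a dropped or synthetic flag. The `Frame` record and the `summarize` helper are hypothetical names of ours; FCAT VR’s own calculation and log format may differ. The sample values are placeholders, not measured results.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Frame:
    render_ms: float         # time the GPU needed to render this frame
    dropped: bool = False    # frame missed its refresh interval
    synthetic: bool = False  # frame was generated by ASW, not by the game


def summarize(frames):
    """Return (unconstrained FPS, dropped count, synthetic count)."""
    # Synthetic frames were not rendered by the game, so they are
    # excluded from the render-time average.
    rendered = [f.render_ms for f in frames if not f.synthetic]
    unconstrained = 1000.0 / mean(rendered)
    dropped = sum(1 for f in frames if f.dropped)
    synthetic = sum(1 for f in frames if f.synthetic)
    return unconstrained, dropped, synthetic


# Placeholder frames for illustration only.
frames = [
    Frame(8.0),
    Frame(8.0),
    Frame(8.0, dropped=True),
    Frame(0.0, synthetic=True),
]
fps, d, s = summarize(frames)
print(f"{fps:.1f} ({d}d, {s}s)")  # 125.0 (1d, 1s)
```

A render time of 8 ms corresponds to 125 unconstrained FPS even though the headset only ever displays 90, which is what makes the figure useful for comparing headroom between cards.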

The above individual unconstrained FPS results are averaged over multiple benchmark runs and may not match up with each graph exactly although they are close. The cards are ranked in terms of average performance using fifteen VR game benchmarks. Clearly the GTX 1080 Ti is the fastest video card for VR, and together with the GTX 1080, they are able to provide a premium VR experience. The RX Vega 64 provides a somewhat similar class of performance to the GTX 1080 although generally lower, and they are both more consistent than the GTX 1070 Ti and the RX Vega 56. We have seen significant performance increases for the Vega 64 over 4 months ago, but it still performs below the GTX 1080, and the GTX 1070 Ti is generally faster than the Red Devil RX Vega 56.

AMD has finally delivered on its promise for premium VR with the aftermarket RX Vega 56 and the liquid-cooled RX Vega 64, although they still trail the GTX 1070 Ti and the GTX 1080 respectively. AMD’s Vegas win only 3 of the 15 titles that we tested versus their competitors, and clearly, the GTX 1080 Ti is in the top VR class by itself, winning every single benchmark by a large margin.
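The ranking and the win counts above can be reproduced from a table of per-game results with a few lines of Python. This sketch assumes a plain arithmetic mean of unconstrained FPS across games and counts a “win” as the highest figure in a given game; the card names, game names and numbers below are placeholders and are not our measured results.

```python
from statistics import mean

# Placeholder numbers for illustration only; NOT measured results.
results = {
    "Card A": {"Game 1": 150.0, "Game 2": 100.0},
    "Card B": {"Game 1": 120.0, "Game 2": 110.0},
}


def rank_by_average(results):
    """Sort cards by their mean unconstrained FPS, fastest first."""
    averages = {card: mean(per_game.values()) for card, per_game in results.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


def count_wins(results):
    """Count, per card, the games in which it posted the highest FPS."""
    games = next(iter(results.values())).keys()
    wins = {card: 0 for card in results}
    for game in games:
        best = max(results, key=lambda card: results[card][game])
        wins[best] += 1
    return wins


print(rank_by_average(results))  # [('Card A', 125.0), ('Card B', 115.0)]
print(count_wins(results))       # {'Card A': 1, 'Card B': 1}
```

Note that the two views can disagree: a card can lead on average while splitting individual titles, which is why we report both the ranking and the per-game wins.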

We feel that it is important to understand and compare performance of VR games across competing video cards so as to make informed choices. It is important to get a judder-free VR experience as your health is literally at stake! VR sickness is quite unpleasant.

BTR plans to stay at the forefront of the VR “revolution” and we have added VR benching to all of our video card reviews in addition to reviewing and benchmarking the 35 latest PC games. We are playing and will add Lone Echo, Raw Data, ARKTIKA.1, Robo Recall and Arizona Sunshine to our next VR review for a total of 20 games. But next up, we will be overclocking the PowerColor Red Devil RX Vega 56 for a showdown with the overclocked Gigabyte GTX 1070 Ti, and we will follow up with a mega 50-game RX Vega CrossFire vs GTX Pascal SLI Showdown after CES.

Stay tuned.

Happy Gaming!
