A Gamer’s View of GTC 2022 featuring Jensen’s Keynote with Implications for GeForce Gaming & VR

NVIDIA’s GPU Technology Conference (GTC, held virtually this year March 20-24) has always been this editor’s favorite in-person annual tech event, and this editor has attended nine out of ten GTCs since NVISION 08. This year’s virtual one hour and forty minute GTC 2022 keynote by NVIDIA’s CEO & Co-Founder, Jen-Hsun Huang (AKA “Jensen”), did not disappoint. His announcements setting the stage for GTC 2022 were among the most exciting of any GTC, with some bordering on what appears to be science fiction made virtually real.

The GTC is an important annual trade event, formerly held at the San Jose Convention Center and now held virtually. Every major auto maker, most major labs, and every major technology company are represented, yet GeForce gaming is absolutely not the focus. However, some of these announcements have strong implications for gamers, VR, and the future of gaming, and we will focus on them. Two years ago, NVIDIA released the Ampere architecture for industry at GTC in May 2020, and by September, GeForce video cards based on Ampere were released for gamers. The GPUs are not the same but they share the same architecture.

Let’s look back to GTC 2018, just four years ago. Jensen began his keynote by pointing out that photorealistic graphics, as used by the film industry, rely on ray tracing, which requires an extreme amount of calculation. Ray tracing in real time had been the holy grail for GPU scientists for the past 40 years. An impressive Unreal Engine 4 RTX demo was shown running in real time on a single $68,000 DGX using four Volta GPUs. It was noted that without deep learning algorithms, ray tracing in real time is impossible.

Three years later, NVIDIA ran the same demo on its second generation GeForce RTX video cards in real time. Amazing progress was made, and ray tracing has now been adopted by Intel, AMD, Microsoft, and all of the major console platforms. Yet this year, RTX was barely mentioned except as a way to create realistic lighting for virtual worlds. The emphasis is now on AI, industry, and the Omniverse for collaboration, which is NVIDIA’s version of the “metaverse”, the next generation of the Internet. This “Spatial Internet” will require creating a virtual world that mirrors our real world in every detail, and it will require massive computing power. NVIDIA believes that its Hopper GPUs will enable the tools needed to undertake this immense task.

NVIDIA has committed to a two year release cadence for its major products, with a refresh happening in between. That means we can expect new GeForce RTX cards on the same cycle: a new series every two years and a refresh in between. We are due a new GeForce GPU, Lovelace, based on Hopper this year, probably in Q3. The new H100 Hopper GPU announced by Jensen today is touted as having a huge performance improvement over A100 Ampere.

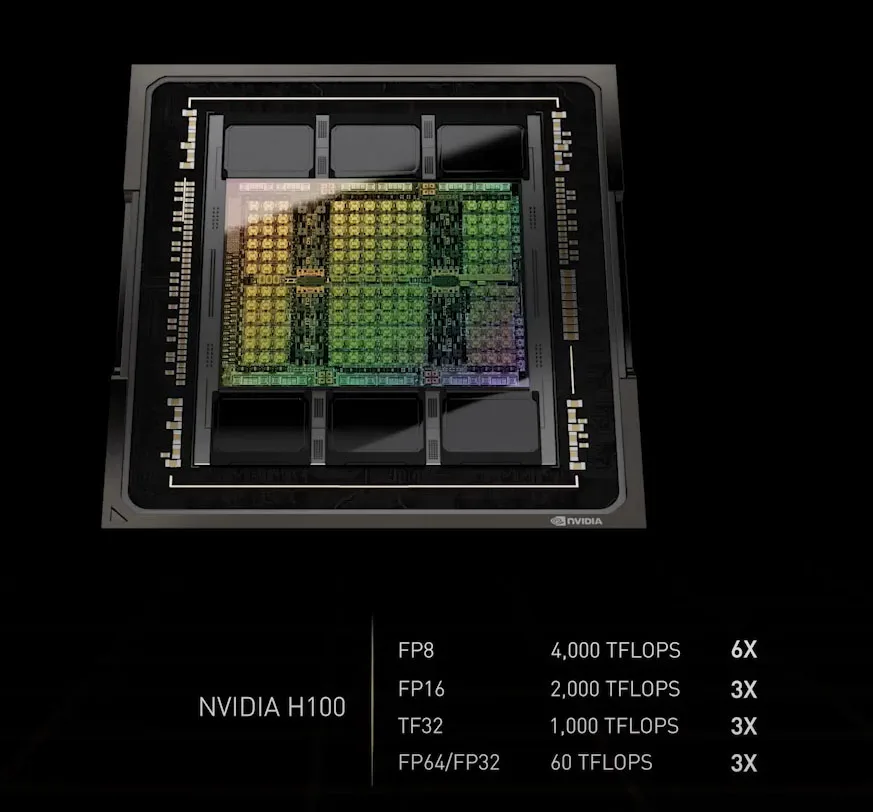

Jensen announced both a new GPU and a new ARM-based CPU, codenamed Hopper and Grace respectively, after the American scientist and US Navy Rear Admiral, Grace Hopper. This new GPU architecture has new and much more powerful Tensor Cores, which are claimed to accelerate the training of machine learning Transformer models on H100 GPUs by a factor of six compared with A100. The newest NVLink can connect up to 256 H100s with nine times the bandwidth for supercomputers.

At 80 billion transistors, Hopper is the first GPU to support PCIe Gen5 and HBM3, with a memory bandwidth of 3TB/s. The PCIe version of H100 uses 350W and is claimed to be three times faster than A100 at FP16/FP32/FP64 compute, and six times faster at 8-bit floating point math. For those interested in the Hopper architecture, NVIDIA has published a whitepaper for download as a .pdf.

This kind of massive 3X increase in computing performance with a reasonable power increase bodes well for the Lovelace GeForce GPU based on Hopper to also be a huge improvement over the current Ampere RTX video cards. Since Hopper has moved to TSMC’s 4N node from Ampere’s 7nm, there is a possibility that Lovelace will also be rather power hungry like the H100 but with a similarly solid performance increase over Ampere GeForce, or perhaps more power efficient with a more modest gain.

We will have to wait and see, as we do not know if the RTX 4000 series cards will be on the TSMC 4nm node like Hopper or on 5nm. If on 4nm, there may be even more gaming performance gains, as Ampere GeForce GPUs are made on the Samsung 8N node unlike A100, which is on the TSMC 7nm node. Of course, this is all speculation since we don’t have any concrete details yet, but it bodes well for GeForce gamers this year.

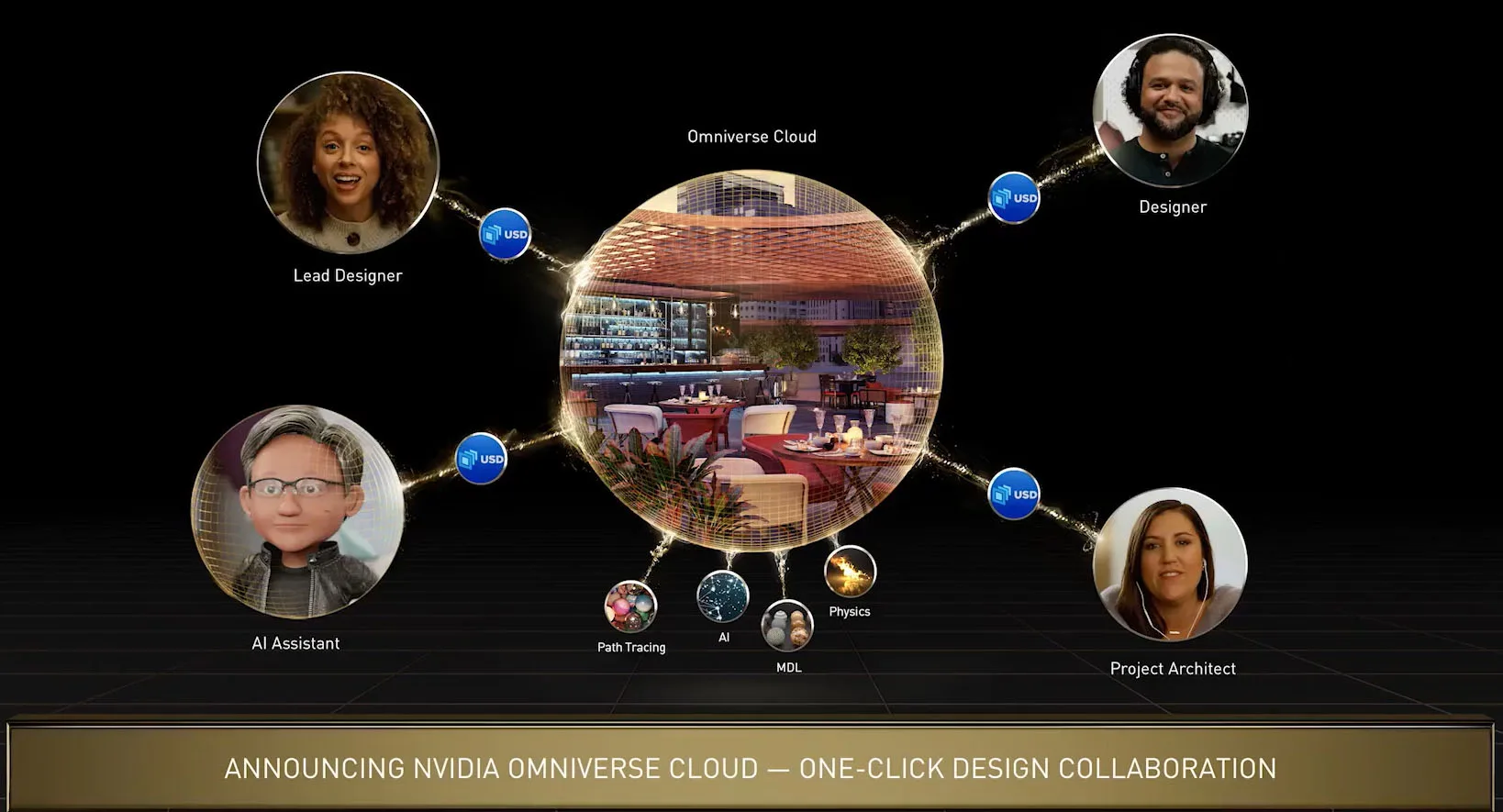

Another major progression detailed at GTC 2022 is from Project Holodeck, which was first announced at the GTC in 2017. It has grown into NVIDIA’s Omniverse, which is primarily used by industry professionals for real time collaboration in virtual reality across the Internet. NVIDIA’s ambitious goal is to create a digital twin of our own world. This would enable global 3D design teams working across multiple software suites to collaborate in real time in a shared, photorealistic virtual space, with real time lighting that interacts perfectly with matter and with incredible accuracy to 1mm. Virtual game worlds do not have to obey the laws of physics and other real world rules that infrastructure collaboration requires.

Along with the news coming out of the GTC arrived related news from the Game Developers Conference (GDC) in the form of the new NVIDIA Omniverse for Game Developers. New Omniverse features will make it easier for developers to share assets, collaborate, and deploy AI to animate characters’ facial expressions using a new game development pipeline.

Generally, global teams of artists collaborate to build massive libraries of 3D content which include realistic lighting, accurate physics and AI-powered technologies. Omniverse helps game developers build photorealistic and physically accurate games by connecting artists, their assets, and software tools in a single platform.

Using the Omniverse real-time design collaboration and simulation platform, game developers can either use existing AI and RTX tools or build custom ones to speed up and enhance their development workflows. Here are some of the tools and updates available to them:

- Omniverse Audio2Face is an AI-powered application that allows character artists to generate high-quality facial animation from an audio file. It supports full facial animation with the ability to control the emotion of the performance, so game developers can give their characters more realistic and relatable expressions.
- Omniverse Nucleus Cloud allows game developers to share and collaborate on 3D assets with their teams in real time.
- Omniverse DeepSearch, an AI-enabled service, allows game developers to use natural language inputs and imagery to quickly search through their catalogs of 3D assets, objects, and characters, even untagged ones.
- Omniverse Connectors are plugins that enable “live sync” collaborative workflows between third-party design tools and Omniverse, allowing game artists to exchange language data between the game engine and Omniverse.
- The Omniverse platform received major updates to the Create, Machinima and Showroom apps, with View coming soon.
- NVIDIA Omniverse’s Unreal Engine Connector and Adobe Substance 3D Material Extension betas are expanding the Omniverse ecosystem, with Adobe’s Substance 3D Painter Connector beta launching shortly.
- Artists may now use Pixar HD Storm, Blender Cycles, Chaos V-Ray, Maxon Redshift and OTOY Octane renderers within the viewport of all Omniverse apps.

From our interview with NVIDIA last year we already know that they “are working to make high fidelity experiences possible untethered, from anywhere, with our CloudXR technology” for VR, no doubt using their advanced servers, BlueField, and 5G. Gaming has made the metaverse or omniverse possible with game engines that describe what happens in real time in their virtual worlds, and now it has come full circle by using the same tools to create a digital twin of our own world. AI is the focus of Hopper and we can expect to see more of its use for gaming.

At GTC 2021, Jensen announced a GPU-accelerated software development kit for NVIDIA Maxine, which has been supercharged this year. This cloud-AI video-streaming platform allows developers to create real-time video-based experiences that can be deployed to PCs, data centers or in the cloud. Maxine also enables translation into multiple languages, including real-time voice-to-text translation for a growing number of languages. At this GTC, Jensen demonstrated Maxine translating between English, French, German and Spanish seamlessly using the same voice.

Think of the time savings for game developers who have to translate their games into multiple languages! Since it can be used in the cloud, future RPGs may be able to have their NPCs literally talk to a gamer, instead of the gamer having to click on multiple choice responses.

Maxine enhances tools to enable real-time visual effects and motion graphics for live events, with real-time, AI-driven face and body tracking along with background removal. These applications may be used to create AI-driven and player avatars in a VR or traditional online game setting. Artists can track and mask performers in a live performance setting by using a standard camera feed, eliminating the issues associated with expensive hardware-tracking solutions.

Developing a Ready Player One “Oasis” in the Omniverse depends on a fast and secure Internet made possible by BlueField and GeForce NOW technology coupled with 5G. To that end, Hopper has enhanced security to safeguard IP as thousands of people interact, collaborate, and eventually play together in the new spatial Internet.

NVIDIA’s AI has already transformed gaming and industry. We are looking forward to it being applied to gaming and VR in ways that could only be imagined as Sci-Fi a few years ago. The GTC is relevant to GeForce gaming.

This is just a short overview of Jensen’s keynote and the announcements delivered today. You can watch it in full from the YouTube video above. For all of NVIDIA’s GTC Day One announcements, this excellent Reddit thread at r/nvidia collects everything conveniently in one place.

Exciting times to be a gamer!

Happy gaming!