We recently purchased an XFX Fury X for $699 in our quest for a quiet pump after returning two Sapphire Fury Xes with whining pumps. It has been well over 3 months since Fury X was launched as an “overclocker’s dream”, and we wanted to see if anything has changed versus the GTX 980 Ti.

Originally, we purchased a Sapphire Fury X at the end of June and reviewed it against the reference GTX 980 Ti and also versus the EVGA GTX 980 Ti SC. Besides being generally slower than the GTX 980 Ti, we found that our Fury X sample had a very irritating pump whine, as well as being a poor overclocker.

Because of its annoying pump whine, we secured an RMA and received a second Sapphire Fury X several weeks later. We evaluated our second Fury X and published our results, which again showed pump noise and poor overclocking characteristics. Since our second Fury X had the same pump whine, we returned it for a refund as there was no stock left for an RMA.

Two weeks ago, we purchased an XFX Fury X with the hope that the pumps would be fixed. Unfortunately, we probably received our XFX Fury X from the same batch as our original units. We notice Ver. 1.0 on the UPC sticker. Interestingly, shortly after we received it, another substantial Fury X batch appears to have arrived in the USA. Evidently, Fury X came back into stock just over ten days ago, and it is apparently not selling out at its $650-plus price point.

This XFX Fury isn’t quite as noisy as the last, and the ultrasonic frequencies are less irritating. However, we are an audio-sensitive user, and our PC usually sits right next to us while gaming. Our temporary solution for benching Fury X was to move the PC into an adjoining room and to shut the door.

We ran the same overclocking tests that we ran with our first two units. Our first Fury X managed an overclock of only 25MHz to the core and 24MHz to the High Bandwidth Memory (HBM) – 1075MHz/524MHz overclocked – compared with 1050MHz/500MHz stock. We managed a better stable overclock with the second Sapphire Fury X sample of +50MHz, but the HBM did not overclock quite as well. Our final stable overclock reached 1100MHz core/523MHz memory. How does the XFX Fury X overclock? We will give you overclocked numbers compared with the overclocked reference GTX 980 Ti on the performance chart.

It has been over 3 months since the Fury X was released into retail, and it is clear that some units are still being sold with pump whine that sensitive users may find intolerable. BTR needs to have a Fury X for our benching, and we can hope that our next one is whine free.

If you want a good look at our two main competing cards, check out our original Sapphire Fury X showdown versus the EVGA GTX 980 Ti SC+.

Let’s take a brief look at the XFX Fury X.

The XFX Fury X

All of the Fury X cards are the same except for the box cover and the accessories each of AMD’s partners may choose to include. There is no game bundle, or any bundle other than the driver CD and start guide offered with the XFX Fury X for $699.

Our Testbed of Competing Cards

Here is our testbed of competing cards and we shall test 28 games and 3 synthetics using Core i7-4790K turbo locked to 4.4GHz, ASUS Z97+ motherboard and 16GB of Kingston “Beast” 2133MHz HyperX DDR3 on Windows 10 Home Edition:

- XFX Fury X – 4GB – $699 (AMD’s new Fiji flagship single GPU card, plus our own OC)

- GTX 980 Ti – 6GB – $649 – (Nvidia’s mainstream GM200 single-GPU Maxwell flagship, plus our own OC)

- GTX 980 Ti SLI

- GTX 980 4GB, $499 – formerly $549 and Nvidia’s flagship before the TITAN X

- GALAX GTX 970 EXOC, 4GB – $319

- MSI R9 390X OC 8GB – $429

- R9 290X 4GB – reference non-throttling 1000MHz Uber mode, originally $579 – discontinued

- R9 290X CrossFire – reference 1000MHz, non-throttling Uber mode.

Our latest game benchmarks include Mad Max and MGSV.

How does the GTX 980 Ti compare with its rival, AMD’s top single GPU, the XFX Fury X three months after launch?

This is the big question: How does the GTX 980 Ti at $649 compare now with the XFX Fury X at $699?

Unboxing the XFX Fury X

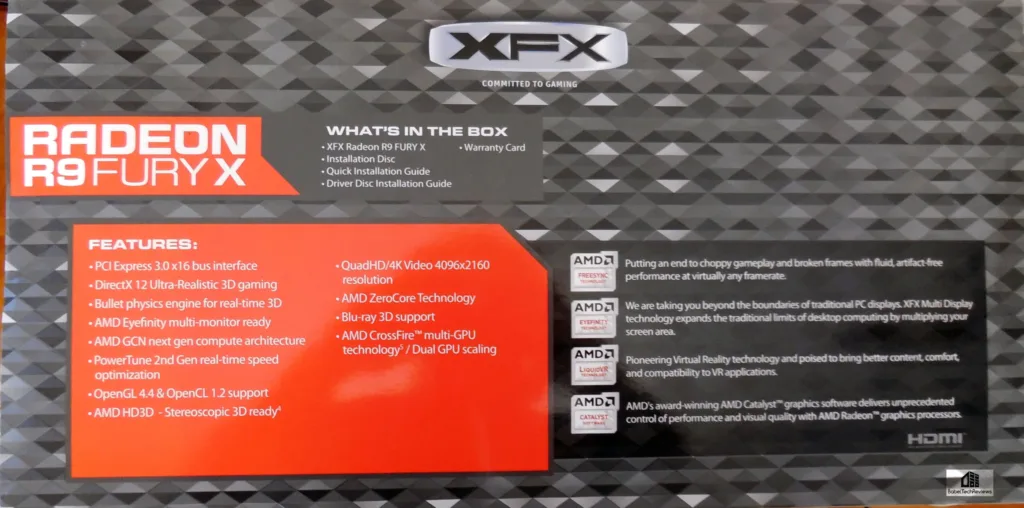

The XFX Fury X box is huge. It advertises the ultra-wide 4096-bit HBM, HDMI, and DirectX12.

On the back, what’s in the box and other features including Eyefinity are also touted, as Fury X is designed for multi-panel gaming.

The packing is superb and it is designed to stand up to your delivery person on a bad transport day. Inside the box is a driver CD and quick installation guides.

The XFX Radeon Fury X is styled very well with an aluminum exoskeleton and soft touch sides. The radiator is large and it is connected to the pump inside the card by braided cables.

Two 8-pin connectors are available but there are no adapters included with the card in case your PSU only supports one 8-pin and one 6-pin PCIe cable. The Radeon logo lights up and is a perfect counter to the lighted GeForce logo. AMD definitely succeeded in making Fury look like a premium brand.

Here is the other side; the soft-touch sides are a very nice feature.

Here is the Fury X with its radiator side by side. There are no CrossFire fingers/bridges with Hawaii/Grenada and Fiji GPUs.

AMD did not include an HDMI 2.0 port like its GeForce competitor. That means that most 4K TVs will require an active adapter to run over HDMI at 60Hz. Most current 4K TVs do not use DisplayPort, and this is a serious disadvantage for Fury X 4K TV gamers compared with the GTX 980 Ti.

The XFX Radeon Fury X is a handsome card. AMD has got their industrial design right and it is nice to have a small card, although the cooler itself is big and perhaps may be hard to fit in many smaller cases. We would have issues with our own large Thermaltake full tower case trying to fit more than one Fury X inside.

The specifications look extraordinary for both the GTX 980 Ti and the flagship Fury X, with solid improvements over just about anything else from the previous generation. Let’s check out their performance after we look over our test configuration on the next page.

Test Configuration – Hardware

- Intel Core i7-4790K (reference 4.0GHz, HyperThreading and Turbo boost are on to 4.4GHz; DX11 CPU graphics), supplied by Intel.

- ASUS Z97-E motherboard (Intel Z97 chipset, latest BIOS, PCIe 3.0 specification, CrossFire/SLI 8x+8x)

- Kingston 16 GB HyperX Beast DDR3 RAM (2×8 GB, dual-channel at 2133MHz, supplied by Kingston)

- XFX Fury X, 4GB HBM, reference and overclocked further

- MSI R9 390X OC 8GB, at MSI’s factory overclock.

- VisionTek R9 290X, 4GB, stock reference (non-throttling) Uber clocks (also tested in 290X CF)

- PowerColor R9 290X PCS+ (BF4 edition), 4GB, reference Uber (non-throttling) clocks (also tested in 290X CF)

- GALAX GTX 970 EXOC, 4GB, at GALAX’ factory overclock, supplied by GALAX

- GeForce GTX 980, 4GB, reference version, supplied by Nvidia

- GeForce GTX 980 Ti, 6GB reference clocks and further overclocked, supplied by Nvidia

- EVGA GTX 980 Ti 6GB, used in SLI at reference clocks, supplied by EVGA

- Two 2TB Toshiba 7200 rpm HDDs

- EVGA 1000G 1000W power supply unit

- Cooler Master 2.0 Seidon, supplied by Cooler Master

- Onboard Realtek Audio

- Genius SP-D150 speakers, supplied by Genius

- Thermaltake Overseer RX-I full tower case, supplied by Thermaltake

- ASUS 12X Blu-ray writer

- Monoprice Crystal Pro 4K

Test Configuration – Software

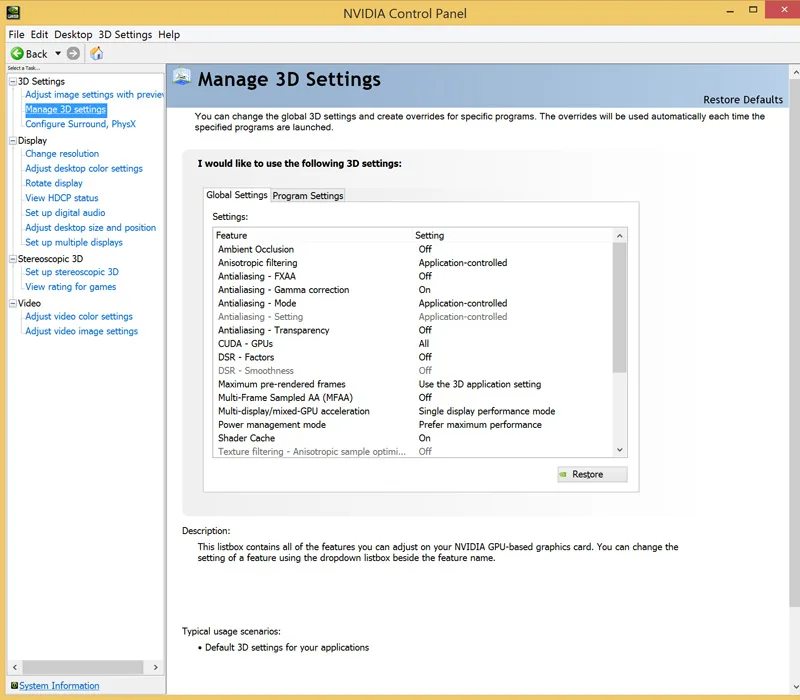

- Nvidia GeForce 355.98 WHQL drivers for the GTX 980 Ti and for GTX 980 Ti SLI. GeForce 355.82 used for the GTX 970 and for both of the GTX 980s. High Quality, prefer maximum performance, single display, use application settings except for Vsync. The latest EVGA Precision X is used for overclocking.

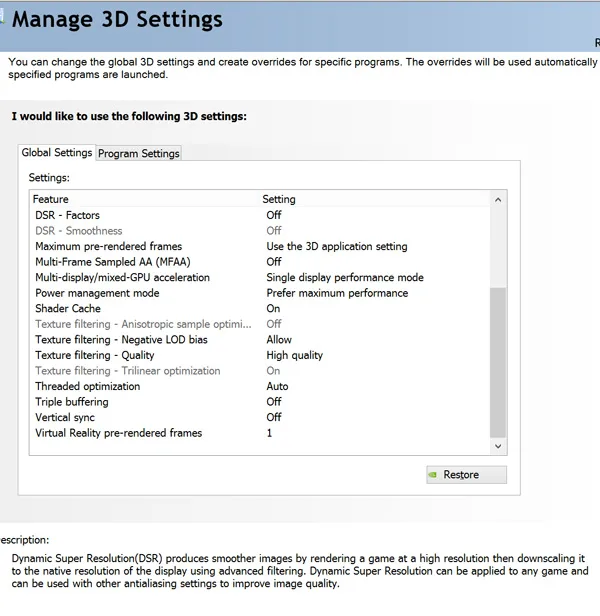

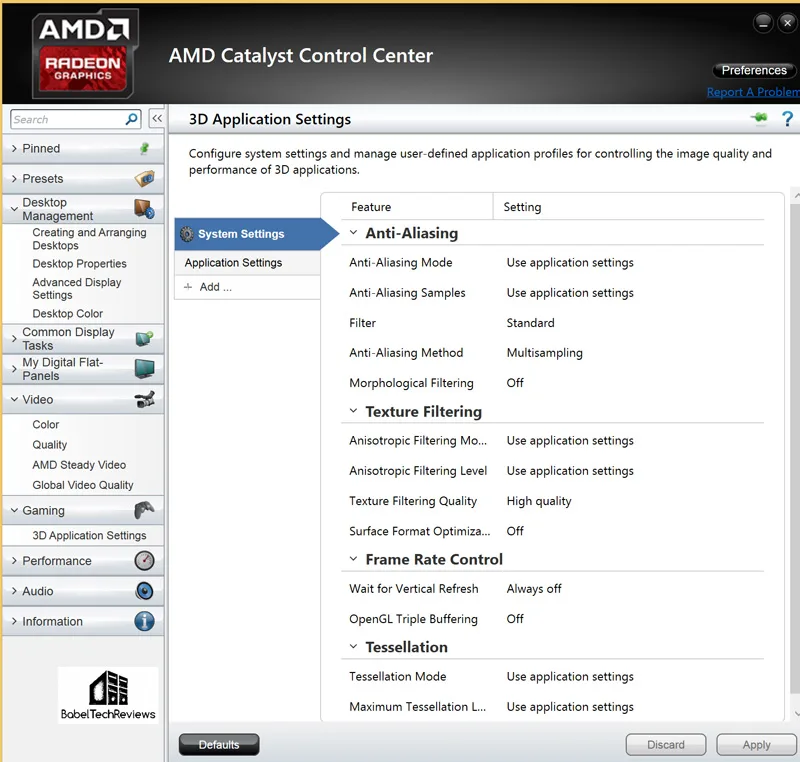

- AMD Catalyst 15.9.1 used for Fury X and 15.8 Beta for all other Radeons. The latest MSI Afterburner is used for overclocking. High Quality, all optimizations off, use application settings except for Vsync.

- VSync is off in the control panel.

- AA enabled as noted in games; all in-game settings are specified with 16xAF always applied.

- All results show average frame rates except as noted.

- Highest quality sound (stereo) used in all games.

- Windows 10 64-bit Home Edition, all DX11 titles were run under DX11 render paths. Latest DirectX

- All games are patched to their latest versions at time of publication.

Here are the settings we always use in AMD’s Catalyst Control Center for our default benching.

Here is Nvidia’s Control Panel and the settings that we run:

The 28 Game benchmarks & 3 synthetic tests

- Synthetic

- Firestrike – Basic & Extreme

- Heaven 4.0

- Kite Demo, Unreal Engine 4

- DX11

- STALKER, Call of Pripyat

- The Secret World

- Sleeping Dogs

- Hitman: Absolution

- Tomb Raider: 2013

- Crysis 3

- BioShock: Infinite

- Metro: Last Light Redux (2014)

- Battlefield 4

- Thief

- Sniper Elite 3

- GRID: Autosport

- Middle-earth: Shadow of Mordor

- Alien Isolation

- Assassin’s Creed Unity

- Civilization Beyond Earth

- Far Cry 4

- Dragon Age: Inquisition

- The Crew

- Evolve

- Total War: Attila

- Wolfenstein: The Old Blood

- Grand Theft Auto V

- ProjectCARS

- The Witcher 3

- Mad Max

- MGSV

- Batman: Arkham Origins

The above is our test bench. Let’s check out overclocking next.

Overclocking the XFX Fury X vs. overclocking the GTX 980 Ti

Overclocking the GTX 980 Ti

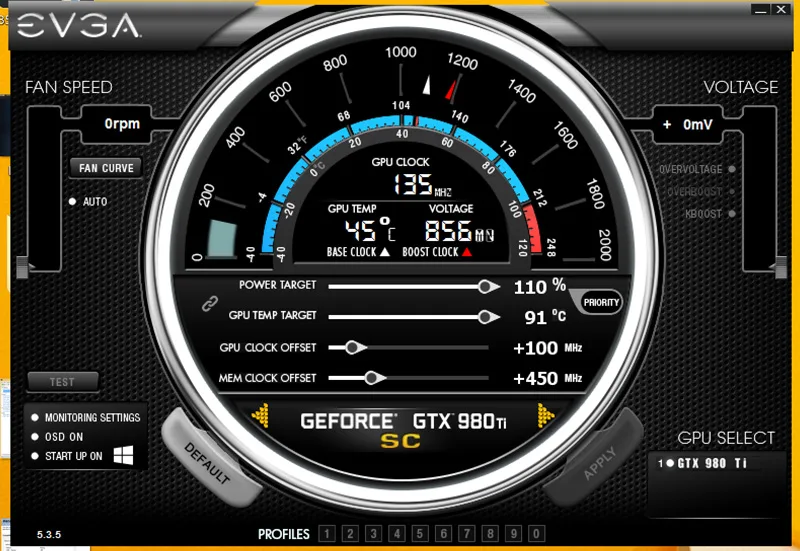

Overclocking the GTX 980 Ti is just as easy as overclocking the GeForce TITAN X, the GTX 980, or any other Maxwell architecture-based card. The reference GTX 980 Ti accepted a stable offset of +230MHz core/+500MHz memory over the base clock of 1000MHz. The reference version Boost peaked at 1418MHz and hit 1408MHz regularly.

We did not adjust any of our cards’ fan profiles (except for the 290Xes whose fans were allowed to spin up to 100% to prevent throttling), nor voltage for our benchmark runs. We did however, push up the temperature and power target controls to maximum since we tested in Summer-like (warm – 78F-80F) conditions. We also made very sure to warm up all of our cards before benching.

We used the very latest version of EVGA PrecisionX for GPU overclocking. You can download PrecisionX for free directly from www.evga.com/precision. It is also available for free on Steam.

Moving up the power slider to 110% and the temperature up to the maximum 91C using EVGA’s Precision showed very little performance gain over simply setting the temperature limiter to 85C. However, we always run our benches with both PowerTune and AMD’s PowerDraw set to their maximum, although we never touch the voltage nor fan profile (except for the 290X fan profile, which is allowed to spin up to 100% to prevent throttling).

The reference GTX 980 Ti’s VGA fan became noticeable over 60% and much more so at 75%. Our reference GTX 980 Ti appears to let the fan spin up a bit higher than the TITAN X with a little more noise under full load.

Overclocking the XFX Fury X

We used the latest version of MSI’s Afterburner, which exposed HBM overclocking. Overclocking the Fury X is problematic and there appears to be almost no headroom, as the 1050MHz clocks appear to be very close to their maximum even with the stock watercooling. After many hours of experimenting with our XFX Fury X, we found we could manage only a +45MHz offset to the core for a maximum of 1095MHz. Adding +5MHz more to reach 1100MHz caused instability in many games. We also found that the heat from the radiator increased significantly with this mini-overclock.

Overclocking the HBM was just as disappointing. We finally settled on a +30MHz overclock after finding that we could push it further to +50MHz, but with no performance improvement.
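To put these offsets in perspective, they can be expressed as a percentage of the stock clocks. The following is only a minimal Python sketch and not part of our benching; the function name `overclock_percent` is our own illustrative choice, and the stock clocks used (1050MHz core and 500MHz HBM for Fury X, 1000MHz base for the GTX 980 Ti) are the figures quoted in this article.

```python
def overclock_percent(stock_mhz: float, offset_mhz: float) -> float:
    """Return an overclock offset as a percentage of the stock clock."""
    return 100.0 * offset_mhz / stock_mhz


# (stock clock, stable offset) in MHz, as quoted in this article.
results = {
    "XFX Fury X core": (1050, 45),
    "XFX Fury X HBM": (500, 30),
    "GTX 980 Ti core": (1000, 230),
}

for name, (stock, offset) in results.items():
    percent = overclock_percent(stock, offset)
    print(f"{name}: +{offset}MHz = {percent:.1f}% over stock")
```

By this simple measure, the Fury X core offset works out to roughly 4% while the GTX 980 Ti core offset is 23%. Note that this compares offsets only; it says nothing about how performance scales with clock speed.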

Fury X runs cool but it requires watercooling to do it. We would say that even after voltage tools were exposed over a month ago, yet not made publicly available, Fury X is still not a great overclocker as it appears to be already pushed to the edge.

Let’s head to the performance charts and graphs to see how the GTX 980 Ti compares with XFX Fury X, and with the top cards of Autumn, 2015.

Performance summary chart

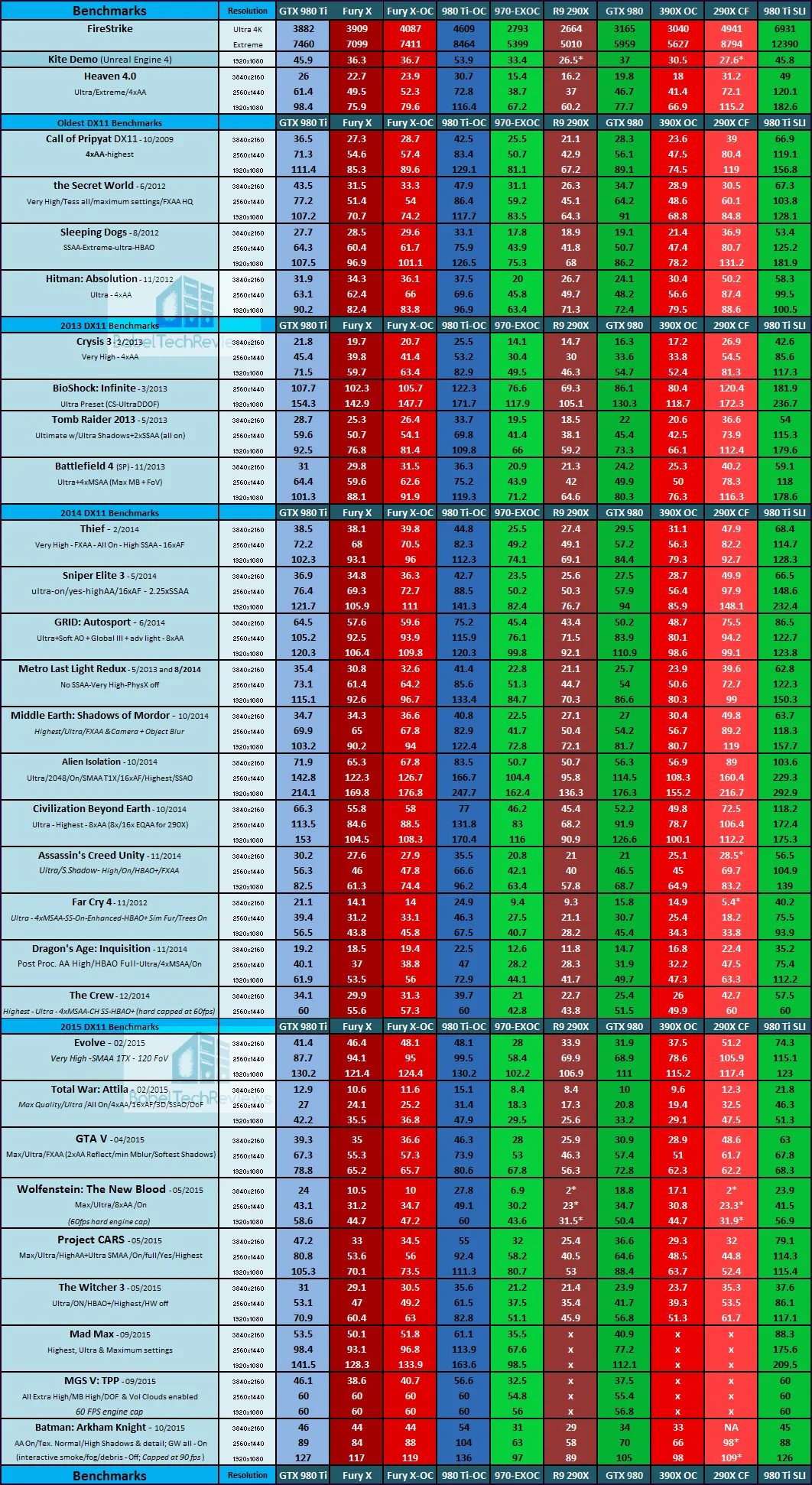

Here are the summary charts of 28 games and 3 synthetic tests. The highest settings are always chosen and it is DX11 with the settings at ultra or maxed. Specific settings are listed on the main performance chart. We have added the “Kite Demo” on Unreal Engine 4 using the default settings at 1920×1080 as measured by Fraps.

The benches are run at 1920×1080, 2560×1440, and 3840×2160. All results, except for Firestrike, show average framerates and higher is always better. In-game settings are fully maxed out and they are identically high or ultra across all platforms. “NA” means the game would not run at the settings chosen, and “X” means the benchmark was not tested at all. An asterisk* means that there were issues with the benchmark, usually visual artifacting.

The summary chart: The Big Picture

This main summary chart is what we call, “The Big Picture” since it places our two test cards into a much larger test bed. Please bear in mind that the GTX 980 and GTX 970, as well as R9 290X/390X results are on slightly older drivers than the Fury and the GTX 980 Ti.

Our chart provides a lot of information across 28 games and 3 synthetics. What we can take away from the results generally is that the GTX 980 Ti is the fastest single GPU video card on the chart. Overall, the GTX 980 Ti is significantly faster than the Fury X. It is a blowout at 1920×1080 where the Fury X is held back by a CPU bottleneck due to drivers, architectural differences, and DX11 multi-threading. It is closer at 2560×1440, but the GTX 980 Ti is about ten percent faster. At 4K, the Fury X finally becomes competitive, but is still bested by the GTX 980 Ti overall.

When we compare the overclocked $699 XFX Fury X against the overclocked $649 GTX 980 Ti, the gap widens significantly further in favor of the less expensive GeForce.

Let’s head for our conclusion.

The Conclusion

Unfortunately, the negatives still accumulate more than 3 months after launch: lackluster Fury X performance below 4K resolution; a minimal 4GB framebuffer that depends on AMD’s drivers to manage it and that may not work well for CrossFireX at 4K; a hot-running Fiji chip with little overclocking headroom; and a lack of quality control with a noisy pump. Together they add up to a general non-recommendation, even at $650.

AMD has missed their target, and Fury X fell quite short of the performance of the GTX 980 Ti. At $649, the GTX 980 Ti is quite a bargain performance-wise compared with the $699 XFX Fury X. The GTX 980 Ti has a larger 6GB framebuffer which makes it suitable for SLI at 4K, whereas the 4GB Fury X is somewhat on the edge even as a single GPU.
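The price gap can also be stated as a percentage. This is a small illustrative Python sketch using only the two prices quoted in this article; the helper name `price_premium_percent` is hypothetical and chosen just for this example.

```python
def price_premium_percent(price: float, reference_price: float) -> float:
    """Return how much more `price` is than `reference_price`, in percent."""
    return 100.0 * (price - reference_price) / reference_price


# Prices in US dollars, as quoted in this article.
fury_x_price = 699.0
gtx_980_ti_price = 649.0

premium = price_premium_percent(fury_x_price, gtx_980_ti_price)
print(f"XFX Fury X premium over GTX 980 Ti: {premium:.1f}%")
```

In other words, the XFX Fury X asks a premium of a little under 8% for a card that is slower overall in our testing.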

We found little overclocking headroom with our 3 retail Fury X samples from their reference 1050MHz core clock. We were only able to manage adding a 25 to 50MHz offset to the core, and less than that for the memory. In contrast, our two GTX 980 Tis appear to have no difficulty overclocking from the reference 1000MHz core to near 1400MHz! And the Fury X pump noise lottery is still very worrisome more than 3 months after launch.

Let’s sum it up:

XFX Fury X Pros

- The XFX Fury X is very impressive as an “Exotic Industrial Design”. It is heavy, solid, and looks great. The AIO cooling may appeal to some.

- At 4K the Fury X trades blows with the reference GTX 980 Ti.

- Fury X is a fast card and a good replacement for the 290X as AMD’s flagship. It is innovative and bold to choose HBM.

- FreeSync eliminates tearing and stuttering.

XFX Fury X Cons

- The price – $699. At even $650, Fury X performance is overall slower than the $650 reference GTX 980 Ti.

- The pump noise may still be a deal breaker for some.

- Even with watercooling, there is a lot of heat coming from the radiator.

- Overclocking of the memory and of the core are poor, and there are no voltage tools yet available.

- A 4GB framebuffer is minimal for 4K, and there may be issues with Fury X CrossFire at 4K at high details/AA.

- Installing 1 large radiator may be difficult for some smaller cases; CrossFire may compound the issue with multiple radiator placement.

- Lack of HDMI 2.0 may turn off 4K TV gamers.

We have no trouble giving a recommendation to the reference GTX 980 Ti. At $649, it looks great, runs cool, overclocks well, and is reasonably quiet; and out of the box it is faster than the Fury X. We suspect that there may be downward pressure on Fury X pricing now that more stock has become recently available.

If you currently game on an older generation video card, you will do yourself a big favor by upgrading. The move to a GTX 980 Ti or Fury X will give you better visuals on the DX11 (and DX12 !) pathway, and you are no doubt thinking of multi-GPU if you want to get the most in gaming performance. Price is the only issue and if you are a bang-for-buck gamer, a pair of GTX 970s or R9 390s cost about the same or more, but they have all of the issues associated with multi-GPU gaming. And if you are looking for current DX11 gaming performance, the GTX 980 Ti is faster than the Fury X.

AMD offers their own great set of features including Eyefinity 2.0 and FreeSync. The XFX Fury X is a well-performing card that just appears to be priced a bit high compared with its competition. Ultimately, the XFX Fury X card simply cannot touch the raw power of the GTX 980 Ti – especially when overclocking is considered.

Stay tuned, there is a lot coming from us at BTR. Next week we will look at AMD’s latest Beta drivers and Nvidia’s latest WHQL drivers.

Happy Gaming!

Mark Poppin

BTR Editor-in-Chief