Nvidia’s GTC 2016 Wrap-Up

This is the sixth time that this editor has been privileged to attend Nvidia’s GTC (GPU Technology Conference), which was held last week, April 4-7. It did not disappoint, although GeForce gaming was not the focus of this event. This year saw about 5,500 attendees, more than double the attendance of the 2012 event. Because of the large number of attendees and the jam-packed schedule, the San Jose Convention Center seemed almost too small a venue, even compared with last year.

Some big announcements were made this year. Jensen’s keynote launched the Pascal-based Tesla P100 on 16nm, detailed the progress of self-driving cars, and placed an overall emphasis on Deep Learning as well as on Virtual Reality (VR). The usual progress reports on the rapid acceptance and adoption of CUDA and of Nvidia’s GRID were also featured during this conference.

The very first tech article that this editor wrote covered Nvision08, a combination LAN party and GPU computing technology conference that was open to the public. The following year, Nvidia held the first GTC in 2009, a much smaller industry event held at the Fairmont Hotel across the street from the San Jose Convention Center, where the Fermi architecture was introduced. This editor also attended GTC 2012, which introduced the Kepler architecture.

Two years ago, at GTC 2014, the big breakthrough in Deep Learning image recognition from raw pixels was “a cat”. Last year, at GTC 2015, we saw computers recognize “a bird on a branch” faster than a well-trained human, demonstrating incredible progress in using the GPU for image detection. And this year, we saw computers creating works of art, and we saw a deep learning computer beat the world champion Go player, something that researchers had claimed would take decades more to accomplish.

Bearing in mind past GTCs, we cannot help but compare them with each other. We have had just over a week to think about GTC 2016, and this is our summary of it. This recap will be briefer than usual because, due to health reasons, we were unable to attend as many sessions as we had at previous GTCs, and we left shortly after the Thursday Keynote session. However, we were able to attend all of the keynote sessions and some of the sessions on Tuesday, Wednesday, and Thursday. We visited the exhibit hall on all three days, played the EVE: Valkyrie Oculus Rift VR demo, and caught up with some of our editor friends from years past. Every GTC has been about sharing, networking, and learning everything related to GPU technology. And this year we were able to network with some programmers to possibly bring a new feature to BTR’s community.

Nvidia’s library of over 500 sessions is an invaluable resource; the sessions are being recorded and will be available for viewing in their entirety. We are only going to give our readers a small slice of the GTC and our own short, unique experience as a member of the press.

The GTC 2016 highlights for this editor included ongoing attempts to learn more about Nvidia’s future roadmap, as well as noting Nvidia’s progress in GPU computing over the past 8 or 9 years. The GTC is a combination trade show, networking, and educational event attended by more than five thousand people, each of whom will have their own unique schedule, their own reasons for attending, and their own account of their time there. But all of them share a passion for GPU computing. This editor’s reasons for attending this year were the same as in previous years: an interest in GeForce and Tegra GPU technology, primarily for PC and now for Android gaming, and of course, in deep learning.

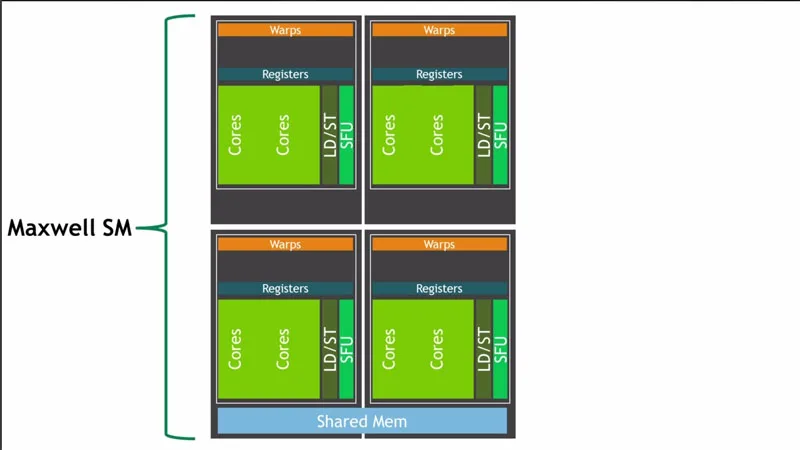

As is customary, Nvidia used the GTC to showcase their new and developing technology even as they transition from the current Maxwell architecture on 28nm to the Pascal architecture on 16nm this year. Two years ago we saw Nvidia transition from the Kepler generation to Maxwell’s energy-saving yet performance-increasing architecture on the same 28nm node. Just as Maxwell is much more powerful and significantly more energy-efficient than Kepler, we expect to see a similar but even larger improvement with the move to Pascal. Nvidia intends to use Pascal to continue to revolutionize GPU and cloud computing, including for gaming and for virtual reality (VR).

Everything has certainly grown since the first GTC in 2009. Nvidia is again using the San Jose Convention Center for the show. Each year the GTC schedule becomes more packed than the year before, and this editor was forced to make several hard choices between sessions held at the same time.

First of all, it is impossible for anyone to attend more than a small portion of the 500-plus talks and sessions devoted to showcasing how GPU technology is being applied to some of today’s most important computing challenges. As reflected in the Keynote by Nvidia CEO and co-founder Jen-Hsun Huang (AKA Jensen), this is the year of deep learning: for cars, for voice and image recognition, and for a myriad of other important applications.

To facilitate ongoing research and practical applications, Nvidia has released a new SDK toolset, a new Pascal-architecture GPU optimized for deep learning, and a turnkey deep learning supercomputer in a single box. VR is also highlighted, although there is less emphasis placed on gaming at this event.

The GTC at a Glance

Here is just part of one day’s GTC schedule “at a glance”. This is a rare moment captured with no one else around.

There has been some real progress with signage at the upgraded San Jose Convention Center compared with years past. Nvidia no longer has to make do with an entire wall covered with schedule posters; instead, electronic signs are updated hourly, and there is far less clutter, making things much easier for attendees. There is also a GTC mobile app, which this editor downloaded to his SHIELD to help keep track of his schedule.

After years of experience with running the GTC, Nvidia has the logistics completely down. The event runs very smoothly, even though Nvidia also provides lunch daily for each full-pass attendee and offers custom dietary choices, including vegan and gluten-free, for which this editor is personally grateful. The Nvidia employees who staff the GTC are extraordinarily friendly and helpful.

Now we will look at each day that we spent at the GTC and will briefly focus on the sessions that we attended.

Monday, April 4

We left the high desert above Palm Springs on Monday morning and arrived in San Jose late Monday afternoon, completing our journey in just over seven hours in generally light traffic. After parking our car in the convention center’s indoor parking lot ($20 per day) and checking in at the Marriott, we picked up our press pass.

The Convention Center’s Wi-Fi was better than tolerable, and the Press Wi-Fi was also OK considering the many hundreds of people using it simultaneously. The wired connection (and the Wi-Fi) inside the Marriott rooms was excellent, and peak downloads of 6 or 7MB/s were not unusual until the hotel and conference got packed. It took a quick call to Marriott tech support to add the SHIELD as an additional device, and we were good to go.

Nvidia treats their attendees and press well, and this year the attendees received a very nice backpack and a commemorative GPU Technology T-shirt included with the $1,500 all-event pass to the GTC. The press gets in free. Small hardware review sites like BTR are rarely invited to attend the GTC, and we again thank Nvidia for the invitation.

Mondays at every GTC are reserved for hardcore programmers and for developer-focused sessions, most of them advanced. A poster reception was held between 4-6 PM, where anyone could talk to or interview the exhibitors, mostly researchers from leading universities and organizations presenting their GPU-enabled research. The press had a 7-9 PM evening reception across the street from the convention center, and although we got the invitation too late, we still got to meet with a few of our friends from past events.

Dinners were scheduled and tables reserved at some of San Jose’s finest restaurants to bring like-minded individuals together. Some dinners hosted scheduled discussions; at others, attendees talked programming or business, or just enjoyed the food. Instead, we got a good night’s sleep, took a shower, and headed to Jensen’s Keynote at 9 AM Tuesday morning.

The BTR Community and its readers are particularly interested in the Pascal architecture as it relates to gaming, and although gaming was not the keynote’s focus, we were not disappointed. Nvidia is definitely oriented toward gaming, graphics, and computing, and we eagerly listened as Nvidia’s CEO Jen-Hsun Huang (Jensen) launched their brand new mega chip, the Pascal P100.

Jensen’s Keynote on Tuesday morning reinforced Nvidia’s commitment to gaming, although the company has branched out in many directions, including automotive. There were no deep dives into the Maxwell architecture as there had been with Fermi and with Kepler at previous GTCs, so we were pleased to see a deep dive into Pascal’s P100 scheduled after the Tuesday Keynote. We did not miss it; it covered some of the important differences between the Pascal, Maxwell, and Kepler architectures.

Tuesday, April 5

S6699 – Opening Keynote

The Keynote hall was packed. Here are some of the important highlights from Jensen’s 2-hour Keynote which set the stage for the rest of the conference:

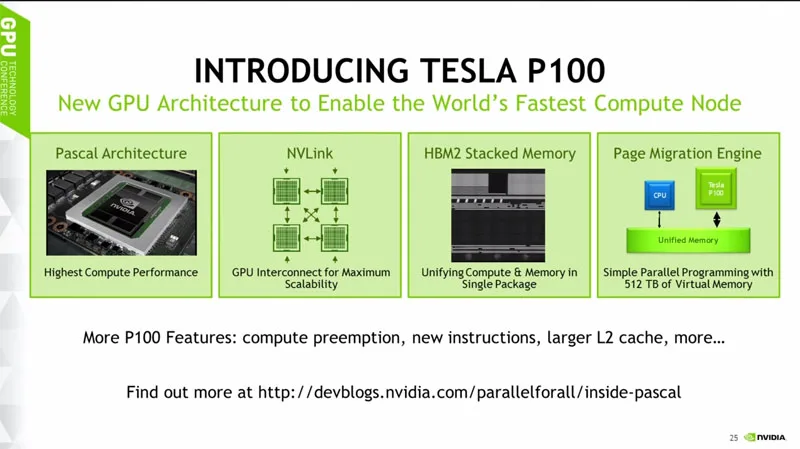

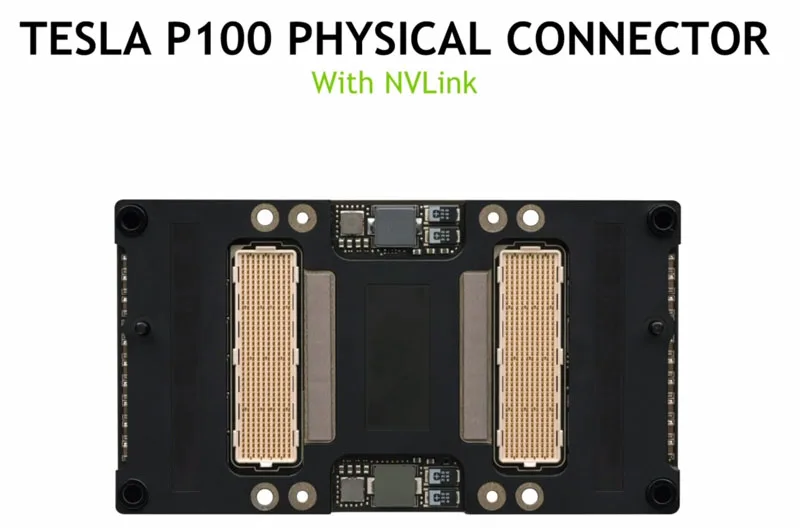

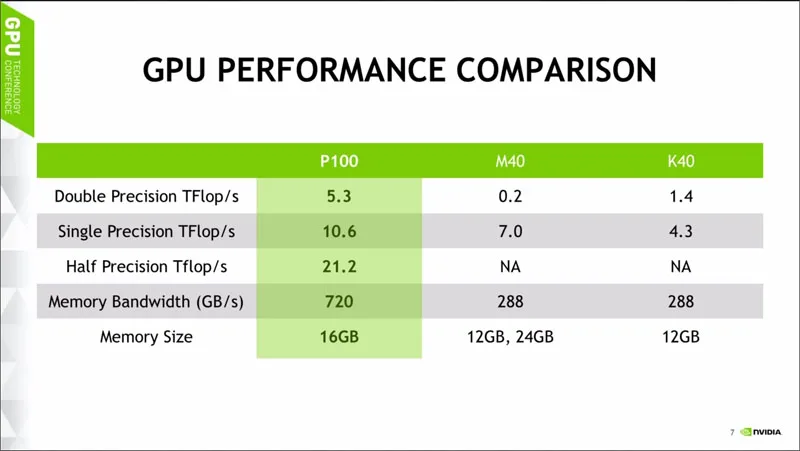

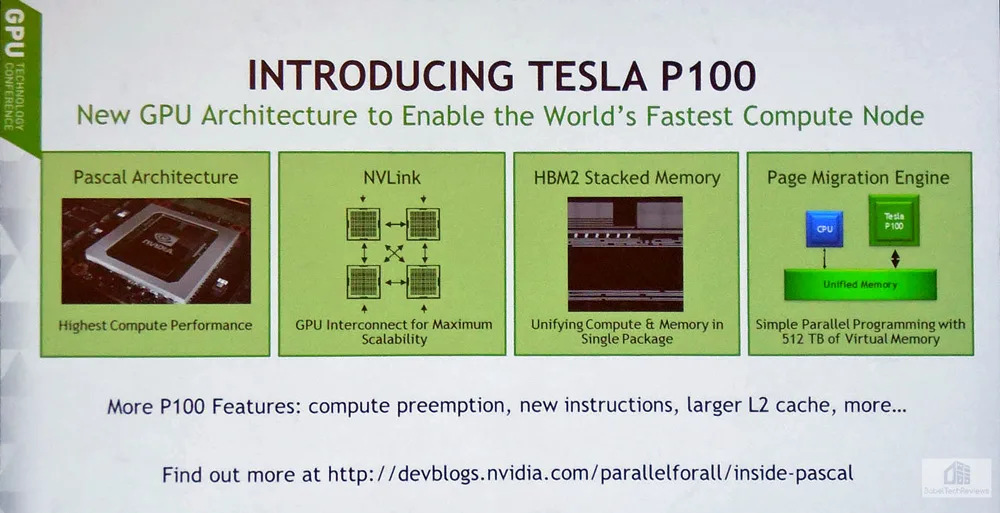

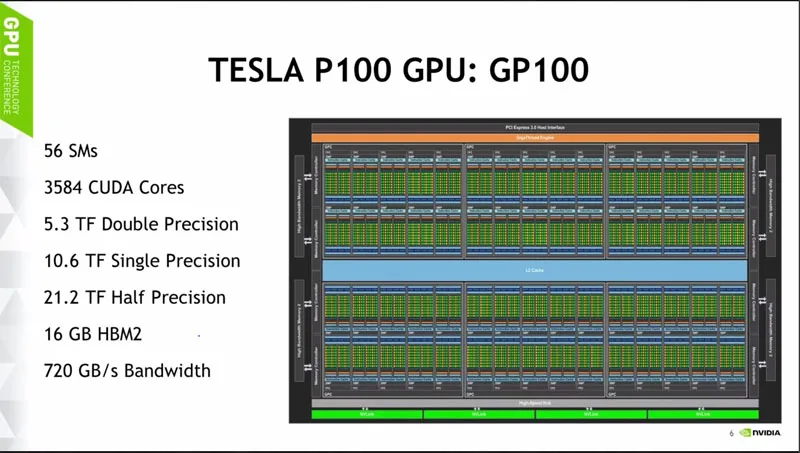

First and foremost of interest to professionals, and eventually to gamers, Pascal was released as the Nvidia Tesla P100 GPU, which Nvidia calls the most advanced hyperscale data center accelerator ever built. P100 is the latest addition to the NVIDIA Tesla Accelerated Computing Platform.

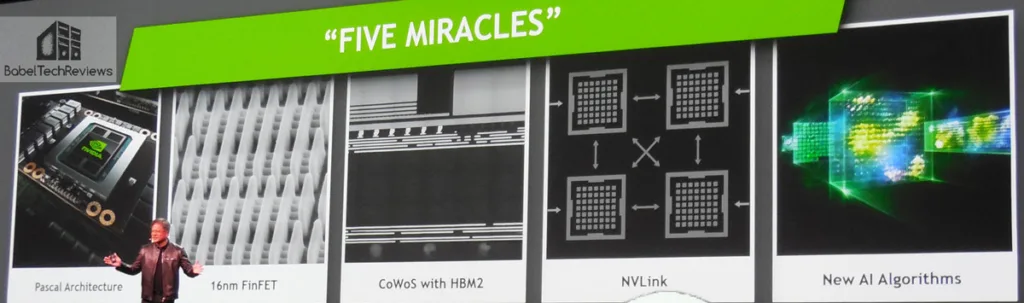

The Tesla P100 enables a new class of servers that, according to Nvidia, can deliver the performance of hundreds of CPU server nodes. It is a massive 610 mm² chip that uses 3D stacking and HBM2 memory, made possible by what Jensen called “5 miracles”. We also attended the Pascal deep dive session, which we cover in this wrap-up. Unfortunately, no Pascal white paper is available yet.

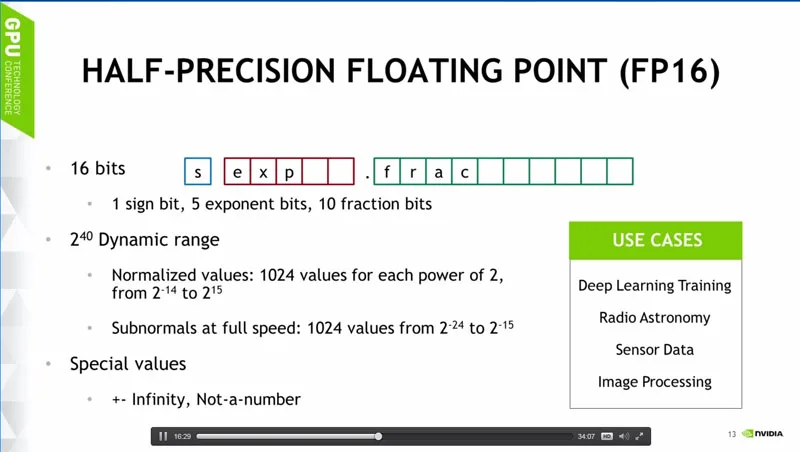

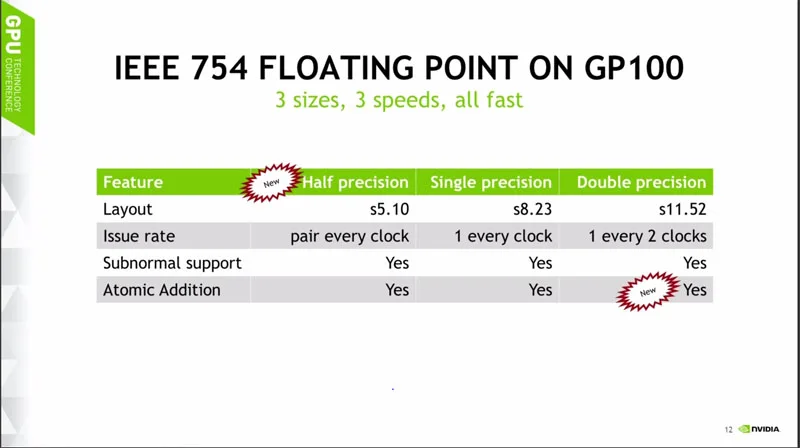

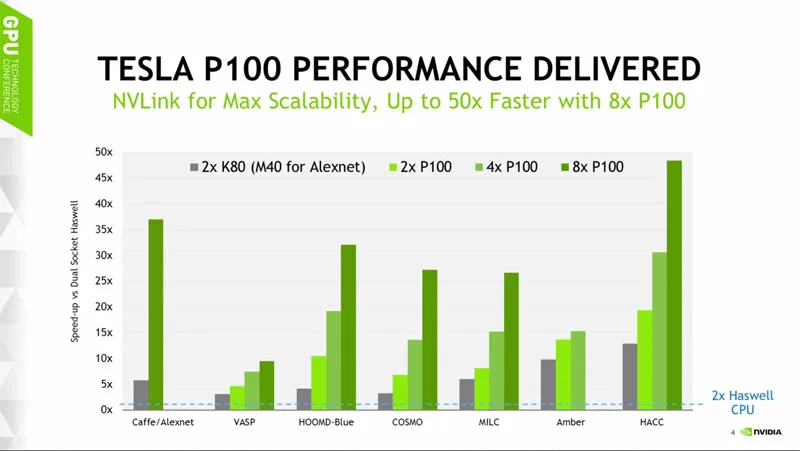

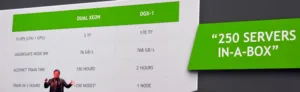

Jensen also announced Nvidia’s DGX-1, which Nvidia calls the world’s first deep learning supercomputer, developed to meet the intense computing demands of artificial intelligence. It features eight Tesla P100 GPU accelerators that deliver up to 170 teraflops of half-precision (FP16) peak performance.

The DGX-1 is not inexpensive at $129,000, but Nvidia says it will save a lot of money compared with the millions of dollars it would cost to buy and set up comparable CPU servers. Energy costs should also be far lower, since the DGX-1 system draws just over 3,000W compared with the much higher electricity usage of comparably performing CPU servers. The first systems will go out to researchers next month, and by Q1 of next year they will be available to OEMs for general purchase.
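
To put the DGX-1 figures above in perspective, here is a quick back-of-the-envelope calculation. It is only a sketch under stated assumptions: it splits the 170 TFLOPS FP16 peak evenly across the eight GPUs, treats “just over 3,000W” as exactly 3,000 W, and uses the $129,000 list price. Peak figures are theoretical maximums, not sustained throughput in real workloads.

```python
# Back-of-the-envelope DGX-1 arithmetic using the keynote figures quoted above.
# Assumptions: peak FP16 is split evenly across the eight GPUs, and "just over
# 3,000W" is rounded down to 3,000 W, so the efficiency figure is an upper bound.

NUM_GPUS = 8
SYSTEM_FP16_TFLOPS = 170.0   # peak half-precision throughput, whole system
SYSTEM_POWER_W = 3000.0      # approximate system power
PRICE_USD = 129_000.0        # announced list price


def per_gpu_tflops(system_tflops: float, num_gpus: int) -> float:
    """Peak FP16 TFLOPS per GPU, assuming an even split across GPUs."""
    return system_tflops / num_gpus


def gflops_per_watt(system_tflops: float, watts: float) -> float:
    """Peak GFLOPS per watt (1 TFLOPS = 1,000 GFLOPS)."""
    return system_tflops * 1000.0 / watts


def usd_per_tflops(price_usd: float, system_tflops: float) -> float:
    """List price divided by peak FP16 TFLOPS."""
    return price_usd / system_tflops


if __name__ == "__main__":
    print(f"Per GPU:    {per_gpu_tflops(SYSTEM_FP16_TFLOPS, NUM_GPUS):.2f} TFLOPS FP16")
    print(f"Efficiency: {gflops_per_watt(SYSTEM_FP16_TFLOPS, SYSTEM_POWER_W):.1f} GFLOPS/W")
    print(f"Cost:       ${usd_per_tflops(PRICE_USD, SYSTEM_FP16_TFLOPS):,.2f} per TFLOPS")
```

Running it prints 21.25 TFLOPS of FP16 per GPU, about 56.7 GFLOPS per watt, and about $758.82 per peak FP16 TFLOPS. Because the real power draw is slightly above 3,000 W, the per-watt figure is best read as an upper bound.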

Much emphasis was placed on future cars that will also use Deep Learning, and the world’s first autonomous race car was announced. Not just one, but all 20 cars in the ROBORACE series, planned to run alongside the 2016/2017 FIA Formula E championship, will use Nvidia’s DRIVE PX 2 as the “brains” of their autonomous platform.

Nvidia believes that AI for medicine is critically important to all of us. This year Nvidia partnered with Massachusetts General Hospital to advance healthcare by applying AI to improve the quality of treatment and the management of diseases.

Jensen’s keynote also featured the first public demo of Mars 2030, an outer space VR simulation created by FUSION using satellite imagery and data provided by NASA. On stage, Jensen introduced the VR experience to PC pioneer Steve “Woz” Wozniak over a video link powered by Cisco TelePresence. When Woz joked that he “felt a little dizzy” from the VR experience, Jensen replied, “that was not a helpful comment”, much to the delight of the audience.

The full Mars 2030 experience will transport its viewer to Mars in VR. The terrain, lighting and gravity models, and even the habitats the viewer encounters in this VR simulation are grounded in scientific data. Nvidia’s VRWorks technologies, including Multi-Resolution Shading and VR SLI support, will make the experience richer.

Some GTC attendees were able to experience the first part of Mars 2030, a VR exploration of an enormous Martian lava tube where the first explorers are expected to live. The experience is expected to be commercially available in the autumn. Unfortunately, the lines were long and we missed it, but we did get to check out the Oculus Rift VR demo of EVE: Valkyrie in Nvidia’s “VR Village”.

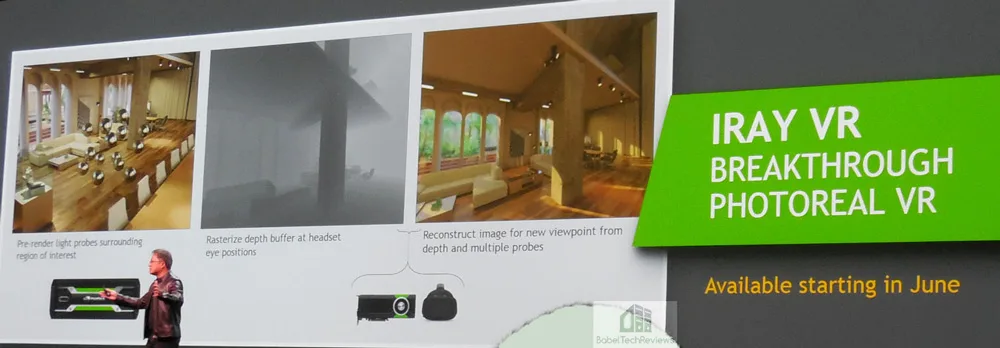

Jensen also announced Iray VR, photorealistic VR rendering that lets architects and design professionals simulate their creations accurately. Photorealistic VR is computationally expensive, so there is also a scaled-down “lite” version for smartphones that, according to Nvidia, sacrifices little.

Jensen also announced Nvidia’s Unified SDK which helps developers create solutions for deep learning, accelerated computing, self-driving cars, design visualization, autonomous machines, robots, gaming, VR, and more.

After Jensen’s two-hour keynote ended at 11 AM, we ran to the press conference. Here is Nvidia’s panel, moderated by their VP of Corporate Communications, Bob Sherbin:

Unfortunately, we learned very little new from this half-hour session as so many of the questions were directed at unreleased products that Nvidia’s representatives could not comment on. We did note that Nvidia is expecting rapid growth in automotive and in deep learning applications and that their solutions are scalable.

After a quick lunch and a brief look at the exhibits (remembering to schedule our VR experience for the next morning), we headed to the Deep Dive into Pascal architecture in the main ballroom, which was packed.

S6176 – Inside Pascal

Level: All

Type: Featured Presentation

Tags: Supercomputing & HPC

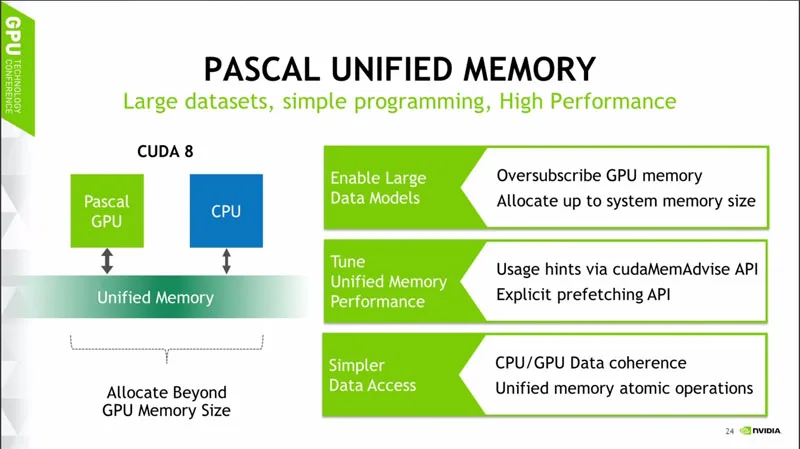

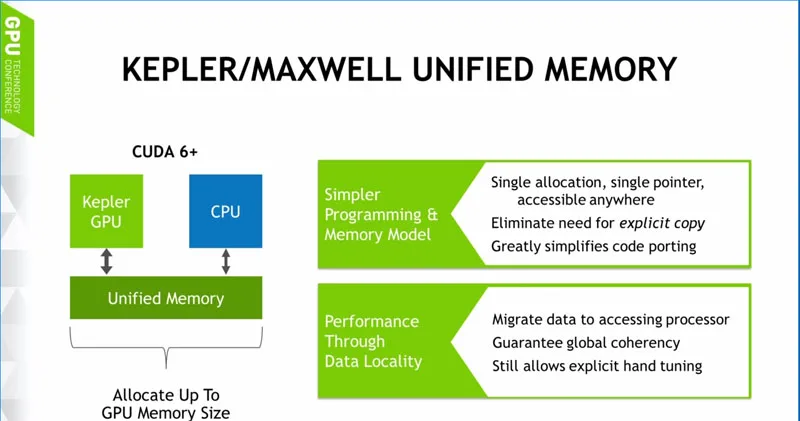

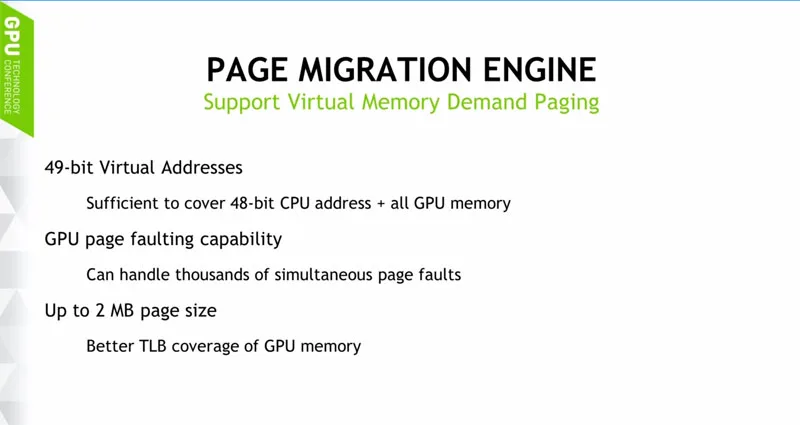

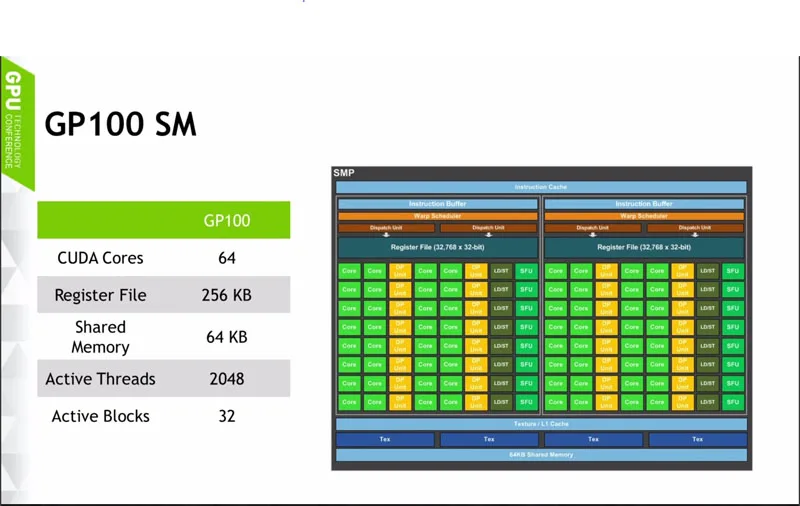

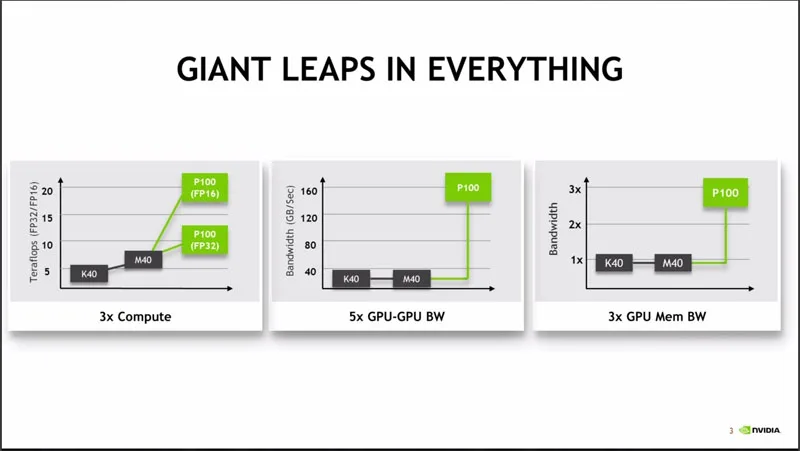

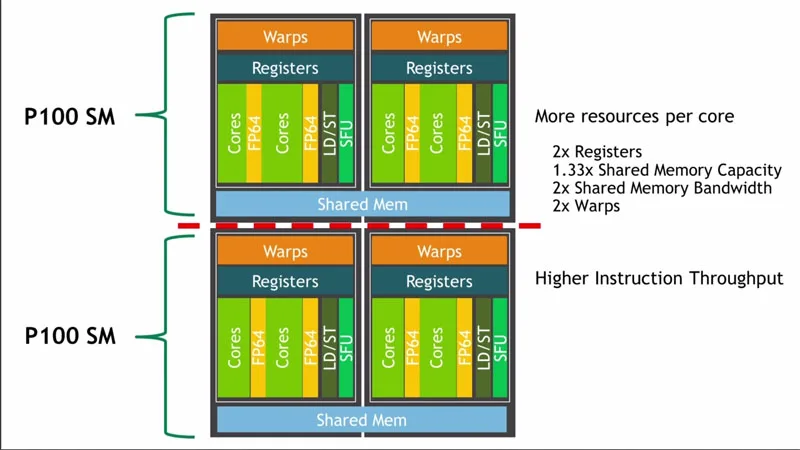

This was a fascinating look at Pascal architecture compared with Maxwell and Kepler as it applies to the new mega-chip, the P100. Here are the slides from the presentation:

Next, we look at the differences between the Kepler and Pascal cores. Pascal cores are half the size of Kepler’s, but they do more work.

Now here’s a look at the way the P100 is laid out:

Interestingly, the P100 does not use the full chip: 60 SMs are possible, but only 56 are enabled on the P100.
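
The SM arithmetic is easy to work through. The sketch below uses the 56-of-60 SM figure from the deep dive; the value of 64 FP32 CUDA cores per SM is an assumption taken from Nvidia’s published GP100 specifications rather than from the session itself.

```python
# SM and core counts for the Tesla P100 as described in the deep dive:
# 56 of a possible 60 SMs are enabled. CORES_PER_SM = 64 comes from Nvidia's
# published GP100 specifications, not from the session itself.

FULL_SMS = 60
ENABLED_SMS = 56
CORES_PER_SM = 64  # FP32 CUDA cores per Pascal GP100 SM (assumption noted above)


def enabled_fraction(enabled: int, full: int) -> float:
    """Share of the die's SMs that are active on the shipping part."""
    return enabled / full


def total_cores(sms: int, cores_per_sm: int) -> int:
    """FP32 CUDA cores for a given number of SMs."""
    return sms * cores_per_sm


if __name__ == "__main__":
    share = enabled_fraction(ENABLED_SMS, FULL_SMS)
    print(f"SMs enabled: {ENABLED_SMS}/{FULL_SMS} ({share:.1%})")
    print(f"FP32 cores:  {total_cores(ENABLED_SMS, CORES_PER_SM)} of "
          f"{total_cores(FULL_SMS, CORES_PER_SM)} on the full die")
```

With those inputs it reports 93.3% of the SMs enabled, or 3,584 FP32 cores out of 3,840 on the full die.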

The charts above are from the deep dive, and here is the link to the entire presentation.

We returned to the exhibit room, grabbed a bite to eat and then headed back to our room to prepare for another full day on Wednesday.

Wednesday, April 6

After a quick breakfast from the Press Lounge we headed to Pixar’s presentation.

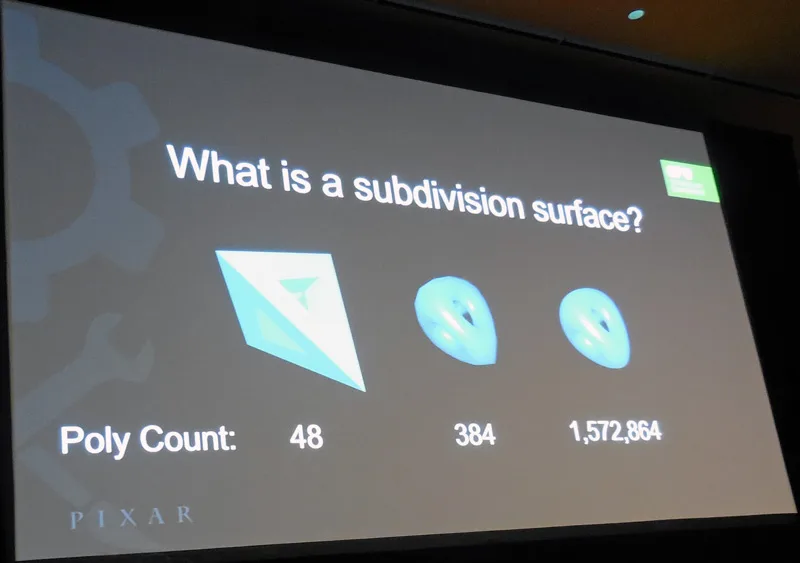

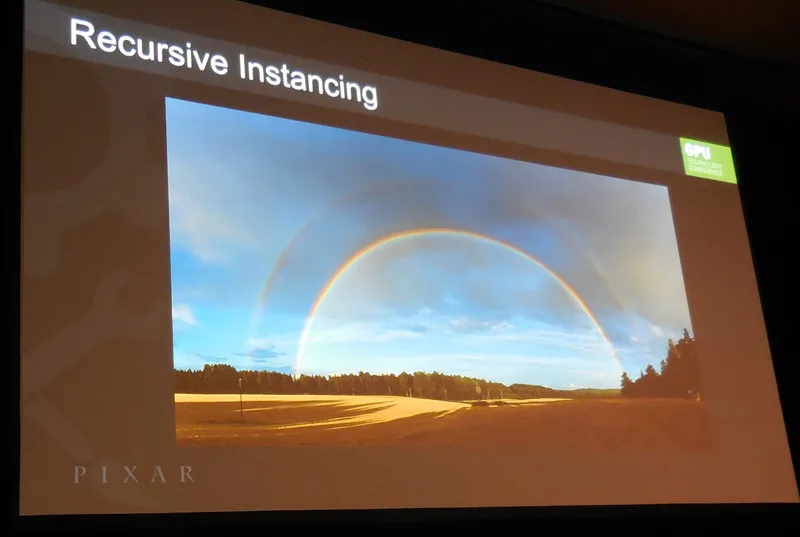

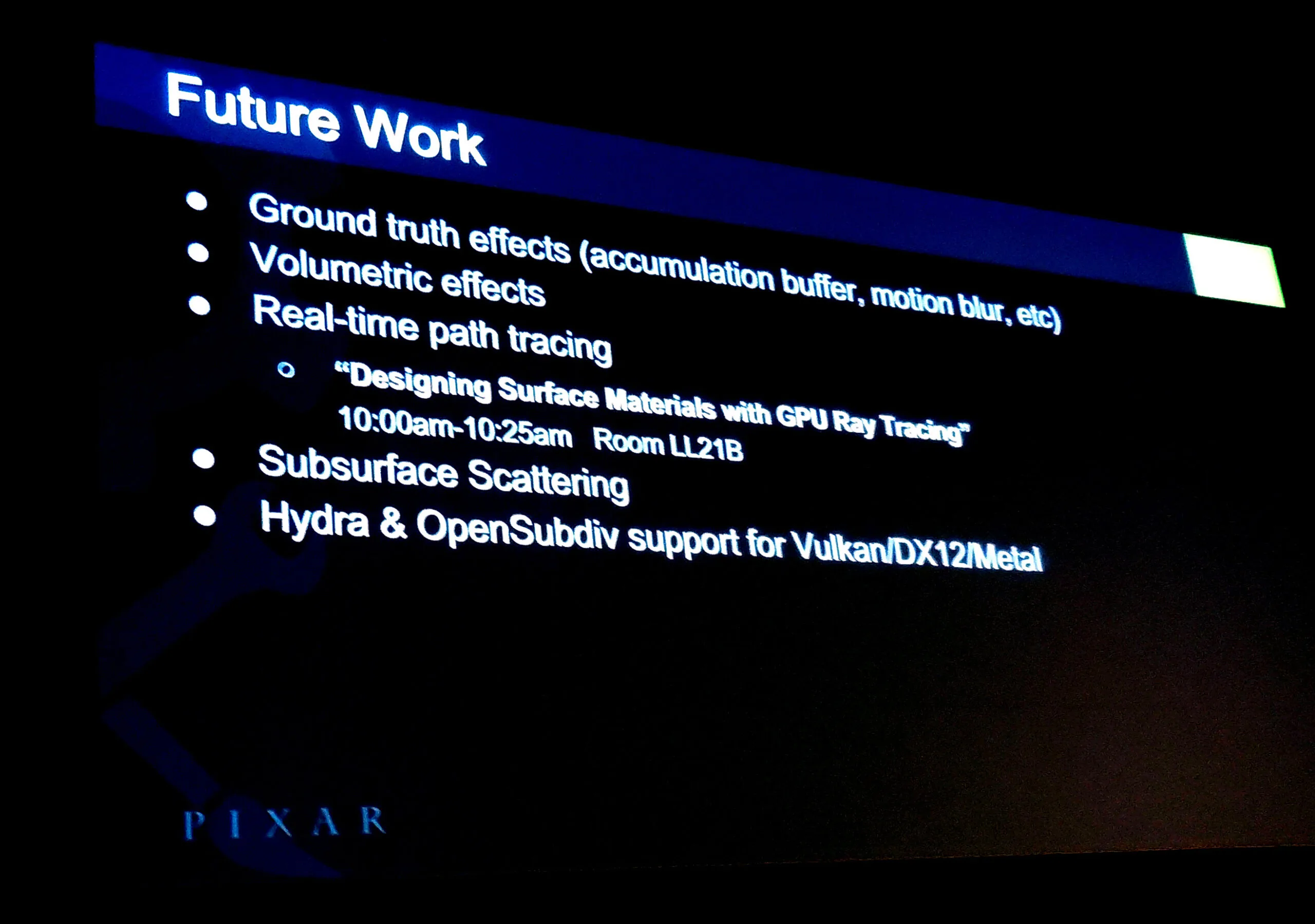

S6454 – Real-Time Graphics for Film Production at Pixar

This editor has been interested in following Pixar’s progress since Nvision08, and this year did not disappoint.

As usual, Pixar begins simply and then becomes more complex as the session continues.

They have made many of their projects open source, and their last slide shows their future work, which will probably be discussed at future GTCs.

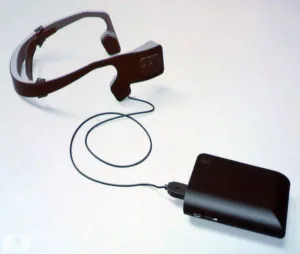

S6793 – Designing a Wearable Personal Assistant for the Blind: The Power of Embedded GPUs

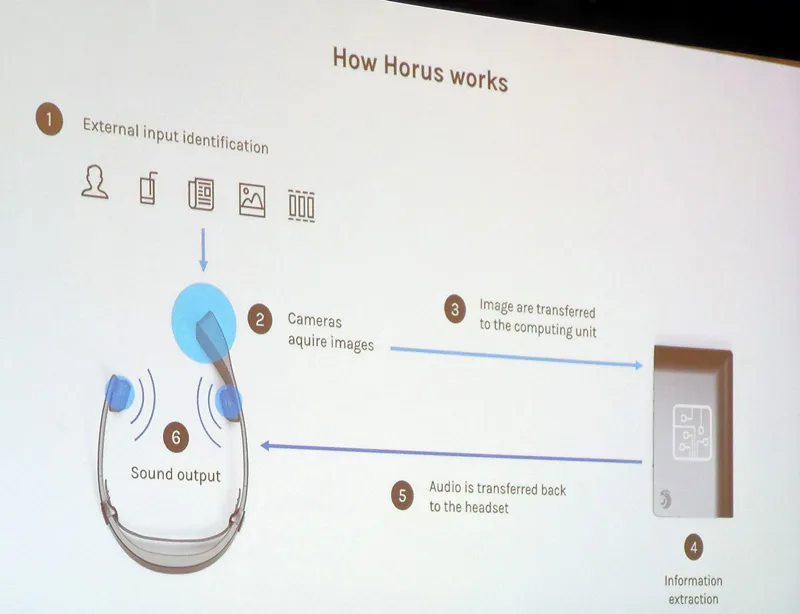

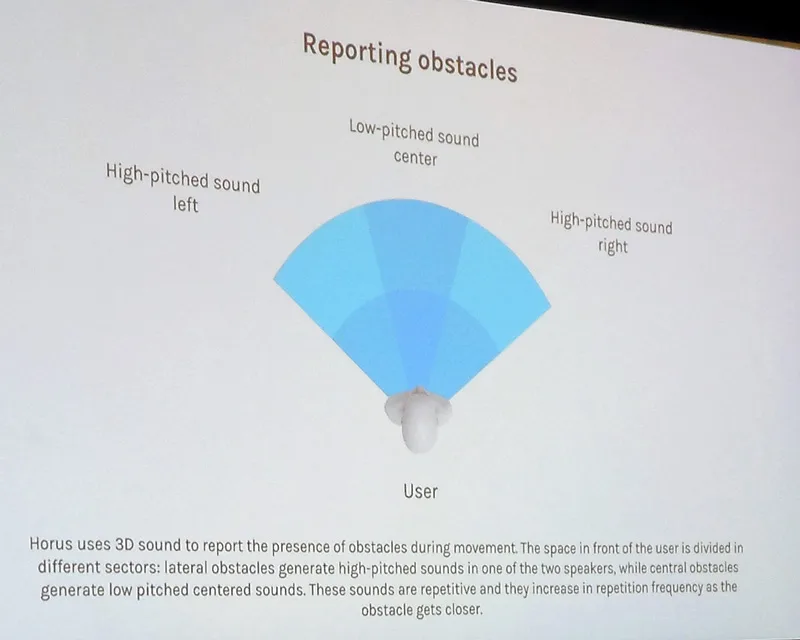

We also attended a very interesting session featuring a customized Jetson TK1 coupled with a smartphone that helps people who are blind or visually impaired navigate by using sound cues. Horus, the company creating it, won a major cash prize at the GTC for their work. Five other startups joined Horus as winners, splitting more than $550,000 in prizes.

Truly Horus is helping the blind to “see”. It should be available later this year and will cost about $1,500.

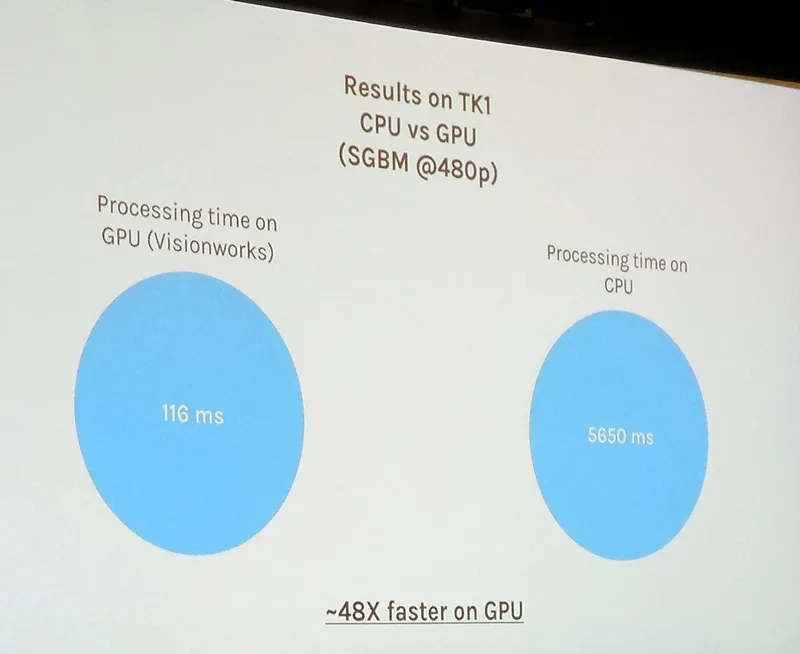

As we can see, using a CPU is not possible, but the extremely small size and efficiency of Nvidia’s Jetson TK1 make it all possible. Horus is planning to make their device available in several languages, and they are working on improved face recognition as well as object recognition. The entire presentation is available on Nvidia’s GTC site here, and it is fascinating.

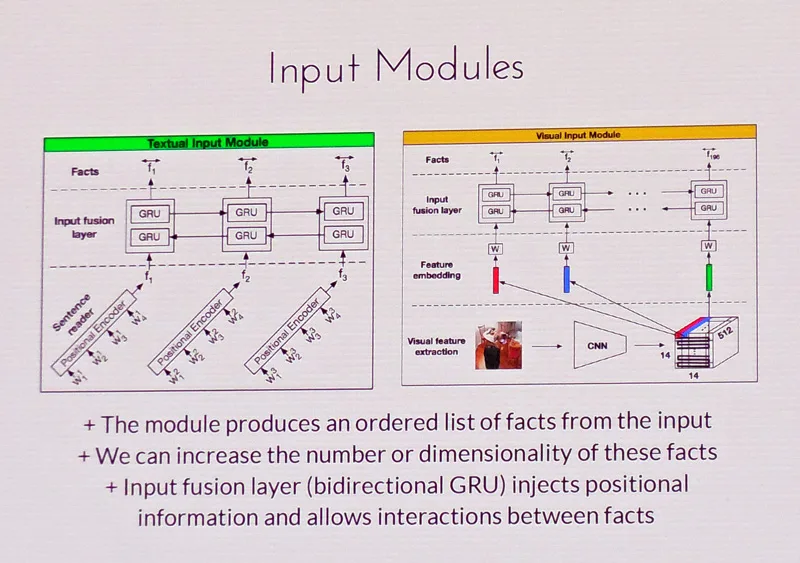

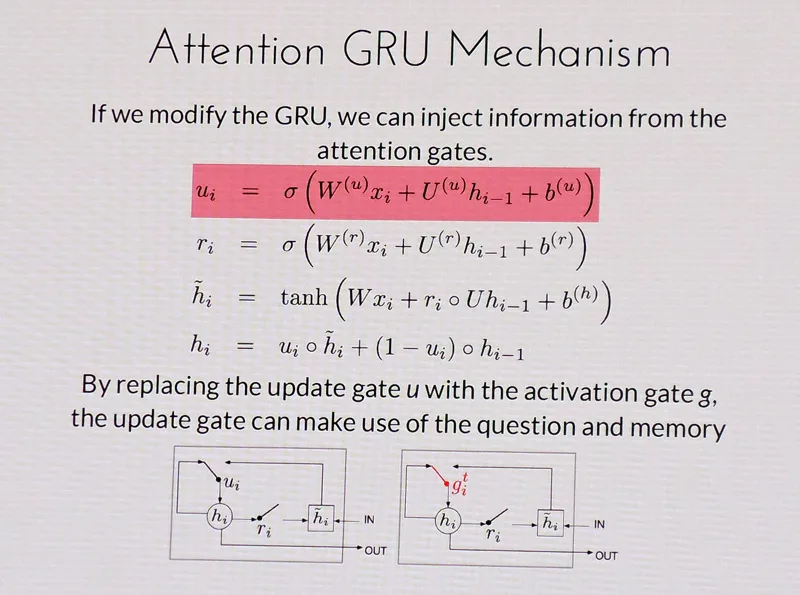

We also attended a Deep Learning session regarding voice and text recognition, and managed to find some important deep learning resources that may be useful to BabelTechReviews’ Community.

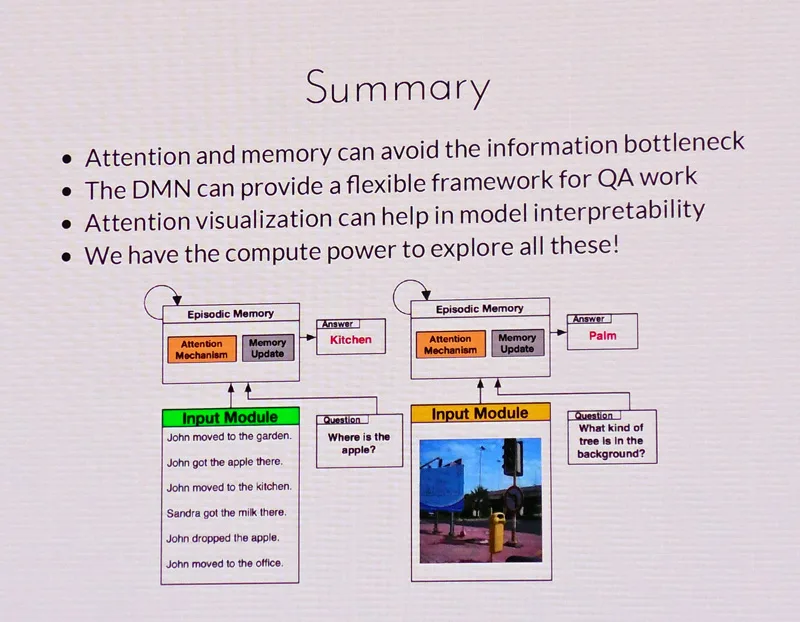

S6861 – Dynamic Memory Networks for Visual and Textual Question Answering

S6901 – Keynote Presentation – IBM Watson

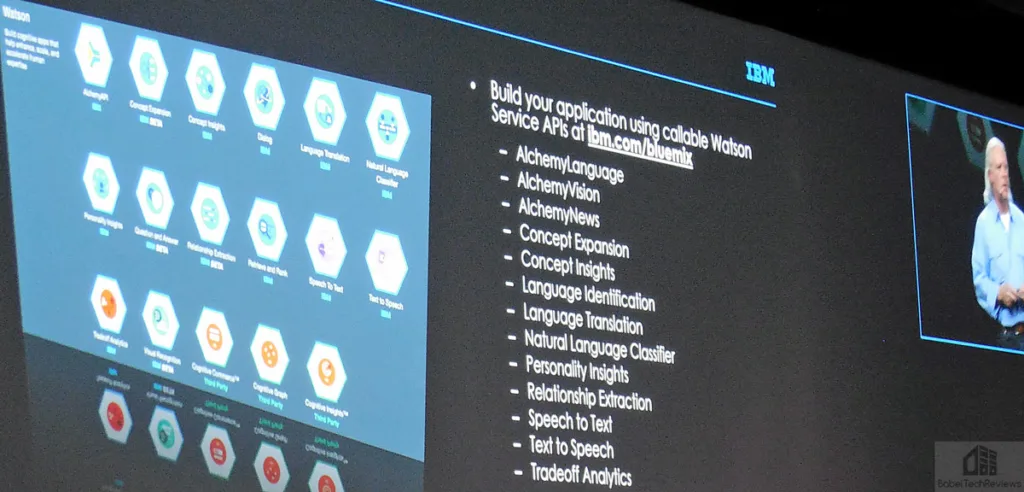

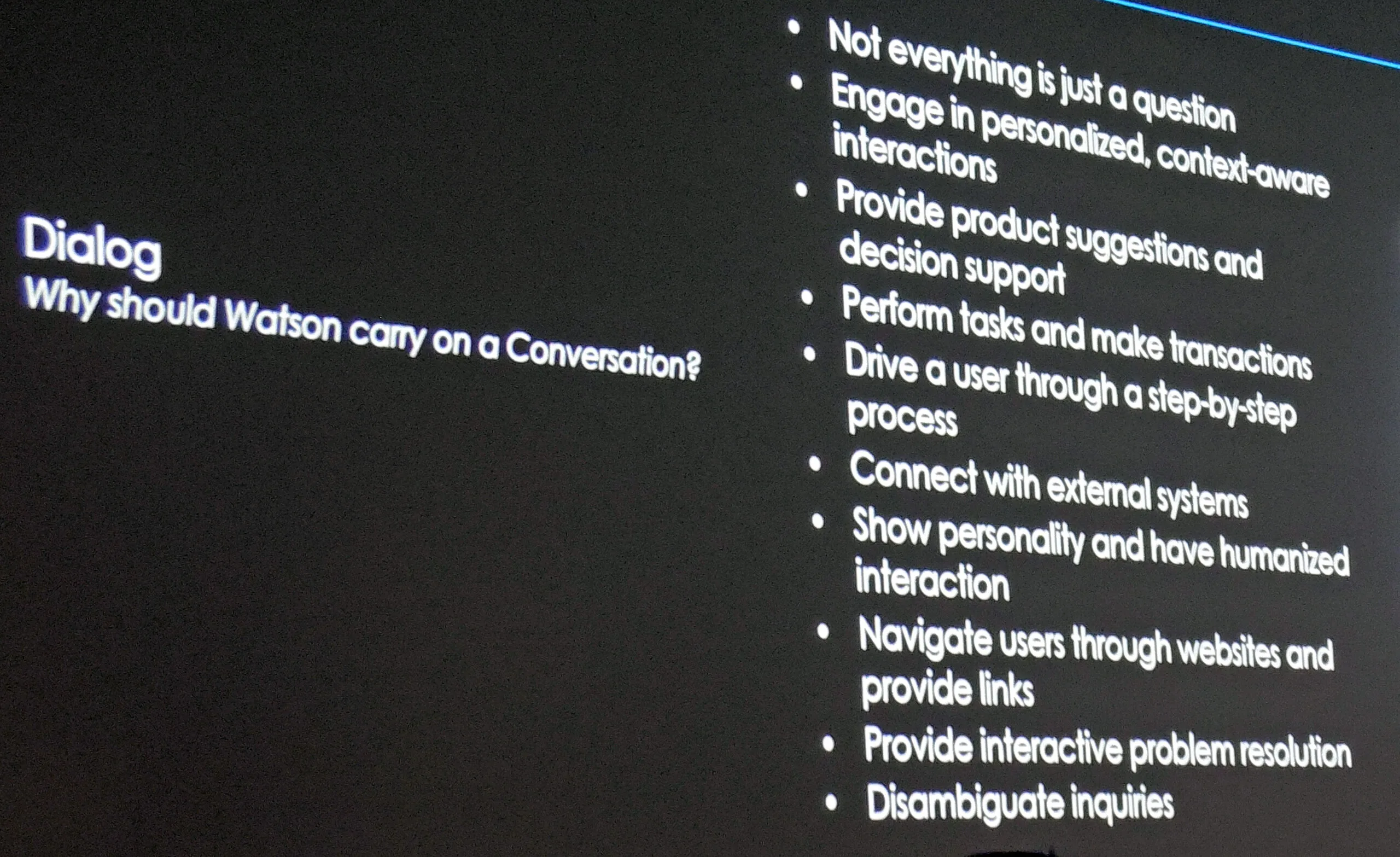

The highlight of Day 2 for many was the second Keynote delivered by a very gifted lecturer, Rob High, IBM Fellow and CTO of IBM Watson. Five years after winning big on TV’s Jeopardy!, IBM Watson has moved on to far more complicated AI applications, and the platform has evolved to help doctors, lawyers, marketers and others understand data in human-like ways.

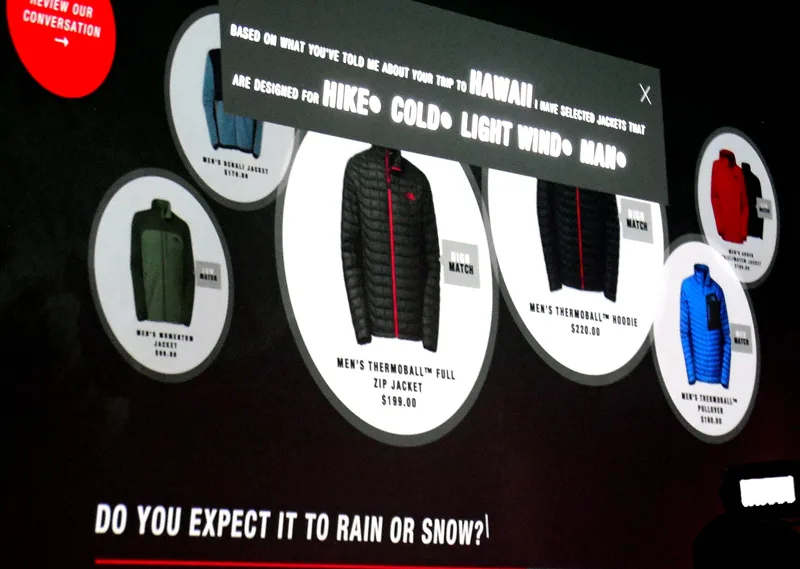

Here is a partial list of what Watson is capable of:

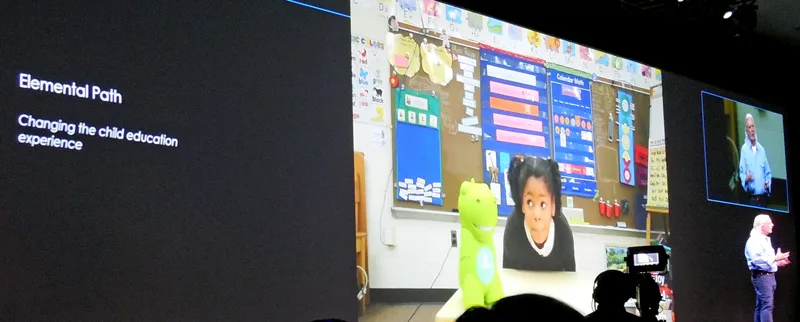

Watson is being used commercially by a major sporting equipment/clothing company to interact with its customers, asking intelligent questions and giving advice based on the customers’ responses. Even Kellogg’s website uses Watson to suggest custom breakfasts. And Watson is also used in education.

The question comes up, why have Watson engage in conversation beyond answering questions?

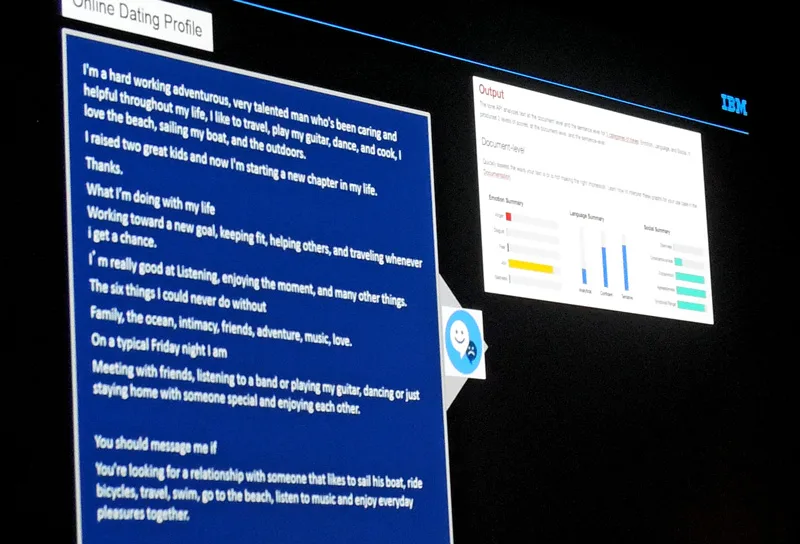

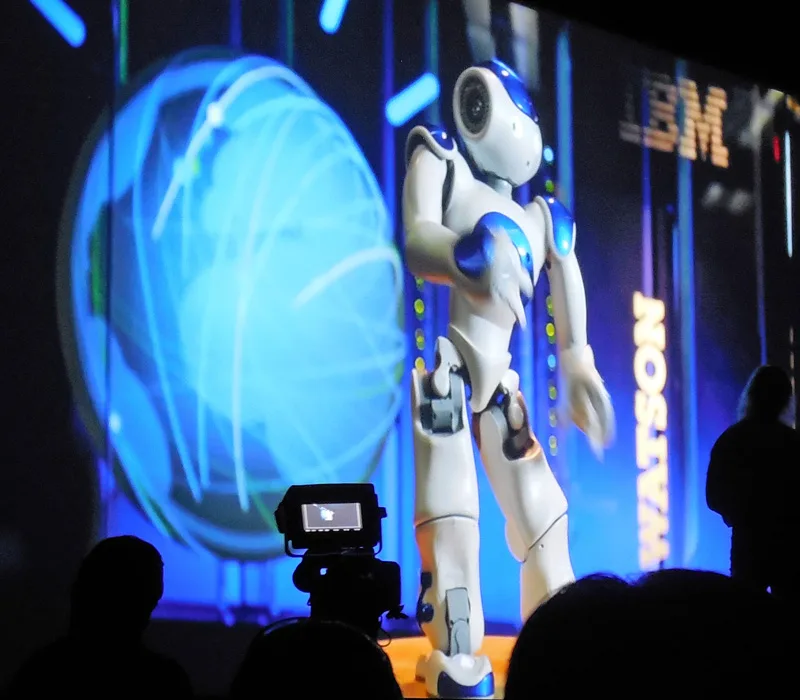

Watson is even being used to analyze personality for online dating. Interactive Watson robotics are also being explored.

IBM needs much more computing power for truly cognitive computing and the GPU is an important part of it. IBM reports an 8.5X speedup in training times by using GPUs.

After Wednesday’s sessions, we again headed to the exhibit hall for a quick bite to eat. The GTC party, which never disappoints, was scheduled for 7-11 PM, but we were unable to attend; instead, we wrote and published our first Live at Nvidia’s GTC 2016 article. Thursday would be our last day at the GTC.

Thursday Keynote, Networking Sessions, the Exhibit Hall, and VR Village

S6831 – Keynote Presentation – Toyota Research Institute

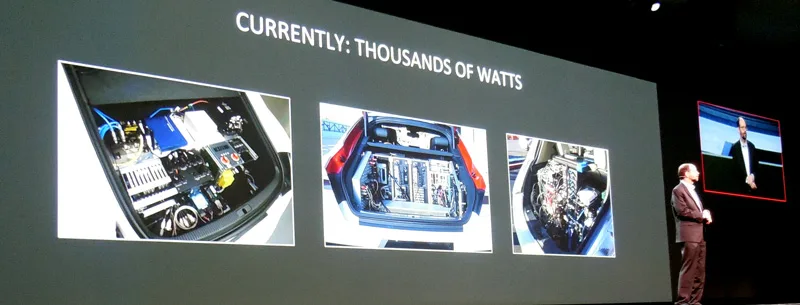

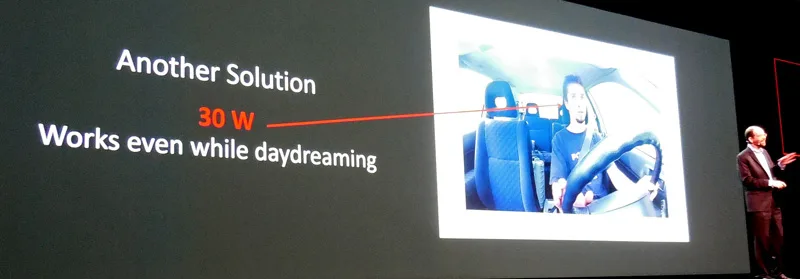

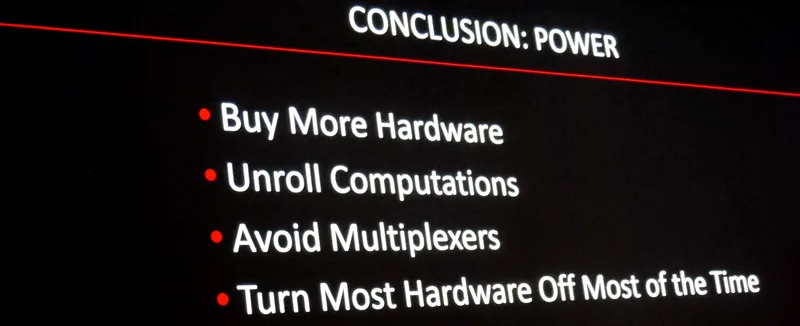

The speaker began his presentation by pointing out that 1.2 million people worldwide die in car crashes every year. Self-driving cars are a possible solution, but until now the power envelope they required has been excessive.

Contrast this with the human brain.

Of course, part of the problem is daydreaming and inattention by human drivers, so Toyota is looking for a solution. Some of the computer-based solutions are illustrated below:

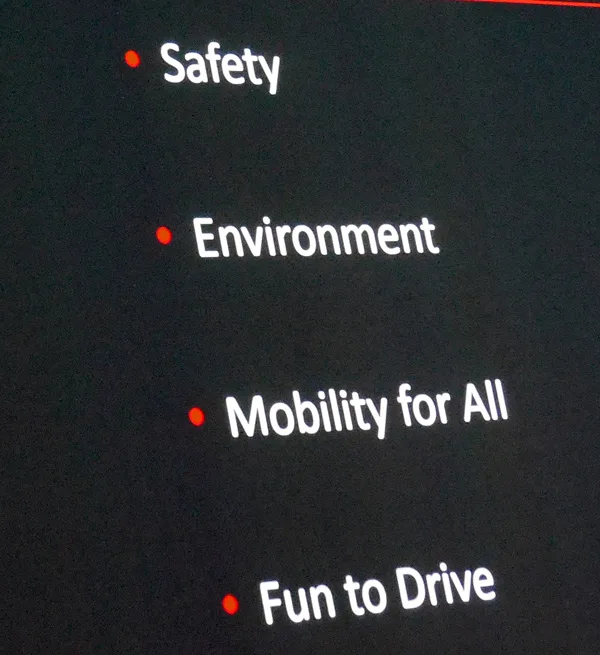

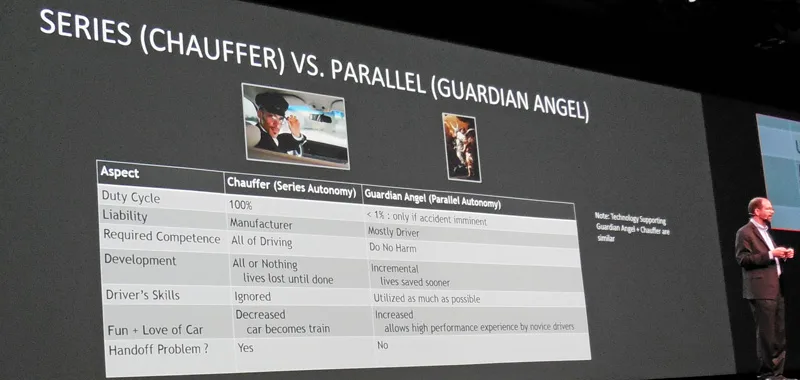

Our own Andy Marken wrote a very timely piece, The More Connected the Car, the More Disconnected You’ll Be. He pointed out that as cars become autonomous, they will no longer be an expression of their owners, and “driving” will be about as much fun as taking a train. Almost in answer, Toyota’s priorities for autonomous cars are shown below:

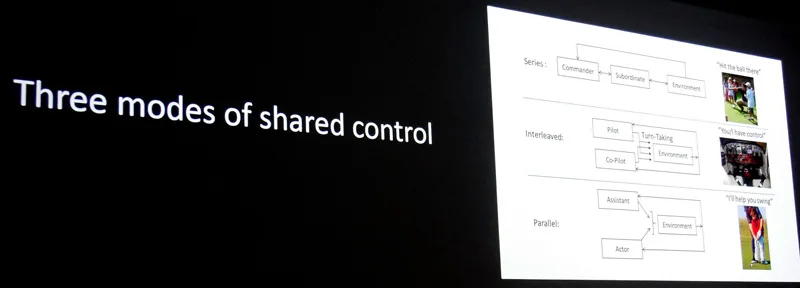

Fun to drive? How so? Well, evidently Toyota believes in “shared control”.

The Keynote speaker went on to discuss the advantages and disadvantages of each pathway to autonomous driving and how Toyota was planning to achieve it. Simulation is a big part of Toyota’s plan, and Toyota is using Ann Arbor, Michigan, as their primary test site for connected cars to gather data for research into autonomous driving and safety applications.

In partnership with the University of Michigan’s Transportation Research Institute, Toyota’s goal is to equip 5,000 cars with information-gathering boxes that communicate wirelessly with similar vehicles and infrastructure such as traffic signals. Just three days ago, Toyota made this announcement:

Toyota, in partnership with the University of Michigan Transportation Research Institute (UMTRI), is transforming the streets of Ann Arbor, Mich. into the world’s largest operational, real-world deployment of connected vehicles and infrastructure.

Connected vehicle safety technology allows vehicles to communicate wirelessly with other similarly equipped vehicles, and to communicate wirelessly with portions of the infrastructure – such as traffic signals. The Ann Arbor Connected Vehicle Test Environment (AACVTE) is a real-world implementation of connected vehicle safety technologies being used by everyday drivers in Ann Arbor and around Southeast Michigan. AACVTE will build on existing model deployment in Ann Arbor, including an upgraded and expanded test environment, making it the standard for a nation-wide implementation.

This research will elevate UMTRI and U.S. Department of Transportation (US DOT) real-world exploration of connected vehicle technology. The current limitation of connected vehicle testing outside of closed-circuit test tracks is the lack of connected vehicles. In order to move autonomous driving toward reality, testing requires more cars, more drivers and more day-to-day miles travelled than any combination of research facilities could support. The AACVTE begins to solve this problem.

Ann Arbor is already the largest Dedicated Short Range Communication test bed in the world, and the Michigan State government is very active in expanding deployment throughout the State of Michigan.

As part of its partnership agreement with UMTRI, Toyota will invite team members and their families to participate in the AACVTE initiative. The Toyota participants will allow their vehicles to be equipped with devices to support accelerated research and deployment of advanced Vehicle-to-Vehicle (V2V)/Vehicle-to-Infrastructure (V2I) systems in the region. The goal is to deploy 5,000 vehicles with vehicle awareness devices throughout the Ann Arbor area. The Ann Arbor deployment is one stepping stone toward achieving the U.S. Department of Transportation’s vision for national deployment of V2V/V2I vehicles.

We headed back once more to the Exhibit hall for lunch and to look at the exhibits as they were winding down. We met with some of our friends and did not attend any more sessions but prepared to head for home about 2:30 PM so as to miss any San Jose rush hour traffic.

-

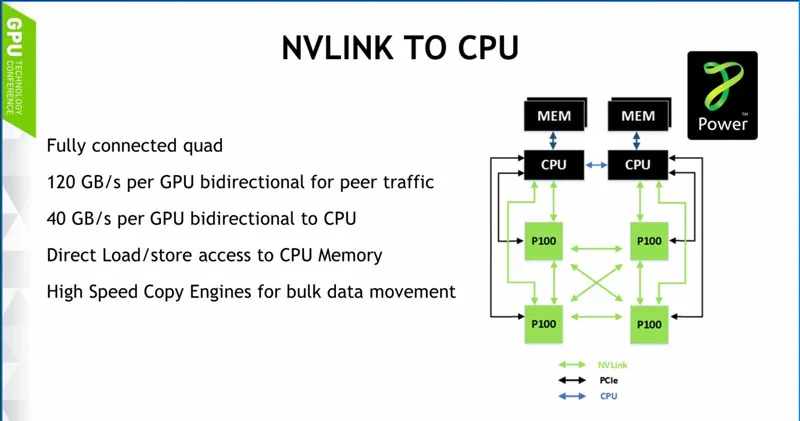

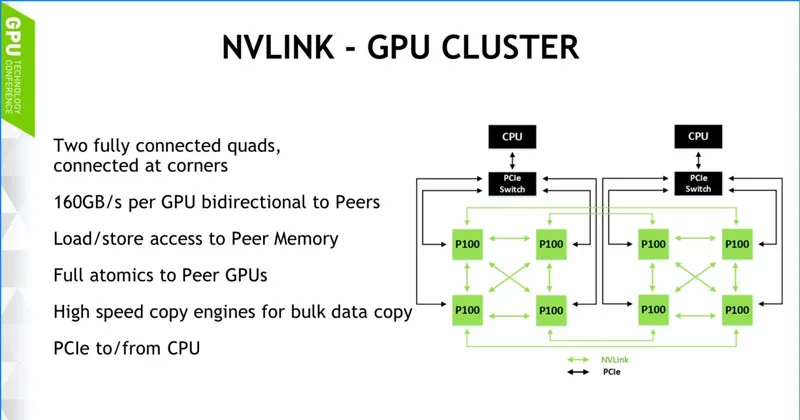

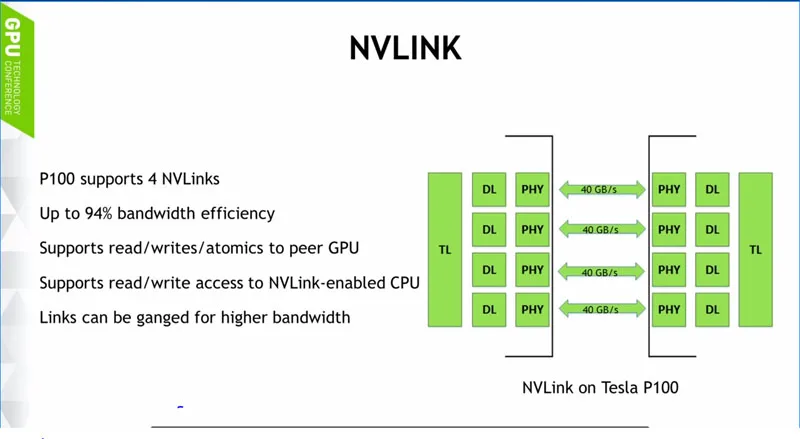

NVLink, a high-speed interconnect between the CPU and the GPU, enabling these two key processors to share data five to 12 times faster than possible today;

-

Pascal, the next milestone on Nvidia’s GPU architecture roadmap, will leverage NVLink and a new 3D chip design process to improve memory density and energy efficiency;

-

GeForce GTX Titan Z, a dual-GPU graphics card that will become the company flagship when it ships later this year for a suggested $2,999;

-

Jetson TK1, an embedded development board computer that puts supercomputing power in a mobile form factor for $192;

-

The Iray VCA, a graphics processing appliance for real-time photorealistic rendering and real-world simulation.

– See more at: http://gfxspeak.com/2014/03/25/keynote-announcements-wallop/#sthash.nt7y5Lbz.dpuf

Networking/exhibits/VR

Nvidia had several large sections in the main exhibit hall.

Although an autonomous watercraft was featured (above), cars are always popular, and Nvidia now provides much more for them than infotainment and navigation. It seemed that every corner of the GTC featured an autonomous car or a supercar.

Many of Nvidia’s major partners in professional GPU computing were represented with their servers, including Dell, HP, and Super Micro, major sponsors of the GTC.

This is the first time that we have seen EVGA at the GTC and we were able to reconnect with their representatives. No, we didn’t use flash.

There was a lot to take in. And this year, there was a jam-packed VR Village where you had to make an appointment or miss out!

VR (Virtual Reality)

We had an early 8:30 AM appointment, before the exhibits were open, and already there was a line. We chose to demo EVE: Valkyrie using the Oculus Rift as we wanted to see how we would fare with a fast-paced shooter in VR.

Without great content, VR will probably be as short-lived as S3D was. Developers need to design VR content intelligently, so that VR aids the progression of a story or game rather than being the reason for it. At the recent GDC (Game Developers Conference), Oculus announced 30 titles, priced between $19 and $59, that will be available for the Rift at launch. The selection includes traditional games as well as more experimental ones.

Ideally, there will be no gut-wrenching motions that can make the user sick. Even so, we still felt queasy after playing EVE: Valkyrie for just a few minutes, as we deliberately picked the game most likely to affect us. We were not terribly impressed with the grainy demo, as there had been no improvements in resolution since we last demoed VR in 2014. Perhaps it was because it was demoed on a GTX 980 notebook instead of a more powerful SLI desktop solution like the one below.

Computer Deep Learning Art

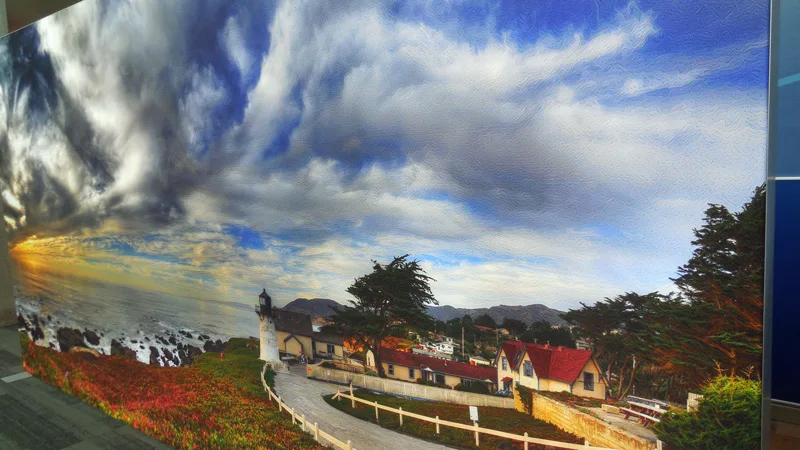

Something new appeared at this GTC.

A deep learning computer is shown thousands of images of art and then asked to create a scene, a landscape for example, with this as one of the results:

In fact, this was so successful that these images were raffled off for charity, with each winner getting the image they voted for with a $5 minimum contribution. We picked the GTX Titan Z picture but did not win it.

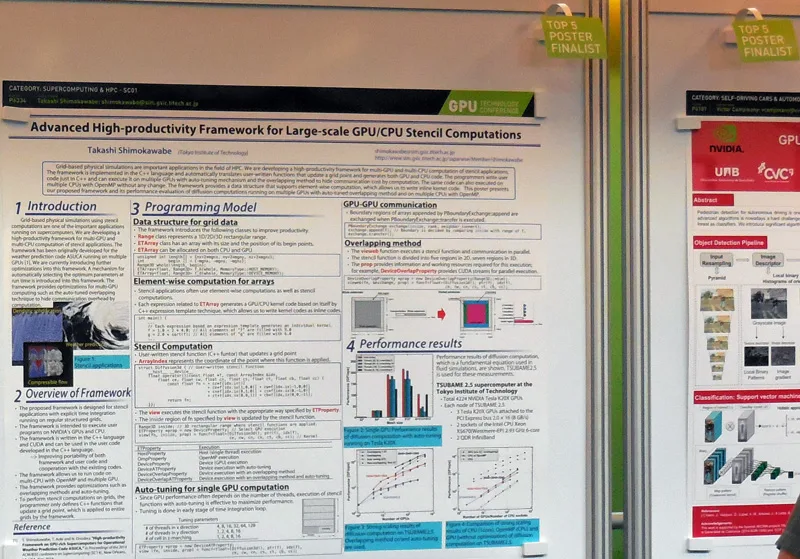

Posters and Hangouts

Hundreds of posters demonstrating the myriad uses for the GPU were again featured. It would be interesting to see them digitized for future GTCs, as a lot of room could be saved.

There was also a competition in which attendees voted for the best poster.

Hangouts were also important. Instructors wore large “ask me” buttons, and we joined in on the conversation on more than one occasion, learning much. If you didn’t get your questions answered, you probably didn’t try hard enough.

We are reminded that the GTC is all about people. Helpful people. People with a passion for GPU computing and a desire to share and learn. The GPU and/or VR cannot yet replace face-to-face human contact. Everywhere we saw people networking with each other. We also engaged in it, and our readers may see improvements to this tech site because of the knowledge we gained at the GTC.

Appetizers were also served at no charge. It was quite a logistical feat to care for over 5,500 attendees, but Nvidia was once again up to the task. The only issue with the GTC was that there is realistically too much to take in at once; no one could see all of the hundreds of sessions, many of which were held simultaneously. There were also over 200 volunteers who made GTC 2016 possible. Our thanks to Nvidia for inviting BTR!

Conclusion

On Thursday afternoon, we packed up early and headed home. We said goodbye to our adventure at the 2016 GTC and we hope that we can return next year. It was an amazing experience as it is really an ongoing revolution that started as part of Nvidia’s vision for GPU programming just a few years ago. It is Nvidia’s disruptive revolution to make the GPU “all purpose” and at least as important as the CPU in computing. Over and over, their stated goal is to put the massively parallel processing capabilities of the GPU into the hands of smart people.

Here is our conclusion from the first GPU Technology Conference, GTC 2009:

Nvidia gets a 9/10 for this conference; a solid “A” for what it was and is becoming and I am looking forward to GTC 2010! … This editor sees the GPU computing revolution as real and we welcome it!

Well, GTC 2016 gets another solid “A+” from this editor. Nvidia has made the conference much larger and the schedule even more hectic, yet it ran very smoothly. Next year, attendees can look forward to another 4-day conference at the San Jose Convention Center from March 27-30, 2017. And if you cannot wait, several GTCs are now held around the world, including a brand new one in Europe.

Our hope for GTC 2018 is that Nvidia will make it “spectacular” like they did with Nvision08, open to the public. It might be time to bring public awareness of GPU computing to the fore by again highlighting the video gaming side of what Nvidia GPUs can do, as well as diverse projects such as the progress made with Deep Learning and in automotive.

This was a very short and personal report on GTC 2016. We have many gigabytes of untapped raw video plus hundreds of pictures that did not make it into this wrap-up. However, we shall continue to reflect back on the GTC until the next GPU Technology Conference.

Mark Poppin

BTR Editor-in-Chief