Nvidia’s GTC 2015 Wrap-Up – Self-Driving, Deep Learning, Pascal, & TITAN X

This is the fifth time that this editor has been privileged to attend Nvidia’s GPU Technology Conference (GTC). GTC 2015 was held March 16-20 in San Jose, California. Some big announcements were made this year, including the hard launch of the TITAN-X, Nvidia’s roadmap teasing Pascal, and Jensen’s interview of Elon Musk about the future of self-driving cars, plus an overall emphasis on Deep Learning. The usual progress reports on the rapid adoption of CUDA, and now of Nvidia’s GRID, were also featured.


The very first tech article that this editor wrote covered Nvision 08, a combination LAN party and GPU computing technology conference that was open to the public. The following year, Nvidia held the first GTC in 2009, a much smaller industry event at the Fairmont Hotel across the street from the San Jose Convention Center, where it introduced the Fermi architecture. This editor also attended GTC 2012, which introduced the Kepler architecture. Last year we attended GTC 2014, where the big breakthrough in Deep Learning image recognition from raw pixels was “a cat”. This year, computers can recognize “a bird on a branch” faster than a well-trained human, which demonstrates incredible progress in using the GPU for image detection.

Bearing in mind past GTCs, we cannot help but compare them to each other. We have had two weeks to think about GTC 2015, and this is our summary of it. This recap will be much briefer than usual: for personal health reasons we were unable to attend as many sessions as at previous GTCs, and we left before any of the Friday sessions. However, we attended all of the keynotes and some of the sessions on Tuesday and Wednesday. We visited the exhibit hall on Thursday, encountered a T-Rex in the latest Crytek Oculus Rift demo, and caught up with some of our editor friends from years past. Every GTC has been about sharing, networking, and learning about everything related to GPU technology.

For an invaluable resource, please check out Nvidia’s library of over 500 sessions that are already recorded and available for watching now. We are only going to give our readers a small slice of the GTC and our own short unique experience of it as a member of the press.

The GTC 2015 highlights for this editor included ongoing attempts to learn more about Nvidia’s future roadmap (with more success than usual), as well as noting Nvidia’s progress in GPU computing over the past 7 or 8 years. The GTC is a combination trade show, networking, and educational event attended by approximately three thousand people, each with their own schedule and reasons for attending, so every attendee comes away with a unique account of it. What they all share is a passion for GPU computing. This editor’s reasons for attending this year were the same as in years before: an interest in GeForce and Tegra GPU technology, primarily for PC gaming and now also for Android gaming.

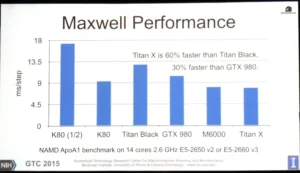

As is customary, Nvidia used the GTC to showcase its new and developing technology, even though almost everything is still built on a 28nm process. Last year we saw Nvidia transition from the Kepler generation to the Maxwell architecture, which is both much more powerful and significantly more energy-efficient than Kepler. Nvidia intends to use Maxwell to continue to revolutionize GPU and cloud computing, including gaming. This is shown by the release of its $999 GeForce flagship, the 12GB vRAM-equipped TITAN-X, where the emphasis is on 4K gaming and now also on VR (Virtual Reality).


Everything has certainly grown since the first GTC in 2009, and Nvidia is again using the San Jose Convention Center for its show. Each year the GTC schedule is more packed than the year before, and this editor was forced to make one especially hard choice because the TITAN-X launch coincided with Jensen’s Keynote on Tuesday. We chose to benchmark the card with all 30 games, attend just a few of the sessions, and skip most of the others.

The GTC at a Glance

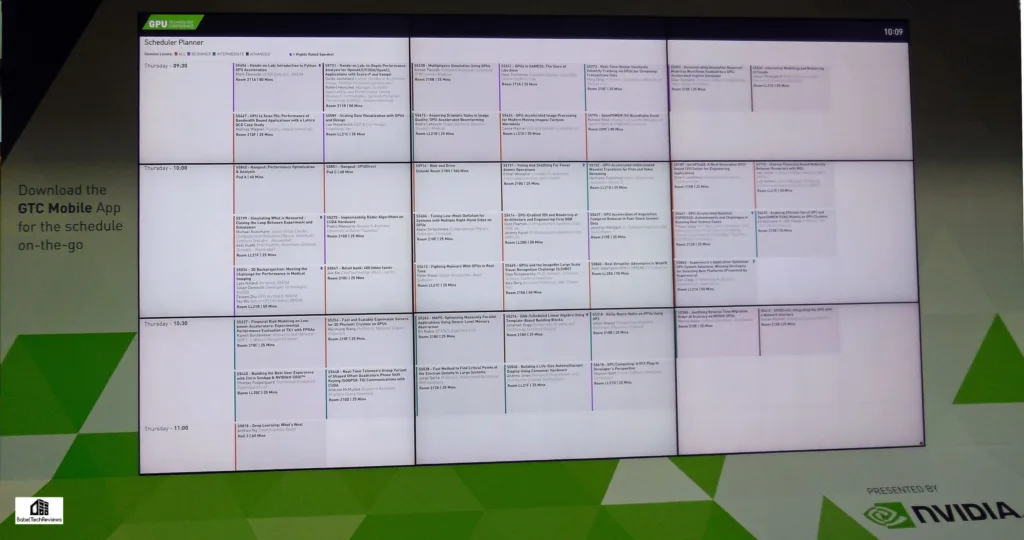

Here is the GTC schedule “at a glance”.

There has been some real progress with signage at the upgraded San Jose Convention Center compared with years past. Nvidia no longer has to make do with an entire wall covered with posters; electronic signs are now updated hourly, and there is far less clutter, which makes things much easier for attendees.

Just like at last year’s GTC, there was a very useful mobile app, which this editor downloaded to his SHIELD tablet, that kept him on schedule and kept him from getting lost. After years of experience running the GTC, Nvidia has the logistics completely down. The event runs very smoothly, especially considering that lunch is provided daily for each full-pass attendee, with custom dietary choices including vegan and “gluten free”, for which this editor is personally grateful.

Now we will look at each day that we spent at the GTC and will briefly focus on the few sessions that we attended.

Monday

We left the high desert above Palm Springs on Monday morning, just ten minutes after completing our nearly non-stop 3-day TITAN-X benchmarking marathon. We arrived in San Jose late Monday afternoon, having made the trip in just over eight hours in generally light traffic. After parking our car in the convention center’s indoor parking lot ($20 per day) and checking in at the Marriott, we picked up our press pass and headed to the GTC Press Party across the street. We got to see a few of our tech editor friends from past events, but quickly headed back to our room to work on our TITAN-X launch article.

The Convention Center’s Wi-Fi was better than tolerable, and the Press Wi-Fi was also OK considering the hundreds of people using it simultaneously. The wired connection (and the Wi-Fi) inside the Marriott rooms, however, was excellent, and peak downloads of 6 or 7MB/s were not unusual until the hotel and conference got packed.

Nvidia treats their attendees and press well, and there was a choice of a nice backpack or of a commemorative GPU Technology T-shirt included with the $1,200 all-event pass to the GTC. The press gets in free and we always pick the T-shirt. Small hardware review sites like BTR are rarely invited to attend the GTC, and we again thank Nvidia for the opportunity.

Mondays at every GTC are reserved for hardcore programmers and for mostly advanced, developer-focused sessions. There was a poster reception from 4-6 PM where anyone could talk to or interview the exhibitors, mostly researchers from leading universities and organizations focused on GPU-enabled research. The press had an early 6-9 PM evening reception at the St. Claire Hotel across the street from the convention center, and this editor got to see a few of his friends from past events.

Dinners were scheduled and tables reserved at some of San Jose’s finest restaurants to bring like-minded individuals together. Some dinners hosted scheduled discussions, others were devoted to programming or to business, and some were simply for enjoying the food. Instead, we worked on our TITAN-X launch article into the early hours of the morning, took a shower, and headed to Jensen’s Keynote at 9 AM Tuesday morning.

The BTR Community and its readers are particularly interested in the Maxwell architecture as it relates to gaming and we were not disappointed with the keynote. Nvidia is definitely oriented toward gaming, graphics, and computing, and we eagerly listened to Nvidia’s CEO Jen-Hsun Huang (Jensen) launch their $999 flagship-GPU TITAN X.

Jensen’s Keynote on Tuesday reinforced Nvidia’s commitment to gaming, even though the company has branched out in many directions. We missed having a deep dive into the Maxwell architecture like those we had for Fermi and Kepler at previous GTCs. Although Nvidia representatives cite increasing architectural “complexity” as the reason, the real reason for the lack of a Maxwell deep dive appears to be caution, to keep competitors in the dark.

Tuesday featuring Jensen’s Keynote

The GTC Keynote Address always sets the stage for each GTC. Nvidia’s CEO outlines the progress that they have been making in CUDA and with hardware since the last GTC. And this year, there were four major announcements that we shall look at in detail; last year there were six. Several of the other sessions that we attended were related to the keynote and we have been following their progress since the first GTC.

We can look back on each of Jensen’s Keynotes since Nvision08 as a progress report on the state of CUDA and GPU computing. Each year, besides introducing new companies to GPU computing, we see the same companies invited back, including Pixar and VMware, and we see their incredible progress, which is tied to the GPU and its increasing performance. Every year, for example, Pixar delivers ever more incredible graphics, and each movie requires exponentially more processing power than the one before it. This is progress that is *impossible* using the CPU, and it is now almost taken for granted.


This 2015 GTC Keynote speech delivered by Nvidia’s superstar CEO Jensen highlighted the rapid growth of GPU computing from its humble beginnings. Although the interview with Elon Musk was saved for the last part of his two-hour keynote, Jensen made several major announcements.

Major announcements during the keynote

- TITAN X – the world’s fastest GPU ($999)

- DIGITS DevBox, a deep-learning platform ($15,000)

- Pascal – promising 10X acceleration beyond Maxwell (TBA)

- NVIDIA DRIVE PX – a deep-learning platform for self-driving cars ($10,000)



The opening Keynote defines the GTC

As in previous keynotes, Jensen detailed the history and the incredible growth of GPU computing and how it is changing our lives for the better. From humble beginnings, GPU computing has grown significantly since the first Nvision08, just seven years ago. CUDA is Nvidia’s own proprietary GPU computing platform and programming model, and it plays a role for Nvidia GPUs roughly similar to the one x86 plays for CPUs. There were over 600 presentations at the GTC. We attended the 3 keynotes but only a half-dozen sessions over two and one-half days.

The Keynote

As Jensen took the stage, he said he would cover four things: a new GPU and deep learning; a very fast box and deep learning; Nvidia’s future roadmap and deep learning; and self-driving cars and deep learning. Jensen said that deep learning is as exciting as the invention of the Internet. The potential of this technology and its implications for every industry and for science are endless.

Jensen highlighted breakthroughs in gaming, including streaming games to the television the way Netflix streams movies. He also cited breakthroughs in cars, which he said are ultimately becoming software and hardware on wheels. Jensen also described breakthroughs with GRID-accelerated VDI, and breakthroughs in deep learning: for the first time, a computer was recently able to beat the very fastest humans at recognizing images.

We saw a demo of a real-time animation called “Red Kite” by Epic Games running on TITAN X. It depicts 100 square miles of a valley with millions of plants in incredible detail. Each frame contains 20-30 million polygons and uses physically based rendering. The animation comes close to film-quality “photo realism”; the only flaw we could see was that the main character’s hair still looked as if it were made of plastic. And speaking of the TITAN-X …

TITAN-X released

Jensen was speaking about the TITAN-X while we were cleaning up the typos in our TITAN-X launch review, which was to be published at noon as the keynote ended. The TITAN-X costs $999 and was hard-launched immediately. It has 8 billion transistors, 3,072 CUDA cores, 7 teraflops of single-precision throughput, and a 12GB framebuffer.
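As a rough sanity check on the 7-teraflop figure, assume (our assumption, not Nvidia’s stated method) that each CUDA core completes one fused multiply-add per clock, counted as two floating-point operations. The quoted core count and throughput then imply a clock of roughly 1.14 GHz:

$$
f_{\text{clock}} \approx \frac{7\times10^{12}\ \text{FLOP/s}}{3072\ \text{cores}\times 2\ \text{FLOP/cycle}} = \frac{7\times10^{12}}{6144}\ \text{Hz} \approx 1.14\times10^{9}\ \text{Hz}
$$

Real workloads rarely sustain this kind of peak, so 7 teraflops is best read as an upper bound.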

Jensen said it will pay for itself in a single afternoon of serious research. We don’t know about that, but speaking as gamers, it may pay for itself in a few evenings of stress-free 4K gaming compared with playing on multi-GPU solutions. Next, Jensen turned to a “very fast box” and deep learning.

Deep Learning and a Very Fast Box

Progress with deep learning began in the mid 1990s at Bell Labs, where within a few years researchers developed a new method for handwriting and number recognition. Over time these computer vision techniques grew into today’s deep neural networks, and accuracy began to improve dramatically as GPUs replaced CPUs for the heavy processing.

Andrew Ng covered Deep Learning in much greater detail in his Thursday keynote, but the takeaway here is that there have been incredible recent breakthroughs in Deep Learning, with computers able to identify images in greater detail and with better accuracy than humans.

To help deep learning research, Jensen introduced DIGITS. It allows a researcher to configure a deep neural network, monitor its progress along the way, and visualize its layers.


Although researchers can build such a system as a DIY project, Nvidia has built a single appliance called the DIGITS DevBox, which plugs into the wall and runs on 220V. It is being touted as delivering the maximum GPU performance possible from a single wall socket. It is packed with TITAN X GPUs and other Nvidia hardware, boots into Linux with software made especially for deep learning research, and will be available next month for $15,000.

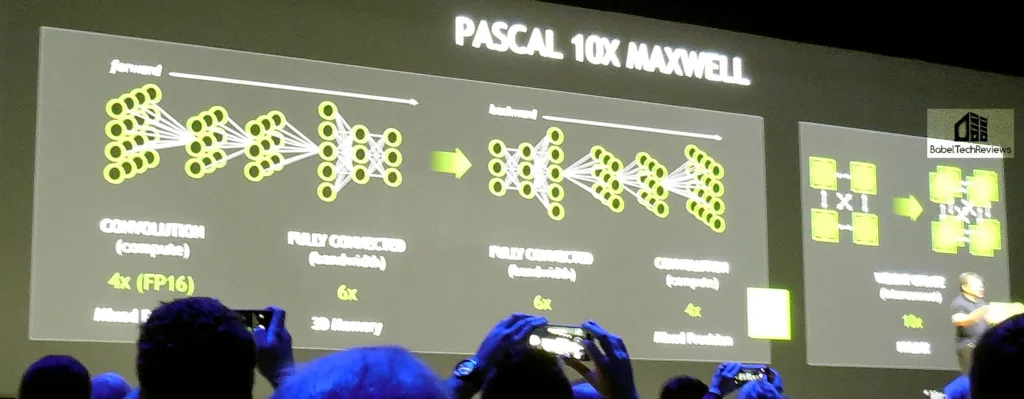

Pascal Architecture – Needed for big data

NVLink will be introduced with the Pascal architecture, which is now scheduled after Maxwell and before Volta. Nvidia realizes that there needs to be a leap in performance from one generation to the next, and Pascal will feature stacked 3D memory connected by through-silicon vias. This editor has been following the progress of 3D memory since its beginning.

3D or stacked memory is a technology that integrates multiple layers of DRAM vertically on the package along with the GPU. Compared with current GDDR5 implementations, stacked memory provides significantly greater bandwidth, doubled capacity, and increased energy efficiency. Faster data movement, together with Unified Memory, will simplify GPU programming. Unified Memory allows the programmer to treat the CPU and GPU memories as one block of memory without worrying about whether the data resides in the CPU’s or the GPU’s memory.

In addition to continuing to use PCIe, NVLink technology will be used for connecting GPUs to NVLink-enabled CPUs as well as for providing high-bandwidth connections directly between multiple GPUs. The basic building block for NVLink is a high-speed, 8-lane, differential, dual simplex bidirectional link.

The large increase in GPU memory size and bandwidth provided by 3D memory and NVLink will let GPU applications access a much larger working set of data at higher bandwidth. This improves efficiency and computational throughput and reduces the frequency of off-GPU transfers, which helps the GPU handle Big Data. Jensen said that Pascal would deliver approximately 10 times the performance of Maxwell, using rough “CEO Math”.

Pascal’s new features include support for up to 32GB of memory, and it is said to deliver much-improved floating point performance through mixed-precision computing; Pascal’s mixed precision is said to provide 3X the level of Maxwell’s. 3D memory will provide more bandwidth and capacity simultaneously, while NVLink will allow multiple GPUs to be connected at speeds well beyond PCIe 3.0. Pascal also offers 2.7X the memory capacity of Maxwell. We are looking forward to it next year!
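The 2.7X capacity figure lines up with the 32GB ceiling if the Maxwell baseline is taken to be the TITAN X’s 12GB framebuffer (our assumption about which Maxwell part Nvidia compared against):

$$
\frac{32\ \text{GB}}{12\ \text{GB}} \approx 2.67 \approx 2.7\times
$$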

DRIVE PX

Jensen shifted gears to talk about cars, deep learning, and assisted and self-driving cars. It is very difficult to hand-code scenarios for the infinite number of possibilities a self-driving car will face. To help with deep learning programs, Nvidia introduced the DRIVE PX dev kit for ten thousand dollars, available next month.

DRIVE PX is based on two NVIDIA Tegra X1 processors and takes input from 12 cameras. Its specifications are quite impressive. Running AlexNet, a neural network with 630 million connections, DRIVE PX can process 184 frames per second, which works out to 116 billion connections per second!
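The DRIVE PX figures are consistent with each other when the AlexNet rate is read as 184 frames per second: multiplying the network’s connections per frame by the frame rate gives the quoted connection rate.

$$
630\times10^{6}\ \frac{\text{connections}}{\text{frame}} \times 184\ \frac{\text{frames}}{\text{s}} = 1.1592\times10^{11}\ \frac{\text{connections}}{\text{s}} \approx 116\ \text{billion per second}
$$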

The Interview with Elon Musk

There was some extra security brought in for this interview, although it was unobtrusive. It was very interesting to see the interplay between Jensen and Musk. Jensen dove right in and asked about Elon’s AI “demon”. Last year, Musk said that AI is ultimately the biggest threat facing humans and that we are “summoning the demon”, reflecting his concern that the self-aware AI we may eventually create could be more dangerous than nuclear weapons.

Jensen asked how Elon rationalized researching AI, which immediately put the Tesla CEO on the defensive, and he denied making it that simplistic. Elon pointed out that his remarks were preceded by the word “potentially”, and added that self-driving cars are merely “a narrow form” of AI, not the deep intelligence AI that will ultimately become self-aware.

Jensen continued aggressively, asking about Tesla’s upcoming announcement, which Elon dodged. Then the interview got serious, and Musk talked about auto security and the ways Tesla Motors is working to secure its cars from hacking.

Elon laid out the technological hurdles of autonomous driving. At 5-10 MPH it’s relatively easy because you can stop quickly when indicated by sensors. It gets tricky around 30-40 MPH in an open driving environment with a lot of unexpected things happening. But over 50 MPH in a freeway environment, it gets easier because the possibilities become more limited.

Musk also told the GTC audience that mainstream adoption of autonomous vehicles will happen more quickly than many expect, and that much of it will depend on government regulations being passed. When Jensen’s interview of Elon Musk concluded, the TITAN-X officially went on sale at noon, and most of the audience headed to lunch in the main exhibit hall. This reporter first headed to the hour-long Q&A session with Nvidia officials, held for financial analysts but also open to the press; it was very heavy with questions from the automotive press.

More Tuesday Sessions

The question and answer session that the press was invited to was moderated by Nvidia Senior Vice President Robert Sherbin, and it included Nvidia’s Senior Vice President of GPU Engineering, who was very adept at dodging questions about future architecture and chips. Nvidia’s panel also included Sumit Gupta, GM of Accelerated Computing; Scott Herkelman, GM of GeForce Desktop; and Danny Shapiro, Senior Director of Nvidia’s Automotive Division. We did not get to ask our question about Elon Musk’s “Demon” and Nvidia’s thoughts about hastening a possible Technological Singularity, but we were promised an email follow-up by Mr. Sherbin afterward.


As we had not had any real sleep since Friday, when we received our TITAN-X, we skipped the rest of the Tuesday sessions and went to bed in the early afternoon after lunch.

There were many more sessions at the GTC that are quite technical and dealt with CUDA programming. From GTC to GTC, our goal has been to attend similar lectures and workshops to see the progress. We will try to catch up with the ones we missed at next year’s GTC.

After the sessions

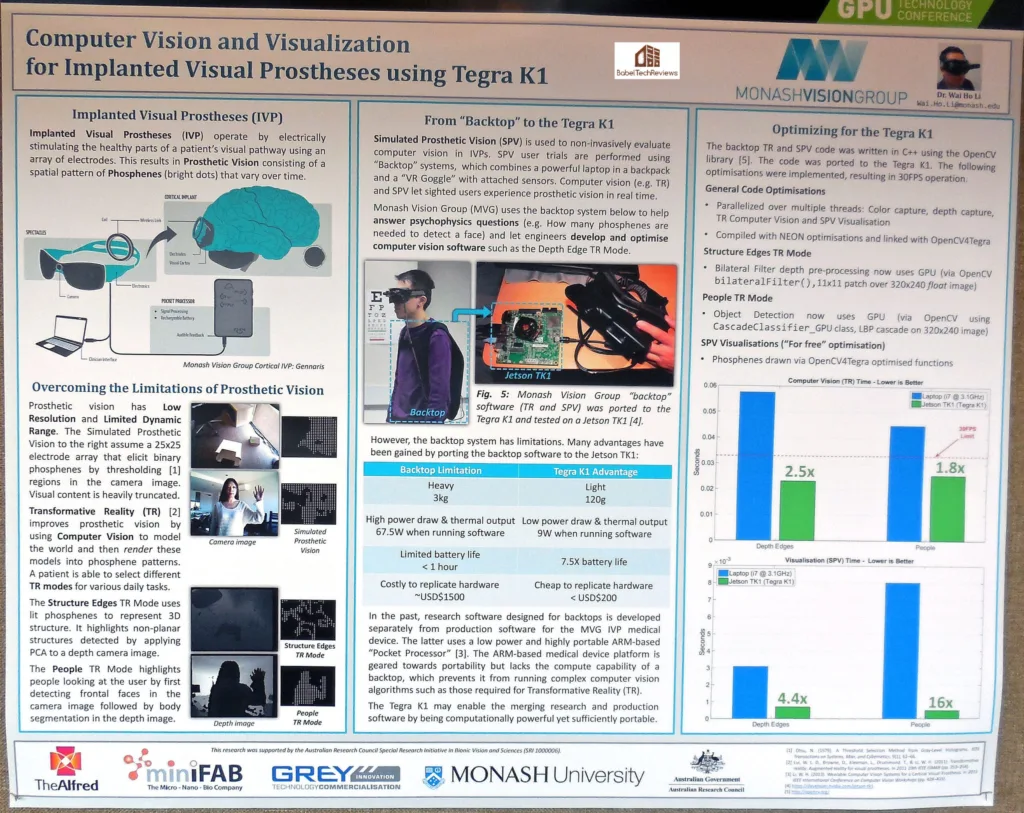

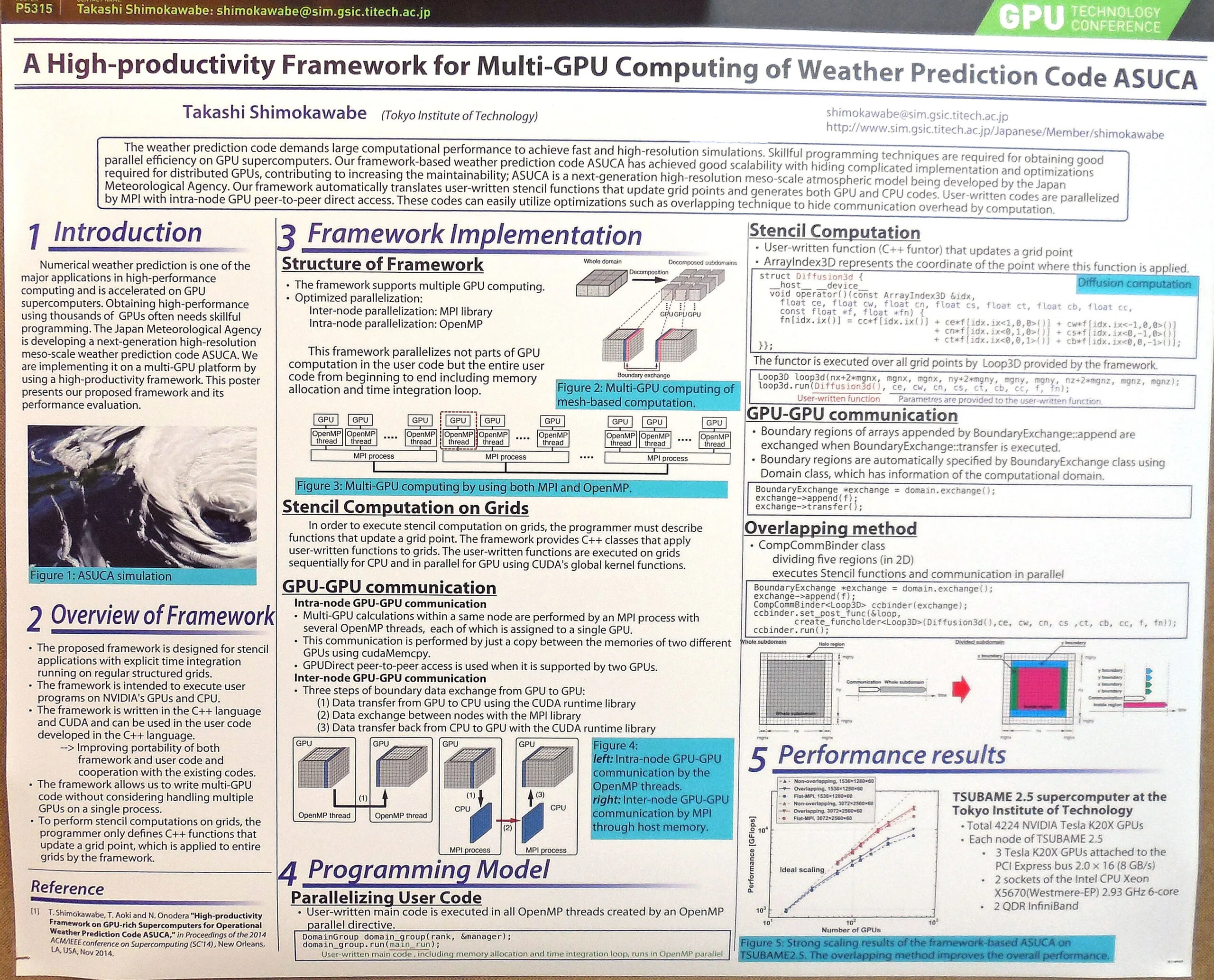

There was a lot of note taking, and of course, these and all other GTC sessions can be accessed on Nvidia’s web site by the end of this month. Each day after the sessions ended at 6 PM, there was a networking happy hour and time to visit some of the exhibits until 8 PM. On the way to the exhibit hall, one passed the multitude of posters on display, which illustrated the many uses of the GPU for all to see. Some of these impressive uses of the GPU affect our lives.

Almost all of the posters are quite technical, and some of them deal with extremes, from national security and tsunami modeling to visually programming the GPU and neural networks. There were “hangout” areas where you could get answers to very specific questions. You could even vote for your top 5 favorite posters.


What all of the posters have in common is their use of the massively parallel processing of the GPU.

Of course there is much more to the GTC, and Nvidia’s partners had many exhibits that this editor only got a glimpse of. We have barely scratched the surface of GTC 2015. Tuesday was a full day, and there were still two days left to go.

Wednesday & Thursday

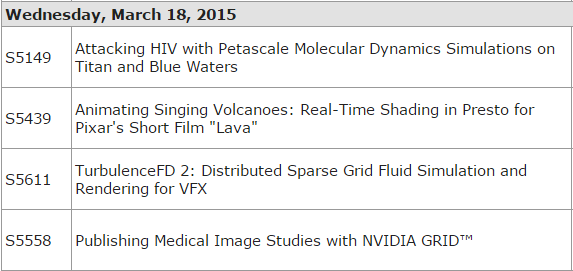

Here are some of our Wednesday sessions.

At each of the previous GTCs, we have followed the GPU in medical research, and we are especially interested in what Pixar has been working on most recently. This year we noticed that GRID was becoming popular and was replacing expensive physical workstations with virtualized ones. We were not disappointed with the sessions, as much progress has been made from year to year. But before we look at each of these sessions, let’s check out Wednesday’s keynote address.


Wednesday’s Keynote – Google’s Jeff Dean presents Large Scale Deep Learning

Large Scale Deep Learning was the subject of Google’s Jeff Dean’s keynote. The entire hour-long keynote may be replayed from Nvidia’s GTC 2015 library.

This keynote is well worth watching. Jeff Dean showed off Google’s new deep learning technology, which was used to build a machine that has mastered 50 classic Atari video games much better than a human can. Google’s deep learning work is built on neural networks very roughly modeled on the human brain; these digital brains rely on sophisticated algorithms that teach machines to perform increasingly complex tasks.

Teaching a digital neural network is similar to how a human child learns to identify different kinds of things by being shown many examples. Training a computer to learn these tasks saves vast amounts of time. Google’s deep learning network has moved from mastery of classic Atari games to more complex ones, and we see deep learning being used in many of Google’s other products, such as search and translation. What has really helped Google speed up the project is moving its tasks to the GPU.
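To make the “learning from examples” idea concrete, here is a minimal sketch of a single artificial neuron (a perceptron) that learns the logical OR function from four labeled examples. It is a hypothetical toy of our own, not Google’s system; the function and variable names are made up for illustration, and real networks like the ones Dean described have many layers and millions of connections trained on GPUs.

```python
# A toy perceptron: one artificial "neuron" that learns from labeled examples.
# All names here (train_perceptron, predict, OR_EXAMPLES) are hypothetical.

def predict(weights, bias, x):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Classic perceptron rule: nudge the weights whenever a prediction is wrong."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in examples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Four labeled examples of logical OR: (inputs, correct answer)
OR_EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_perceptron(OR_EXAMPLES)
print([predict(weights, bias, x) for x, _ in OR_EXAMPLES])  # prints [0, 1, 1, 1]
```

Each pass over the examples corrects the weights only where the neuron got the answer wrong. At a vastly smaller scale, this is the same idea as showing a network many labeled images until it stops making mistakes.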

Other Wednesday Sessions:

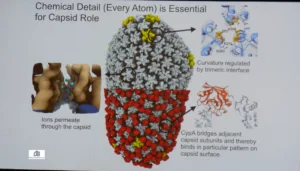

Attacking HIV with Petascale Molecular Dynamics Simulations on Titan and Blue Waters

This was a short half-hour session by Dr. James Phillips devoted to demonstrating the complexity of this project. The entire .pdf may be downloaded from Nvidia’s GTC site.


The takeaway from this session was that the chemical detail of the HIV molecule is incredibly complex, and that progress can only be made by harnessing the parallel processing power of GPU supercomputing. A single microsecond simulation involves 64 million atoms!
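To give a sense of that scale, assume a typical molecular dynamics timestep of 2 femtoseconds (our assumption; the session’s actual integration settings are not given here). Simulating one microsecond then takes hundreds of millions of steps, each of which must update all 64 million atoms:

$$
\frac{1\ \mu\text{s}}{2\ \text{fs}} = \frac{10^{-6}\ \text{s}}{2\times10^{-15}\ \text{s}} = 5\times10^{8}\ \text{steps},
\qquad
64\times10^{6}\ \text{atoms}\times 5\times10^{8}\ \text{steps} = 3.2\times10^{16}\ \text{atom-steps}
$$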

The session stressed the importance of remote virtualization for the many users who lack direct access to a supercomputer. Much progress has been made in this field, but there is still quite a long way to go.


It appears that the Maxwell architecture will have a direct impact on the performance of these massive simulations. It is also interesting to see that professionals and researchers get just as excited as gamers do about new GPU architectures that speed up their programs. And we actually stayed for two sessions that covered medical imaging.

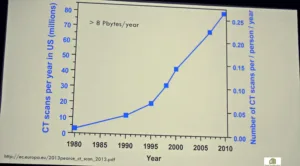

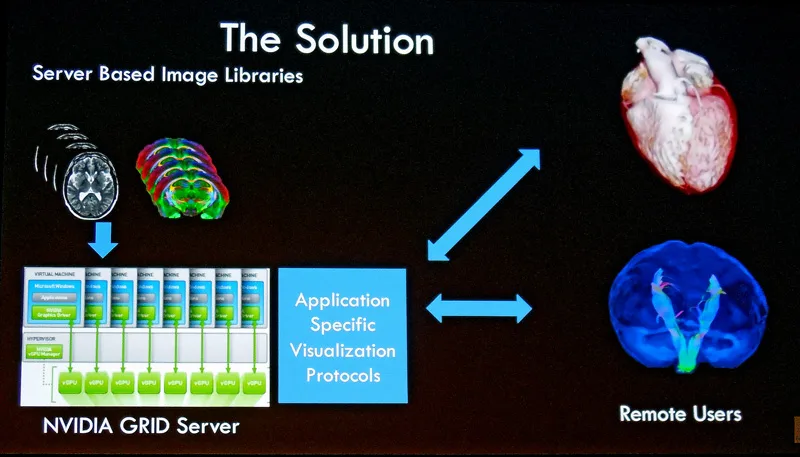

Publishing Medical Image Studies with NVIDIA GRID

This was another short session. This time we had a pioneer in medical imaging explain the progress of the CAT scan over the past 30 years. The complexity of the scans has increased and the GPU has been pressed into service to allow for it and to speed up the process significantly.


The takeaway is that the workstations required to process these scans cost over 100 thousand dollars, and the medical establishment is making a “leap of faith” with GRID to virtualize them.

The report is that GRID is both cost effective and useful for the medical establishment. For a change of pace, we headed over to Pixar’s presentation.


Animating Singing Volcanoes: Real-Time Shading in Presto for Pixar’s Short Film “Lava”

Pixar’s sessions are always fun and interesting. Although this session was open to everyone, it was quite technical. As always, the GPU is used for rendering so that at every step of a short film such as “Lava”, fast feedback can be provided not only to the director but also to the animators.


In this particular case, the challenge was to make the volcano appear to be made of rock while still being animated. They succeeded and the film should be fun to watch.

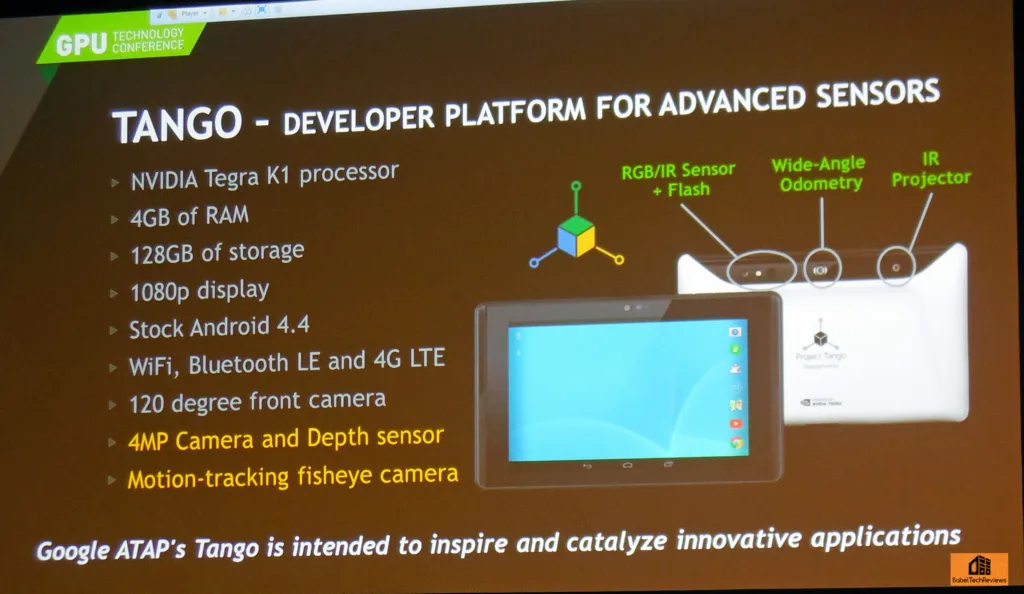

We headed for a few more Wednesday sessions and got to look in on the progress with Google’s Project Tango.

Then it was time to check out the exhibit hall again, head across the street for an informal GeForce dinner with the other tech press, and return to our room to prepare for Thursday.

The Thursday Keynote

Baidu’s Chief Scientist Andrew Ng delivered Thursday’s keynote address. During his hour-long presentation, Dr. Ng showed us the recent progress with deep learning being driven by high-performance computing and the increasing digitization of everything.

Dr. Ng compared this progress to building a rocket and even covered the basics of neural networks. We were treated to a live demo of Baidu Deep Speech recognizing speech in real time, in both quiet and noisy environments.

Dr. Ng also played down the dangers of AI research, saying that self-aware AI is too far in the future to be a cause for concern.

We headed back once more to the exhibit hall for lunch and to look at the exhibits as they were winding down. We passed by a section with oversized checks awarded to some lucky winners and a few charities. It made us feel good to see the happiness on their faces. We met with some of our friends and skipped the remaining sessions, preparing instead to visit the GTC Thursday Party and then head home at about 10 PM to avoid traffic.

Networking/exhibits

In the main exhibit hall, Nvidia had several large sections.

Cars are always popular, and Nvidia now provides much more for them than infotainment, navigation, and entertainment. The press was also invited to check out and drive the new BMWs, with their prominent Tegra-powered infotainment systems.

There was also exhibit space devoted to a virtual showroom demonstrating how a potential buyer might customize a car, including its background, in real time. Here are two more cars that will use mobile Tegra. Here are some more exotic cars:

All of Nvidia’s major partners in professional GPU computing were represented with their servers, including HP, a major sponsor of the GTC.

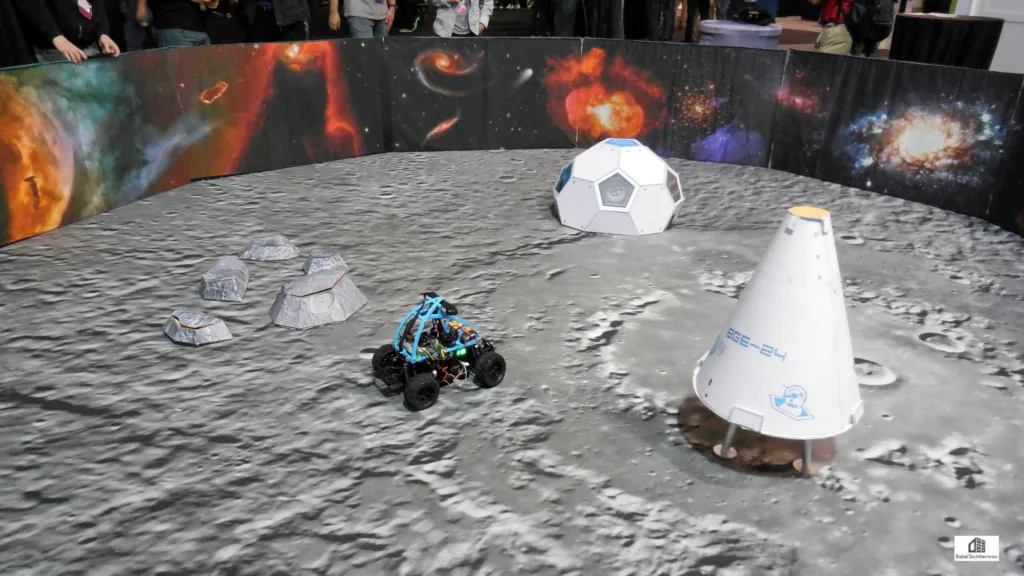

There was a lot to take in. You could pilot a mini moon rover-type vehicle. Even 3D printing was showcased, and we ran into Jensen quite by chance; he was also checking out this 3D-printed off-road vehicle!

We are reminded that the GTC is all about people. Helpful people. People with a passion for GPU computing and a desire to share and learn. In this booth, we came face to face with a T-Rex using Oculus Rift and Crytek’s latest demo. From what we could see, VR has progressed since we last tried it at Nvidia’s Press Day in September 2014, just six months ago!

The GPU cannot yet replace face-to-face human contact.

Everywhere we looked, we saw networking. We engaged in it too, and our readers will see improvements to this tech site thanks to knowledge gained and shared with fellow editors at the GTC.

Appetizers were also served at no charge. It was quite a logistical feat to care for over 3,000 attendees, but Nvidia was once again up to the task. The only issue with the GTC is that there is realistically too much to take in at once. No one could see all of the hundreds of sessions, many of which were held simultaneously. Over 200 volunteers also helped make GTC 2015 possible. Our thanks to Nvidia for inviting BTR!

Conclusion

On Thursday night, after a quick visit to the GTC party, we packed up early and headed home. We said goodbye to our adventure at GTC 2015, and we hope that we can return next year. It was an amazing experience, because the GTC is really part of an ongoing revolution that began with Nvidia’s vision for GPU programming just a few years ago. It is Nvidia’s disruptive revolution to make the GPU “all purpose” and at least as important as the CPU in computing. Time and again, Nvidia’s stated goal has been to put the massively parallel processing capabilities of the GPU into the hands of smart people.

Here is our conclusion from the first GPU Technology Conference, GTC 2009:

Nvidia gets a 9/10 for this conference; a solid “A” for what it was and is becoming and I am looking forward to GTC 2010! … This editor sees the GPU computing revolution as real and we welcome it!

Well, GTC 2015 gets another solid “A” from this editor. Nvidia has made the conference larger and the schedule even more hectic. Next year, attendees can look forward to another five-day conference, April 4-8, 2016, in Silicon Valley, with no mention of the San Jose Convention Center this time.

Our hope for GTC 2016 is that Nvidia will make it “spectacular” and open to the public, as it did with Nvision 08. It might be time to bring GPU computing to the fore of public awareness by again highlighting what Nvidia GPUs can do for video gaming, as well as diverse projects such as the progress made in Deep Learning and automotive.

This was a very short and personal report on GTC 2015. We have many gigabytes of untapped raw video plus hundreds of pictures that did not make it into this wrap-up. However, we shall continue to reflect on this GTC until the next GPU Technology Conference.

Mark Poppin

BTR Editor-in-Chief