Post Production – The Drudgery Part of VR Filmmaking

If you were lucky enough to live in the Americas, you were able to watch most of the Summer Olympics in near real time – streamed to your computer at the office, to your smartphone when you were out and about and to your huge 4K HDR (high dynamic range) TV at home.

For everyone else around the globe … sorry.

More than 6,700 hours of Games programming were captured – an average of 356 hours a day over 19 days – which should satisfy any binge viewer.

I enjoyed the 360 video stuff that Facebook and Google streamed to my kid’s HMD (head mounted display) and couldn’t wait to get feedback from my favorite VR filmmakers – Lewis Smithingham, 30ninjas; Nick Bicanic, RVLVR Labs; George Krieger, Sphericam; and Marcelo Lewin, DigMediaPros.

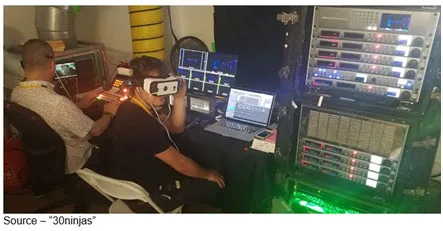

Having finished a Conan 360 show not long before, Lewis was gentle in his Olympics coverage review. Both he and Nick know the chaos that goes on behind the scenes to shoot, stitch and post when the clock is ticking.

Lewin, who has done 360 video coverage of Cine Gear Expo and Siggraph to give people the highlights of the events for DigMediaPros.com, liked the near-real-time feel but, like the others, bristled when announcers referred to it as VR coverage.

“Real time events like sports coverage work well in 360 but it certainly isn’t VR, it’s simply a 360 degree view,” Lewin emphasized.

“Early 360 video has mostly been quite gimmicky. Producers have banked on the awe of swimming with a whale underwater or wandering around a Syrian refugee camp,” Nick explained. “And while that worked for a while, I’ll gladly trade ten minutes of awe for two seconds of emotion – the first day of school, kicking a ball into the goal, first kiss, broken heart. That is what we are trying to evolve VR storytelling towards.”

To deliver that emotion in any film, every filmmaker agrees that the production and post production part of the project is arduous, requiring days, weeks, months of going through pure hell.

It was difficult when the industry first began to produce films digitally because film cutting was left in the past.

It’s worse with VR filmmaking because the workflow and tools are still in their infancy.

But even digital production was still very linear and statically dynamic. With VR, filmmakers have to break work habits built up over decades.

Filmmakers who are still resting on the laurels of a proven 4K/HDR (high dynamic range) workflow dismiss the emerging immersive films and say you can’t edit in VR since there is no frame. A creator doesn’t know what the viewer is looking at – therefore, any kind of cut is too dangerous because you could confuse them.

That sounds reasonable only if you haven’t thought the VR project through at the outset.

Bicanic’s Approach

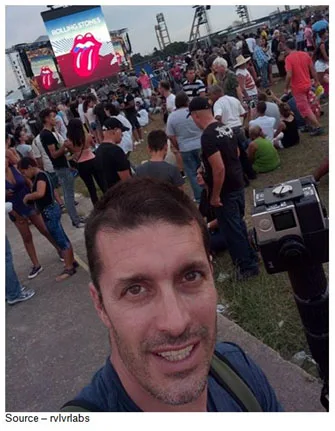

During his shoot in Cuba, Nick had the good fortune to also squeeze in a music industry documentary on The Rolling Stones concert, the first major music event in the country in nearly 50 years.

Regarding this project and others, Nick noted, “Moving this medium forward, 360 storytelling demands a healthy balance of technological expertise with creative vision – and no small manner of comfort in risk-taking.”

To guide the viewer as a storyteller, he uses what he calls an Arc of Attention, which he has set at 25 degrees. The number is arbitrary (color, contrast, movement and sound can widen or narrow the arc), but essentially it is the section of the visible area of the sphere that the viewer can reasonably pay attention to. Then, using object-based sound, movement, staging, dialogue, mise-en-scene, etc., cutting is entirely possible.
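As a rough illustration of the Arc of Attention idea, the sketch below checks whether a subject sits inside a 25 degree arc around the viewer's gaze. It assumes the 25 degrees is the full width of the arc, centered on the gaze direction, and that positions are given as yaw angles in degrees; the function names are hypothetical and this is not Nick's actual tooling.

```python
def angular_offset(from_yaw, to_yaw):
    """Signed shortest rotation between two yaw angles, in degrees, in [-180, 180)."""
    return (to_yaw - from_yaw + 180.0) % 360.0 - 180.0


def in_arc_of_attention(subject_yaw, gaze_yaw=0.0, arc_width=25.0):
    """True if the subject lies within the arc centered on the gaze direction.

    Assumption: arc_width is the total width of the arc, so the subject may be
    up to arc_width / 2 degrees to either side of the gaze.
    """
    return abs(angular_offset(gaze_yaw, subject_yaw)) <= arc_width / 2.0


if __name__ == "__main__":
    # Subjects at 5 and 355 degrees are close to a forward gaze; 20 degrees is not.
    for yaw in (5.0, 20.0, 355.0):
        print(yaw, in_arc_of_attention(yaw))
```

The wraparound handling matters because a subject at 355 degrees is only 5 degrees away from a viewer facing 0 degrees, not 355.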

For the documentary and many of his VR projects, Nick had a short script of what he wanted to capture/show the viewer.

Using the Izagar Z4X, he situated his camera rigs to capture a variety of wide shots, a lot of OTS (over the shoulder), NPOV (near point-of-view) and the performer action as well as crowd response of more than a million attendees.

The first thing every VR filmmaker finds is that he/she has a lot of very good, rich content to work with – as much as 20TB per day – and Nick was no exception.

The hard work is editing 360/VR videos efficiently, and Nick isn’t shy about using fast match-cutting. He works with the Premiere and After Effects timeline, using the Oculus Rift and HTC Vive to preview edits in real-time through Mettle’s Skybox suite of tools.

Editing in VR requires the creator to think in terms of orientation in addition to framing and instead of a traditional storyboard the focus is on composition and location of objects in relation to frontal orientation.
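One way to picture editing by orientation is a match cut that rotates the incoming shot so its subject lands where the outgoing subject was. The sketch below is only an illustration under assumptions: positions are yaw angles in degrees, positive rotation moves content toward higher yaw, and the footage is an equirectangular frame whose full width spans 360 degrees. The function names are hypothetical and do not come from Premiere, After Effects or Skybox.

```python
def wrap_degrees(angle):
    """Wrap any angle into the range [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def match_cut_rotation(outgoing_subject_yaw, incoming_subject_yaw):
    """Yaw rotation to apply to the incoming shot so its subject appears
    at the same yaw the outgoing subject occupied."""
    return wrap_degrees(outgoing_subject_yaw - incoming_subject_yaw)


def yaw_to_pixel_shift(rotation_deg, frame_width):
    """Horizontal shift in pixels for an equirectangular frame,
    where the full frame width corresponds to 360 degrees of yaw."""
    return round(rotation_deg / 360.0 * frame_width)


if __name__ == "__main__":
    rotation = match_cut_rotation(30.0, 170.0)
    print(rotation, yaw_to_pixel_shift(rotation, 3600))
```

In practice the editor would apply this kind of rotation inside the editing tool rather than by shifting pixels by hand; the arithmetic only shows what is being lined up.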

Traditional close-ups and wide shot framings can be approximated by camera proximity to action – and can be used very successfully to immerse the viewer; but they don’t always have the same effect as a traditional editor or director might expect. For example, very tight closeups can register as over the top and invasive of a character’s private space.

During production and post, Nick practices very aggressive cuts because the brain can handle more rapid juxtaposition than most filmmakers have tried. His intention is to help define a cinematic language to allow compression of time and the telling of different kinds of stories.

“We live every day in 360 degrees,” he emphasized. “Our eyes only see about 170 degrees but we can hear 360 degrees with our ears. VR is a different medium and we can certainly do better than cross-fades, wipes and fades to black!”

Smithingham’s Cut

Whether it’s on the ocean floor, in poverty-ridden, hurricane-battered Haiti or a Hollywood studio, Lewis’ approach to production is to transport viewers into and between scenes in a way that the viewers feel they initiated the action.

That sounds relatively easy – and fun – but Lewis noted, “Content that looks and feels natural is extraordinarily hard.”

Folks say it’s all about the technology and making it cheaper and more accessible, but that’s such a minor part of making VR a success. The key is having really talented people involved in the new medium of storytelling.

Lewis plans his shots and prefers to overshoot – a lot – so he has every element he could possibly need when he edits.

“People say we can’t manipulate viewer behavior in VR, which is BS,” Lewis emphasized. “It’s the fundamentals of good video storytelling. We use establishing shots and use hints to tell the story a certain way. We can’t prevent the viewer from looking left or right, but we can draw their attention to our story.”

While action shots make it difficult to hold an even horizon, their dynamics set the stage so viewers can be guided in the right direction and then given a break to look around, which helps them avoid the “car sick” effect.

Filmmakers and critics tend to forget that viewers can be distracted, lazy, easily confused, curious, enchanted, engaged or even bored, so to keep them involved Lewis, like Nick, prefers fast cuts; some might say ruthless cuts.

“The average shot length in today’s mainstream pictures is about two seconds (often less),” Lewis noted. “And VR editing is no different. The key is still to keep the viewer interested and involved.”

To have all of the material that he needs when he sits down to edit, Lewis regularly captures more than 20TB of content a day, sometimes more … lots more.

Lewis explained the complexity and volume by reminding us that a minimum of six cameras (at times 10-14) is required, each shooting at least 60 fps (frames per second). Lower frame rates can cause HMDs to drop frames or cause motion sickness.

A 4K FOV (field of view) rectangular image is 12,288 x 6,144 pixels and even with conservative ProRes 4:2:2 compression, we’re talking upward of 3GB/s at the workstation.
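To put that figure in context, here is a back-of-the-envelope calculation of the uncompressed data rate for a 12,288 x 6,144 frame at 60 fps. It assumes 10-bit 4:2:2 sampling, which averages 20 bits per pixel, and decimal gigabytes; the function name is hypothetical, and the implied compression ratio simply divides the raw rate by the 3GB/s quoted above.

```python
def uncompressed_rate_gb_per_s(width, height, fps, bits_per_pixel=20):
    """Uncompressed video data rate in decimal gigabytes per second.

    Assumption: 10-bit 4:2:2 sampling, i.e. 10 bits of luma per pixel plus
    two 10-bit chroma samples shared between every two pixels (20 bits/pixel).
    """
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e9


if __name__ == "__main__":
    raw = uncompressed_rate_gb_per_s(12288, 6144, 60)
    quoted = 3.0  # GB/s figure quoted in the text
    print(f"uncompressed: {raw:.1f} GB/s")
    print(f"implied compression ratio: {raw / quoted:.1f}:1")
```

Under these assumptions the raw stream works out to roughly 11GB/s, so 3GB/s corresponds to a compression ratio of a little under 4:1.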

No wonder high-performance computers and high-throughput, expandable storage are mandatory!

After stitching (the process by which multiple camera angles are fused together into a spherical video panorama), Adobe and Final Cut tools make his content smart and expandable for the viewer.

“Production and editing is a labor of love that every filmmaker I know hates,” he commented. “The problem is most get so wrapped up in the elegance of the story, the audience gets left behind. The key to a great 360/VR film is that we constantly think about the audience…then everyone wins!”

For Lewis, the most frustrating part of the 360/VR creative process is stitching. While the software is steadily improving, it is still very laborious and expensive – often $10,000 plus per finished minute.

Krieger’s Answer

George Krieger has been a respected UAV (unmanned aerial vehicle – drone) and 360 video filmmaker for years and shares the opinion of most filmmakers: “Production jobs are hell, but we love it so much we make that hell, our little oyster of a world.”

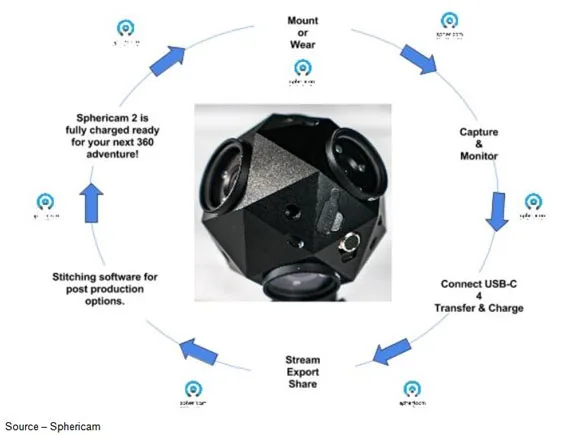

While the typical high-res, high-frame-rate 360 video workflow is universally hated, Krieger began working with a system developed by Jeffrey Martin that is a 360 degree camera rather than a camera array. It made his shooting life so much easier that he recently joined the company as a TME (technical marketing engineer). He still does 360/VR film work to stay in practice.

Since most of the time and money spent on VR is generally in the post production phase, the Sphericam was designed to reduce both budgets.

Higher frame rates are important for VR because the smoother the movement, the more natural, realistic and immersive the experience, but they also add to the data payload and computational challenge.

The key difference with the Sphericam is that the six-lens camera does the stitching internally and uses a unified file system so the filmmaker knows which file is from which camera and which shoot. Each clip can be converted, adjusted and exported ready for post production with Adobe Premiere or After Effects.

Nick and Lewis are more than a little interested in testing the Sphericam because every day, they learn something new they can do with the new format.

While there is still a lot of confusion about what exactly VR is, Merritt McKinney observed, “I’m just trying to create the space for wisdom.”


# # #