The RTX 3090 FE vs. RTX 2080 Ti x2 mGPU using SLI, Pro Apps & Workstation and GPGPU benchmarks

This review follows up on the RTX 3090 Founders Edition (FE) launch review. The RTX 3090 is the fastest video card in the world, and it is a GeForce optimized for gaming – it is neither a TITAN nor a Quadro replacement. However, we demonstrated that it is very fast in SPEC and GPGPU benches, and its huge 24GB vRAM framebuffer allows it to excel in many popular creative apps, making it especially fast at rendering.

The RTX 3090 is NVIDIA’s flagship card that commands a premium price of $1500, and some gamers and pro app users may consider buying a second RTX 2080 Ti as an alternative for SLI/mGPU (Multi-GPU) gaming, pro apps, SPEC, GPGPU, and creative apps. So we purchased an EVGA RTX 2080 Ti XC from eBay and an RTX TITAN NVLink High Bandwidth bridge from Amazon to test 2 x RTX 2080 Tis versus the RTX 3090.

The RTX 3090 is the fastest video card for gaming and it is the first card to be able to run some games at 8K. But the RTX 2080 Ti is still very capable as NVIDIA’s former flagship card, and we will demonstrate how two of them perform in SLI/mGPU games, SPECworkstation3, creative apps using the Blender 2.90 and OTOY benchmarks, and in Sandra 2020 and AIDA64 GPGPU benchmarks. In addition, we will also focus on pro applications like Blender rendering, Blackmagic’s DaVinci Resolve, and OTOY OctaneRender. It will be interesting to see if two RTX 2080 Tis pool their memory to 22GB using these pro apps versus the RTX 3090’s 24GB.

We benchmark SLI games using Windows 10 64-bit Pro Edition at 2560×1440 and at 3840×2160 using Intel’s Core i9-10900K at 5.1/5.0 GHz and 32GB of T-FORCE DARK Z 3600MHz DDR4. All benchmarks use their latest versions, and we use the same GeForce Game Ready drivers for games and the latest Studio driver for testing pro apps.

Let’s check out our test configuration.

Test Configuration

Test Configuration – Hardware

- Intel Core i9-10900K (HyperThreading/Turbo boost On; All cores overclocked to 5.1GHz/5.0GHz. Comet Lake DX11 CPU graphics)

- EVGA Z490 FTW motherboard (Intel Z490 chipset, v1.3 BIOS, PCIe 3.0/3.1/3.2 specification, CrossFire/SLI 8x+8x), supplied by EVGA

- T-FORCE DARK Z 32GB DDR4 (2x16GB, dual channel at 3600MHz), supplied by Team Group

- RTX 3090 Founders Edition 24GB, stock clocks on loan from NVIDIA

- RTX 2080 Ti Founders Edition 11GB, clocks set to match the EVGA card, on loan from NVIDIA

- EVGA RTX 2080 Ti Black 11GB, factory clocks

- 1TB Team Group MP33 NVMe2 PCIe SSD for C: drive

- 1.92TB San Disk enterprise class SATA III SSD (storage)

- 2TB Micron 1100 SATA III SSD (storage)

- 1TB Team Group GX2 SATA III SSD (storage)

- 500GB T-FORCE Vulcan SSD (storage), supplied by Team Group

- ANTEC HCG1000 Extreme, 1000W gold power supply unit

- BenQ EW3270U 32″ 4K HDR 60Hz FreeSync monitor

- Samsung G7 Odyssey (LC27G75TQSNXZA) 27″ 2560×1440/240Hz/1ms/G-SYNC/HDR600 monitor

- DEEPCOOL Castle 360EX AIO 360mm liquid CPU cooler

- Phanteks Eclipse P400 ATX mid-tower (plus 1 Noctua 140mm fan) – All benchmarking performed with the case closed

Test Configuration – Software

- GeForce 456.38 – the last driver to offer a new SLI profile. Game Ready (GRD) drivers are used for gaming and the Studio drivers are used for pro/creative, SPEC, workstation, and GPGPU apps.

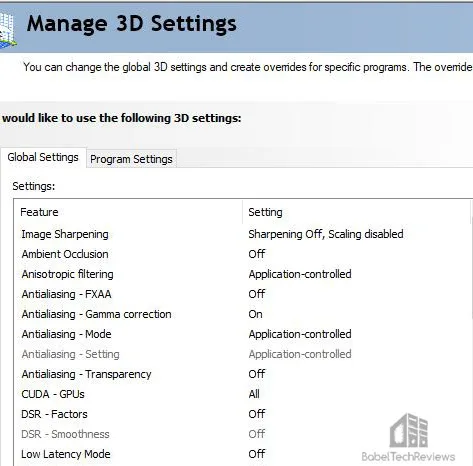

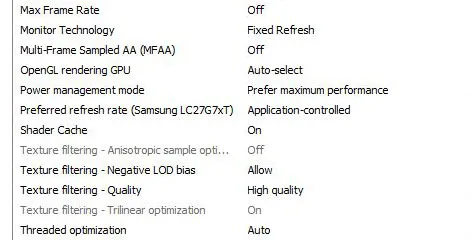

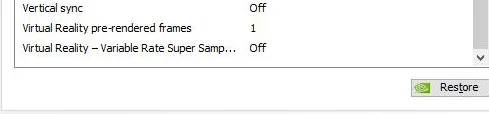

- High Quality, prefer maximum performance, single display, fixed refresh, set in the NVIDIA control panel.

- VSync is off in the control panel and disabled for each game

- AA enabled as noted in games; all in-game settings are specified with 16xAF always applied

- Highest quality sound (stereo) used in all games

- All games have been patched to their latest versions

- Gaming results show average frame rates in bold including minimum frame rates shown on the chart next to the averages in a smaller italics font where higher is better. Games benched with OCAT show average framerates but the minimums are expressed by frametimes (99th-percentile) in ms where lower numbers are better.

- Windows 10 64-bit Pro edition; latest updates v2004.

- Latest DirectX

- MSI’s Afterburner, 4.6.3 beta

- OCAT 1.6

SLI/mGPU Games

- Strange Brigade

- Shadow of the Tomb Raider

- Ashes of the Singularity, Escalation

- Project CARS 2

- Star Wars: Jedi Fallen Order

- The Outer Worlds

- Destiny 2 Shadowkeep

- Far Cry New Dawn

- RTX Quake II

Synthetic

- TimeSpy (DX12)

- 3DMark FireStrike – Ultra & Extreme

- Superposition

- Heaven 4.0 benchmark

- AIDA64 GPGPU benchmarks

- Blender 2.90 benchmark

- Sandra 2020 GPGPU Benchmarks

- SPECworkstation3

- Octane benchmark

Professional Applications

- Blackmagic Design DaVinci Resolve, supplied by NVIDIA – 8K Redcode RAW projects

- Blender 2.90

- OTOY OctaneRender 2020 1.5 Demo

NVIDIA Control Panel settings

Here are the NVIDIA Control Panel settings.

We used MSI’s Afterburner to set all video cards’ power and temperature limits to maximum as well as to set the Founders Edition clocks to match the XC’s clocks. By setting the Power Limits and Temperature limits to maximum for each card, they do not throttle, but they can each reach and maintain their individual maximum clocks better. When SLI is used, it is set to NVIDIA optimized.

So let’s check out performance on the next page.

SLI & mGPU

BTR has always been interested in SLI, and the last review we posted was in January, 2018 – GTX 1070 Ti SLI with 50 games. We concluded:

“SLI scaling is good performance-wise in mostly older games and where the devs specifically support SLI in newer DX11 and in DX12 games. When GTX 1070 Ti SLI scales well, it easily surpasses a single GTX 1080 Ti or TITAN Xp in performance. . . . SLI scaling in the newest games – and especially with DX12 – is going to depend on the developers’ support for each game [but] recent drivers may break SLI scaling that once worked, and even a new game patch may affect SLI game performance adversely.”

Fast forward 2-1/2 years to today. There are still very few mGPU games, and NVIDIA has relegated SLI to legacy. They will not be adding any new SLI profiles, and the only Ampere card that supports it is the RTX 3090 using a new NVLink bridge – for benchmarking – to set world records in synthetic tests like 3DMark. NVIDIA has this to say about SLI support “transitioning”, quoted in part:

“NVIDIA will no longer be adding new SLI driver profiles on RTX 20 Series and earlier GPUs starting on January 1st, 2021. Instead, we will focus efforts on supporting developers to implement SLI natively inside the games. We believe this will provide the best performance for SLI users.

Existing SLI driver profiles will continue to be tested and maintained for SLI-ready RTX 20 Series and earlier GPUs. For GeForce RTX 3090 and future SLI-capable GPUs, SLI will only be supported when implemented natively within the game.

[Natively supported] DirectX 12 titles include Shadow of the Tomb Raider, Civilization VI, Sniper Elite 4, Gears of War 4, Ashes of the Singularity: Escalation, Strange Brigade, Rise of the Tomb Raider, Zombie Army 4: Dead War, Hitman, Deus Ex: Mankind Divided, Battlefield 1, and Halo Wars 2.

[Natively supported] Vulkan titles include Red Dead Redemption 2, Quake 2 RTX, Ashes of the Singularity: Escalation, Strange Brigade, and Zombie Army 4: Dead War.

… Many creative and other non-gaming applications support multi-GPU performance scaling without the use of SLI driver profiles. These apps will continue to work across all currently supported GPUs as it does today.”

It looks bleak for SLI’s future and dev-supported mGPU titles are beyond rare. So we tested our 40-game benching suite and identified just nine games that scaled well with RTX 2080 Ti. Of the baker’s dozen games that NVIDIA lists and that we have, Civilization VI using the ‘Gathering Storm’ expansion benchmark did not scale, and Red Dead Redemption 2 crashed when we tried to use it. The other games on their list are old and run great on any modern GPU negating any reason to use SLI anyway, except perhaps for extreme supersampling.

We didn’t bother listing the performance of games that barely scale, scale negatively, or exhibit issues when SLI is enabled. That list is very long. Of course, there are still SLI enthusiasts who tweak their games with NVIDIA Inspector and roll back to old drivers to indulge their hobby – but we use the latest drivers without tediously trying workarounds that may or may not be successful.

Build-Gaming-Computers.com was able to identify a total of 57 games (out of many thousands) that scaled well or partially with SLI in 2020, and they have concluded that overall it isn’t worth the trouble or the expense of maintaining two finicky cards using an extra-large PSU. However, here are nine relatively modern games that we tested that show SLI scaling without a lot of microstutter or other issues associated with them.

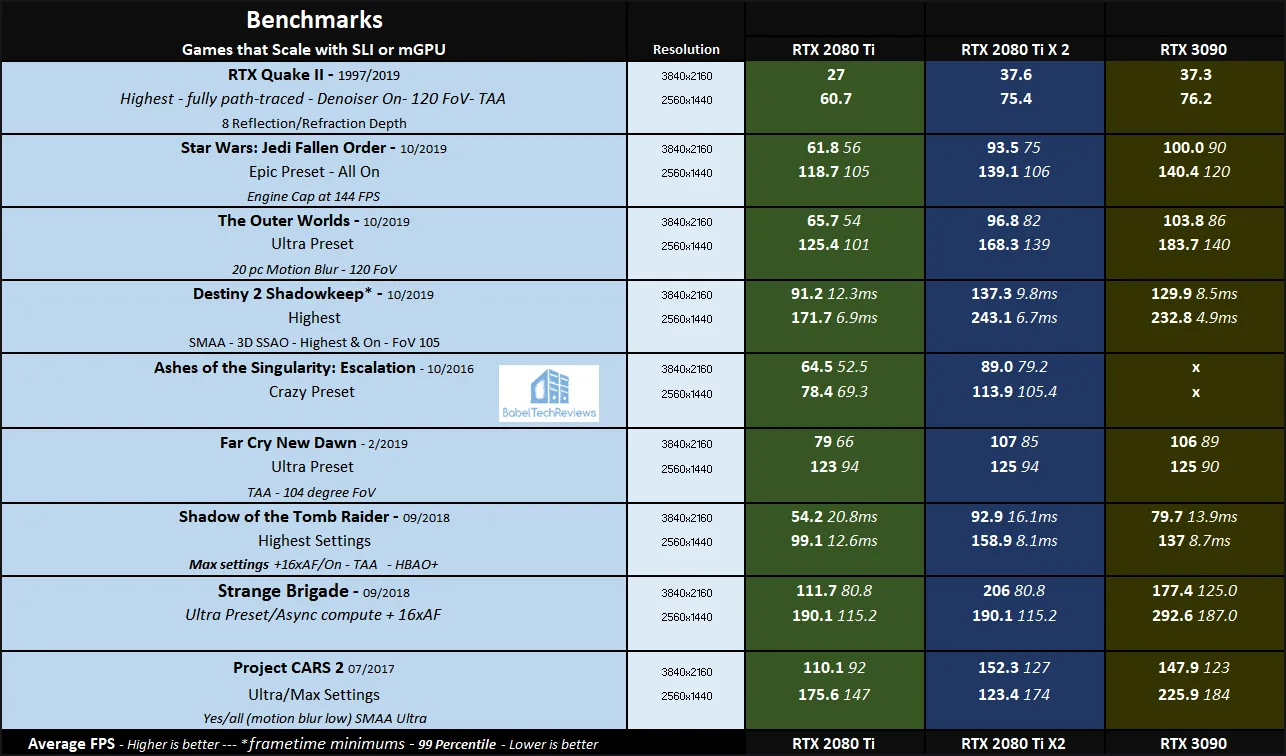

SLI Gaming Summary Charts

Here are the summary charts of 9 games and 3 synthetic tests that scale with mGPU or SLI. The highest settings are always chosen and the settings are listed on the chart. The benches were run at 2560×1440 and at 3840×2160. The first column represents the performance of a single RTX 2080 Ti, the second represents two RTX 2080 Tis, and the third column gives RTX 3090 results. ‘X’ means the game was not tested.

Most results show average framerates, and higher is better. Minimum framerates are next to the averages in italics and in a slightly smaller font. Destiny 2, benched with OCAT, shows average framerates, but the minimums are expressed by frametimes (99th-percentile) in ms where lower is better.
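To make the two kinds of numbers on the charts easier to compare, here is a minimal sketch of how an average framerate and a 99th-percentile frametime can be derived from a list of per-frame times. It assumes the nearest-rank percentile method, which may differ from what OCAT computes internally, and the sample capture is hypothetical rather than taken from our runs.

```python
import math

def percentile_frametime_ms(frametimes_ms, pct=99.0):
    """Return the pct-th percentile frametime using the nearest-rank method."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime sample")
    ordered = sorted(frametimes_ms)
    # 1-based rank of the sample at or above the requested percentile
    rank = math.ceil(pct * len(ordered) / 100.0)
    return ordered[max(rank, 1) - 1]

def average_fps(frametimes_ms):
    """Average framerate = frames rendered divided by total seconds elapsed."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)

def ms_to_fps(frametime_ms):
    """Convert one frametime in milliseconds to the equivalent framerate."""
    return 1000.0 / frametime_ms

# Hypothetical capture: 98 smooth frames at 10 ms plus two slow frames.
sample = [10.0] * 98 + [20.0, 25.0]
p99 = percentile_frametime_ms(sample)   # the slow-frame figure, lower is better
avg = average_fps(sample)               # the headline figure, higher is better
```

A lower 99th-percentile frametime means fewer noticeable hitches, which is why it is a better indicator of microstutter than the average alone.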

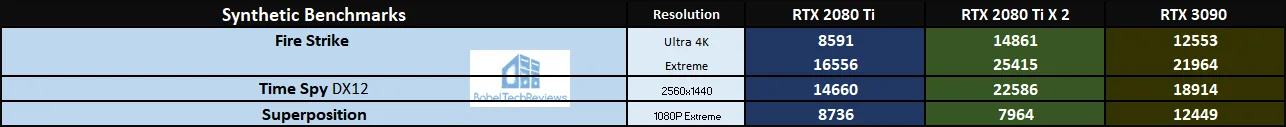

Although SLI scaling is good with these nine games at 4K, there are some issues at 2560×1440 and with framerate caps. We would prefer to play these nine games with an RTX 3090 that has no issues with microstutter. However, synthetic benches look pretty good.
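For readers who want to work out scaling from the chart columns themselves, the following is a small sketch of the arithmetic. The framerates in the example are hypothetical placeholders, not results from our charts, and the function name is our own.

```python
def sli_scaling(single_fps, dual_fps):
    """Return (speedup, efficiency) for a second card.

    speedup    = dual-card framerate divided by single-card framerate
    efficiency = fraction of the ideal 100% gain actually delivered by
                 the second card; negative values mean negative scaling
    """
    if single_fps <= 0:
        raise ValueError("single_fps must be positive")
    speedup = dual_fps / single_fps
    efficiency = speedup - 1.0
    return speedup, efficiency

# Hypothetical example: 60 FPS on one card, 105 FPS with two.
good_speedup, good_efficiency = sli_scaling(60.0, 105.0)

# Hypothetical example of negative scaling: the second card costs performance.
bad_speedup, bad_efficiency = sli_scaling(60.0, 54.0)
```

Perfect scaling would give a speedup of 2.0 and an efficiency of 1.0, and anything at or below a speedup of 1.0 means the second card is not helping.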

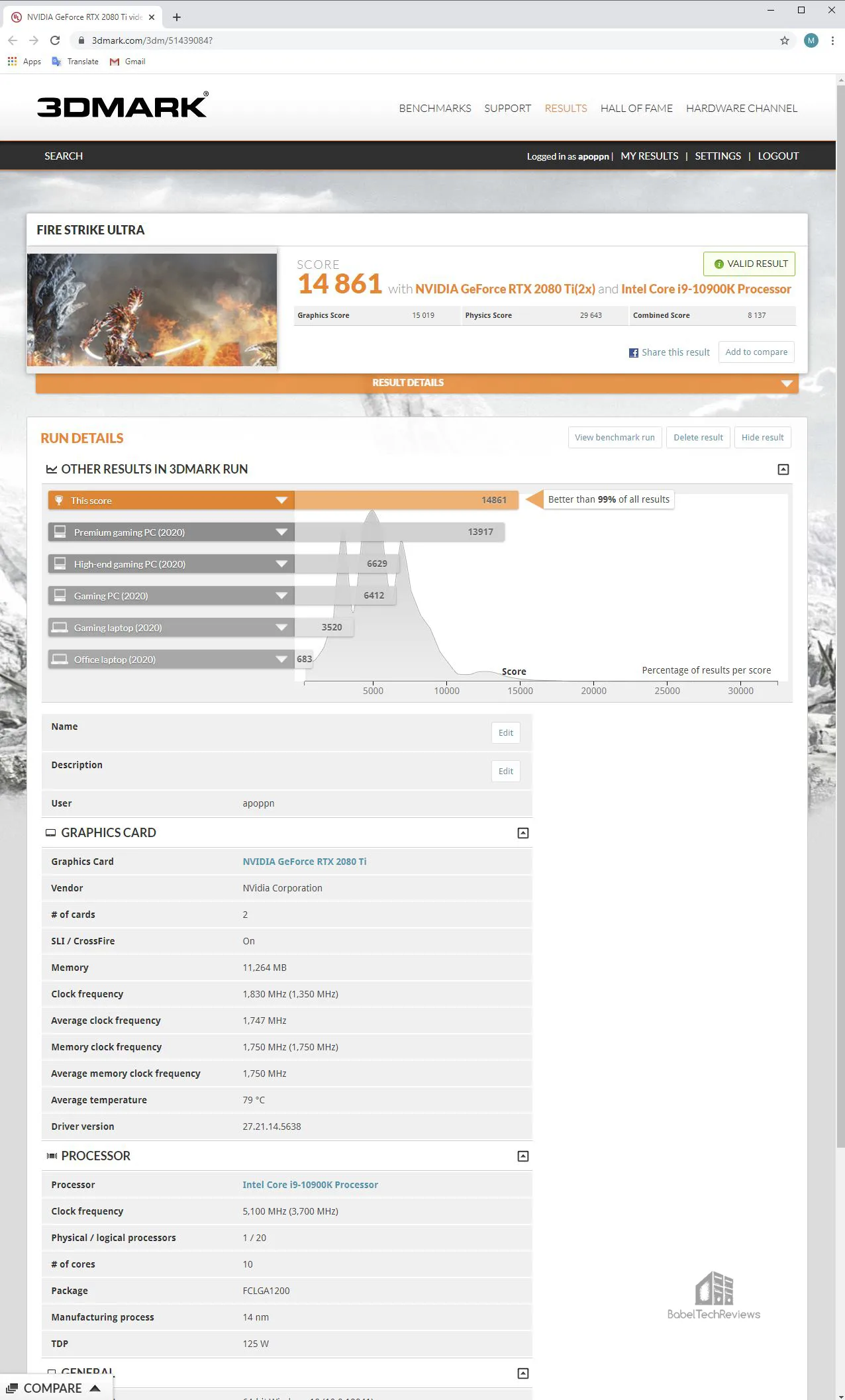

Even though the RTX 2080 Ti has been surpassed by both the RTX 3080 and the RTX 3090, our PC scored in the top 1% of all PCs using Fire Strike Ultra.

If you are a professional overclocker and/or want to set a world record, we would suggest buying two RTX 3090s for that purpose instead of using any two Turing video cards.

We cannot recommend SLI to any gamer unless they have a very large library of old(er) games that they revisit and play regularly and who don’t mind the issues associated with tweaking and maintaining SLI profiles using old drivers. Then there is the added inconvenience of disabling SLI each time most modern games are played. Besides, there are the additional issues of heat and noise coupled with using two powerful cards with a large PSU, not to mention the expense of buying a second card, and the higher cooling power bills associated with using SLI during the warm months of Summer.

So let’s look at Creative applications next to see if 2 x RTX 2080 Tis are a viable option versus the RTX 3090 starting with the Blender benchmark.

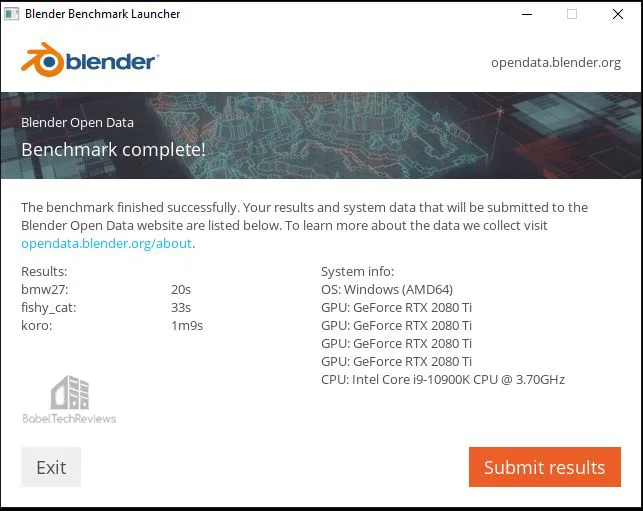

Blender 2.90 Benchmark

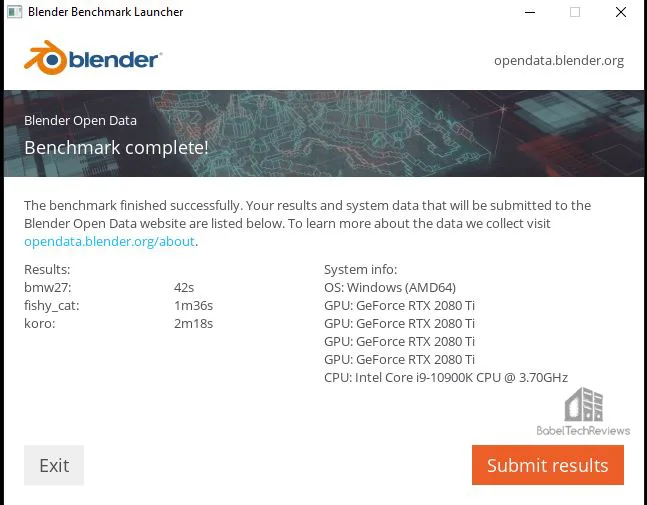

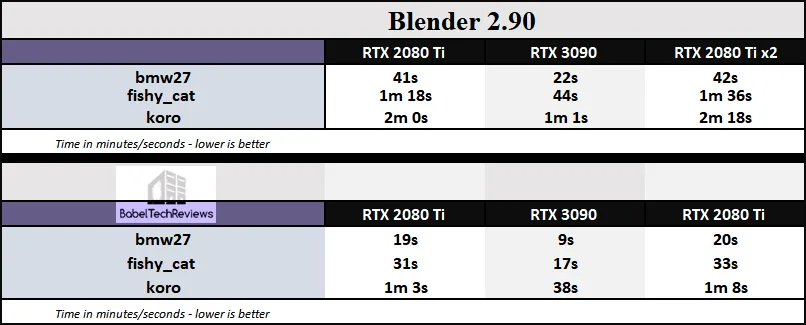

Blender is a very popular open source 3D content creation suite. It supports every aspect of 3D development with a complete range of tools for professional 3D creation. We will look at Blender rendering later in this review, but here are the official benchmark results.

For the following results, lower is better as the benchmark renders a scene multiple times and gives the results in minutes and seconds. First up, two RTX 2080 Tis using the RTX TITAN NVLink bridge with CUDA.

Next we try OptiX using the two Tis.

There is no difference with SLI enabled or disabled. Here is the chart comparing the performance of a single RTX 2080 Ti with two versus an RTX 3090.

Performance is worse using the second RTX 2080 Ti as the benchmark is not optimized for a second video card. However, we will try to render a large scene in Blender as we show later.

Next we look at the OctaneBench.

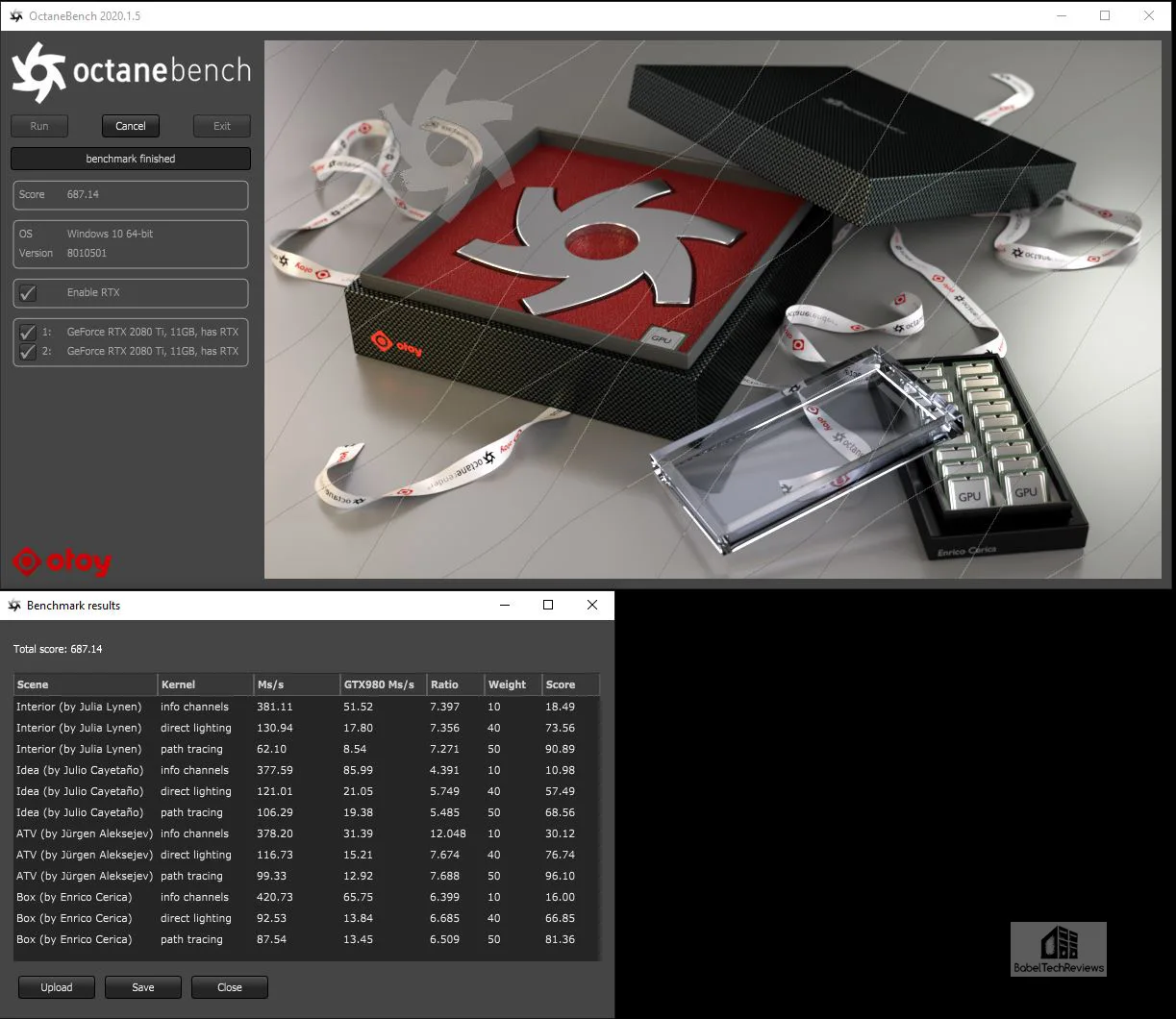

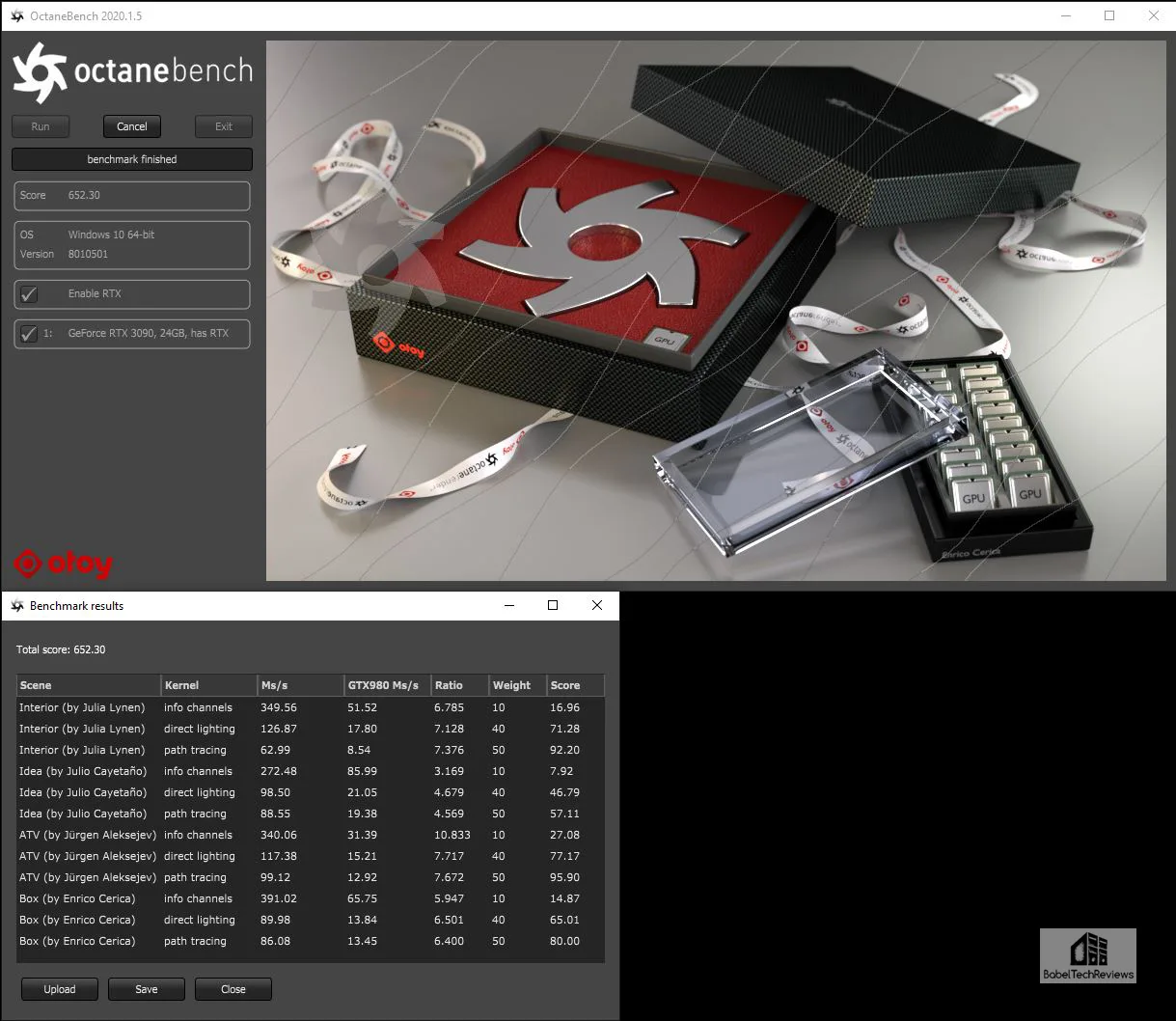

OTOY Octane Bench

OctaneBench allows you to benchmark your GPU using OctaneRender. The hardware and software requirements to run OctaneBench are the same as for OctaneRender Standalone and we shall also use OctaneRender for a specific rendering test later, under “Professional Apps”.

First we run OctaneBench 2020.1 for Windows, and here are the two NVLinked RTX 2080 Tis’ complete results and overall score of 687.14.

We run OctaneBench 2020.1 again and here are the RTX 3090’s complete results and overall score of 652.30.

This is a win for the two linked RTX 2080 Tis, which scale well here. Here is the summary chart.
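As a quick check on how large that win is, the sketch below computes the relative lead from the two overall OctaneBench scores quoted above. Only the two scores come from our runs; the helper name is ours.

```python
def relative_lead_percent(score_a, score_b):
    """Return how much higher score_a is than score_b, in percent."""
    return (score_a / score_b - 1.0) * 100.0

DUAL_2080TI_SCORE = 687.14   # two NVLinked RTX 2080 Tis, OctaneBench 2020.1
RTX_3090_SCORE = 652.30      # single RTX 3090, OctaneBench 2020.1

lead = relative_lead_percent(DUAL_2080TI_SCORE, RTX_3090_SCORE)
print(f"Linked RTX 2080 Tis lead the RTX 3090 by {lead:.1f}%")  # roughly 5.3%
```

In other words, two linked cards finish only about five percent ahead of the single RTX 3090 in this benchmark.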

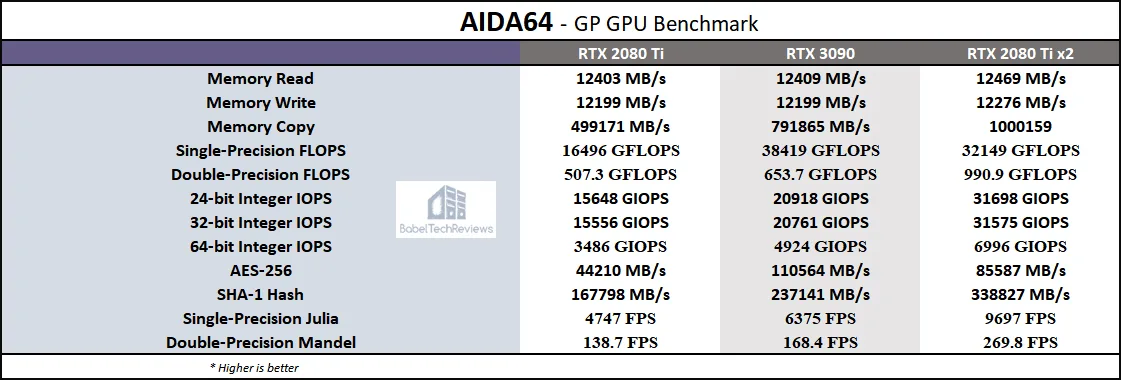

Next, we move on to AIDA64 GPGPU synthetic benchmarks that are built to scale with mGPU.

AIDA64 v6.25

AIDA64 is an important industry tool for benchmarkers. Its GPGPU benchmarks measure performance and give scores to compare against other popular video cards.

AIDA64’s benchmark code methods are written in Assembly language, and they are generally optimized for popular AMD, Intel, NVIDIA and VIA processors by utilizing their appropriate instruction set extensions. We use the Engineer’s full version of AIDA64 courtesy of FinalWire. AIDA64 is free to try and use for 30 days. CPU results are also shown for comparison with the video cards’ GPGPU benchmarks.

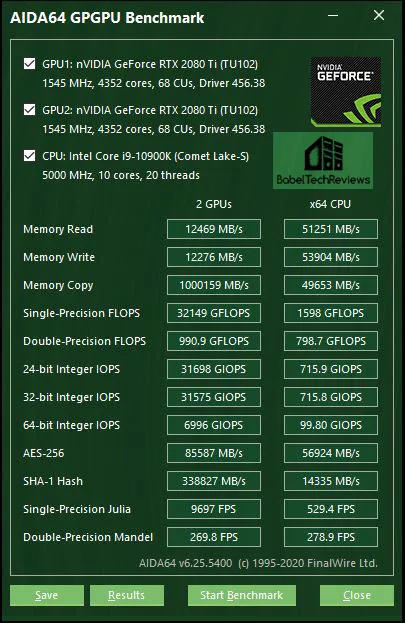

First the results with a pair of RTX 2080 Tis.

Now the RTX 3090:

Here is the chart summary of the AIDA64 GPGPU benchmarks with the RTX 2080 Ti, the RTX 3090, and NVLinked RTX 2080 Tis side-by-side.

Again the pair of linked RTX 2080 Tis is faster than the RTX 3090 at almost all of AIDA64’s GPGPU benchmarks. So let’s look at Sandra 2020, which is also optimized for mGPU.

SiSoft Sandra 2020

To see where the CPU, GPU, and motherboard performance results differ, there is no better comprehensive tool than SiSoft’s Sandra 2020. SiSoftware SANDRA (the System ANalyser, Diagnostic and Reporting Assistant) is an excellent information & diagnostic utility in a complete package. It is able to provide all the information about your hardware, software, and other devices for diagnosis and for benchmarking.

There are several versions of Sandra including a free version of Sandra Lite that anyone can download and use. Sandra 2020 R10 is the latest version, and we are using the full engineer suite courtesy of SiSoft. Sandra 2020 features continuous monthly incremental improvements over earlier versions of Sandra. It will benchmark and analyze all of the important PC subsystems and even rank your PC while giving recommendations for improvement.

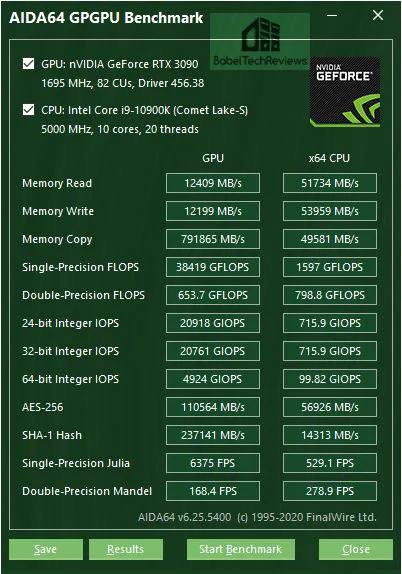

We ran Sandra’s extensive GPGPU benchmarks and charted the results summarizing them below. The performance results of the RTX 2080 Ti are compared with the performance results of the RTX 3090, and versus the two linked RTX 2080 Tis.

In Sandra synthetic GPGPU benchmarks which are optimized for mGPU, the linked RTX 2080 Tis are faster than the RTX 3090 and they generally scale well over a single Ti. Next we move on to SPECworkstation 3 GPU benchmarks.

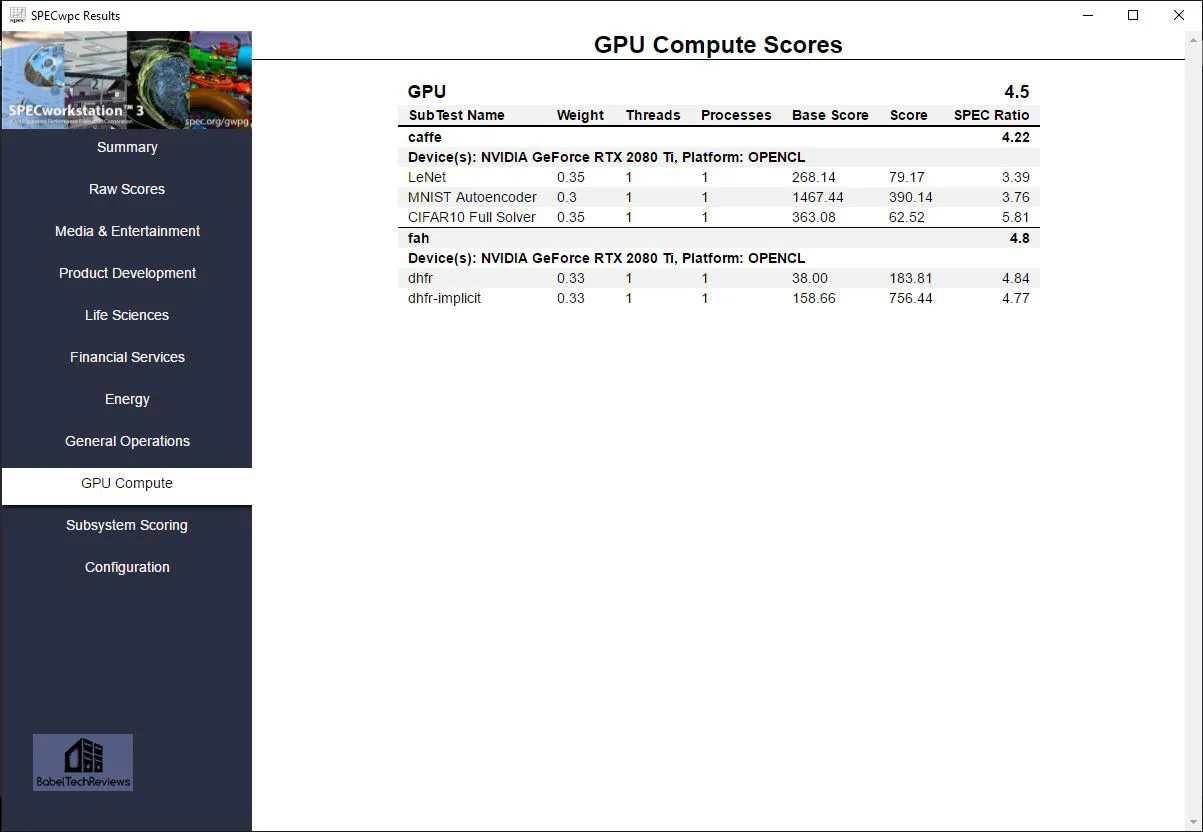

SPECworkstation3 (3.0.4) Benchmarks

All the SPECworkstation 3 benchmarks are based on professional applications, most of which are in the CAD/CAM or media and entertainment fields. All of these benchmarks are free except to vendors of computer-related products and/or services.

The most comprehensive workstation benchmark is SPECworkstation 3. It’s a free-standing benchmark which does not require ancillary software. It measures GPU, CPU, storage and all other major aspects of workstation performance based on actual applications and representative workloads. We only tested the GPU-related workstation performance. We did not use SPECviewperf 13 since SPECviewperf 2020 is coming out in mid-October.

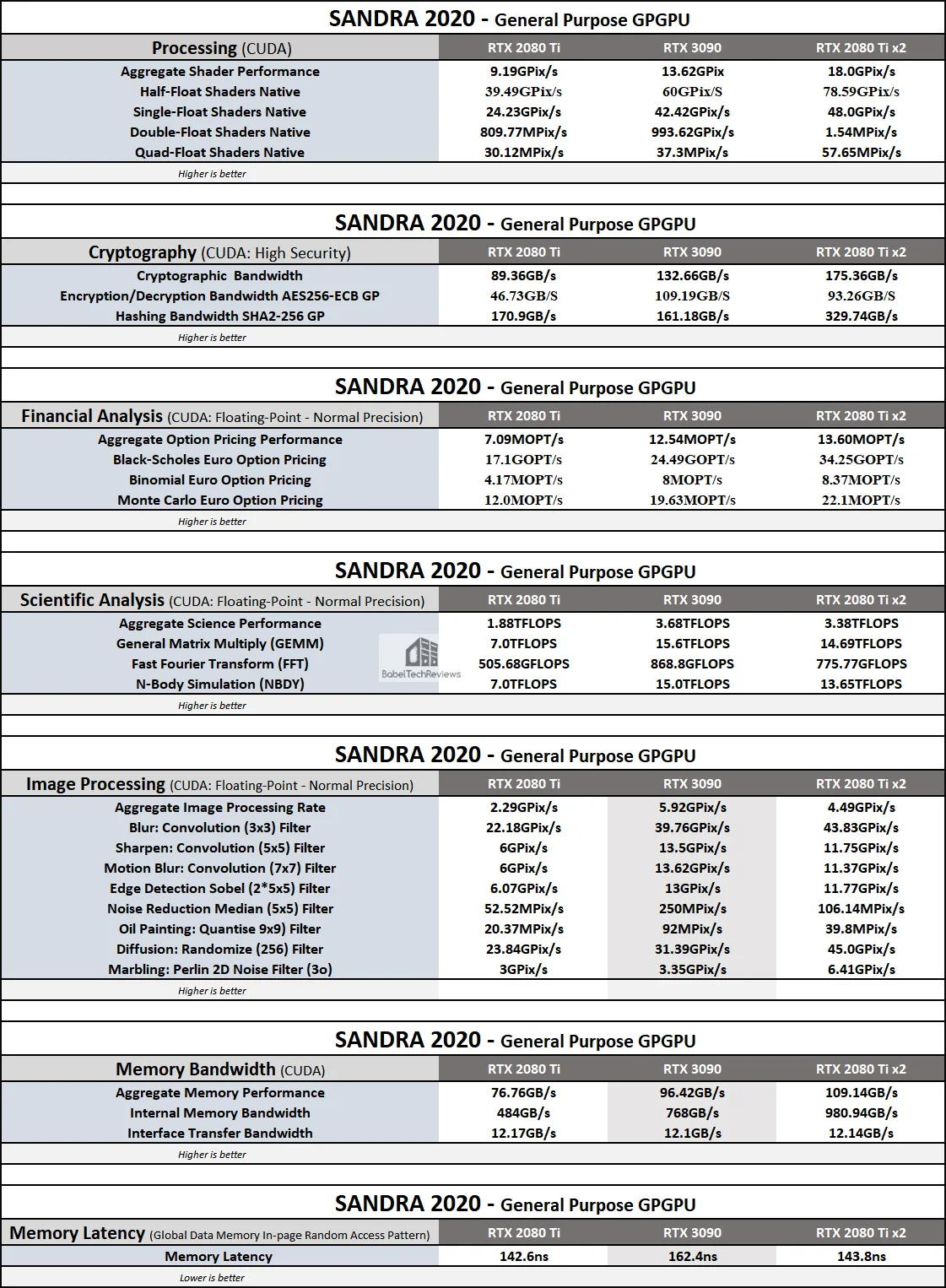

Here are the SPECworkstation3 results for two linked RTX 2080 Tis. Higher is better since we are comparing scores.

Here are the SPECworkstation3 GPU benches summarized.

The RTX 3090 was unable to complete two benches, probably because of a conflict with Ampere’s new drivers. But the NVLinked RTX 2080 Tis show either no scaling whatsoever or negative scaling. So we questioned the people who are responsible for maintaining the SPECworkstation benchmarks:

Q: I am comparing its SPECworkstation results with 2 X RTX 2080 Ti that are connected using a RTX Titan NVLink HB Bridge. Are using two GPUs in this manner supported by the benchmark?

A: The short answer is “no”, it will not produce the desired scaling effect if you bridge the two cards. The longer answer has more to do with your expectations and that the benchmark does not explicitly do anything to preclude multi-GPU scenarios from improving support, but it does not have any code that explicitly enables it.

The graphics portions of SPECworkstation come from SPECviewperf which, in turn, is based on recordings of real-world applications. The creation of a rendering context to draw 3D scenes is done in a way that tries to very closely mimic the real-world application and thus, if the real-world application would benefit from multiple GPUs, so might the viewsets that comprise the benchmark.

The GPU compute portions of SPECworkstation run on only a single GPU. We are working toward multi-GPU support in the next major version but it’s not in there now.

So mGPU scaling may depend on whether a benchmark is optimized for it or not. However, let’s next look at some professional applications where a large memory buffer makes a big performance improvement over having a smaller one.

Creative Applications with Large Memory Workloads

Rendering large models, detailed scenes, and high-resolution textures requires powerful GPUs with a lot of vRAM. Render artists producing the highest quality renders require high-capacity GPU memory, which allows them to create more detailed final frame renders without needing to reduce the quality of their final output or to split scenes into multiple renders, which takes a lot of extra time. Until now, no GeForce has been equipped with 24GB of vRAM, while the RTX 2080 Ti offers 11GB. Let’s look at three pro apps that can use much more than 11GB and also test render times. First up is OTOY OctaneRender.

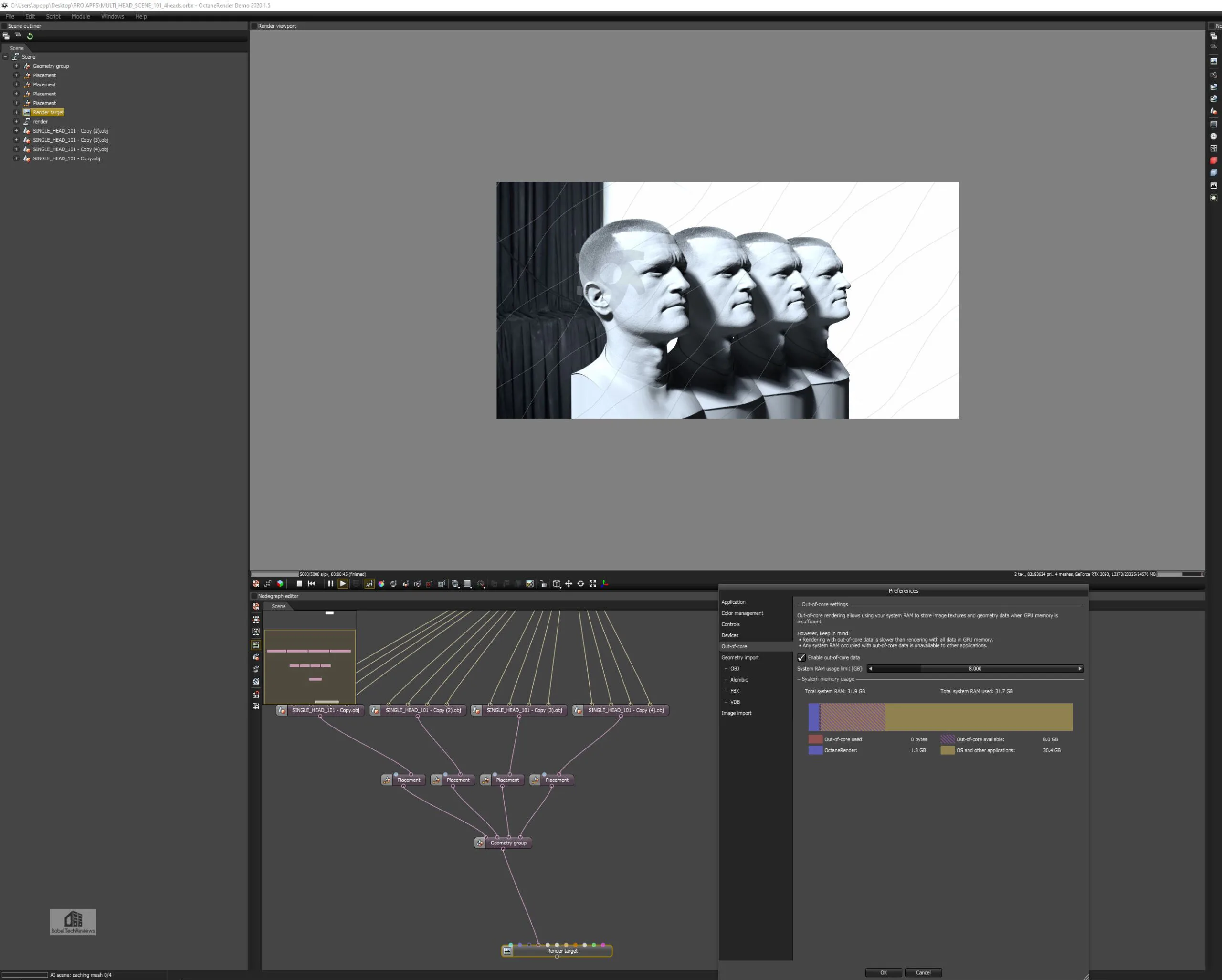

OctaneRender

OctaneRender is the world’s first spectrally correct GPU render engine with built-in RTX ray tracing GPU hardware acceleration. The RTX 3090 allows large scenes to fit completely into its 24GB of GPU memory so out-of-core rendering is not necessary, providing faster rendering times than GPUs with less memory that must use out-of-core data. We tested the RTX 3090/24GB against the bridged RTX 2080 Tis.

Following NVIDIA’s very specific instructions, we rendered a very large detailed image. Looking closely, we see that out-of-core data was not needed since the entire render fit into the 24GB vRAM buffer, and the large image provided only took 45 seconds to render.

We tested the NVLinked RTX 2080 Tis, and the render took much longer at 2 minutes and 27 seconds because it requires much slower out-of-core memory. The 11GB of vRAM on each of the RTX 2080 Tis is evidently not pooled for this render.
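The difference between pooled and unpooled memory can be sketched with a simple capacity check. This is a deliberately simplified model under stated assumptions: the 18GB scene size is hypothetical (we did not measure the exact footprint of the test scene), and real renderers also reserve vRAM for their own overhead, which this ignores.

```python
def usable_vram_gb(per_card_gb, cards=1, pooled=False):
    """Memory a scene can occupy before out-of-core data is needed.

    Without pooling, every card needs its own full copy of the scene,
    so the limit is a single card's vRAM no matter how many are linked.
    """
    return per_card_gb * cards if pooled else per_card_gb

def fits_in_vram(scene_gb, per_card_gb, cards=1, pooled=False):
    """True when the whole scene fits without falling back to system RAM."""
    return scene_gb <= usable_vram_gb(per_card_gb, cards, pooled)

SCENE_GB = 18.0  # hypothetical scene size, for illustration only

on_3090 = fits_in_vram(SCENE_GB, 24.0)                               # single RTX 3090
on_pair_unpooled = fits_in_vram(SCENE_GB, 11.0, cards=2)             # what we observed
on_pair_pooled = fits_in_vram(SCENE_GB, 11.0, cards=2, pooled=True)  # the 22GB hope
```

Under this model a scene between 11GB and 22GB only avoids out-of-core rendering on the linked cards if the application actually pools their memory.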

However, a pair of RTX 2080 Tis are faster than a single card and the results are summarized in the chart below.

So it appears that two linked RTX 2080 Tis are faster than one in OTOY rendering. Let’s look at Blender next.
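Using the two render times reported above (45 seconds for the RTX 3090 and 2 minutes 27 seconds for the linked RTX 2080 Tis), this short sketch works out the gap between them. The helper names are ours.

```python
def to_seconds(stamp):
    """Convert a 'minutes:seconds' render time such as '2:27' to seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)

def times_faster(slow_stamp, fast_stamp):
    """Return how many times faster the quicker render finished."""
    return to_seconds(slow_stamp) / to_seconds(fast_stamp)

RTX_3090_TIME = "0:45"      # entire scene fits in the 24GB framebuffer
DUAL_2080TI_TIME = "2:27"   # needs out-of-core data

ratio = times_faster(DUAL_2080TI_TIME, RTX_3090_TIME)
print(f"RTX 3090 finished the render {ratio:.2f}x faster")  # about 3.27x
```

So while the second RTX 2080 Ti helps over a single card, the RTX 3090 still finishes this particular scene in under a third of the time.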

Blender

Blender is a popular free open source 3D creation suite that supports modeling, rigging, animation, simulation, rendering, compositing, motion tracking, video editing, and the 2D animation pipeline. NVIDIA’s OptiX accelerated rendering in Blender Cycles is used to accelerate final frame rendering and interactive ray-traced rendering in the viewport, giving creators real-time feedback without the need to perform time-consuming test renders. The 24GB framebuffer on the RTX 3090 allows it to perform final frame renders together with interactive renders that may fail due to the smaller vRAM framebuffer on the RTX 3080 or due to issues with linked RTX 2080 Tis.

This large render took 31.24 seconds using the RTX 3090, but it caused an error when we tried fitting the scene into the linked RTX 2080 Tis’ framebuffer, and the render could not complete, as shown below.

However, it did render with a single RTX 2080 Ti, and here is the summary chart.

So in regard to mGPU and Blender rendering, it appears that “it depends”.

Finally, we looked at Blackmagic Design DaVinci Resolve and 8K Redcode RAW projects.

Blackmagic Design DaVinci Resolve | 8K Redcode RAW projects

Blackmagic’s DaVinci Resolve combines professional 8K editing, color correction, visual effects and audio post production into one software package. 8K projects featuring 8K REDCODE Raw (R3D) files will use most of the memory available on an RTX 3080, which results in out-of-memory errors, particularly when intensive effects are added. Indeed, the RTX 3090/24GB was able to perform a very intensive LFB project quickly using an 8K R3D RED CAMERA clip on an 8K timeline with a temporal noise reduction processing effect applied. In contrast, the RTX 3080 and a pair of RTX 2080 Tis just generated error messages, which means that we would have to work around them, taking a lot of extra time and effort. There is really no quantitative benchmark here.

Older single cards – the RTX 2080 Ti and the TITAN Xp – can run many of these workloads with various degrees of success without errors, but they are much slower than the RTX 3090.

After seeing the totality of these benches, creative users will probably prefer to upgrade their existing systems with a new RTX 3090 based on the performance increases and the associated increases in productivity that they require. The question of whether to buy the RTX 3090 or a second RTX 2080 Ti should probably be based on the workflow and requirements of each user as well as their budget. Time is money depending on how these apps are used. If a professional needs a lot of framebuffer, the RTX 3090 is a logical choice. Hopefully the benchmarks that we ran will help you decide.

Let’s head to our conclusion.

Conclusion

This has been an enjoyable exploration evaluating the Ampere RTX 3090 versus a pair of NVLinked RTX 2080 Tis – the RTX 2080 Ti was formerly the fastest gaming card in the world. Overall, the RTX 3090 totally blows away its other competitors and it is much faster at almost everything we threw at it. The RTX 3090 at $1499 is the upgrade from a (formerly) $1199 RTX 2080 Ti since a $699 RTX 3080 gives about 20-25% improvement in 4K gaming. If a gaming enthusiast wants the very fastest card – just as the RTX 2080 Ti was for the past two years – and doesn’t mind the $300 price increase, then the RTX 3090 is the only choice.

Forget RTX 2080 Ti SLI as it is legacy, finicky, and requires workarounds with most games to get it to scale at all. SLI gaming uses too much power, puts out extra heat and noise, and it works mostly with older games – but if you are willing to tweak them and use older drivers and don’t mind some frametime instability it may be an option. Native mGPU is supported by so few game devs that it is almost non-existent.

For pro apps and rendering, using two RTX 2080 Tis with an NVLink high bandwidth bridge is somewhat hit or miss. Some applications support it well while others have issues with it unless they have specific support for it. Some very creative users who are able to do their own programming may be able to work around these issues, but a general creative app user should probably skip adding a second card and use a single more powerful card instead. And if you are looking to set benchmarking world records, pick a pair of RTX 3090s instead and put your system on LN2.

The Verdict:

Skip mGPU unless you are willing to put up with its idiosyncrasies and are very skilled at working around them, or unless it fits your particular requirements. This is BTR’s last SLI/mGPU review for the foreseeable future. We are going to send our EVGA RTX 2080 Ti XC to Rodrigo for his future driver performance analyses so he can compare the Turing RTX 2080 Ti with the Ampere RTX 3080. He will post a GeForce 456.71 driver analysis using an RTX 3080 soon.

Stay tuned, there is a lot more on the way from BTR. Mario has upgraded his CPU platform from a quad-core i7-6700K to an i9-10850K and will have a Destiny 2 comparison between the two platforms shortly. We will also have a very special review for you soon that we just cannot talk about yet. And Sean is already working on his next VR sim review! Stay tuned to BTR.

Happy Gaming!