Both AMD and Nvidia recommend their respective multi-GPU solutions as ideal for increasing performance when a single card is not enough. Nvidia has recently recognized the futility of using more than two GPUs in SLI for effectively scaling gaming performance, and they no longer officially support Tri-SLI. In contrast, AMD still recommends CrossFiring up to 4 video cards, and they support mixed cards for increasing performance.

At Computex, AMD recently announced its upcoming $499 Polaris, a dual GPU card meant to take on the GTX 1070 and the GTX 1080. So it is very relevant to ask – just how useful is CrossFire or SLI for increasing performance with today’s games?

We are going to test 25 modern PC games with both SLI and CrossFire to evaluate scaling with 290X CrossFire as well as with GTX 980 and GTX 980 Ti SLI. The results may surprise you, as these games are mostly AAA titles from 2015 and 2016, including 3 DX12 games.

This multi-GPU analysis will examine the performance of 25 PC games using the latest Crimson Software 16.5.3 drivers which were released late last month. We will compare R9 290X CrossFire performance versus GTX 980 Ti and GTX 980 SLI using the latest GeForce drivers. We are also going to benchmark multi-GPU using our latest DX12 benchmarks, Ashes of the Singularity, Rise of the Tomb Raider, and Hitman, as well as our latest DX11 games including DOOM.

We will also compare single GPU performance using the GTX 1080 and GTX 1070 as well as the Fury X, versus 290X CrossFire and GTX 980/980 Ti SLI. BTR’s The Big Picture, once reserved only for video card reviews, is also included to give you a total of 12 video card configurations on very recent drivers. Here we are going to give you the performance results of our cards at 1920×1080, 2560×1440, and at 3840×2160, using 25 games and 1 synthetic test.

Our testing platform is a very recent clean install of Windows 10 64-bit Home Edition. We are using an Intel Core i7-4790K set in the BIOS to turbo all cores to 4.4GHz, an ASUS Z97-E motherboard, and 16GB of Kingston “Beast” HyperX RAM at 2133MHz. The settings and hardware are identical except for the drivers being tested.

With the R9 280X and above, we generally test at higher settings and at higher resolutions than we use for mid-range and lower-end cards. All of our games are now tested at three resolutions at 60Hz: 3840×2160, 2560×1440, and 1920×1080, except with the 280X and the GTX 970, which are not strong enough for 4K. We use DX11 whenever possible, with a very strong emphasis on the latest DX11 and DX12 games.

Let’s get right to the test configuration and then to our results.

Test Configuration

Test Configuration – Hardware

- Intel Core i7-4790K (reference 4.0GHz, Hyper-Threading and Turbo Boost are on, up to 4.4GHz; DX11 CPU graphics), supplied by Intel.

- ASUS Z97-E motherboard (Intel Z97 chipset, latest BIOS, PCIe 3.0 specification, CrossFire/SLI 8x+8x)

- Kingston 16 GB HyperX Beast DDR3 RAM (2×8 GB, dual-channel at 2133MHz, supplied by Kingston)

- GTX 1080, 8GB, Founder’s Edition, reference clocks, supplied by Nvidia

- GTX 1070, 8GB Founder’s Edition, reference clocks, supplied by Nvidia

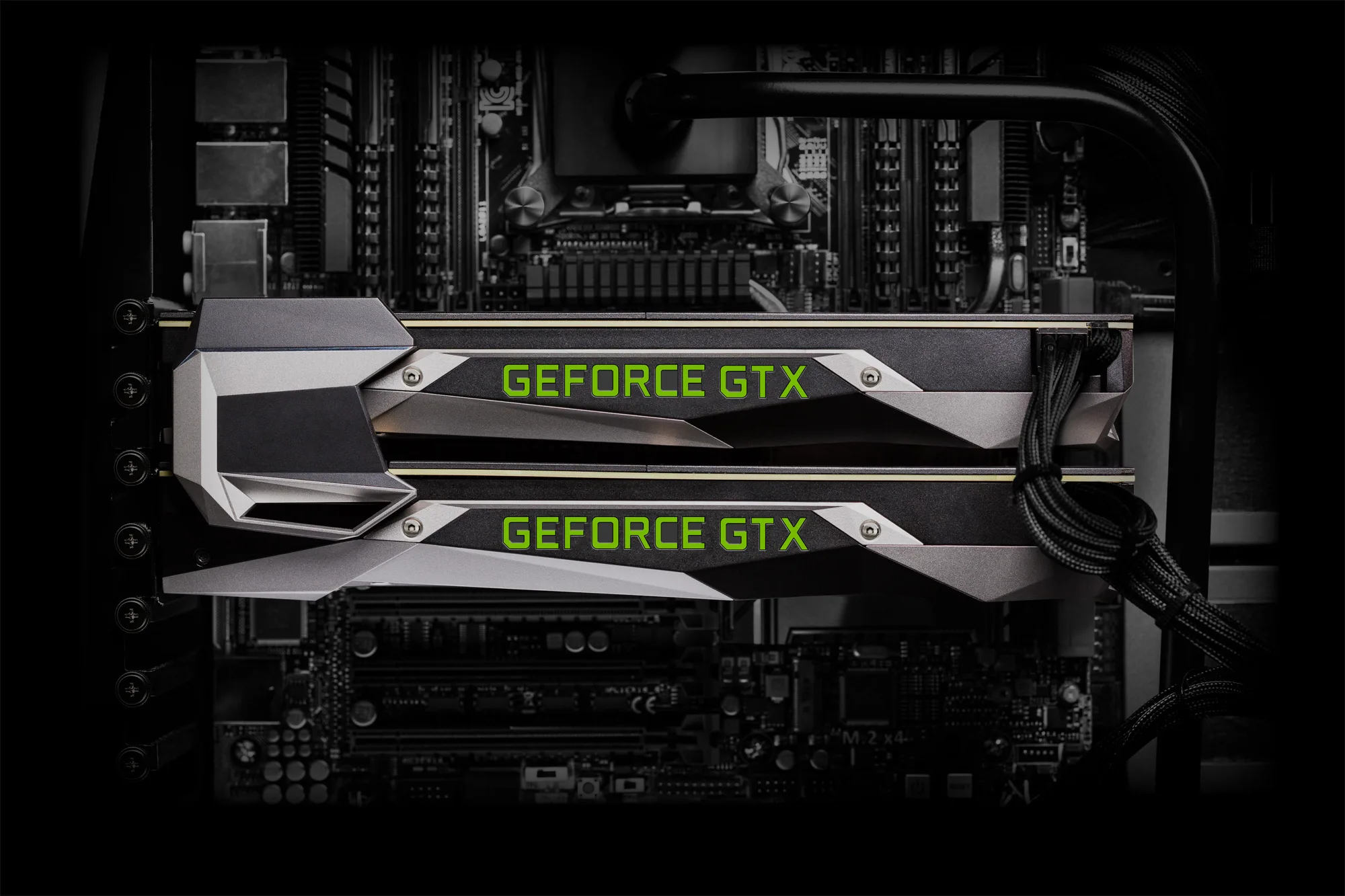

- GeForce GTX 980 Ti, 6GB in SLI and also tested as single GPU, reference clocks, supplied by Nvidia

- EVGA GTX 980 Ti SC, 6GB in SLI and also tested as single GPU, at reference clocks, supplied by EVGA

- 2 x GeForce GTX 980, 4GB, reference clocks, in SLI and also tested as single GPU, supplied by Nvidia

- GALAX GTX 970 EXOC 4GB, GALAX factory overclock, supplied by GALAX

- PowerColor R9 Fury X 4GB, at reference clocks.

- VisionTek R9 290X 4GB, reference clocks, in CrossFire and also tested as single GPU; fan set to 100% to prevent throttling.

- PowerColor R9 290X, 4GB, reference clocks, in CrossFire; fan set to 100% to prevent throttling.

- PowerColor R9 280X, 3GB, reference clocks, supplied by PowerColor.

- Two 2TB Toshiba 7200 rpm HDDs

- EVGA 1000G 1000W power supply unit

- Cooler Master 2.0 Seidon, supplied by Cooler Master

- Onboard Realtek Audio

- Genius SP-D150 speakers, supplied by Genius

- Thermaltake Overseer RX-I full tower case, supplied by Thermaltake

- ASUS 12X Blu-ray writer

- Monoprice Crystal Pro 4K

Test Configuration – Software

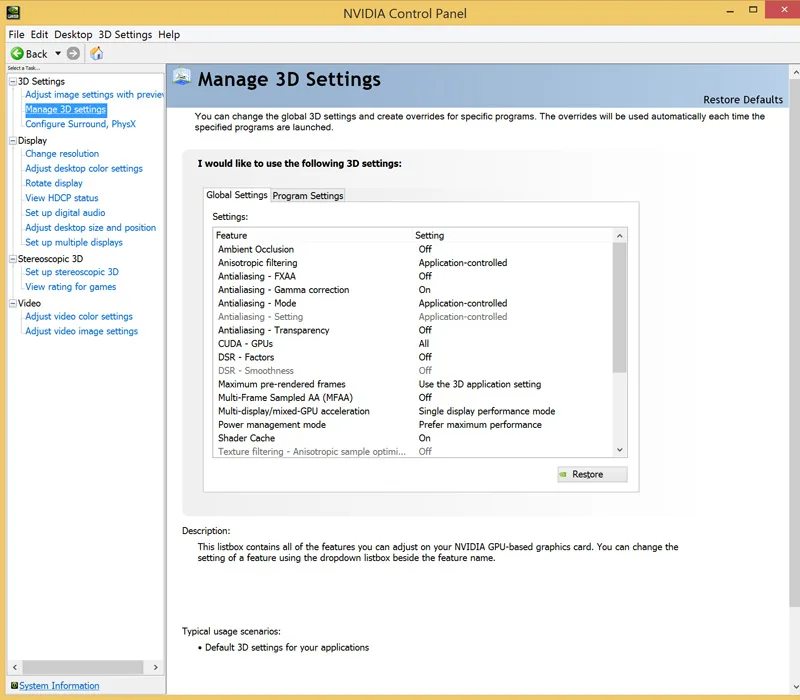

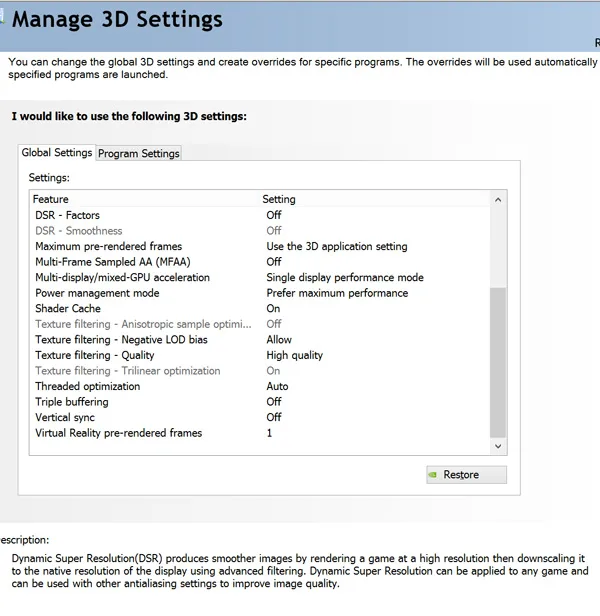

- Nvidia’s GeForce GTX 1080 Launch Drivers 368.13 were used for the GTX 1080 and the TITAN X; and GTX 1070 368.19 launch drivers were used to benchmark the GTX 1070. The same family of GeForce drivers, the latest WHQL public drivers GeForce 368.22, were used for GTX 980 Ti and the GTX 980 – including for SLI – and also for the GTX 970 EXOC as noted on the Big Picture chart. High Quality, prefer maximum performance, single display.

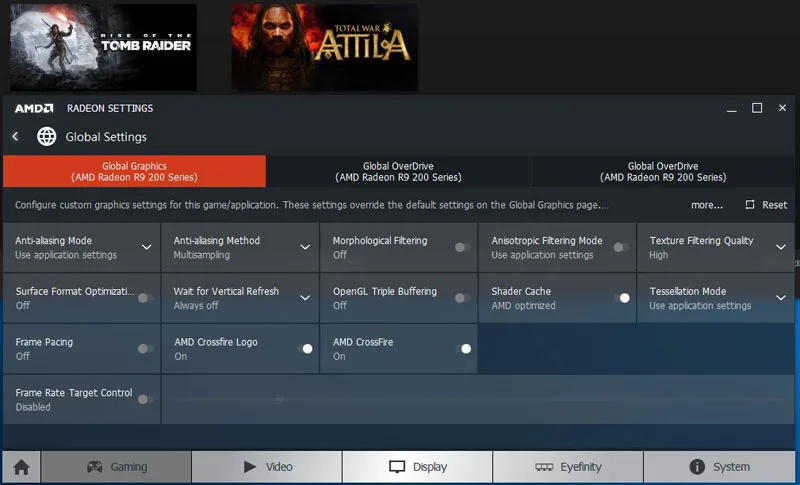

- The AMD Crimson Software 16.5.3 beta hotfix driver was used for benching the Fury X, the 290X and for 290X CrossFire, and 16.5.2.1 Hotfix was used for the 280X. Global settings are noted below after the benchmark suite.

- VSync is off in the control panel.

- AA enabled as noted in games; all in-game settings are specified with 16xAF always applied

- All results show average frame rates; minimum frame rates are shown in italics and in a smaller font next to the averages on the chart.

- Highest quality sound (stereo) used in all games.

- Clean install of Windows 10 64-bit Home edition with the latest DirectX. All DX11 titles were run under DX11 render paths, and our DX12 titles were run under the DX12 render path.

- All games are patched to their latest versions at time of publication.

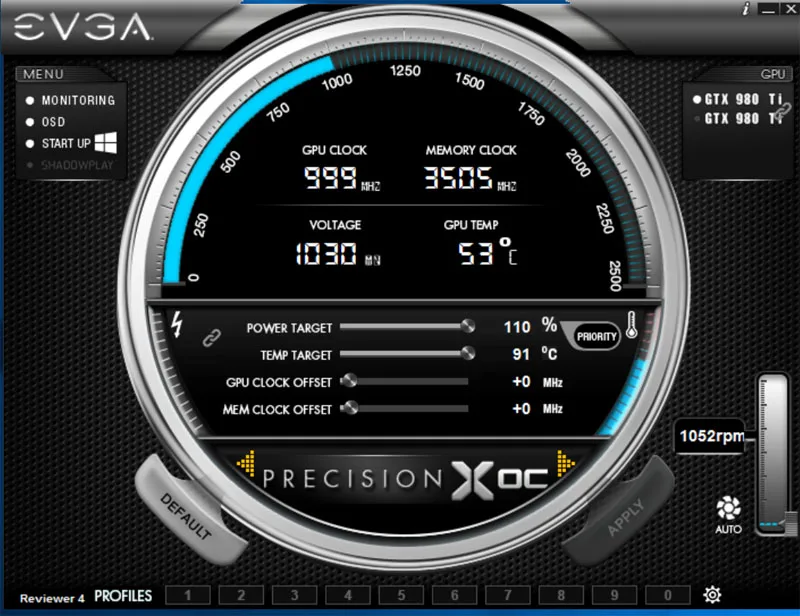

- EVGA’s Precision XOC, latest beta version for Nvidia cards.
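The list above says the charts report average and minimum frame rates. The article does not describe how those figures are derived, so the following is only a minimal sketch under stated assumptions: it takes a hypothetical list of per-frame render times in milliseconds, computes the average as total frames divided by total time, and treats the minimum as the instantaneous rate of the slowest frame. The function name and the sample data are illustrative and not taken from our benchmark logs.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average_fps, minimum_fps) for per-frame render times in ms.

    Assumption: "minimum" is the instantaneous rate of the slowest frame.
    Benchmark tools may define it differently (for example, per-second lows).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    if min(frame_times_ms) <= 0:
        raise ValueError("frame times must be positive")

    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


if __name__ == "__main__":
    # Hypothetical frame times, not measured data.
    sample = [10.0, 10.0, 20.0, 10.0]
    avg, low = summarize_frame_times(sample)
    print(f"average: {avg:.1f} fps, minimum: {low:.1f} fps")
```

Averaging over total time rather than averaging the per-frame rates keeps a few very fast frames from inflating the result.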

The 25 PC Game benchmark suite & 1 synthetic test

Synthetic

- Firestrike – Basic & Extreme

DX11* Games

- Crysis 3

- Metro: Last Light Redux (2014)

- GRID: Autosport

- Middle-earth: Shadow of Mordor

- Alien: Isolation

- Dragon Age: Inquisition

- Dying Light

- Total War: Attila

- Grand Theft Auto V

- Project CARS

- The Witcher 3

- Batman: Arkham Knight

- Mad Max

- Fallout 4

- Star Wars Battlefront

- Assassin’s Creed Syndicate

- Just Cause 3

- Rainbow Six Siege

- DiRT Rally

- Far Cry Primal

- Tom Clancy’s The Division

- DOOM (OpenGL game)

DX12 Games

- Ashes of the Singularity

- Rise of the Tomb Raider

- Hitman

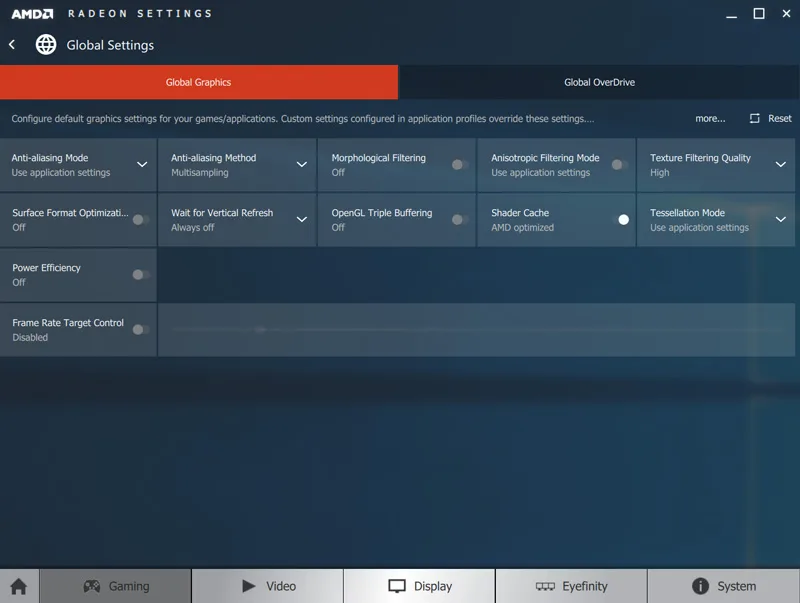

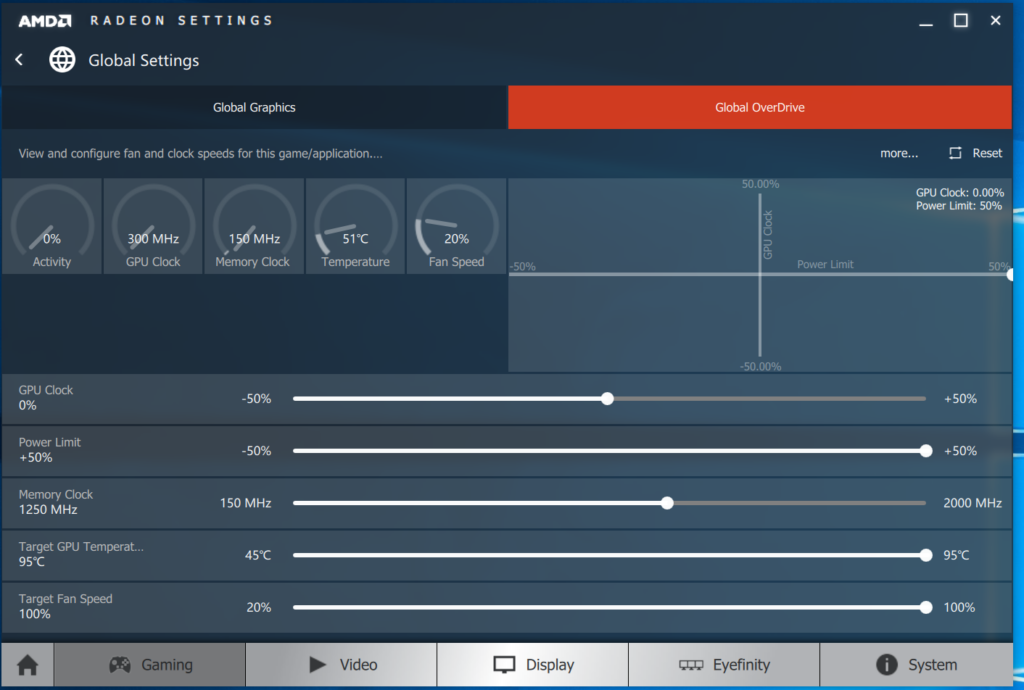

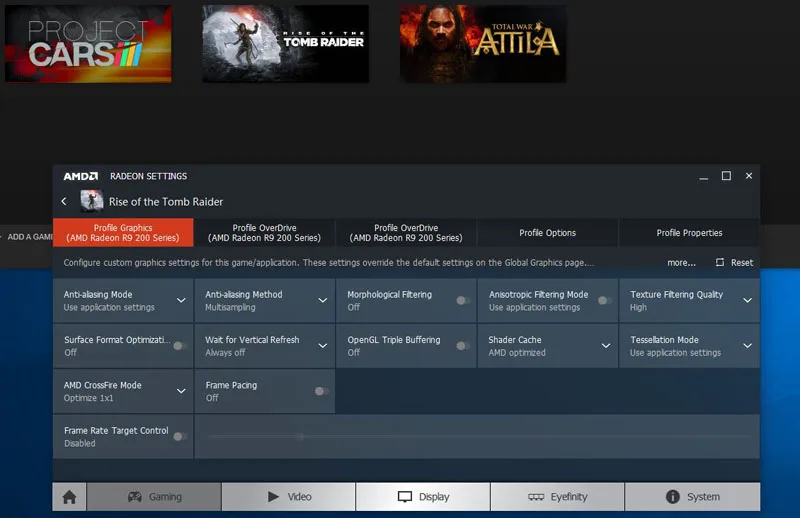

Here are the general settings that we always use in AMD’s Crimson Control Center for our default benching. Specific settings for AMD CrossFire are shown under CrossFire Options. The new Power Efficiency Toggle, which was made available for the Fury X and some 300 series cards after Crimson Software 16.3, is left off in our benching of the Fury X. Please note that 100% fan speed is used for benching the 290X reference versions, and they do not throttle at all.

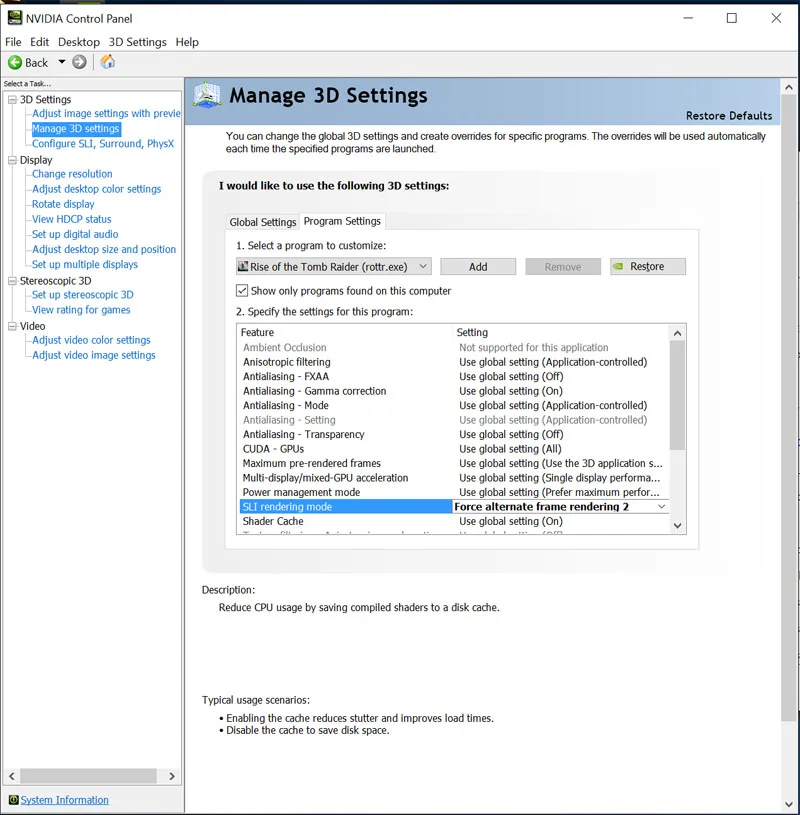

Nvidia’s Control Panel settings

These are the general settings used in Nvidia’s Control Panel. Specific SLI settings are shown under SLI Options.

How We Benchmarked

We noted that CrossFire, which once scaled better in DX12 Hitman with 16.3, now scales somewhat less well for 290X CrossFire with the latest driver. We did not enable any workarounds for CrossFire or SLI, such as using Nvidia Inspector; instead we benchmarked using the default multi-GPU settings that the latest performance drivers offered to us.

We tested 25 games with the options provided for us in the drivers – for example, forcing AFR-1 and AFR-2 for games where SLI did not work well; and we also used the profiles provided to us by the Crimson Software drivers.

CrossFire Options

These are the Global settings that we used with Crimson Software 16.5.3 with 290X CrossFire. CrossFire bridges are not used with 290X, 390X or Fury X cards, unlike multi-GPU Nvidia cards, which require an SLI bridge.

In Global Settings we enabled “CrossFire”, On, and “AMD CrossFire Logo”, On. Enabling the second setting displayed the AMD CrossFire Logo overlay in most DX11 games in the upper right corner of the game, although it does not display in any of our 3 DX12 games.

The CrossFire logo was displayed in Fallout 4 even though the game mostly scaled negatively for us using 290X CrossFire compared with using just one 290X.

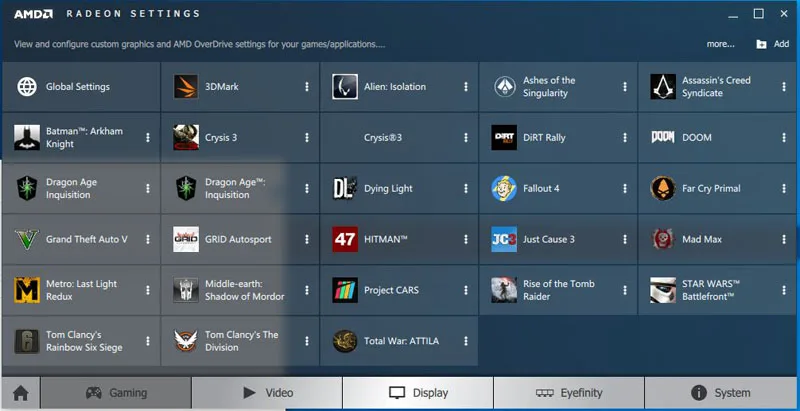

Here are the Global settings that allow us to change profiles for each individual game.

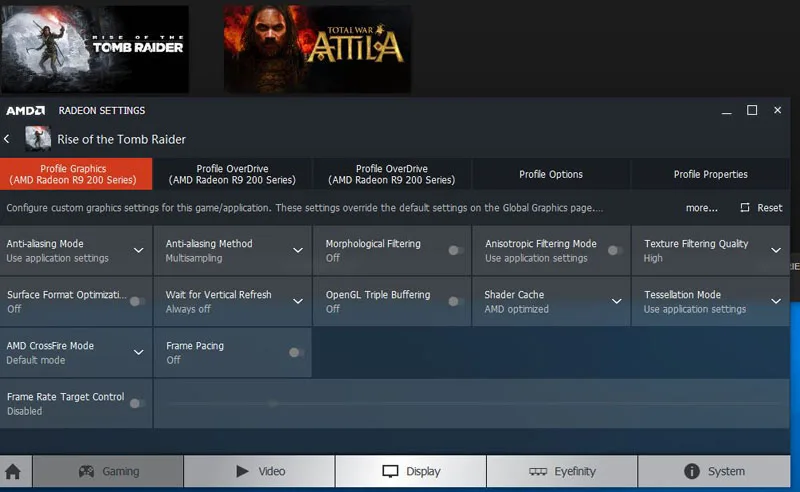

Let’s use Rise of the Tomb Raider as an example to see what AMD allows us to do. First we see the default settings, which in our global settings enable or allow CrossFire for all games.

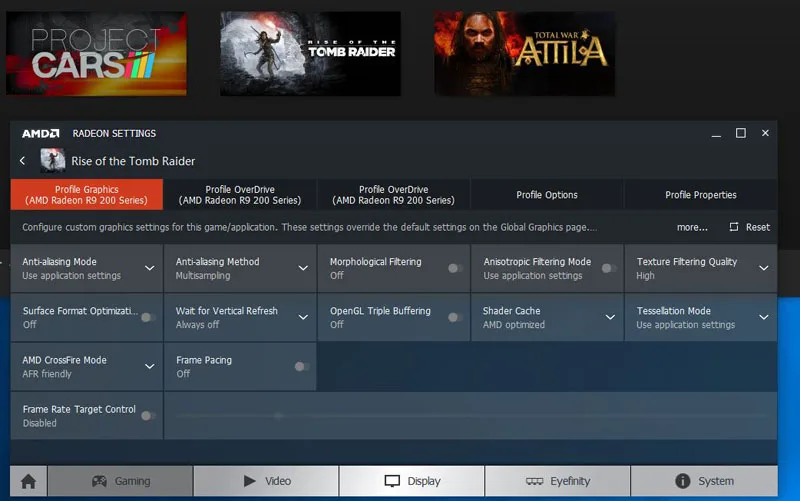

We saw little to no scaling in our three DX12 games as shown by our charts on the next page, although DX11 CrossFire may allow for it. Next we tried “AFR Friendly”.

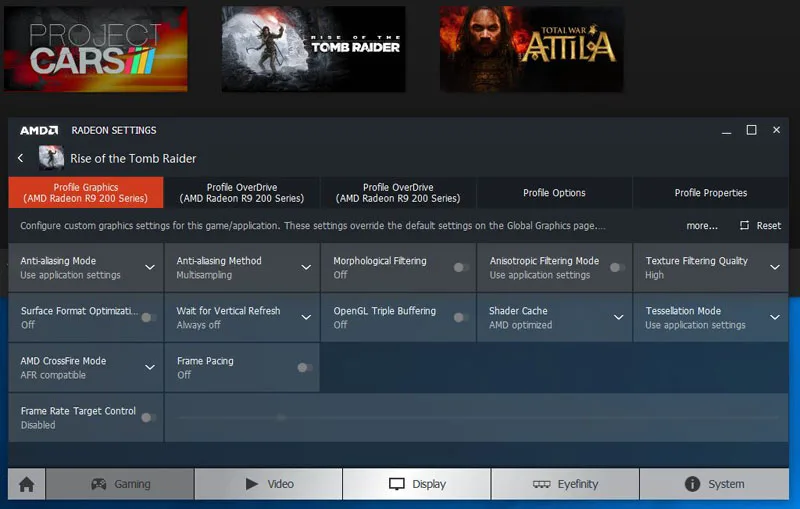

Again, no real change to performance and no scaling for CrossFire over using just one 290X. Now we tried “AFR Compatible”.

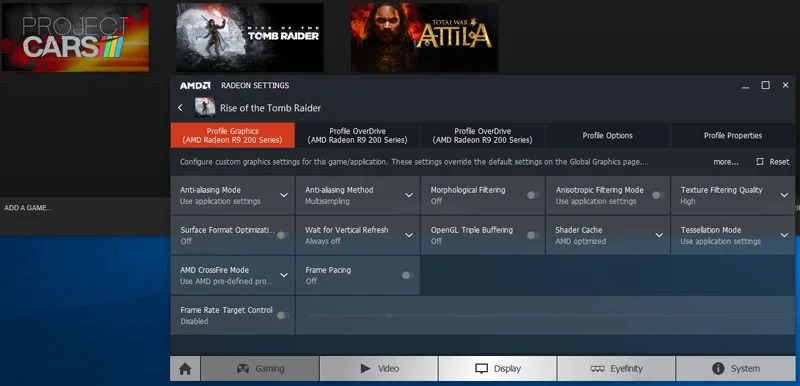

We also tried AMD’s “Predefined Settings” which appear to be the same as the Global Settings.

Finally, we tried Optimize 1×1. Again, we saw no real change that improved performance compared with the other settings, and no scaling for CrossFire over using just one 290X, as reflected by our charts on the next page. Generally, there is no extra performance over using just 1 GPU in Rise of the Tomb Raider’s DX12 setting. We see this happen with more games as shown on our charts on the next page.

The Optimize 1×1 setting is the same as disabling CrossFire or using just one 290X. Again, with most games that do not scale there was no change to performance, although games that scale negatively with CrossFire no longer do so, making it a very useful setting.

Let’s look at Nvidia’s SLI options.

SLI Options

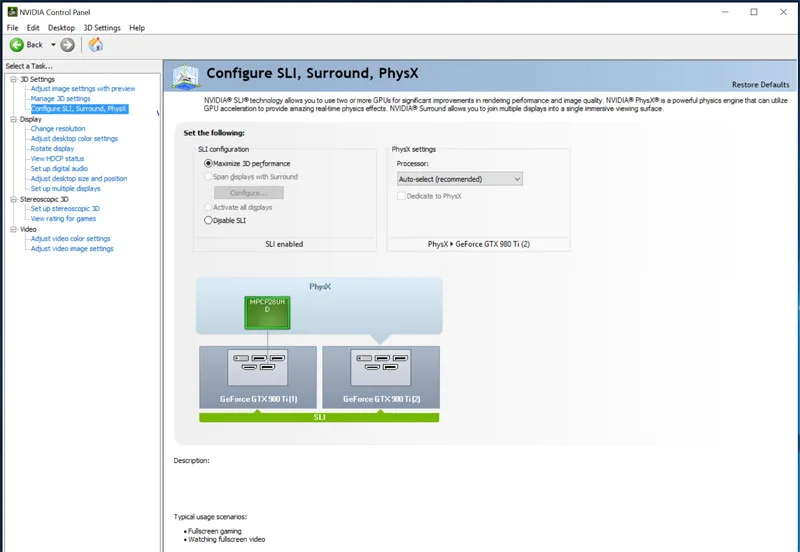

First of all, it is important to “Maximize 3D Performance” in Nvidia’s Control Panel if you have two identical GPUs set up with a SLI bridge.

Using Precision XOC we make sure that our GPUs are linked (upper right corner) and set to the same settings.

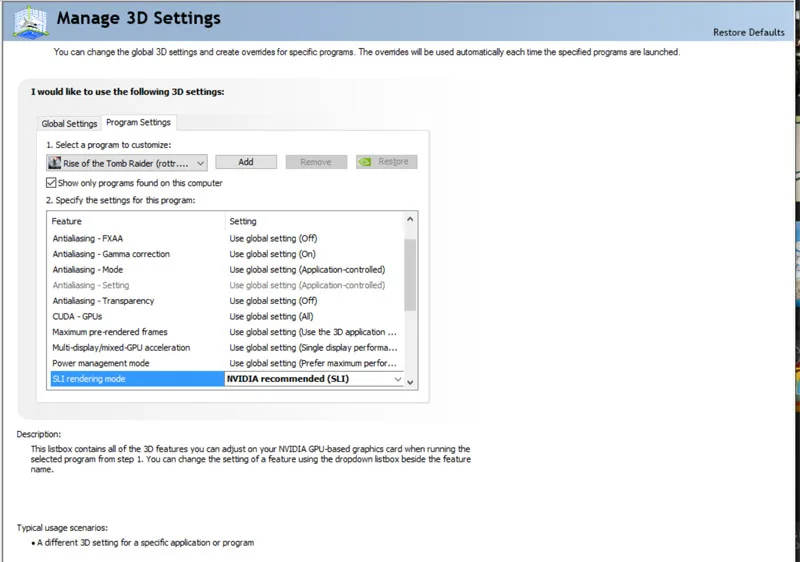

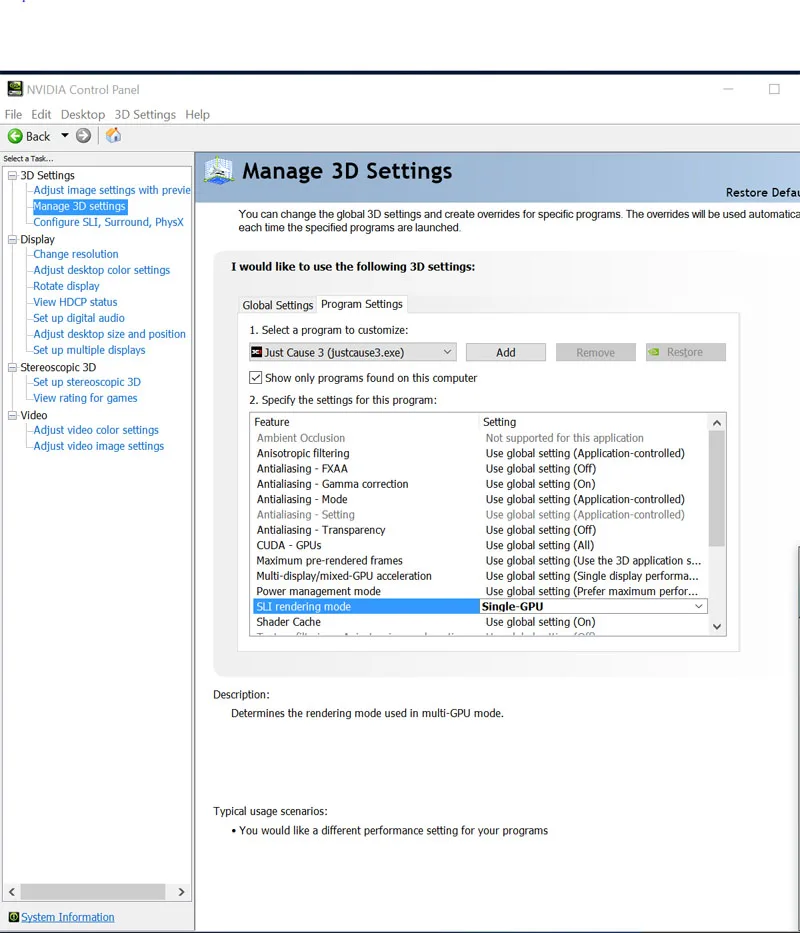

Under 3D settings, you can change SLI settings for individual games. We are using Just Cause 3 and Rise of the Tomb Raider as examples to illustrate the available options for us.

First, here are the default settings, “Nvidia Recommended”, which in this case is SLI for Rise of the Tomb Raider.

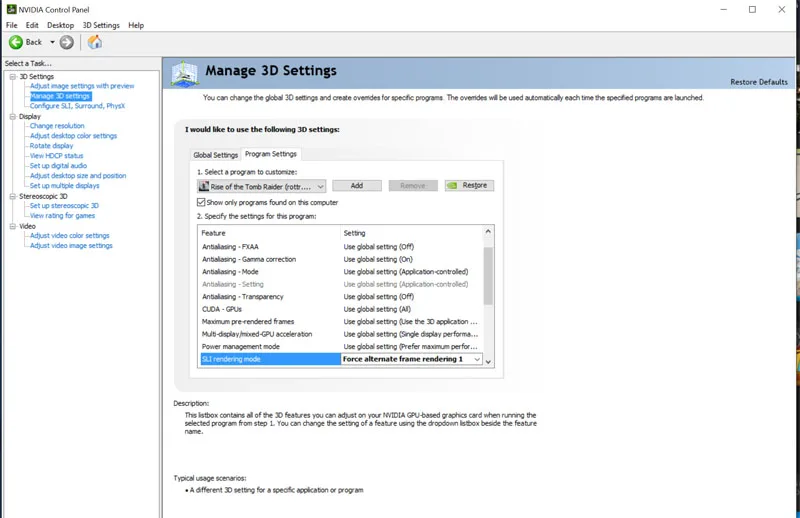

We saw no real positive scaling over single-GPU performance, as reflected by our charts on the next page. So we tried “Force Alternate Frame Rendering-1”.

This time, the game locked up and we had to restart it. Next we tried “Force Alternate Frame Rendering-2”.

This time, our PC locked up and we had to restart it. Forcing AFR apparently does not work very well with our three DX12 games, although forcing AFR does work positively with some DX11 games.

There is another option which disables SLI for each game individually when it scales negatively. In this case, we used Just Cause 3 as our example although the same setting exists for Rise of the Tomb Raider and for most games.

Just Cause 3 evidently scales with SLI, although the improvement in performance is quite low. Setting it to Single-GPU disables SLI and returns performance to that of a single GPU. Single-GPU is quite useful when a game scales negatively.

Let’s head right to our results.

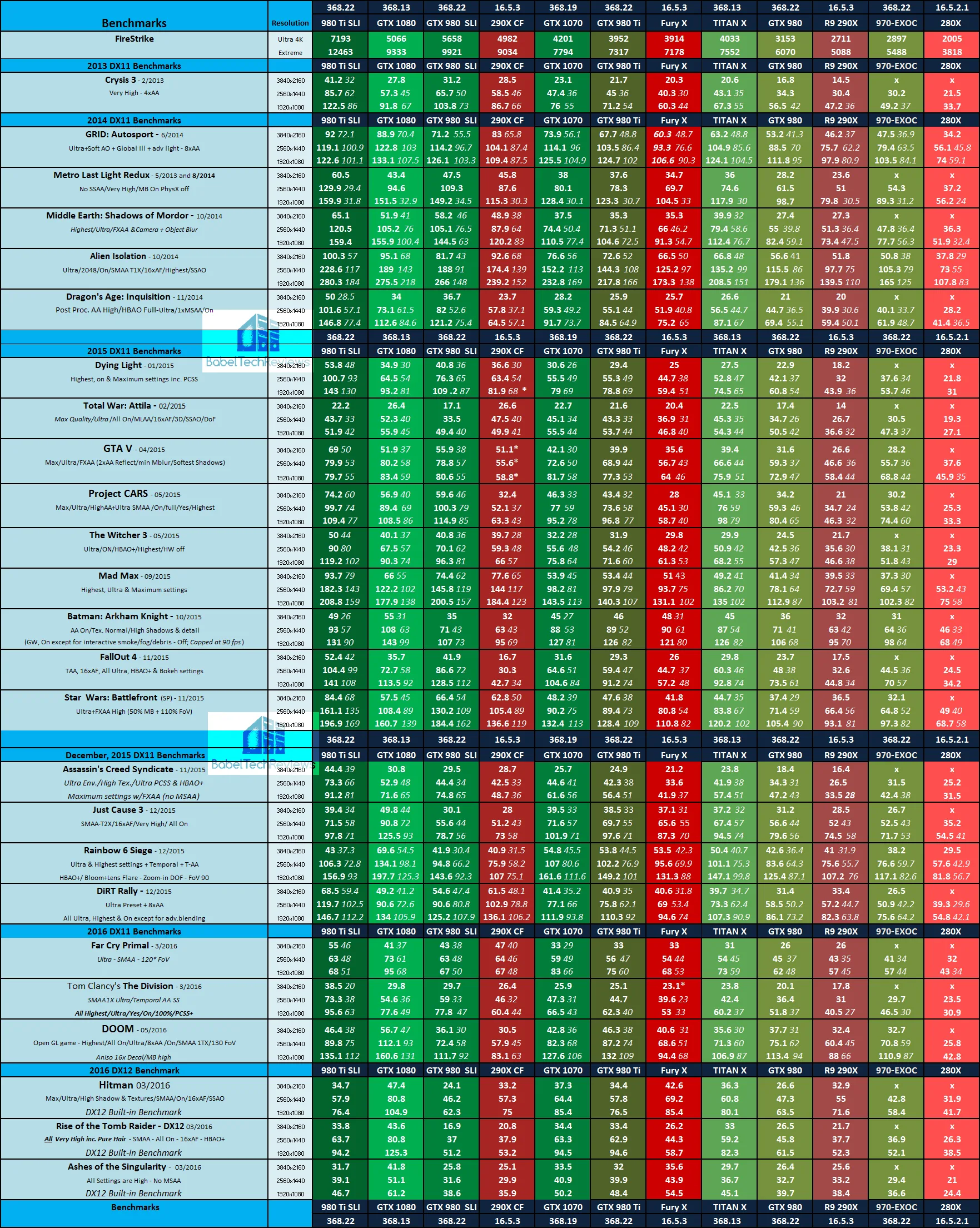

The Summary Chart

Below is the summary chart of 25 games and 1 synthetic test. The highest settings are always chosen and it is DX11 when there is a choice (except for Ashes of the Singularity, Hitman, & Rise of the Tomb Raider which are always run in DX12), and the settings are ultra. Specific in-game settings are listed on the charts.

The benches are run at 1920×1080, 2560×1440, and 3840×2160. All results, except for Firestrike, show average framerates, and higher is always better. In-game settings are fully maxed out and they are identically high or ultra across all platforms. “NA” means the game would not run at the settings chosen, and an asterisk (*) means that there were issues with running the benchmark, usually visual artifacting. “X” means the benchmark was not run.

First we look at our main summary chart, which compares a single GTX 980 with GTX 980 SLI, a single GTX 980 Ti with GTX 980 Ti SLI, and a single 290X with 290X CrossFire. Where scaling is poor or negative on the multi-GPU side, the background color is darkened. Where multi-GPU scaling is fair, only the numbers are shown in light blue.
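To make the chart categories concrete, scaling can be expressed as the percentage gain of the multi-GPU result over the single-GPU result. The sketch below is only an illustration. The function names, the sample frame rates, and the 20% and 50% cut-offs for “poor” and “fair” are assumptions made for this example, since the charts do not state numeric thresholds.

```python
def scaling_percent(single_fps, multi_fps):
    """Percentage gain of the multi-GPU result over the single-GPU result."""
    if single_fps <= 0:
        raise ValueError("single-GPU frame rate must be positive")
    return (multi_fps / single_fps - 1.0) * 100.0


def classify_scaling(single_fps, multi_fps, poor_below=20.0, fair_below=50.0):
    """Bucket a result as negative, poor, fair, or good.

    The default cut-offs are hypothetical, chosen only for illustration.
    """
    gain = scaling_percent(single_fps, multi_fps)
    if gain < 0:
        return "negative"
    if gain < poor_below:
        return "poor"
    if gain < fair_below:
        return "fair"
    return "good"


if __name__ == "__main__":
    # Hypothetical (single, multi) average frame rates, not measured data.
    examples = {
        "Game A": (60.0, 108.0),
        "Game B": (60.0, 80.0),
        "Game C": (60.0, 66.0),
        "Game D": (60.0, 57.0),
    }
    for name, (single, multi) in examples.items():
        gain = scaling_percent(single, multi)
        label = classify_scaling(single, multi)
        print(f"{name}: {gain:+.0f}% ({label})")
```

A perfect two-card result would be a 100% gain, so anything well short of that means the second card is contributing little in that game.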

The multi-GPU performance scaling results are excellent for most older games. However, once we move into 2015 games, the picture for both SLI and CrossFire changes for the worse. 8 out of 25 games do not scale well for SLI, and one game has just fair results. With 290X CrossFire, 9 out of 25 games do not scale well, with one fair result, although they are not necessarily the same games.

In addition to the games with poor scaling, GTA V ran very poorly with 290X CrossFire although technically it did scale, and Dying Light had some artifacting and blurriness with 290X CrossFire that it did not have with a single 290X.

All three of our tested DX12 games scale poorly with multi-GPU, and we also note that often it is the latest DX11 games that do not scale well either. In the case of DOOM, our most recent game, the game scales very poorly with GTX 980 Ti SLI, or negatively with 290X CrossFire or with GTX 980 SLI.

Let’s compare SLI and CrossFire with top card single GPU performance.

In many cases, it is far preferable to use a single top card versus using lesser cards in either SLI or in CrossFire.

Now let’s give our Big Picture to see how AMD’s and Nvidia’s performance compare with each other across 12 video card configurations.

Let’s head for our conclusion on the next page.

Conclusion:

We are not sure that we can recommend either SLI or CrossFire based on our testing with 25 modern games. And it will be very hard to recommend the next dual GPU Polaris video card for $500 to take on a single GTX 1080 or GTX 1070 based on what we see today. If you play mostly older games, CrossFire and SLI both scale decently; however, many older games do not need the extra performance that multi-GPU scaling provides.

We noted that 8 out of 25 games with Nvidia SLI, and 9 out of 25 games with AMD CrossFire – about one-third of the games in our benchmark suite, and mostly the latest games – scaled either poorly or negatively compared with using a single GPU. In addition, 290X CrossFire had some visual issues with GTA V and with Dying Light (Dying Light is not counted negatively on the charts, but GTA V is counted as it stutters). And it is pretty clear that CrossFire or SLI scaling in the newest games, especially with DX12, is going to depend on the developers’ support for each game. We also note that recent drivers may break multi-GPU scaling that once worked. Even a new game patch may affect multi-GPU game performance drastically.
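As a quick check on the “about one-third” figure, the counts quoted above can be turned into percentages. This is a small sketch that uses only the 8-of-25 and 9-of-25 tallies from this article; the variable and function names are illustrative.

```python
TOTAL_GAMES = 25

# Counts of games that scaled poorly or negatively, as quoted in the text.
poorly_scaling = {"SLI": 8, "CrossFire": 9}


def share_percent(count, total):
    """Return count as a percentage of total."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * count / total


if __name__ == "__main__":
    for name, count in poorly_scaling.items():
        pct = share_percent(count, TOTAL_GAMES)
        print(f"{name}: {count}/{TOTAL_GAMES} = {pct:.0f}%")
```

Both shares land within a few points of 33%, which is where the one-third estimate comes from.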

We are going to continue to look at multi-GPU scaling by testing GTX 1070 SLI, and we hope to also examine Fury X CrossFire and the upcoming Polaris CrossFire scaling. But at this point in time, we cannot highly recommend either CrossFire or SLI as a great way to gain performance, especially with the newest games.

Stay tuned, next up we are planning a GTX 1070 overclocking article followed by a GTX 1070 SLI evaluation.

Happy gaming!
