God of War PC Performance Review featuring DLSS and IQ with 11 RTX Cards using GeForce 511.23 drivers

After spending nearly 20 hours playing God of War and being unable to tear ourselves away from the screen, we can say it is the prime contender for our Game of the Year. Although it is a PlayStation console port, it has incredibly detailed characters and awesome visuals that place it at the apex of the very best cinematic experiences. This well-presented mash-up of Greek and Norse mythology is enhanced by its outstanding story, great voice acting, and a father-son relationship that brings it to life, and a player doesn't need to know the series' backstory to enjoy it.

To progress, a gamer needs to be good at fighting and at solving puzzles, and there are many hidden areas, secrets, and power-ups to find, as well as XP to earn for character development. The music also enhances the experience. However, this review will not focus on the game itself; rather, it focuses narrowly on DLSS as a way to increase framerates without compromising image quality, because the game is very demanding at maxed-out settings.

God of War is basically the DX11 PS4 Pro version, which was later given unlocked framerates on the PS5, yet it looks better than many of the early next-generation console games. The biggest visual improvements in the PC version include shadowing, better lighting and colors, enhanced screen space reflections, and ambient occlusion. The PC version also offers higher framerates and perhaps higher-resolution textures. The ability to save anytime is particularly helpful because there are many tough enemies and difficult boss fights to survive. The enemies generally offer good variety, although some of them are quite repetitive, with only minor variations.

The player can spend the experience points (XP) earned by fighting and completing tasks on character development, and gear can be upgraded from the menu using items found in the world or by visiting one of two Dwarven smiths. The environments are varied, ranging from the wide outdoors to traversing lakes by boat, entering alternate realms, and exploring underground.

With a background in history and a specialty in mythology, we appreciated the well-done references to Greek gods and Norse mythology. A player gets to interact with characters brought to life from Homer's Iliad and Odyssey to learn about the character's backstory. The Norse mythology accurately references the Tree of Life, Thor, Odin, and even Jörmungandr; it's a great, fun mash-up of mythology with a very detailed universe that builds upon the earlier games in the God of War console series.

DLSS, Image Quality, and Performance

More than three years ago, NVIDIA introduced real-time RTX ray tracing together with Deep Learning Super Sampling (DLSS). Playing at high settings often requires AI super resolution, or DLSS, which provides better image quality than a game's post-processing AA together with improved performance. God of War doesn't use ray tracing, but it does use DLSS to improve performance, and we will compare the current performance and IQ (image quality) of eleven NVIDIA RTX cards using the latest 511.23 driver released last Friday.

NVIDIA sent BabelTechReviews a copy of God of War, with no expectations attached, when it launched earlier this week. The game received very high praise, including many awards, when it originally released for the PS4, and it was later improved to run faster on the PS5. Although we have only completed four of its seventeen chapters, we were completely blown away by this game and could barely tear ourselves away to write this review.

Performance Options

Although God of War is a well-done PlayStation port, it has more limited options than most games developed primarily for the PC. The developers were originally limited to checkerboard 4K at 30 FPS on the PS4 and PS5, but they were later able to raise the framerate to 60 FPS, still using checkerboard rendering. There also appears to be dynamic resolution at work on the PS5 to maintain 60 FPS at 4K. This kind of upscaling is neither needed nor used in the PC version. Playing on PC gives slightly higher graphics fidelity together with the potential for higher, and thus more fluid, framerates of up to 120 FPS. Unfortunately, the only display choices are windowed or borderless window; there is no fullscreen option.
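Dynamic resolution of the kind described for the PS5 generally works by measuring how long each frame takes and nudging the render resolution up or down to stay inside the frame-time budget. The Python sketch below is a hypothetical controller, not the game's actual implementation; the step size, headroom factor, and scale limits are assumed values chosen only for illustration.

```python
# Hypothetical dynamic-resolution controller (illustrative values, not the game's).
TARGET_FPS = 60
BUDGET_MS = 1000.0 / TARGET_FPS  # about 16.67 ms per frame at 60 FPS


def next_render_scale(scale, gpu_frame_ms, min_scale=0.5, max_scale=1.0,
                      step=0.05, headroom=0.9):
    """Return the per-axis render scale for the next frame.

    Drop the scale when the last frame missed the 60 FPS budget, raise it when
    the frame finished with comfortable headroom, and clamp the result.
    """
    if gpu_frame_ms > BUDGET_MS:
        scale -= step
    elif gpu_frame_ms < BUDGET_MS * headroom:
        scale += step
    return max(min_scale, min(max_scale, scale))


def render_size(out_w, out_h, scale):
    """Internal render resolution for a given output size and per-axis scale."""
    return round(out_w * scale), round(out_h * scale)


# Usage: a few heavy frames push the scale down, a light frame lets it recover.
scale = 1.0
for frame_ms in [20.0, 19.0, 17.0, 14.0]:
    scale = next_render_scale(scale, frame_ms)
print(round(scale, 2), render_size(3840, 2160, scale))
```

Adjusting in small steps and only raising the scale when there is clear headroom keeps the resolution from oscillating every frame; real engines typically add smoothing on top of this.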

We played and benchmarked using four different displays: a 48″ LG C1 120Hz 3840×2160 display, a 32″ 60Hz BenQ 4K display, a Samsung G7 240Hz 27″ 2560×1440 display, and an ASUS 360Hz 24″ 1920×1080 display. Our PC uses an Intel 12900KF CPU at stock settings on an ASUS ROG Maximus Apex motherboard with 2 x 16GB of G.Skill DDR5 6000 CL36, a 2TB 5,000MB/s T-Force C440 NVMe SSD for the C: drive, and a 1TB T-Force A440 7,000MB/s NVMe SSD as storage for loading the game. God of War loads very quickly from SSD. We played for over fifteen hours using an RTX 3080 Ti Founders Edition and then benchmarked and played for nearly ten additional hours using ten more RTX cards.

Here are the highest graphics settings that we used for benchmarking. They are basically the Ultra preset with Ultra+ reflections, which really help differentiate the PC version from the console versions.

We are going to compare image quality using three levels of DLSS versus no DLSS, and we chart the performance of eleven current NVIDIA GeForce RTX cards:

- RTX 3080 Ti

- RTX 3080

- RTX 3070 Ti

- RTX 2080 Ti

- RTX 3070

- RTX 3060 Ti

- RTX 2080 SUPER

- RTX 2070 SUPER

- RTX 3060

- RTX 2060 SUPER

- RTX 2060

DLSS

NVIDIA's DLSS creates sharper, higher-resolution images using dedicated AI processors on GeForce RTX GPUs called Tensor Cores. The original DLSS 1.0 required more work on the part of game developers and delivered image quality approximately equal to TAA. DLSS 2.0 and later versions use an improved deep learning neural network that boosts frame rates while generating crisper game images, giving the performance headroom to maximize settings and increase output resolutions. Because it is based on deep learning, it continues to evolve and improve.

NVIDIA claims that DLSS offers IQ comparable to native resolution while rendering only one quarter to one half of the pixels by employing new temporal feedback techniques. DLSS 1.0 required training the AI network for each new game whereas DLSS 2.0 trains using non-game-specific content that works across many games.
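NVIDIA's network itself is proprietary, but the general idea behind temporal feedback (rendering fewer pixels per frame and recovering detail from earlier frames) can be shown with a toy. The Python sketch below assumes a static one-dimensional "scene" and a simple two-frame jitter pattern; it is a generic temporal-accumulation illustration, not DLSS.

```python
# Toy 1D temporal accumulation with sub-pixel jitter.
# Generic illustration of temporal upscaling, NOT NVIDIA's DLSS network.


def render_low_res(scene, frame):
    """Sample every other output pixel, shifting the sample offset each frame (jitter)."""
    offset = frame % 2
    return {x: scene[x] for x in range(offset, len(scene), 2)}


def accumulate(history, samples, alpha=0.5):
    """Blend new samples into the history buffer (exponential moving average)."""
    for x, value in samples.items():
        if history[x] is None:
            history[x] = value  # first sample ever seen for this pixel
        else:
            history[x] = (1 - alpha) * history[x] + alpha * value
    return history


scene = [0.1, 0.9, 0.2, 0.8, 0.3, 0.7, 0.4, 0.6]  # static "full-resolution" scene
history = [None] * len(scene)
for frame in range(2):
    history = accumulate(history, render_low_res(scene, frame))

# Each frame rendered only half the pixels, yet after two jittered frames
# every output pixel has been sampled.
print(history)
```

Real temporal upscalers, DLSS 2.x included, also use motion vectors so that history can be reprojected when the camera or objects move; this toy sidesteps that by assuming nothing moves.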

DLSS generally offers RTX gamers four IQ modes: Quality, Balanced, Performance, and Ultra Performance. These settings control a game's internal rendering resolution, with Performance DLSS enabling up to 4X super resolution. In our opinion, Quality DLSS looks somewhat better, and it is worth its larger hit to the frame rate compared with Performance DLSS. We would rather lower other settings than drop DLSS from Quality to Performance. Balanced DLSS is a good in-between choice.
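The link between each mode and its internal rendering resolution is simple arithmetic. The sketch below assumes the per-axis scale factors commonly cited for DLSS 2.x (2/3, 0.58, 1/2, and 1/3); the round-up-to-even rule is also an assumption, picked because it reproduces the 2228×1254 Balanced render resolution reported in this review. Individual games may use different factors or rounding.

```python
import math
from fractions import Fraction

# Assumed per-axis scale factors for the DLSS modes (games may override them).
DLSS_SCALE = {
    "Quality": Fraction(2, 3),
    "Balanced": Fraction(58, 100),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}


def render_resolution(out_w, out_h, mode):
    """Internal render size for a DLSS mode, rounded up to an even pixel count."""
    s = DLSS_SCALE[mode]
    return tuple(2 * math.ceil(dim * s / 2) for dim in (out_w, out_h))


def pixel_fraction(out_w, out_h, mode):
    """Share of the output pixels that are actually rendered each frame."""
    w, h = render_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


for mode in DLSS_SCALE:
    w, h = render_resolution(3840, 2160, mode)
    print(f"{mode:18} {w}x{h}  {pixel_fraction(3840, 2160, mode):.0%} of 4K pixels")
```

At 4K this gives 2560×1440 for Quality, about 44% of the output pixels, and 1920×1080 for Performance, exactly 25%, which is the "4X" figure and sits at the low end of the one-quarter-to-one-half range NVIDIA describes.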

God of War's own anti-aliasing doesn't address temporal aliasing the way DLSS does. We will next focus on image quality with DLSS on versus off, followed by benchmarking and charting DLSS performance on eleven RTX cards.

DLSS On versus Off

4K without DLSS uses 3840×2160 for both the Render and the Output Resolution, and it is especially demanding on a video card at maximum settings.

Using the magic of AI and RTX Tensor Cores, Quality DLSS upscales a 2560×1440 render resolution to 4K with no loss of image quality, as we shall see in the following section.

No DLSS vs. Quality DLSS

Here are 4K images comparing No DLSS (left) with Quality DLSS (right) using a slider. Since the scene is dynamic, they do not exactly match each other.

[Image comparison slider: No DLSS 4K vs. Quality DLSS 4K]

The image quality is equal, with only some very slight differences, so we don't recommend playing without DLSS.

No DLSS vs. Balanced DLSS

Balanced DLSS uses a Render Resolution of 2228×1254 to upscale to 4K and the AI must fill in more pixels than with Quality DLSS.

Here is 4K without DLSS on the left compared with a similar image on the right using Balanced DLSS.

[Image comparison slider: No DLSS 4K vs. Balanced DLSS 4K]

Again the images are very comparable although Balanced DLSS loses a bit more detail than Quality DLSS.

No DLSS vs. Performance DLSS

Performance DLSS upscales a Render Resolution of 1920×1080 to 3840×2160, which means the AI renders just one quarter of the output pixels for its 4K reconstruction. This results in a further slight loss of detail.

Here is the comparison of 4K without DLSS (left) with Performance DLSS (right).

[Image comparison slider: No DLSS 4K vs. Performance DLSS 4K]

There is a small loss of detail in some areas, but the DLSS-upscaled image quality is still excellent.

Next we check out the performance of eleven RTX cards at 3840×2160, 2560×1440, and 1920×1080 with up to three levels of DLSS, with the goal of averaging above 60 FPS while still using completely maxed-out settings.

Performance

God of War is a visually beautiful but extremely demanding game at maximum settings, but these settings are what distinguish it from playing on the PS5. The game originally ran at 4K/30 FPS on the PS4 and even the PS5, but its upgraded version brings 4K/60 FPS using checkerboard rendering, with slightly lower visual settings than the PC is capable of. The PC game is capped at 120 FPS, which is not an issue since even an RTX 3080 Ti cannot consistently maintain framerates above 110 FPS at 4K, even using Performance DLSS. [Updated: January 17, 2022 10:30 AM PST] However, the framerate cap can be turned off by moving the slider to the far left.
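A framerate cap like the one described above can be modeled as a limiter that waits out whatever is left of each frame's time budget. The Python sketch below is a hypothetical simplification, not the game's own limiter; `fps_cap=None` stands in for the cap being turned off.

```python
# Minimal frame-limiter arithmetic (hypothetical sketch, not the game's limiter).


def frame_budget_ms(fps_cap):
    """Time each frame may take at a given cap, e.g. about 8.33 ms at 120 FPS."""
    return 1000.0 / fps_cap


def limiter_wait_ms(render_ms, fps_cap=120):
    """How long a simple limiter waits before presenting a finished frame.

    fps_cap=None models the cap turned off: frames are presented immediately.
    """
    if fps_cap is None:
        return 0.0
    return max(0.0, frame_budget_ms(fps_cap) - render_ms)


def effective_fps(render_ms, fps_cap=120):
    """Resulting framerate for a steady per-frame render time."""
    return 1000.0 / (render_ms + limiter_wait_ms(render_ms, fps_cap))


print(effective_fps(5.0), effective_fps(5.0, None), effective_fps(10.0))
```

Because the limiter only waits when a frame finishes early, a 120 FPS cap costs nothing on a card that already needs more than about 8.33 ms per frame, which is why the cap rarely matters at 4K.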

Quality DLSS is our goal since its visuals are equal to not using DLSS. Balanced DLSS requires a slight visual sacrifice, and Performance DLSS is still acceptable to most gamers because the game is generally fast-paced and most players are not exploring the game world with a magnifying glass looking for visual flaws.

Although there are very difficult boss battles with multiple enemies on screen at once, the experience is sufficiently fluid as long as a player can maintain average framerates above 60 FPS with minimums (1% lows) above 50 FPS. With a G-SYNC or G-SYNC Compatible display, it is generally not a problem for the lows to dip occasionally into the upper-40s. Below that, fluidity is compromised, and it is better to lower the graphics settings or use a lower quality DLSS setting. Ultra Performance DLSS is not recommended unless framerates cannot be increased by any other method; it is better to lower settings first, as it looks worse than the console experience. Ultra Performance was primarily developed for 8K gaming.
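The playability rule of thumb above can be written down directly. This Python sketch computes average FPS and 1% lows from captured frame times and then applies those thresholds. Benchmarking tools define "1% low" differently; here it is assumed to be the average framerate of the slowest 1% of frames, and the 47 FPS G-SYNC cutoff is an assumed reading of "upper-40s".

```python
# Sketch: average FPS and 1% lows from frame times, plus the review's thresholds.
# "1% low" is ASSUMED here to mean the average FPS of the slowest 1% of frames.


def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in ms."""
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    slowest = sorted(frame_times_ms, reverse=True)[: max(1, n // 100)]
    low_1pct_fps = 1000.0 * len(slowest) / sum(slowest)
    return avg_fps, low_1pct_fps


def is_fluid(avg_fps, low_1pct_fps, gsync=False):
    """Averages above 60 FPS, with 1% lows above 50 FPS (or upper-40s with G-SYNC)."""
    if avg_fps <= 60:
        return False
    if gsync:
        return low_1pct_fps >= 47  # assumed cutoff for "upper-40s"
    return low_1pct_fps > 50


# Usage: 99 smooth frames and one 25 ms hitch.
avg, low = fps_summary([10.0] * 99 + [25.0])
print(round(avg, 1), round(low, 1), is_fluid(avg, low))
```

Averages alone can hide stutter: in the usage example the average stays near 98 FPS, but the single 25 ms hitch drags the 1% low to 40 FPS, which fails the test.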

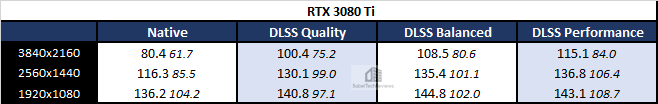

Using God of War’s highest settings, we compare performance without DLSS versus Quality, Balanced, and Performance DLSS using eleven current RTX cards at 1920×1080, 2560×1440, and 3840×2160.

4K Video Cards – RTX 3080 Ti/RTX 3080/RTX 3070 Ti/RTX 2080 Ti/RTX 3070

The following cards can generally play at maximum settings using DLSS at 4K.

The RTX 3080 Ti is a no-compromise video card that provides the best 4K God of War experience. It can handle maxed-out 4K settings without DLSS and still remain well above 60 FPS. A gamer may choose DLSS Quality to achieve even higher framerates for a potentially more fluid gaming experience, but there is no point in using Balanced or Performance DLSS.

The RTX 3080 averages above 70 FPS but drops into the mid-50s FPS for the 1% lows. DLSS Quality ensures that the framerates stay above 69 FPS for an excellent playing experience with no image quality compromise.

The RTX 3070 Ti cannot play at maxed-out 4K fluidly without DLSS. However, DLSS Quality allows for a high quality maxed-out 4K experience with excellent averages and occasional drops into the mid-50s.

The RTX 2080 Ti is not recommended for 4K play without DLSS unless settings are lowered, but it can manage with DLSS Quality if the player doesn't mind framerate drops into the low-50s. The experience is acceptable, but it may be better to use DLSS Performance or to play at 1440P.

The RTX 3070 performs slightly under the RTX 2080 Ti in God of War, and the same recommendations apply as above.

1440P Video Cards – RTX 3070/RTX 3060 Ti/RTX 2080 Super/RTX 2070 Super

The following four video cards perform best at 1440P, starting with the RTX 3060 Ti. It is unsuited for 4K play except perhaps with DLSS Performance, but it gives a very good experience at 1440P with DLSS Quality and no visual compromises.

The RTX 2080 Super performs just slightly below the RTX 3060 Ti in God of War and the same recommendations apply as above.

The RTX 2070 Super plays acceptably at 1440P with DLSS Quality, although some players may choose DLSS Performance for a little more headroom. It would also be an excellent 1080P card for God of War for those who prefer higher framerates.

Next we benchmark the three cards best suited for 1080P.

1080P – RTX 3060/RTX 2060 Super/RTX 2060

Although the RTX 3060 can manage 1440P using DLSS Performance, we would suggest also lowering some individual settings for a more fluid playing experience. It makes an excellent 1080P card using DLSS Quality or DLSS Performance.

The RTX 2060 Super performs only slightly below the RTX 3060 and the same recommendations apply as above.

The RTX 2060 is the entry-level RTX card, and it is only suited for 1080P using DLSS Performance, or DLSS Quality with lower individual settings.

DLSS makes a huge difference to the playability of God of War at the highest settings, and Quality DLSS gives no image quality disadvantages whatsoever. It is highly recommended!

DLSS gains a large percentage of performance over not using it, and it looks at least as good. It is really "free performance" without compromise. We see the RTX 3070 Ti and RTX 2080 Ti playing God of War decently with maximum settings at 4K(!), whereas without DLSS they struggled. DLSS isn't perfect, occasionally adding some very minor artifacting and potentially losing a little fine detail, but overall Quality DLSS looks great with the camera in motion and is visually equal to not using it. Balanced DLSS is only incrementally more of a compromise, and Performance DLSS still provides acceptable image quality for most gamers while giving even higher framerates.

Any algorithm that doesn't use AI, such as FidelityFX Super Resolution, simply cannot match the image quality of DLSS, and there is every reason for an RTX gamer to use DLSS in God of War. In addition to DLSS, NVIDIA Reflex is available in-game for GeForce gamers to lower latency. We will cover Reflex and latency in a future review.

Conclusion

God of War is an outstanding and really fun game that gives a great cinematic experience while telling an exceptionally deep story. We are especially impressed by its visuals coupled with the large free performance increase that DLSS brings to it for RTX gamers.

Next up is a DDR5 5200MHz review comparing it with DDR4 3600MHz performance across 31 games, and Rodrigo is hard at work on his GeForce 511.23 driver performance analysis, also featuring God of War. We also have a super-fast 7,000MB/s SSD on deck for review, followed by a look at much faster DDR5 6000MHz and 6400MHz memory focusing on latency, due by this weekend. Stay tuned to BTR!

Happy Gaming!
