For the past four years, we’ve watched VR (Virtual Reality) grow from a fun curiosity into a wide-ranging informational, educational and entertainment medium. The reviews, depending on the content viewed, range from painful to exquisite.

One of the biggest issues often heard from filmmakers has been the inability to control/manage the viewer’s experience and tell a compelling story, a scripted drama.

That barrier was broken late last year when Doug Liman (The Bourne Identity, Edge of Tomorrow) decided to push the envelope of the evolving technology and develop not just a VR documentary but a major VR production, which ultimately became the episodic series, Invisible.

One of the first to join the effort was Lewis Smithingham of 30 Ninjas.

Q – Please give us an overview of the project and some background.

Lewis – When Doug gets into a project, he does it with an open mind as to where it will go, how it will end up and how it’s going to progress. He constantly iterates and strives for the absolute best. That’s vital when you’re creating a VR film and especially when you want to produce something as totally new as a scripted VR film.

As a team, we screened all of the scripted VR shorts that were available back in early 2015. Our favorite was Agnes Die, a short from our friends at CreamVR. We liked that one because it combined cinematic editing techniques with 360 video. We took apart each aspect of production and then figured out how to get it to work for VR.

One of the things we have often heard – and is claimed even today – is that you can’t script the VR storyline because the viewer can go in a totally different direction and leave the film completely, which is totally wrong. You can give audio and visual cues to keep the viewer in the story. Invisible proves it can be done. If you give someone something interesting to look at, they will look at it. As Doug says, “Tolerance for editing is directly related to quality of storytelling.”

In our opinion, Invisible really pushes the technology and surpasses people’s expectations. I think it represents a new wave of cinematic content and immersive storytelling styles. There are things that happen in Invisible that you just don’t see in other VR series: in particular, fast editing, action, immersive lighting and parallel editing.

The series is set in modern day Manhattan where the dynastic Ashland family has passed on a gene that gives them an extraordinary ability that enables them to accumulate vast amounts of power and wealth. As the modern generation faces diminished skills and more scrutiny than ever before, the birth of an exceptional new child draws public interest; and their family secret teeters on the brink of becoming exposed because a rather brutal genetic research lab is about to uncover exactly what gives certain Ashlands their ability.

Q – The trailer certainly “sets the mood” with aerial shots, split-screen conversations and even a funeral scene from inside the grave. How long did the project take?

Lewis – The project began in early 2015. I was brought on for a series of test shots that summer. We used those to throw everything at the wall and see what stuck. They were closely viewed and reviewed to find out what would work, what wouldn’t and why. In particular, we found that we really like the emotion carried by low-angle shots and parallel editing. Invisible basically broke all of the VR guidelines – don’t cut too much, don’t move the camera, place shots at a natural viewer height. These rules are always funny to me. They are the same silly rules that American cinema attempted to follow in the teens and twenties, whilst the Soviets and Germans were making insanely amazing cinema. There are very few memorable films from American cinema in the teens and twenties (and those that are, are either Charlie Chaplin or offensive work from Griffith). Meanwhile, Metropolis is being remade.

The entire team’s objective was to do what tells the best story and explore how we could push the technology so we break people’s expectations.

Too many people are concerned about defining the rules of VR, but we were obsessed with inventing approaches that would deliver something new, different and refreshing for the viewer. We were obsessed with entertaining. Let’s worry about rules later.

Because of this process of constant iteration, we had a pretty long shooting schedule – something like 25 days over 7 months.

Q – What was your shooting, production hardware/software?

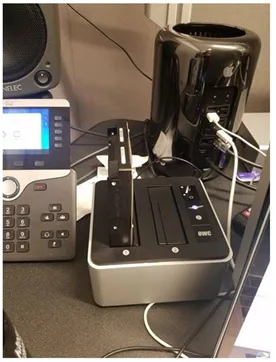

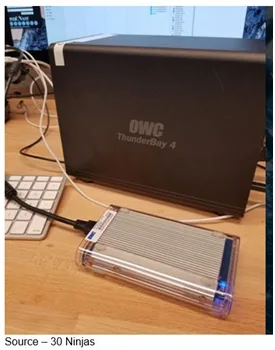

Lewis – We shot primarily on the Jaunt One but also included some GoPro and A7S shots. Our post production system was a Dell 7910 and a number of OWC ThunderBay 4 RAID storage solutions.

You have to understand that everything is bigger in VR when compared to standard 4K film production. Standard 4K is 4096×2048. VR is 4096×4096 at 59.94 frames per second, which means you need high-performance, reliable horsepower and beefy machines that can crank out footage fast.
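To put rough numbers on that comparison, here is a minimal back-of-the-envelope sketch. It uses only the frame sizes and frame rate quoted above. The 4 bytes per pixel figure is an assumption (10-bit RGB DPX with three samples packed into one 32-bit word, file headers ignored), not something stated by the production, and the function names are illustrative.

```python
# Frame sizes and frame rate as quoted in the interview.
FLAT_4K = (4096, 2048)
VR_FRAME = (4096, 4096)
FPS = 59.94

# Assumption: 10-bit RGB DPX, three 10-bit samples packed into a 32-bit word.
BYTES_PER_PIXEL = 4


def frame_bytes(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Uncompressed image payload of one frame in bytes (header ignored)."""
    return width * height * bytes_per_pixel


def data_rate_gb_per_s(width, height, fps):
    """Sustained read rate for real-time playback, in decimal GB per second."""
    return frame_bytes(width, height) * fps / 1e9


if __name__ == "__main__":
    ratio = frame_bytes(*VR_FRAME) / frame_bytes(*FLAT_4K)
    rate = data_rate_gb_per_s(*VR_FRAME, FPS)
    print(f"One VR frame carries {ratio:.1f}x the data of the flat 4K frame")
    print(f"Uncompressed VR playback needs roughly {rate:.2f} GB/s")
```

Under these assumptions, each VR frame is twice the size of the flat frame, and the higher frame rate multiplies the sustained throughput the storage has to deliver.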

Our master DPX (digital picture exchange) was 22TB on one of our ThunderBay 4s and the entire series required about 125TB of fast, reliable storage, just to be backed up once.

For our post work, we primarily relied on Adobe Premiere, Mettle SkyBox, C4D, Nuke and Assimilate Scratch.

Q – Because this was such a data-intensive project and you’re dealing with 360-degree content, how many archive copy stages did you have?

Lewis – I am absolutely paranoid when it comes to having copies of copies of copies because even with the best post techniques, there’s only so much masking you can do of lost frames and reshooting. Having a scene disappear into the atmosphere is not something I want to tell Doug, Julina or any director about.

OWC RAID units have become my “weapon of choice” in protecting the content because they are inexpensive, reliable and so easy to deal with. I assume even the “high-end” hard drives that firms make will fail, so 30 Ninjas’ SOP (standard operating procedure) has been to keep three back-ups of every RAW file and then back that up to LTO. A long fall into a puddle doesn’t care who made your drive.
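The "three copies of everything" habit described above can be sketched in a few lines. This is an illustrative example only, not 30 Ninjas' actual tooling: the function names and folder layout are hypothetical, and the LTO step is left out because it depends on the tape software in use. The sketch copies a file to several destinations and confirms each copy against a SHA-256 checksum of the source.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large media files never load fully into RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source, destinations):
    """Copy `source` into each destination folder and verify every copy.

    `destinations` is a hypothetical list of folders, e.g. three RAID volumes.
    Raises IOError if any copy does not match the source checksum.
    """
    source = Path(source)
    expected = sha256_of(source)
    copies = []
    for folder in destinations:
        folder = Path(folder)
        folder.mkdir(parents=True, exist_ok=True)
        target = folder / source.name
        shutil.copy2(source, target)  # copy2 also preserves timestamps
        if sha256_of(target) != expected:
            raise IOError(f"checksum mismatch for {target}")
        copies.append(target)
    return copies
```

Verifying the checksum right after each copy is the point of the exercise: a copy that was never checked is only a hope, not a back-up.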

The entire series was edited in 2304×2304 proxies and synced via Dropbox across multiple machines. In this way, we could send files over long distances from one system to another during the editing process and never have to worry that a solar flare or some natural or manmade disaster would force us to redo a scene or production/post step.

Q – Can you give us a simplified description of your workflow, FX, colorization, audio?

Lewis – There’s a lot being written on VR workflow right now because we are still in the early, growing stages. There really are no “standard” techniques we used because we were pushing the envelope on the technology, what it could do and what we could do.

Basically, it consisted of ingest and cloud upload for stitch, then proxy download for edit. Once the picture was locked, it headed over to a great house we worked with called The Molecule for VFX and fine stitching. They handed over DPX sequences that were brought back into Premiere. We then output again as DPX, finally bringing it into Scratch with Local Heros for export.

In many instances, this process was even followed for individual shots.

Audio was a whole different beast because the film was delivered in Dolby Atmos, a new audio format for creating and playing back multichannel movie soundtracks. It gives Invisible a more three-dimensional effect for optimum viewer impact. Our sound department is truly amazing. Tim Gedemer, over at Source Sound, really pulled off something incredible.

We shot with Ambisonic mics as well as standard audio mics. As a result, we ended up with a huge amount of data. For example, with the phone call scene in segment one, the scene was a 4-way split screen. The segment wasn’t that long but we ended up working with 59 tracks of audio. It was system/process-intensive but the final segment was well worth the effort.

Q – In producing one of the first, if not the first, scripted episodic VR series, what “tricks of the trade” were developed during the project and what improvements did your crew and you help hardware/software manufacturers with?

Lewis – With a scripted project like this – or any VR project – it is absolutely necessary that the editor be involved throughout the production so shots can be planned for edit. We had a very strong, professional creative and technical crew that worked very well together.

I developed a way to “workshop” edits by laying down a sheet of clear plexi and tracking where people looked using different colored markers. As in CinemaScope, fast editing in VR requires the editor to predict where people are looking; so, this was like a hand-made, sloppy heatmap.
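A software analogue of that hand-made heatmap might look like the sketch below. It is not the method used on Invisible, which was done with plexi and markers. It assumes gaze samples are available as (yaw, pitch) pairs in degrees, and the grid size and function names are hypothetical. Each sample is binned into a coarse grid over the 360-degree frame so the busiest cell can be found.

```python
from collections import Counter


def gaze_heatmap(samples, yaw_bins=12, pitch_bins=6):
    """Count gaze samples per cell of a coarse grid over the 360-degree frame.

    samples: iterable of (yaw, pitch) in degrees, with yaw wrapping at +/-180
    and pitch running from -90 (straight down) to 90 (straight up).
    Returns a Counter keyed by (row, col).
    """
    counts = Counter()
    for yaw, pitch in samples:
        # Wrap yaw into [0, 360) and scale to a column index.
        col = int(((yaw + 180.0) % 360.0) / 360.0 * yaw_bins)
        col = min(col, yaw_bins - 1)
        # Scale pitch to a row index and clamp to the grid.
        row = int((pitch + 90.0) / 180.0 * pitch_bins)
        row = max(0, min(row, pitch_bins - 1))
        counts[(row, col)] += 1
    return counts


def hottest_cell(counts):
    """Return the (row, col) cell that collected the most gaze samples."""
    return counts.most_common(1)[0][0]
```

An editor could compare the hottest cell at the end of one shot with the point of interest at the start of the next to judge whether a fast cut will land where viewers are already looking.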

Because Invisible is truly a robust 360-degree VR series, everything was new, different, stretched to the limits; and no idea was really off limits. We created our own film school, learning from each other as we shot and edited. The editor has to take into account where the viewer is likely to look and edit accordingly.

Q – Invisible is the first of what we hope will be a growing library of episodic films. Will it stand the test of time as we view it again in 5 or 10 years?

Lewis – That is a very intriguing question. Invisible is groundbreaking because it is a fun, compelling piece of entertainment that is very immersive. I think about Moore’s Law a lot in this context. I think 10 years of media time now is probably equal to 20 or 30 years. So, I think in 10 years’ time, we might look at Invisible the way we look at Metropolis, The Cabinet of Dr. Caligari or Battleship Potemkin. There are elements that will definitely hold up, but other things will feel very primitive.

I’m confident Invisible will attract an ever-growing audience; and I can say with confidence there will be additional seasons.

# # #