SMPTE Paves the Way for Content in the Cloud, VR

Elon Musk probably didn’t realize he missed a great opportunity to find out what his “competitor” was doing and how to prepare for his first trip to Mars.

Okay, that didn’t sum up this year’s SMPTE (Society of Motion Picture and Television Engineers) Annual Technical Conference, but attendees did get a lot of valuable information on how fast work is changing for visual storytellers.

The emphasis of this year’s SMPTE technical event was on working in the cloud and on VR.

And there were some nice surprises along the way.

Rodney Grubbs, NASA Imagery Experts Program Manager, showed how the agency has used film work to prepare people for the unknown. His presentation title could use a little work (“More and Better Pixels, How NASA Plans to use HDR, 4K, VR and Other Technologies to Take Everyone Along for the Ride to Mars”), but by the end of his talk it was clear that the standards group was on the right track.

He outlined how closely NASA is working with the industry on HDR, cameras with 4K and higher spatial resolution, VR cameras and other technologies that will ultimately become standard tools for filmmakers.

Based on the other presentations and discussions during the breaks, change is accelerating.

When the industry delivers everything on Grubbs’ wish list, NASA could be running great promos for Musk’s ticket sales on its new UHD channel.

Unrelated Highlight

The annual technical conference is crammed with great technology discussions, but this year SMPTE’s HPA (Hollywood Professional Association) also held its second student film festival, with entries from around the globe. Frankly, these kids are good … damn good!

I think Howard Lukk, SMPTE Director of Engineering and Standards, was glad he only had to host the awards instead of figuring out who did the better job.

The entries were so professional that the Audience Choice Award ended in a three-way tie. The winners were Anna Dining of RIT (Rochester Institute of Technology) for her short At the Game; Christina Faraj and Alice Gavish of the School of Visual Arts, NY, for Unmasked; and CHAN Ming Chun of the Hong Kong Design Institute for Rhapsody. Rhapsody was also recognized for the best use of VR for storytelling and proved that VR is more than just a passing fancy.

Filmmakers who work in VR, like Lewis Smithingham of 30 Ninjas, and many speakers throughout the conference noted that people are still experimenting with, testing and refining VR techniques and technology, but done right, it’s … awesome!

Seeing all the entries young men and women submitted from around the globe, it’s great to know that visual storytelling is a universal language.

OK, I’ll say it … this professional-level content is also 100 percent better than the endless YouTube cat videos and stupid-people stunts!

Working in the Cloud

I’ll probably be one of the last to jump wholeheartedly into the deep end of the cloud, but people like my kids, and film folks whose budgets (time and money) drive every decision, see it as the logical home for 50 to 70 percent of their work.

A lot of progress has been made in the software-defined video pipeline, but speakers and attendees were still wrestling with how to get all the components connected in the IP (Internet Protocol) world.

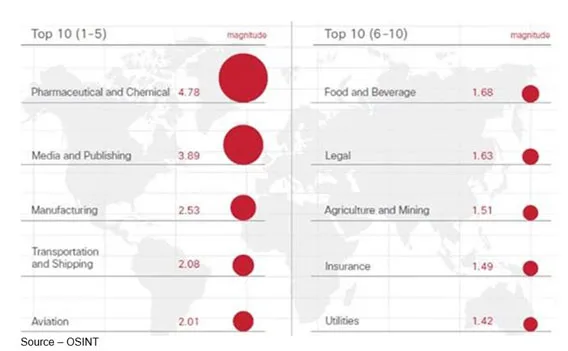

While SMPTE is working on establishing standards, there are still issues outside the creative person’s control like adequate bandwidth, network reliability, jitter, latency and those gut-wrenching issues of performance and security.

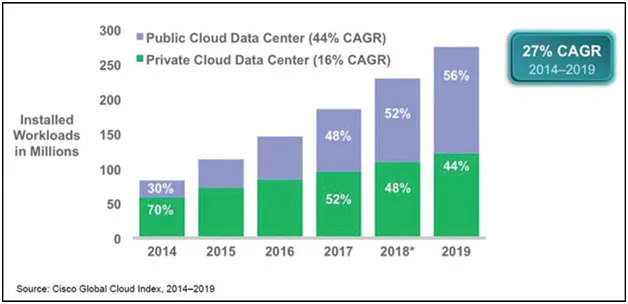

The move seems inevitable because neither large, well-funded facilities nor indie filmmakers can invest the time and resources needed when technology is becoming obsolete so rapidly.

Several immediate uses of the cloud were discussed during the technical sessions including some logical applications of IBM’s Watson-powered cognitive services for video content analytics. Separately, the concept of cloud-based rendering farms was also discussed.

IBM announced their Cloud Video solutions and Media Insights Platform at SMPTE and will be expanding the discussion at the Watson Developers Conference in San Francisco early in November.

It might be a fairly secure and economical way for filmmakers to optimize their video content and better understand their audiences.

We’ll see.

Adobe, Avid, Autodesk and even Amazon are offering some very good, very economical production and post-production tools and services in their clouds. Two that will probably be welcomed with open arms are transcoding and rendering in the cloud.

Transcoding is sort of a “well, yeah” type thing because it has become a necessary evil for a film of any length. The industry has had to move all of its analog content to digital and then make sure it could be shown at its best regardless of the viewing screen – theatre, TV, tablet, smartphone.

Annie Chang, Vice President of Technology for Marvel Studios and one of the leading women in the Hollywood tech community, is credited with helping Disney (Marvel’s parent company) transition from tapes to files.

Also known for shaping technology standards and strategies, as well as helping research and implement new post-production and mastering technologies, Chang was recently invited to join the Science and Technology Council of the Academy of Motion Picture Arts and Sciences, where she will continue to champion today’s breakthroughs.

While transcoding was a commodity service everyone needed, it wasn’t necessarily accomplished in-house.

Even with efficient, effective solutions from Autodesk, NVIDIA, Dell and HP, filmmakers often say they have to wear asbestos gloves when they’re doing a lot of CGI (computer-generated imagery) or FX (special effects) rendering.

And it ain’t cheap.

Using less expensive but still powerful systems locally for most of the work, and farming out the heavy-lifting VFX work, can save a filmmaker a lot of time and frustration.

The biggest issue in the back of everyone’s mind is security. About the best I can say is that cloud providers have really smart security people working on it round-the-clock, round-the-calendar, but still … stuff happens.

Maybe, by the time SMPTE turns 125, it will no longer be an issue.

Rush to Immersion

Last year’s SMPTE had a great presentation by AMD on the state (and future) of VR, as well as a cool eyes-on, mind-on showing of Fox’s The Martian VR Experience.

If you strapped on the still rather dumb-looking HMD (head-mounted display) and walked around Mars, you knew there were going to be some great immersive films … someday.

Mary-Luc Champel, standards director for MPEG (Moving Picture Experts Group) and ATSC (Advanced Television Systems Committee) work, noted that in studies as many as half of the viewers got physically ill, and that the industry would have to move slowly so VR didn’t suffer the same fate as 3DTV.

VR can be breathtaking, but he warned that its success depends on viewers having a positive overall experience.

He and other speakers noted that Gen Zers seemed to adapt better than Millennials or Boomers to the experience and the sensory conflict.

Another speaker, Pierre (Pete) Routhier, president of Digital Troublemaker, said VR is coming fast, but cameras, HMDs, interoperable formats, compression and delivery standards are all still “a work in progress.”

The biggest challenge is that camera and HMD producers are all pushing for standards built around their technology and somewhere there has to be a common ground.

A VR Premiere

While SMPTE was informative and great for networking, I spent an extra evening in town to take in a VR premiere.

It just so happened that Doug Liman (producer and director of Edge of Tomorrow, The Bourne Identity and The Bourne Ultimatum) and Lewis Smithingham, CTO of 30 Ninjas, were kicking off one of the first scripted VR series, Invisible.

How could I resist?

Lewis had been telling me how good it was going to be (without mentioning the plot) for nearly a year.

Invisible was Liman’s first foray into VR and rather than the typical documentary or first-person VR film, he tackled the project as a scripted storyteller.

You can catch the trailer online at several locations including Jaunt and http://uploadvr.com/invisible-jaunt-liman/.

Lewis said the episodic series is about the wealthy Ashlands family and a secret, shared by certain members of the family, that enables them to turn invisible.

Lewis shot everything with a Jaunt ONE VR camera and you can view it with a Samsung HMD or other VR device.

Lewis noted that it’s unique because you feel as if you’re in the room with the characters: when one walks to the left, you can follow them. You can also look around other areas of the room if you want.

Since the storytelling approach had never been done before, it made scripting, scene set-up, shooting and post work a constant trial-and-error effort. “All of us, including Doug, tried things that worked great in traditional filmmaking but were disasters with VR,” Lewis said. “So the entire team had to tune our thinking/approach until it felt right for the viewer.”

Lewis began working with VR in 2014 when it was really in its infancy and had to create a whole new storytelling language working with the earliest (primitive) tools, hardware and techniques.

Working at the forefront of the new narrative form, he has pushed his hardware to the point of meltdown, cooking iPhones, GPUs (graphics processing units) and computers.

That’s probably because he was working with 4K-by-4K, 59-frames-per-second DPX (Digital Picture Exchange) files that are roughly 85MB per frame, which demands extreme amounts of storage, bandwidth and processing power.
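For a sense of scale, here is a rough back-of-the-envelope calculation that uses only the figures quoted above (about 85MB per frame at 59 frames per second). It assumes decimal units (1TB = 1,000,000MB), ignores audio, metadata and any overhead, and uses a 15-minute clip purely as an example length; it is an illustration, not a description of Smithingham’s actual pipeline.

```python
# Back-of-the-envelope data rates for uncompressed DPX VR footage.
# Assumptions: ~85 MB per frame and 59 fps (figures quoted in the article),
# decimal units (1 TB = 1,000,000 MB), no audio or metadata overhead.
MB_PER_FRAME = 85
FRAMES_PER_SECOND = 59


def data_rate_mb_per_s(mb_per_frame: float, fps: float) -> float:
    """Sustained throughput needed to read or write every frame in real time."""
    return mb_per_frame * fps


def footage_size_tb(mb_per_frame: float, fps: float, minutes: float) -> float:
    """Total storage for a clip of the given length, in decimal terabytes."""
    return data_rate_mb_per_s(mb_per_frame, fps) * minutes * 60 / 1_000_000


if __name__ == "__main__":
    rate = data_rate_mb_per_s(MB_PER_FRAME, FRAMES_PER_SECOND)
    print(f"{rate:,.0f} MB/s")  # 5,015 MB/s
    print(f"{footage_size_tb(MB_PER_FRAME, FRAMES_PER_SECOND, 1):.2f} TB per minute")
    print(f"{footage_size_tb(MB_PER_FRAME, FRAMES_PER_SECOND, 15):.2f} TB per 15 minutes")
```

Under these assumptions, real-time playback needs roughly 5GB per second of sustained throughput, and every minute of footage takes about 0.3TB before any compression, which helps explain the melted hardware.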

While Liman and Smithingham acknowledge that some viewers may experience disorientation and nausea, they feel the episodic approach (less than 15 minutes per episode) may be the best way to enjoy the total immersion experience.

“Spending up to eight hours a day, six days a week in a headset is a sure way to guarantee you’re going to have a helluva nauseating migraine by the weekend,” Smithingham joked. “I wanted to do everything possible to ensure that didn’t happen to the viewer.”

Technology (hardware and software) has advanced rapidly since work on Invisible started a year ago. Today, Smithingham is using a heavy-duty Dell workstation he named “War Machine,” a ton of storage and Adobe’s latest tools.

Smithingham emphasized that to produce really breathtaking and comfortable VR, shooters/producers have to have the right hardware that can render with a good refresh rate, a high frame rate and no stutter.

He wouldn’t give any hints about what he and Liman are considering for their next episodic projects, except to say, “Ideas are being considered.”

My guess is they never want to look back like Terry Malloy did in On the Waterfront and say, “You don’t understand. I coulda had class. I coulda been a contender. I coulda been somebody.”

I doubt that will ever happen.

# # #