The Conclusion of our Investigation into NVIDIA’s Single Pass Stereo Technology

Our first deep-dive into simulation virtual reality (VR) performance attempted to address the question: “What is Single Pass Stereo (SPS), and does it improve performance in iRacing?” Under the original test conditions, we concluded that it did not, at least not in a meaningful way. A short time later, additional research and feedback left us feeling that SPS had not been sufficiently explored.

So we’re back, this time with more cars, more track, and RTX! Buckle up as we saturate our GPUs to the breaking point in Part II of our search for SPS VR performance in iRacing.

Understanding NVIDIA VRWorks Single Pass Stereo and Multi-View Rendering

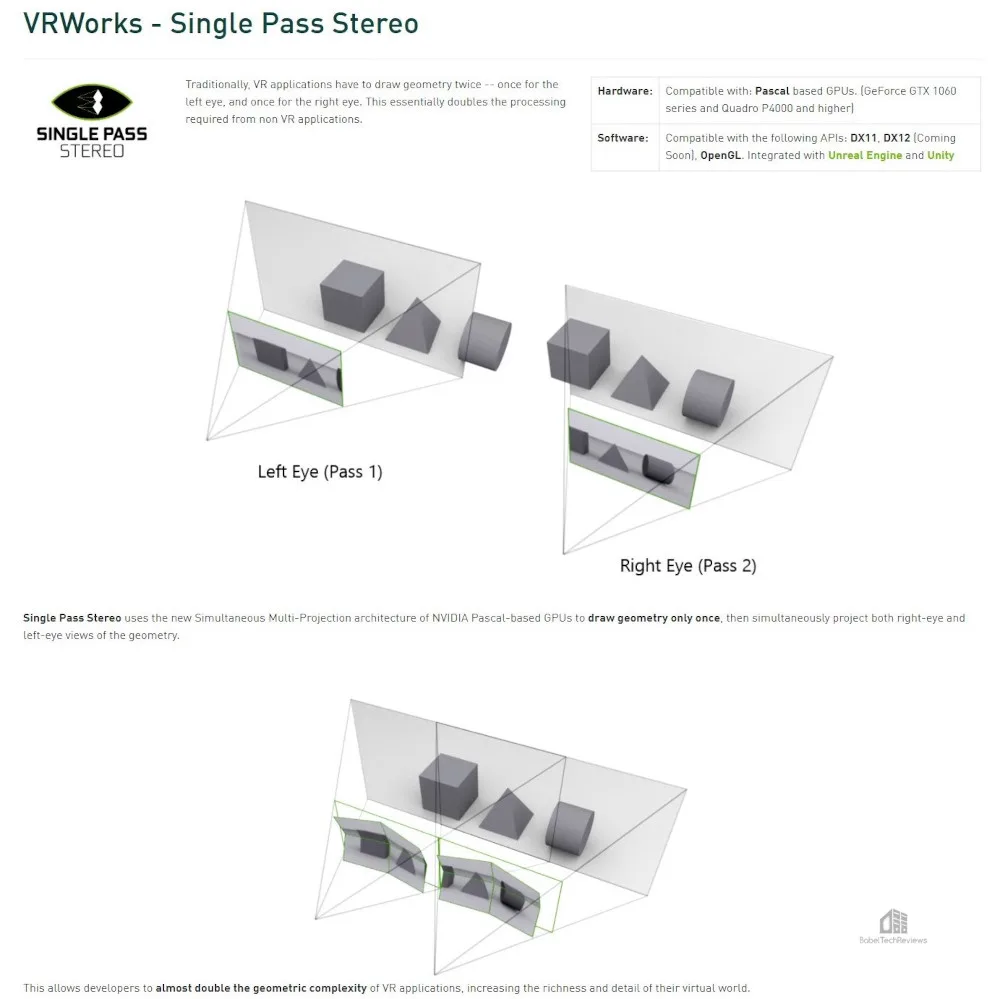

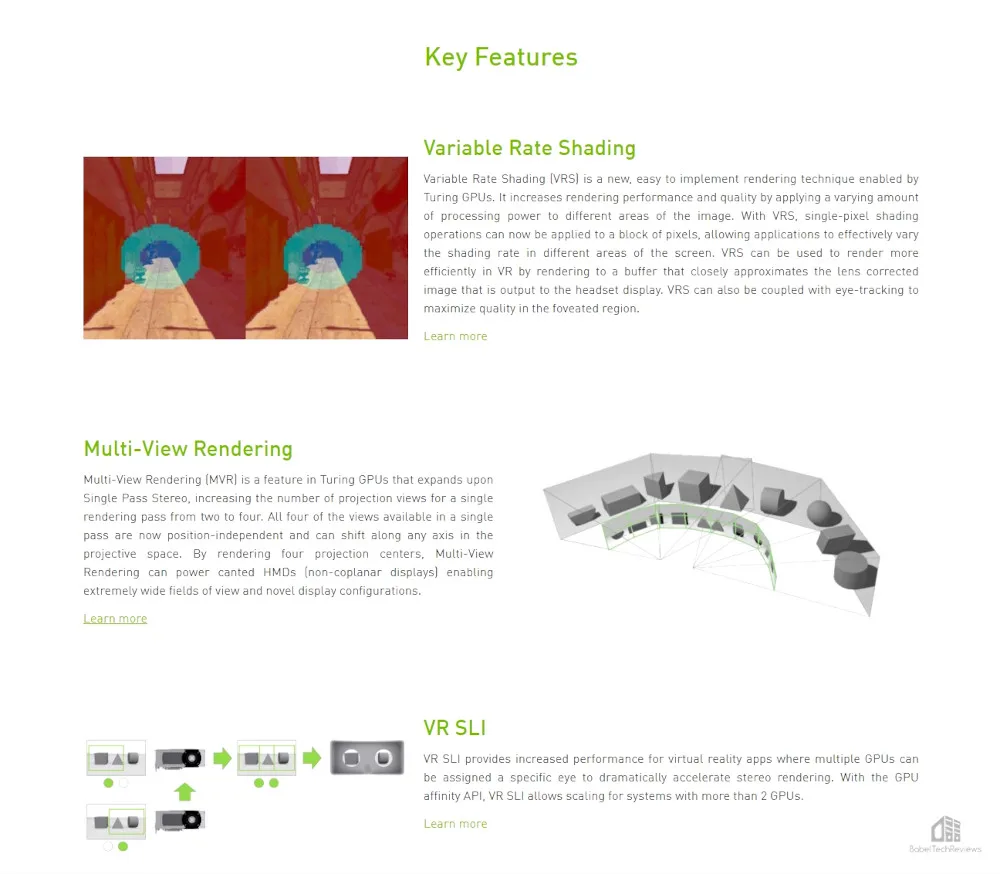

To understand Single Pass Stereo (SPS), one must first understand a basic tenet of VR: everything is drawn twice. Unlike gaming on a monitor, where the scene is rendered from a single point of view, VR demands both a left-eye and a right-eye image, each slightly different so as to present properly in spatial 3D. SPS (Pascal architecture) and MVR (Multi-View Rendering, Turing/Ampere architecture) are NVIDIA VRWorks features that reduce geometry calculations from two passes to one per frame in VR. For additional information on NVIDIA VRWorks and how SPS functions, please see our original review.

The SPS Process

While conducting research for these articles, I had the pleasure of interviewing Ilias Kapouranis, a VR Software Engineer and specialist on VRWorks technical details. Since SPS/MVR only aids in accelerating GPU vertex (geometry) calculations, I asked him how or even if the CPU significantly factors into the SPS performance equation. He replied:

“… Shaders change the way of producing vertices from two separate computations per eye to one computation for both eyes. From a very high level view, yes the geometry computation is halved but the developers have to greatly optimize their vertex shaders in order to actually achieve this theoretical peak. Since SPS is not available in the fragment (or pixel) shaders, the work that is being done there is not affected OR halved.

Without SPS:

- Send the world data

- Send the left eye data

- Send how to process the left vertex data

- Send how to process the left fragment(pixel) data – Then submit the left eye to the HMD

- Send the right eye data

- Send how to process the right vertex data

- Send how to process the right fragment(pixel) data – Then submit the right eye to the HMD

With SPS:

- Send the world data

- Send the left eye data

- Send the right eye data

- Send how to process the vertex data

- Send how to process the left fragment(pixel) data – Then submit the left eye to the HMD

- Send how to process the right fragment(pixel) data – Then submit the right eye to the HMD

…When SPS / MVR is enabled we don’t send “how to process the vertex data” twice. This is translated into API calls. These calls have to leave the program, communicate with the graphics driver, then the graphics driver has to validate the API calls before forwarding them to the low level hardware driver. This is quite a long chain of communication but it doesn’t have any effect in PCVR because modern desktop CPUs are exceedingly fast when compared to mobile chipsets…”

-Ilias Kapouranis 10/24/2020
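The two step lists in the quote above can be compared with a small toy model. This is only a sketch: the step names are paraphrased from the interview, the function names are hypothetical, and nothing here calls a real graphics API or VRWorks itself.

```python
# Toy model of the per-frame submission steps described in the interview.
# Step names are paraphrased; no real graphics API is involved.

def frame_steps(sps_enabled: bool) -> list:
    """Return the ordered list of per-frame submission steps."""
    steps = ["world data"]
    if sps_enabled:
        # Both eyes' data is sent up front, then a single vertex pass covers both.
        steps += ["left eye data", "right eye data", "vertex processing (both eyes)"]
        steps += ["left fragment processing", "right fragment processing"]
    else:
        # Each eye gets its own data, vertex pass, and fragment pass.
        for eye in ("left", "right"):
            steps += [f"{eye} eye data", f"{eye} vertex processing", f"{eye} fragment processing"]
    return steps


def count(steps: list, keyword: str) -> int:
    """Count the steps whose name contains the keyword."""
    return sum(keyword in step for step in steps)


without_sps = frame_steps(sps_enabled=False)
with_sps = frame_steps(sps_enabled=True)

for label, steps in (("SPS Off", without_sps), ("SPS On", with_sps)):
    print(label, "| steps:", len(steps),
          "| vertex passes:", count(steps, "vertex"),
          "| fragment passes:", count(steps, "fragment"))
```

In this model the vertex pass count drops from two to one while the fragment pass count stays at two, which mirrors the point in the quote that fragment (pixel) work is not halved.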

Hardware

- CPU: AMD Ryzen 9 3900X (12c/24t) – PBO 4.5GHz Boost

- Motherboard: ASUS ROG Crosshair VII Hero X470 – BIOS 3004

- Memory: Crucial Ballistix RGB 3600 32GB DDR4 – 2x16GB, dual channel at 3600MHz

- GPU: EVGA GTX 1080 Ti SC2 – stock clocks

- GPU: NVIDIA RTX 2070 Founders Edition – stock clocks

- Sound Card: Creative Labs Sound Blaster AE-7

- SSD: Samsung 970 Evo 1TB NVME M.2

- PSU: Seasonic Focus+ Gold 850W

- VR HMD: Oculus Rift S – Quality Setting

- Sim Gear: Fanatec DD1 Wheelbase, Fanatec Formula V2 Rim, Fanatec CSL Elite LC pedals

Software

- Windows 10 Pro (V2004 19041.610)

- NVIDIA Driver Version 456.38 (9/17/2020)

- AMD Chipset Driver 2.07.14.327

- Oculus Software Version 20.0

- iRacing Version 2.27.0273 (Patched through 10/24/2020)

- NVIDIA FCAT VR Capture v3.26.0

- NVIDIA FCAT VR Analysis Beta 18

- Microsoft DirectX 12 (Patched current 10/24/2020)

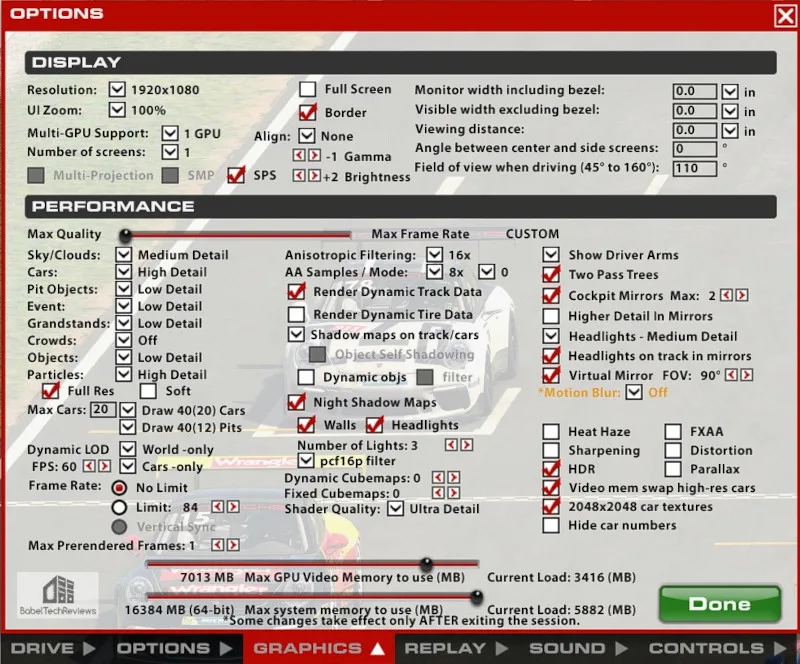

Graphics Settings

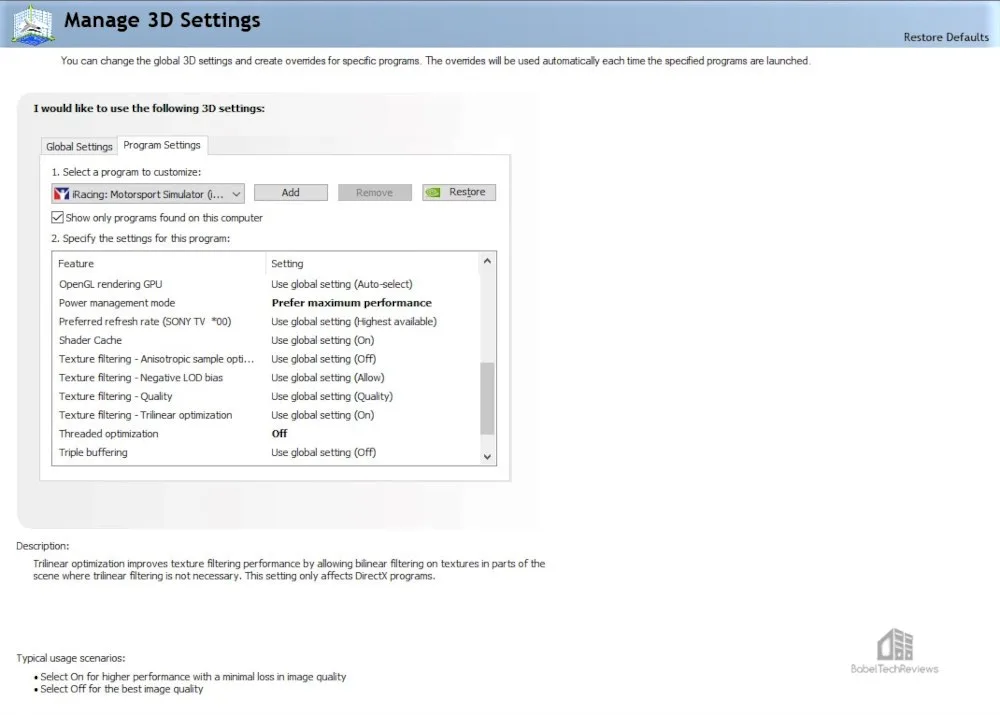

For visual quality and consistency, both configurations were set to identical NVIDIA driver settings and utilized a 120% supersampling rate as set through iRacing’s DX11 Configuration file.

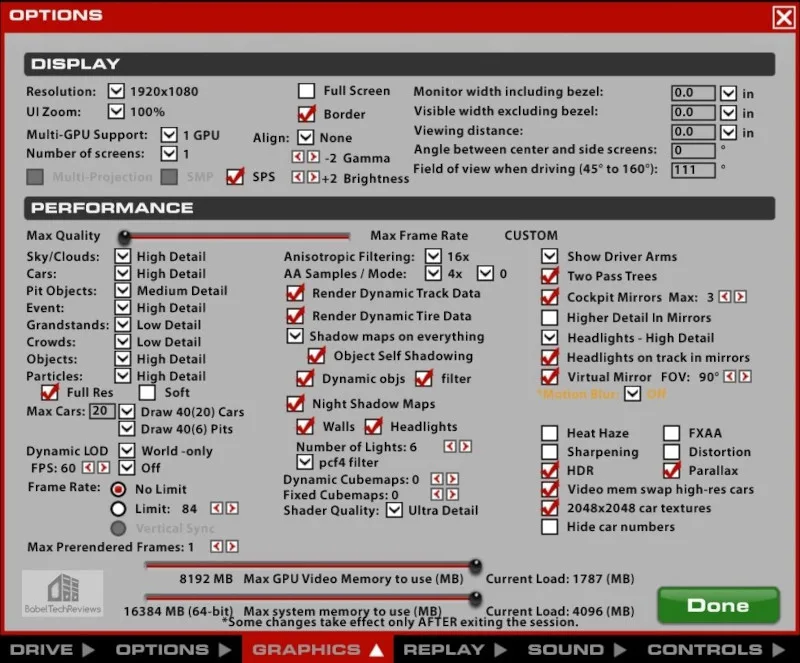

Initially, we utilized the same iRacing graphics settings as the first article. Those graphics settings will be referred to as ‘Standard Settings’.

It is important to note that these ‘Standard Settings’ did not allow the RTX 2070 to run the VR HMD as well as the GTX 1080 Ti, which is an older yet more powerful GPU. Under ‘Standard Settings’, the RTX 2070 spent significant time at 40 FPS (frames per second), generating synthetic frames via Asynchronous Spacewarp (ASW). Because we wanted to keep supersampling the same, and because the Barcelona Catalunya circuit is so demanding, new graphics settings were required.

With settings too low, the unconstrained framerate would be too high, potentially in the low hundreds. Running this fast would erase any noticeable difference between SPS On and SPS Off. We needed settings that allowed the RTX 2070 to run around 90 unconstrained FPS while still keeping crisp image quality (IQ). This would let us run the Rift S HMD at its nominal rate of 80 FPS and still allow us to see if SPS could keep the HMD out of ASW territory. After some testing, the following settings allowed the RTX 2070 to run at approximately 90 FPS unconstrained at all times.
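To put the 80 FPS target and the roughly 90 FPS unconstrained goal into frame-time terms, here is a minimal sketch. It assumes render time is simply the inverse of the unconstrained framerate, which ignores frame-to-frame variation; the function names are hypothetical.

```python
# Frame-time view of the Rift S target, assuming uniform render times.

RIFT_S_REFRESH_HZ = 80  # nominal Rift S rate stated in the article


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def headroom_ms(unconstrained_fps: float, refresh_hz: float = RIFT_S_REFRESH_HZ) -> float:
    """Spare time per frame; a negative value means the budget is missed."""
    return frame_budget_ms(refresh_hz) - 1000.0 / unconstrained_fps


budget = frame_budget_ms(RIFT_S_REFRESH_HZ)
spare_at_90 = headroom_ms(90)

print(f"Budget at 80 Hz: {budget:.2f} ms")
print(f"Headroom at 90 unconstrained FPS: {spare_at_90:.2f} ms")
```

Under this simplification, a system averaging 90 unconstrained FPS has a little over 1 ms of spare time per frame, which is why a modest change in geometry cost can decide whether the HMD stays at 80 FPS or drops into ASW.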

In-Engine Benchmarking

Using a replay for benchmark purposes in iRacing is not an accurate test due to lighter CPU loading when compared with actual driving. Therefore, we created a consistent driving run that allowed us to produce accurate and repeatable results. The in-engine benchmark test was run three times for each GPU configuration at ‘High Settings’ for both SPS On and SPS Off. To better see what the RTX 2070 was truly capable of, a further three-run series of SPS On/Off tests was run under our new ‘Fast Settings’ standard. For each of the possible settings, the average of all three runs was used for data comparison purposes.

In total, 18 incident-free runs were recorded by NVIDIA’s FCAT VR at a capture length of 240 seconds (four minutes).
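The run count and total capture time follow directly from the test matrix. A short sketch of the arithmetic, using only figures stated in this article (the variable names are illustrative):

```python
# Test matrix arithmetic: GPU/settings combinations x SPS modes x repeats.

configurations = [
    ("GTX 1080 Ti", "High Settings"),
    ("RTX 2070 FE", "High Settings"),
    ("RTX 2070 FE", "Fast Settings"),
]
sps_modes = ("On", "Off")
runs_per_condition = 3
capture_seconds = 240  # FCAT VR capture length per run

total_runs = len(configurations) * len(sps_modes) * runs_per_condition
total_seconds = total_runs * capture_seconds
total_hours = total_seconds / 3600

print(f"{total_runs} runs, {total_seconds} s, {total_hours:.1f} h of captured driving")
```

This works out to 18 runs and 1.2 hours of captured driving.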

iRacing SPS Data Collection Run parameters

- iRacing Graphics Options (High and Fast Setting presets)

- NVIDIA FCAT VR recording started at green light on standing start (240 Seconds)

- iRacing AI Racing, Single Race

- Car – Porsche 911 GT3 Cup (991), Default Setup

- Track – Circuit de Barcelona Catalunya (Grand Prix layout)

- Weather – 80F, 55% Relative Humidity, Wind North 4mph, No Dynamic sky, No Variations

- Race – 4 laps, Damage off, Standing Start last place, 19 Opponents 50-80% skill

- Track Condition – Starting Track State 50%

- Time of Day – Noon, 12PM

- Upon race start, follow AI drivers, overtake to 15th place and hold

Over the course of testing, I learned the very demanding Circuit de Barcelona Catalunya and came to enjoy its mix of high-speed and technical sections. This demonstration video uses the above parameters with the RTX 2070, ‘Fast Settings’, and SPS On.

The following data acquisition runs were made. Total drive time: 1.2 hours

- GTX 1080 Ti – SPS On, SS 120%, High Settings – Runs 1, 2, 3

- GTX 1080 Ti – SPS Off, SS 120%, High Settings – Runs 4, 5, 6

- RTX 2070 FE – SPS On, SS 120%, High Settings – Runs 7, 8, 9

- RTX 2070 FE – SPS Off, SS 120%, High Settings – Runs 10, 11, 12

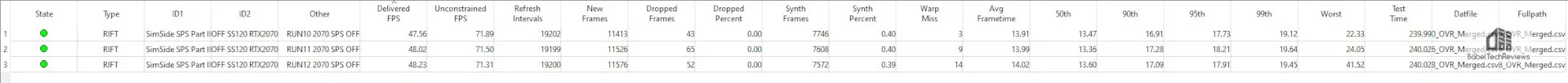

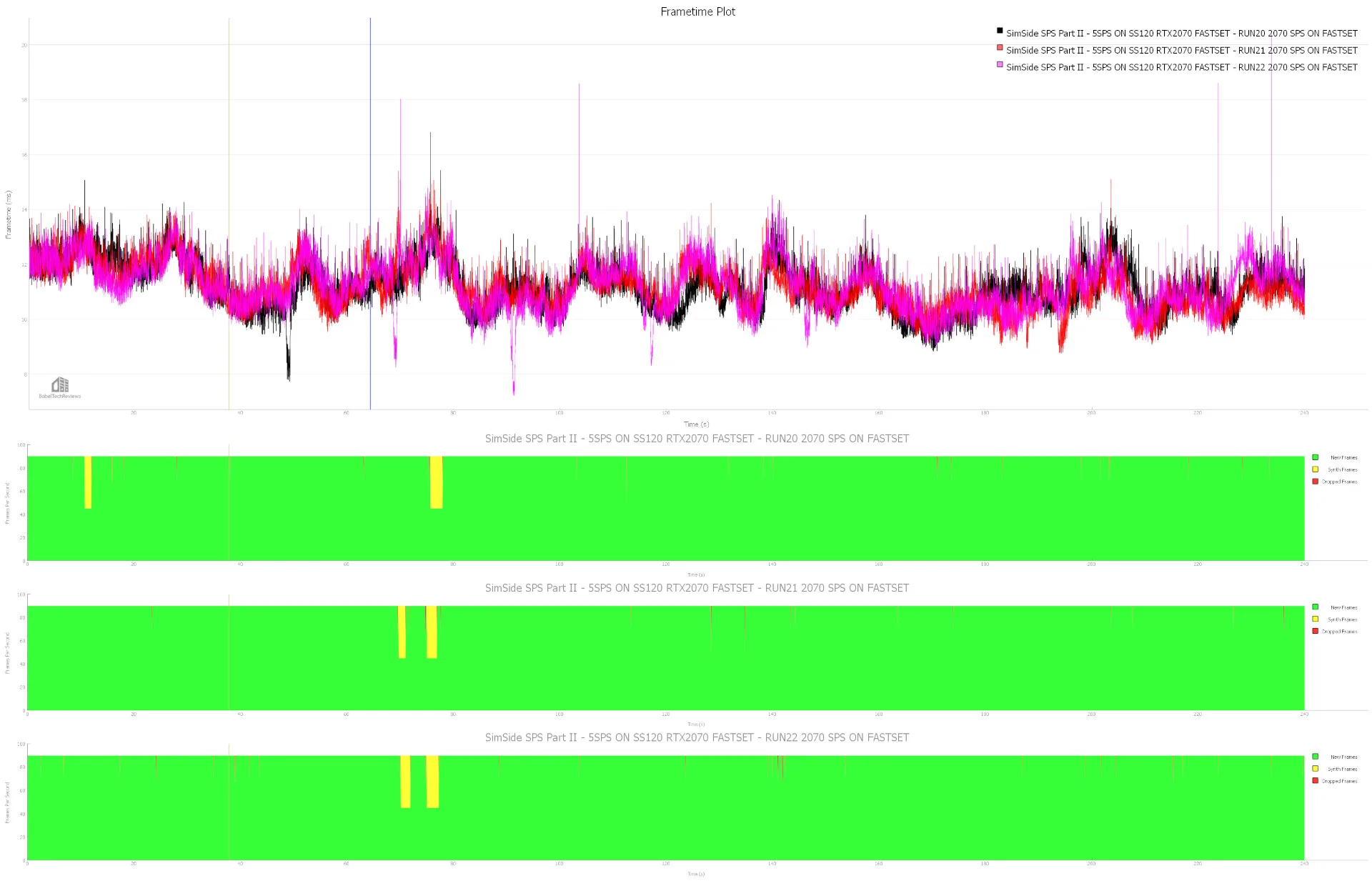

- RTX 2070 FE – SPS On, SS 120%, Fast Settings – Runs 20, 21, 22

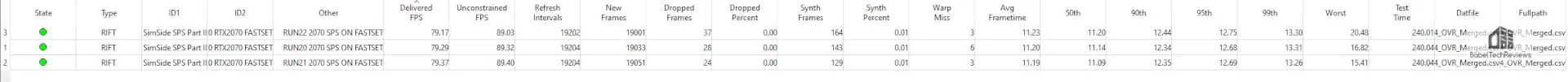

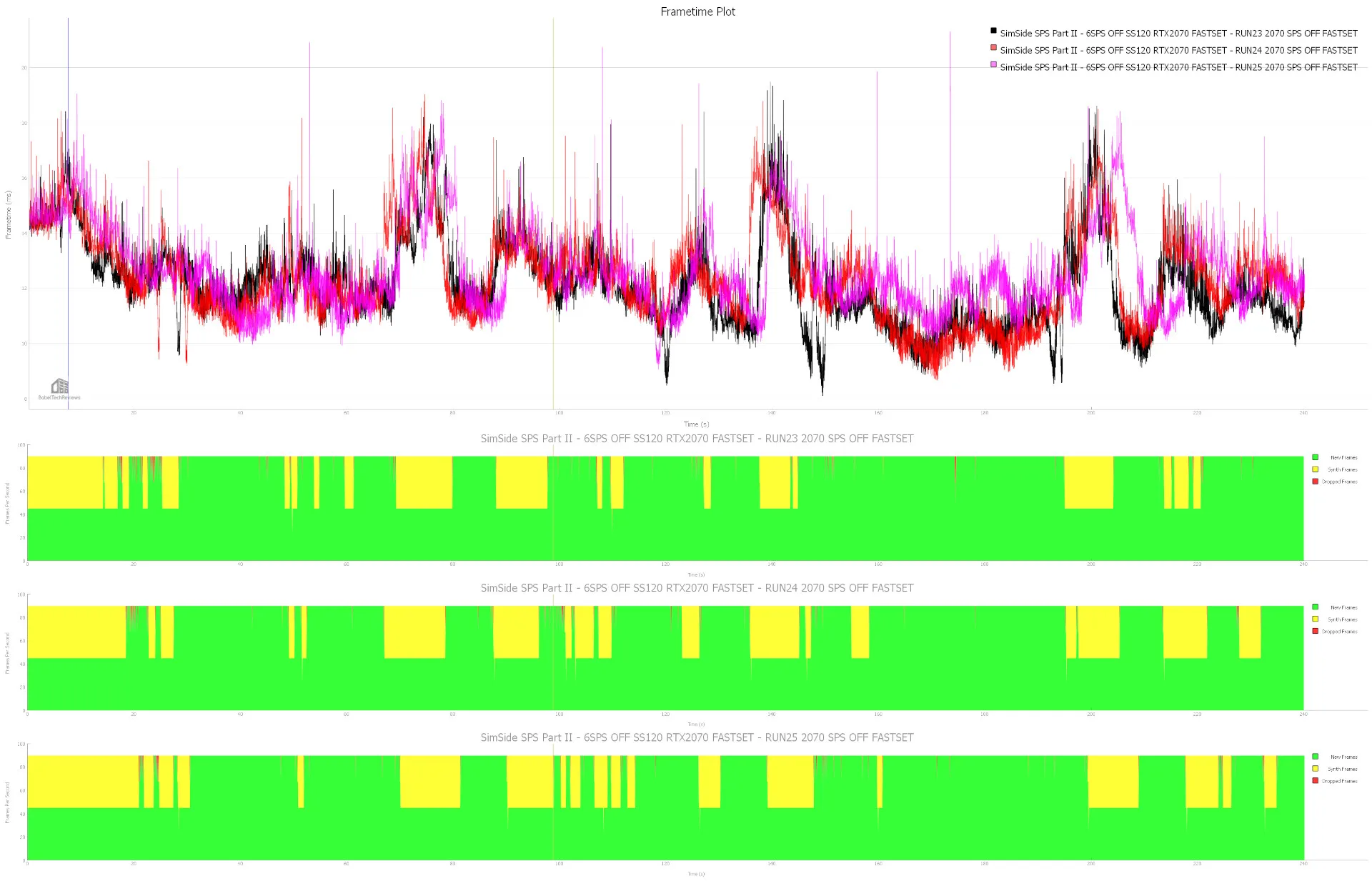

- RTX 2070 FE – SPS Off, SS 120%, Fast Settings – Runs 23, 24, 25

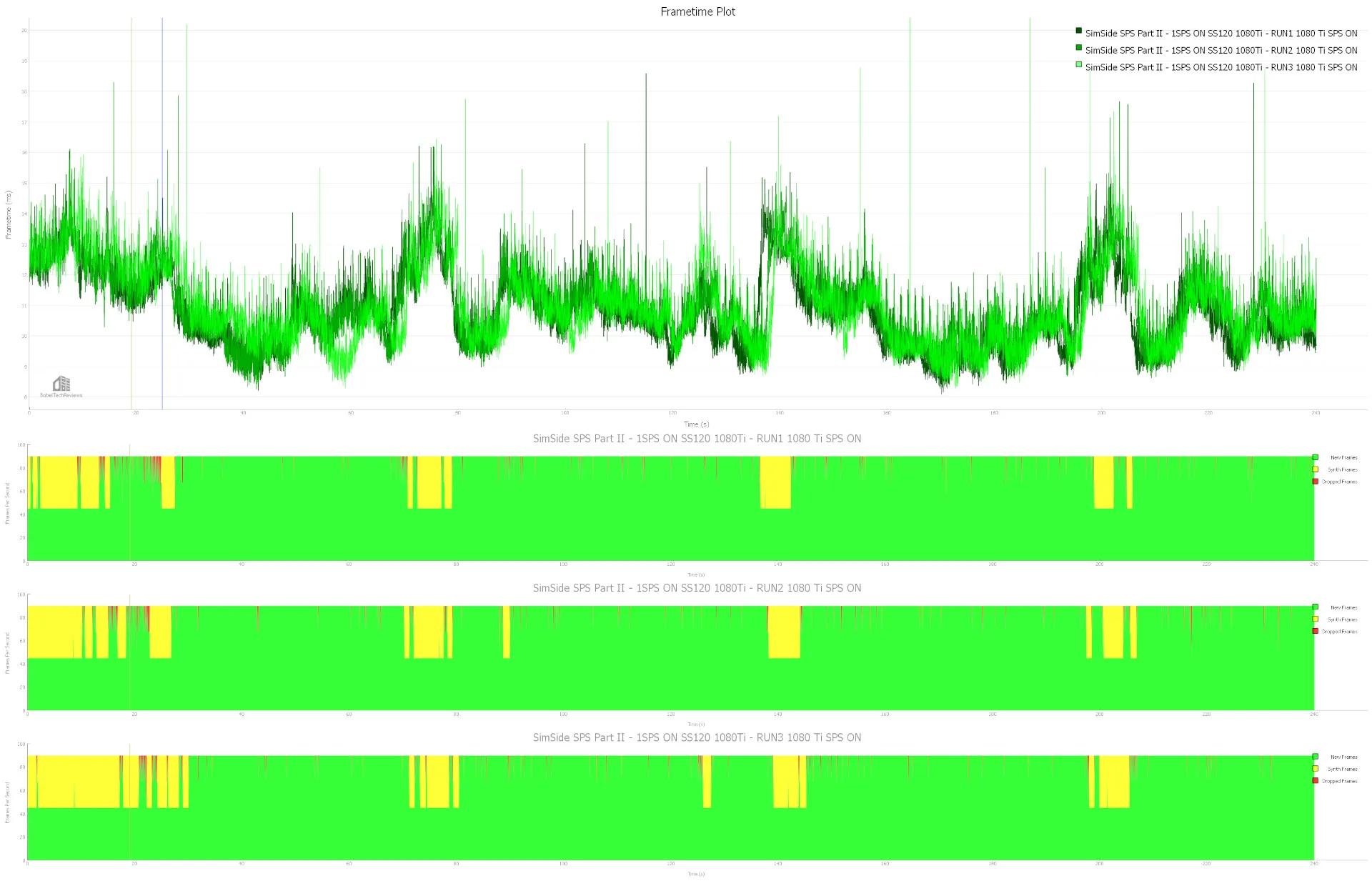

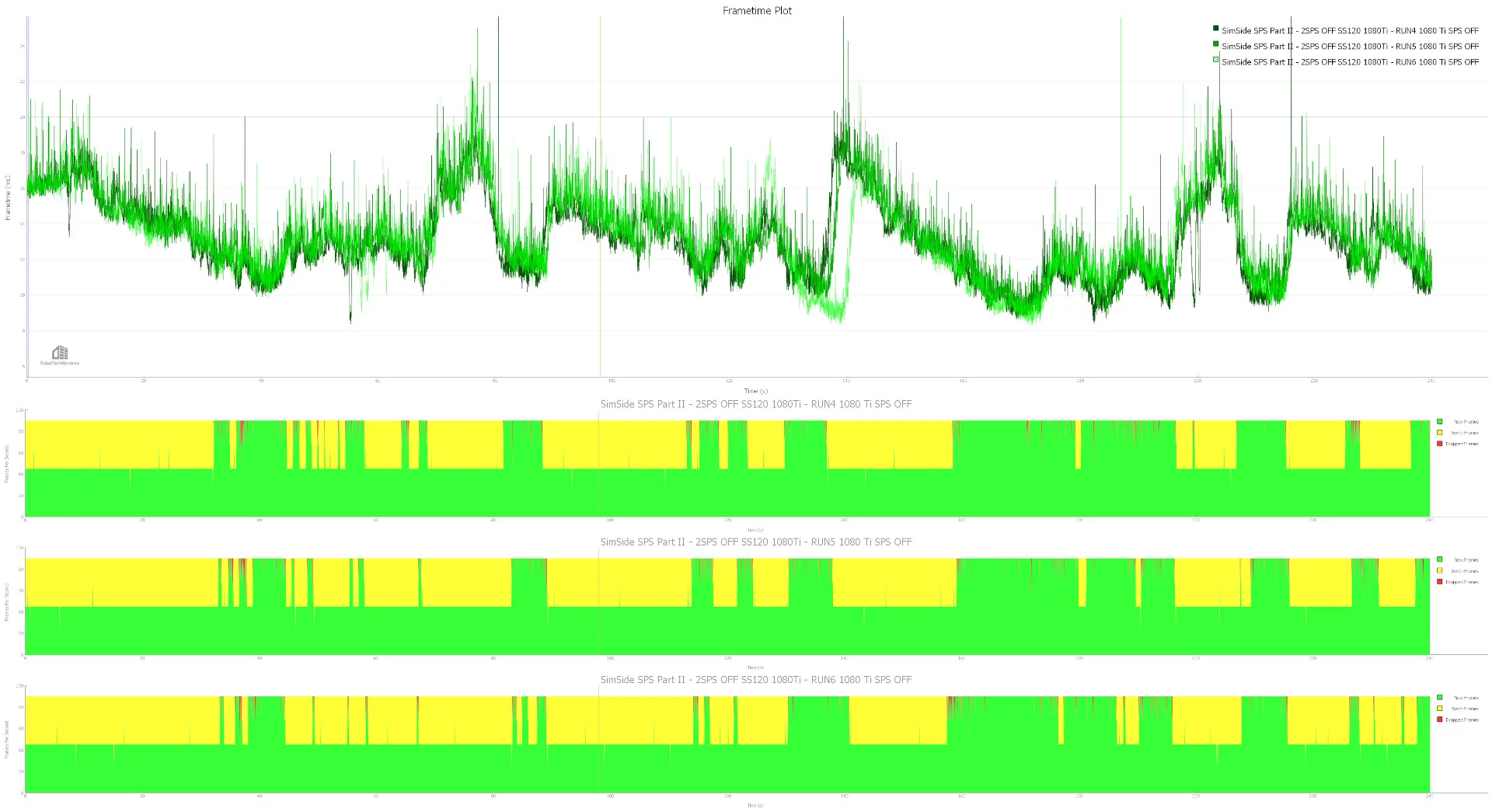

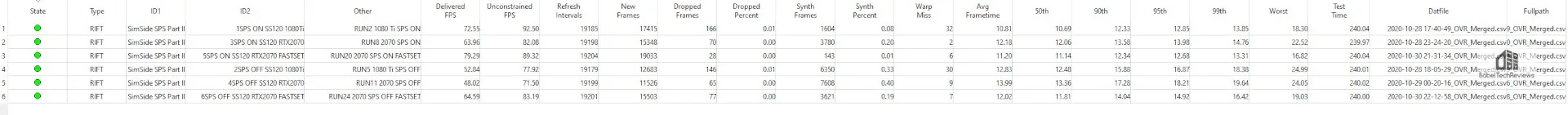

GTX 1080 Ti – SPS On, SS 120%, High Settings – Runs 1, 2, 3

- Average Delivered FPS 72.57

- Average Unconstrained FPS 92.82

- Average Frames Dropped 169.66

- Average Synth Frames 1,596

- Average Frametime 10.77ms

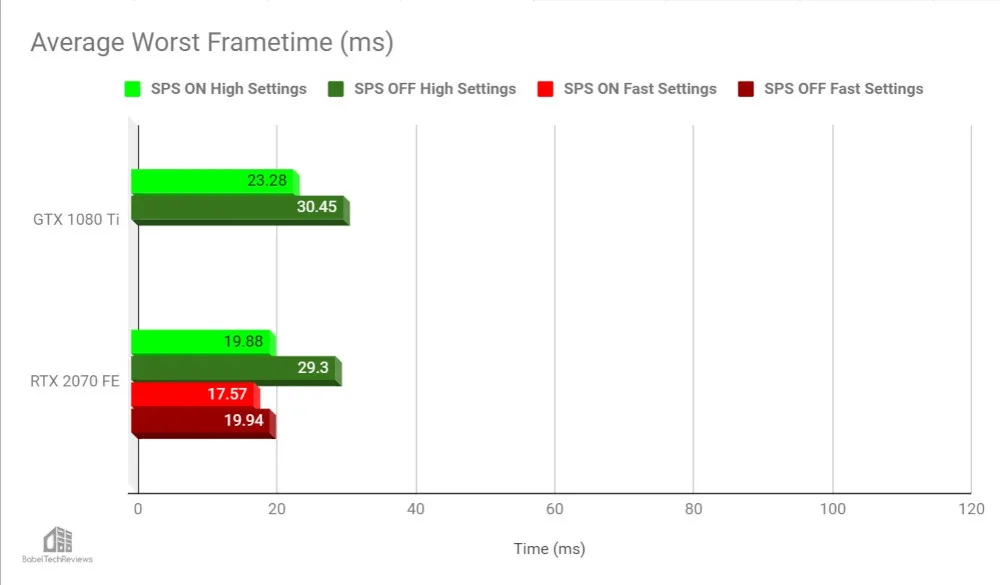

- Average Worst Frametime 23.28ms

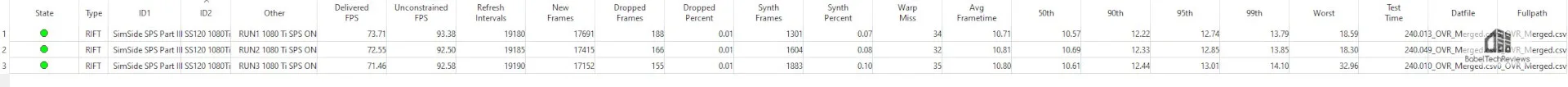

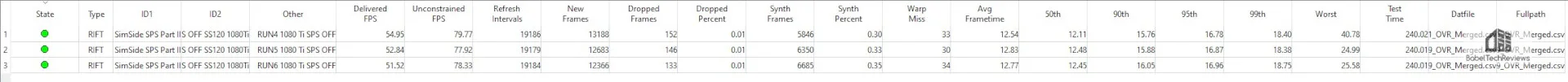

GTX 1080 Ti – SPS Off, SS 120%, High Settings – Runs 4, 5, 6

- Average Delivered FPS 53.10

- Average Unconstrained FPS 78.67

- Average Frames Dropped 143.66

- Average Synth Frames 6,293

- Average Frametime 12.71ms

- Average Worst Frametime 30.45ms

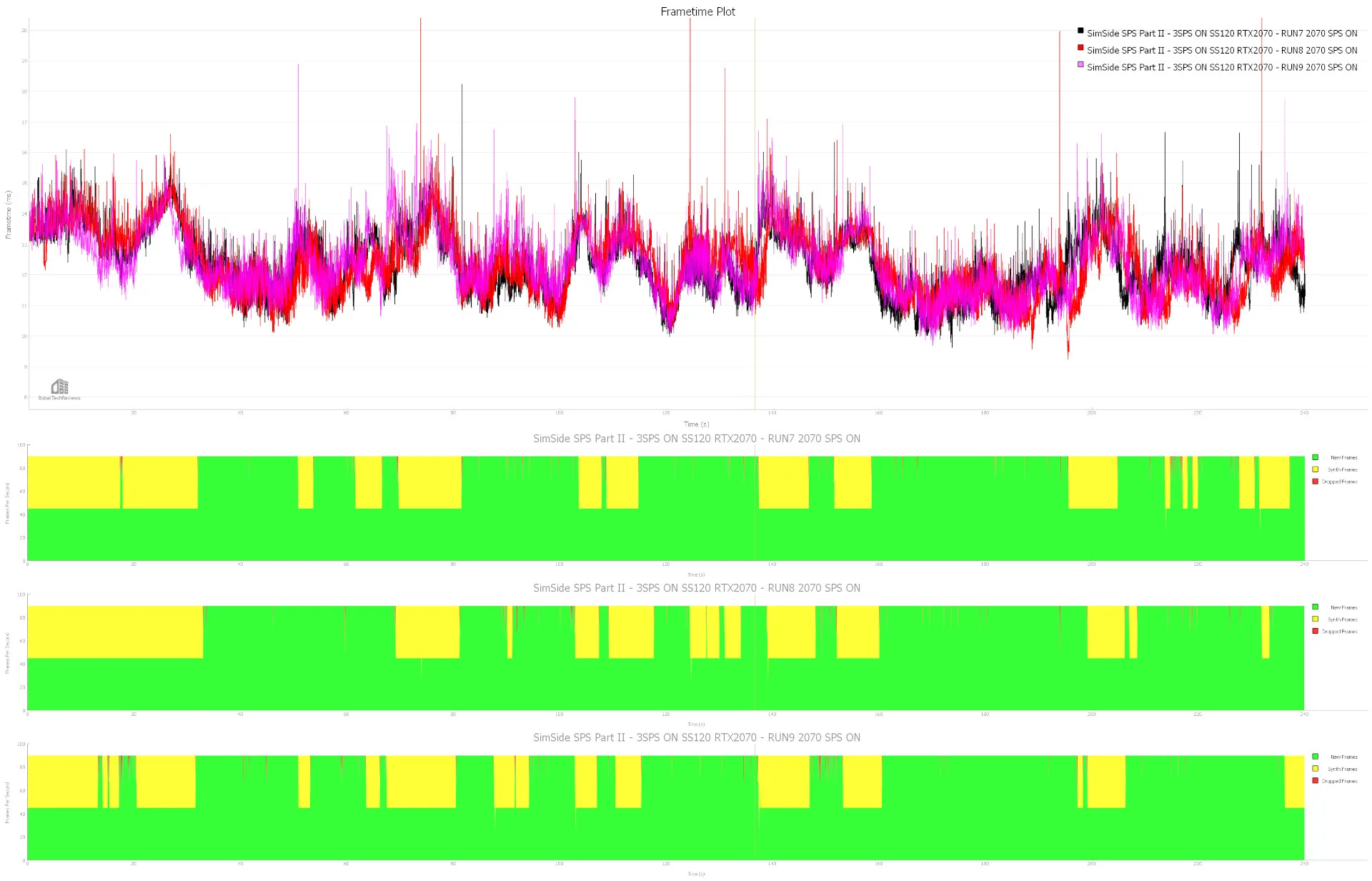

RTX 2070 FE – SPS On, SS 120%, High Settings – Runs 7, 8, 9

- Average Delivered FPS 63.99

- Average Unconstrained FPS 82.09

- Average Frames Dropped 76.33

- Average Synth Frames 3,766

- Average Frametime 12.18ms

- Average Worst Frametime 19.88ms

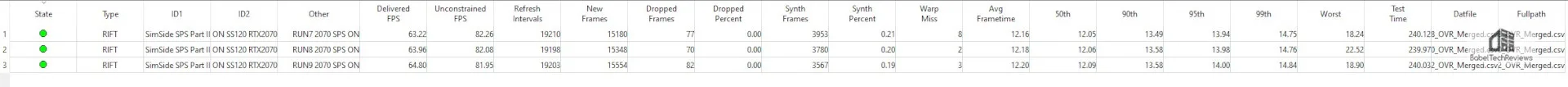

RTX 2070 FE – SPS Off, SS 120%, High Settings – Runs 10, 11, 12

- Average Delivered FPS 47.93

- Average Unconstrained FPS 71.56

- Average Frames Dropped 53.33

- Average Synth Frames 7,642

- Average Frametime 13.97ms

- Average Worst Frametime 29.30ms

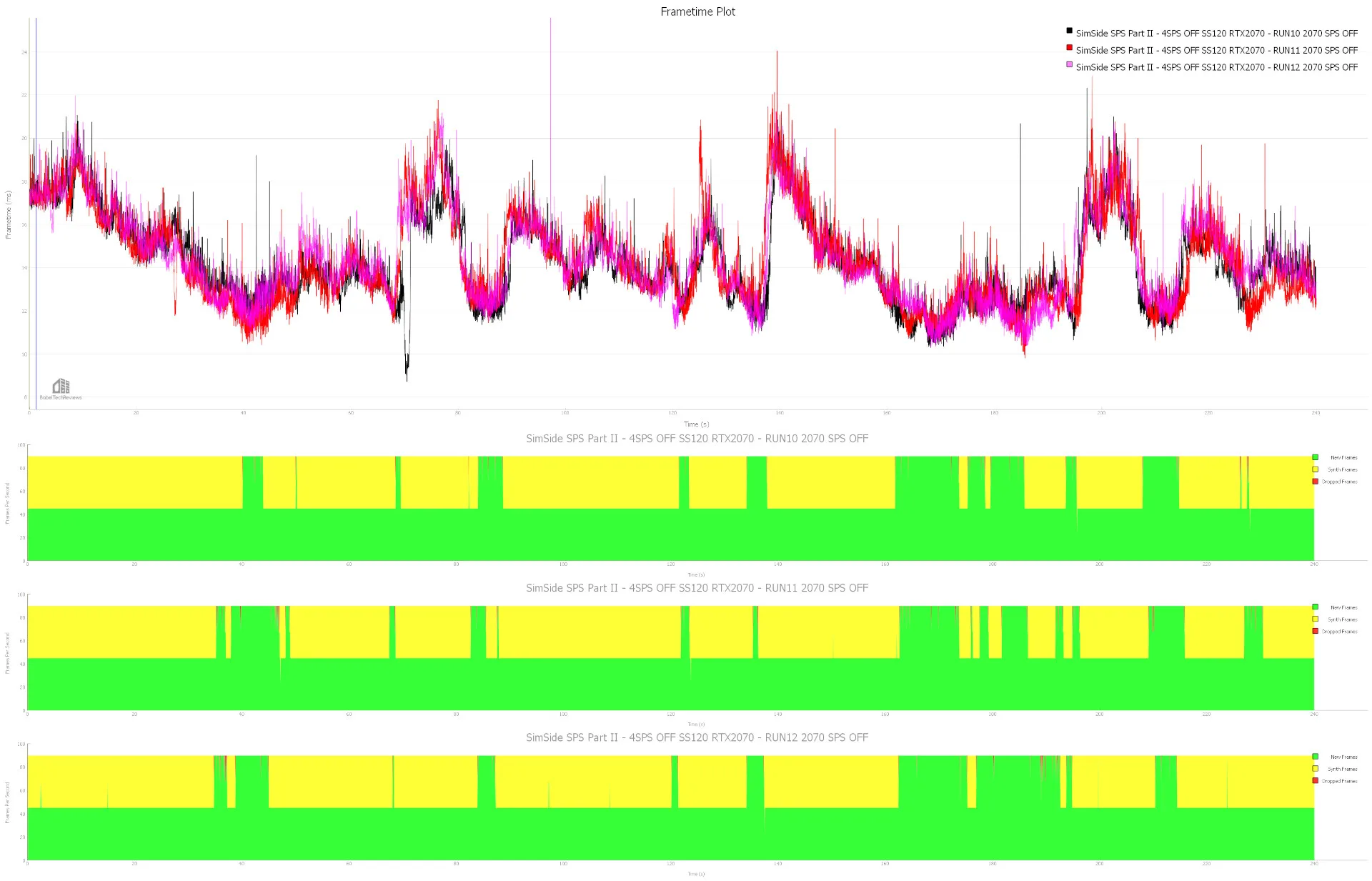

RTX 2070 FE – SPS On, SS 120%, Fast Settings – Runs 20, 21, 22

- Average Delivered FPS 79.27

- Average Unconstrained FPS 89.25

- Average Frames Dropped 29.66

- Average Synth Frames 145.33

- Average Frametime 11.20ms

- Average Worst Frametime 17.57ms

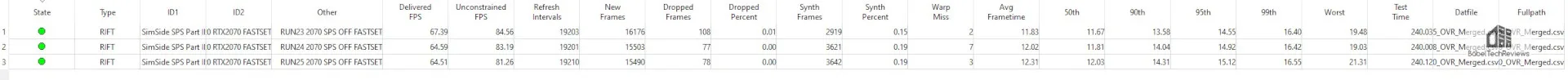

RTX 2070 FE – SPS Off, SS 120%, Fast Settings – Runs 23, 24, 25

- Average Delivered FPS 65.49

- Average Unconstrained FPS 83.003

- Average Frames Dropped 87.66

- Average Synth Frames 3,394

- Average Frametime 12.05ms

- Average Worst Frametime 19.94ms

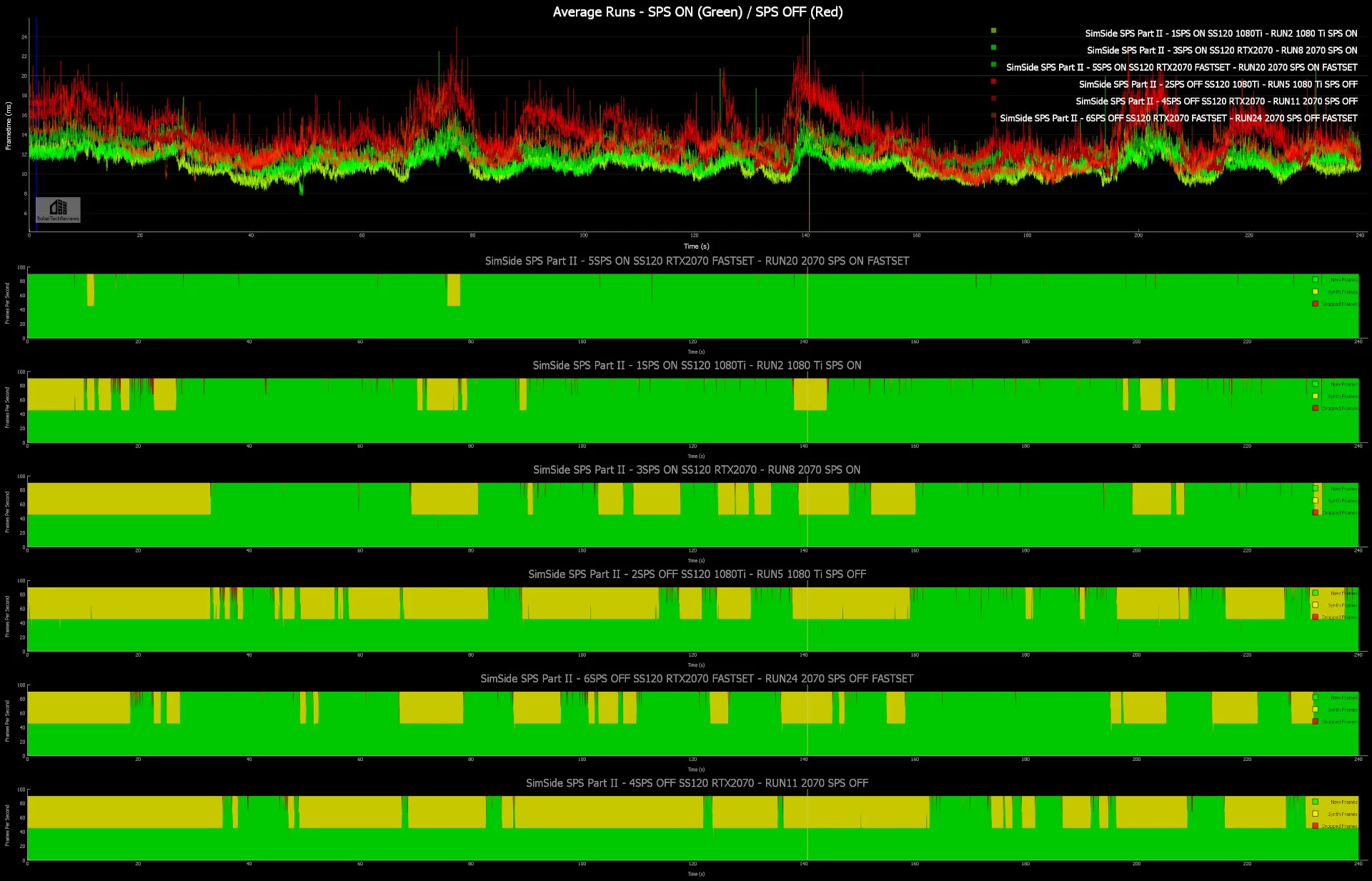

Average Per-Condition Runs

This last chart takes the most representative capture (the one closest to the average) of the three runs for each setting and system category. Colors were inverted to better spot trends, with SPS On in green and SPS Off in red.

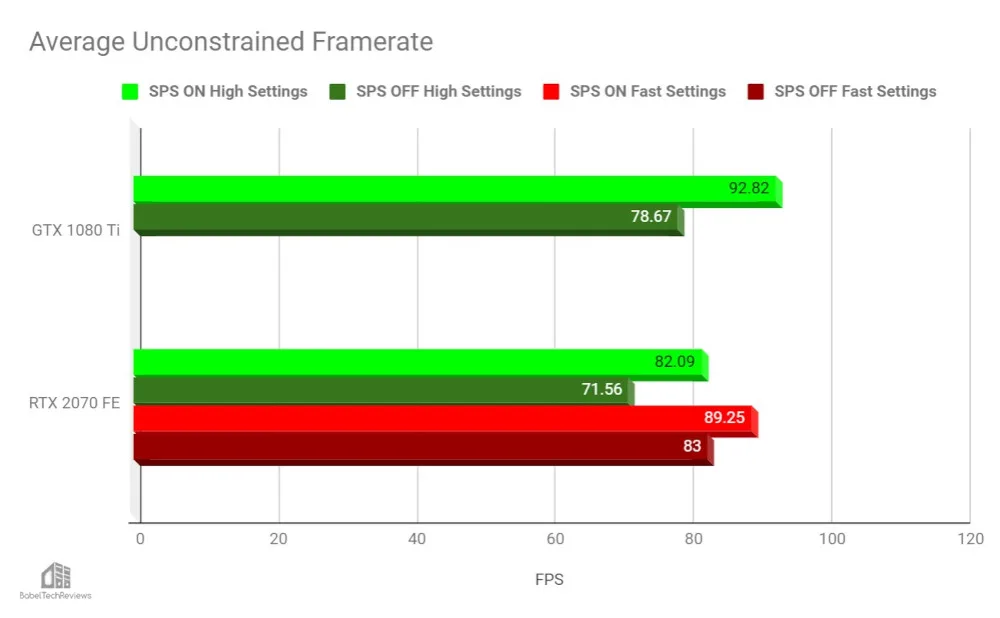

Unconstrained Framerate is the term for how many frames per second (FPS) the system is generating before sending them through the VR compositor. When enabled, SPS netted a 17.99% increase in average unconstrained framerate for the GTX 1080 Ti, while the RTX 2070 saw a 14.71% increase at High Settings, and 7.53% increase at Fast Settings.
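The uplift percentages can be reproduced from the averages reported above. A minimal sketch, where the dictionary layout and the function name are illustrative choices rather than anything from FCAT VR:

```python
# Percentage uplift of SPS On over SPS Off, using the reported averages.

# (SPS On, SPS Off) average unconstrained FPS per configuration
unconstrained_fps = {
    "GTX 1080 Ti, High Settings": (92.82, 78.67),
    "RTX 2070 FE, High Settings": (82.09, 71.56),
    "RTX 2070 FE, Fast Settings": (89.25, 83.003),
}


def uplift_pct(sps_on: float, sps_off: float) -> float:
    """Relative gain of SPS On over the SPS Off baseline, in percent."""
    return (sps_on - sps_off) / sps_off * 100.0


uplifts = {name: uplift_pct(on, off) for name, (on, off) in unconstrained_fps.items()}

for name, pct in uplifts.items():
    print(f"{name}: +{pct:.2f}%")
```

The same helper applies to any pair of SPS On and SPS Off averages, since the baseline in each case is the SPS Off figure.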

Next, and perhaps the most important metric for VR users, is Average Delivered Frames. In the case of the Oculus Rift S, the goal is to deliver an average at or near a constant 80 FPS so as to prevent the HMD from engaging Asynchronous Spacewarp and generating synthetic frames. VR is always at its best when it’s fast and smooth. Otherwise, when confronted with synthetic frames and stuttering, some may feel ‘VR sick’.

This time, under the new test conditions, average delivered frames for the GTX 1080 Ti showed a massive difference: With SPS engaged, the HMD saw an improvement of 36.67% in delivered frames. Additionally, the RTX 2070 benefitted with an improvement of 33.51% under High Settings, and 21.04% under Fast Settings.

Average Frametimes for both systems in all test parameters were within 2 ms of each other regardless of SPS mode, with SPS On the lower of the two in every case.

Lastly, SPS again displayed a consistent benefit in Average Worst Frametime (ms). With SPS Off, average worst frametime was 30.80% higher on the GTX 1080 Ti at High Settings, 47.38% higher on the RTX 2070 at High Settings, and 13.49% higher on the RTX 2070 at Fast Settings, for a mean penalty of roughly 30.6%. Put the other way, enabling SPS lowered average worst frametime by 23.55%, 32.15%, and 11.89% respectively. With gains found across all metrics, it is confirmed: NVIDIA’s SPS does aid VR rendering in high-load, geometrically complex situations.
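Worst-frametime percentages depend on which figure is used as the baseline, so it helps to compute both readings from the averages reported above. This is a sketch with illustrative names; the input values are the article's own.

```python
# Worst-frametime change expressed against both possible baselines.

# (SPS On, SPS Off) average worst frametime in milliseconds
worst_frametime_ms = {
    "GTX 1080 Ti, High Settings": (23.28, 30.45),
    "RTX 2070 FE, High Settings": (19.88, 29.30),
    "RTX 2070 FE, Fast Settings": (17.57, 19.94),
}


def increase_when_off_pct(on_ms: float, off_ms: float) -> float:
    """How much higher the SPS Off figure is, relative to SPS On."""
    return (off_ms - on_ms) / on_ms * 100.0


def reduction_when_on_pct(on_ms: float, off_ms: float) -> float:
    """How much lower the SPS On figure is, relative to SPS Off."""
    return (off_ms - on_ms) / off_ms * 100.0


increases = {k: increase_when_off_pct(*v) for k, v in worst_frametime_ms.items()}
reductions = {k: reduction_when_on_pct(*v) for k, v in worst_frametime_ms.items()}

for name in worst_frametime_ms:
    print(f"{name}: Off is {increases[name]:.2f}% higher, "
          f"On is {reductions[name]:.2f}% lower")
```

Measured against SPS On, the gaps are 30.80%, 47.38%, and 13.49%. Measured against SPS Off, the same gaps are 23.55%, 32.15%, and 11.89%.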

‘Box this lap…’

It has become clear that our first article’s test conditions did not take full advantage of all that iRacing could offer graphically. Under much more rigorous conditions, we have revisited the original question:

‘Does NVIDIA’s VRWorks™ Single Pass Stereo net a tangible benefit for iRacers wishing to maximize their VR performance?’

Yes. If you have a modern NVIDIA video card and the option to use SPS with a compatible VR HMD, check that box! Under high-load, geometrically complex situations, SPS functions to ensure a smoother VR experience. Given identical high-load conditions, video cards that do not enable SPS require more raw performance or lesser settings. With that said, the SPS impact is variable: across both articles, our tests showed anything from no meaningful gain to a boost of roughly 37% in delivered VR framerate. SPS is not a cure-all, and it will not make up for poorly configured systems or settings.

SPS is most useful when unconstrained framerates are above yet close to the HMD’s design spec. It prevents the system from running under the HMD’s design framerate and dropping into ASW or reprojection. In the final analysis, SPS is a relevant feature that adds indisputable value to NVIDIA GPUs if software allows its use.

I’d like to thank Ilias Kapouranis, the BTR staff, our readership, and the iRacing community for help with this investigation. Look out soon for more from the Sim Side, with a VR review of Reiza Studios’ Automobilista 2.

– Credit for TradingPaints Porsche Skin: Andy D. Oakley
