NVIDIA Introduces AI Interactive Graphics to Create Virtual 3D Worlds

NVIDIA is introducing new AI research that will enable developers to create interactive 3D environments for the first time. Their research will be unveiled at the NeurIPS AI research conference in Montreal, Canada today. This work was developed over the past eighteen months by a team of NVIDIA researchers led by Bryan Catanzaro, vice president of applied deep learning at NVIDIA.

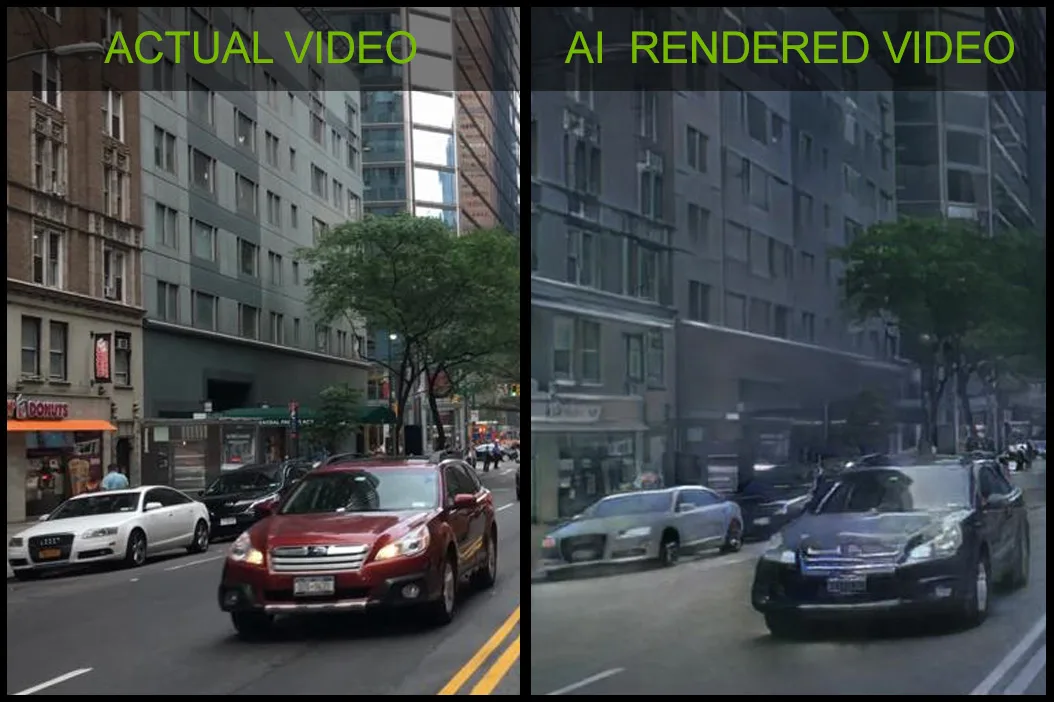

AI research has opened new ways to render computer graphics by using neural networks to use real world videos to render graphics. High-level representations of the real world using computer vision – a necessary part of self-driving autos – converts images into sketches which are analyzed and mapped by deep learning, and then converted into a game scene by a game engine.

Currently, every object in a virtual world needs to be modeled whereas NVIDIA’s research uses models trained from video to render objects instead. Their neural network uses deep learning to model the lighting, material, and dynamics of the real world, and since the scene is synthetically generated, it can be edited easily by the developer. NVIDIA is finding more uses for their specialized Turing RTX tensor cores although any GPU can do this which can make it easier for developers to create virtual game worlds. It is now possible to capture video of real objects or people to create brand new games scenes. Of course, this is still in the research stage, which means it may take years until it is fully realized in games.

NVIDIA is finding more uses for their specialized Turing RTX tensor cores although any GPU can do this which can make it easier for developers to create virtual game worlds. It is now possible to capture video of real objects or people to create brand new games scenes. Of course, this is still in the research stage, which means it may take years until it is fully realized in games.

One use of this research may even be used to upgrade the graphics of an old game. Or it may be possible by using deep learning algorithms to train models using real world objects for use in an existing game to make it easier for developers to add new game levels. It’s a hybrid approach that synthesizes videos using AI/deep learning

One simplified approach that may be used would be to create and add custom avatars of a gamer and of his friends for use inside of a game. It may be as simple as taking a picture of the gamer’s face and of his friends and uploading them to a server.

Here is a short video showing the first interactive AI-rendered virtual world. It was synthesized in about a week on a single DG-X1 using computer vision techniques drawn from self-driving car datasets used for training AI taken from the Internet. After labels are generated and the models are trained by deep learning, the Unreal engine draws a sketch in real time, and then the trained model renders it off-line using Turing cores at 25 FPS.

The dancing portion of the video uses post mapping to describe where the human articulations are by using computer vision techniques to generate labels and then train the model to generate it in real time. These same techniques may allow a player to upload a picture of himself to the server so that his custom avatar appears in the game as an accurate representation of himself.

All of this is available on GitHub now although it is mostly geared toward AI and machine learning now. Even though this research is still a proof of concept, we expect that some devs may want to experiment with it and we are hoping to see AI used in upcoming games.

Happy gaming!