Intelligence Assistants Are Augmented, Not Artificial

The other day, I went into the family room to get something and forgot what it was so I asked, “Alexa, what did I come in here for?”

Silence!

So, I whipped out my ever-present phone and said, “Siri, what did I want?”

Silence!

In other words, artificial intelligence is really dumb.

Sorry, Alexa; sorry, Siri.

If I hear/read one more announcement of someone who has fully implemented artificial intelligence into their whatever, I’m gonna get really ill!

After all, artificial — made or produced by human beings rather than occurring naturally, typically as a copy of something natural.

Dude/dudette, it isn’t really intelligence until it can do something … then do something different … then do something different again, learning from each action and experience.

Our goal should be augmented — to make larger; enlarge in size, number, strength, or extent; increase.

Yeah, I’ll buy into that.

Hey, Musk said he was really worried about AI being unleashed in the world.

Recently, he had a change of heart with Neuralink, which wirelessly plugs a computer into your brain.

Jeez, Elon doesn’t like any halfway measures!

Ask people if they know what AI is and most will describe it as ranging from their smartphone to Terminator.

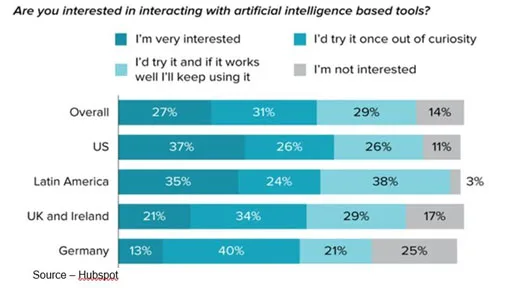

HubSpot recently reported that only 37 percent of the folks they interviewed said they had used an AI tool, but 63 percent of those who said they hadn’t were actually using one.

You know, voice search or online search, film/photo identification, driving enhancements, etc.

Right now, it’s pretty rudimentary.

It’ll do what it’s programmed to do; and that’s about it, unless there’s human interaction to make things happen differently.

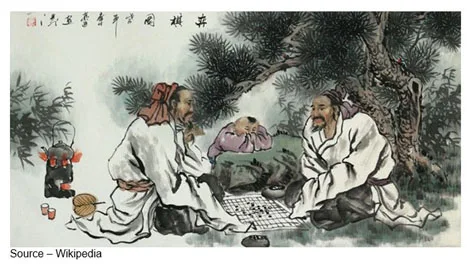

Take DeepMind, the AI research company that laid claim to developing software that beat the world’s top players of Go, a 2,500-year-old Asian game with rules that work … except when they don’t.

The program beat the champs by “violating” conventional wisdom and strategies that were developed over generations of experience.

Humans have this fallibility of not wanting to make a mistake, not wanting to risk losing a game and not wanting to experiment and show they *****ed up.

But augment the person’s intelligence (thousands of years of experience) with “sterile” thinking and suddenly, there’s a new, different way to win or accomplish something.

It’s a lot like a child accomplishing something that totally amazes parents and grandparents because they know she shouldn’t be able to do it that way but she succeeded. That’s why grandparents think their kid’s kid is a Brainiac.

The little kid simply didn’t know she was supposed to fail.

And, like the child, the AI program doesn’t know – and doesn’t care – if it succeeds or fails; it just does it!

Voilà! Success!

Imagine what this AI/human team could do in curing diseases, saving the environment and penetrating the universe we live in.

Freakin’ awesome!

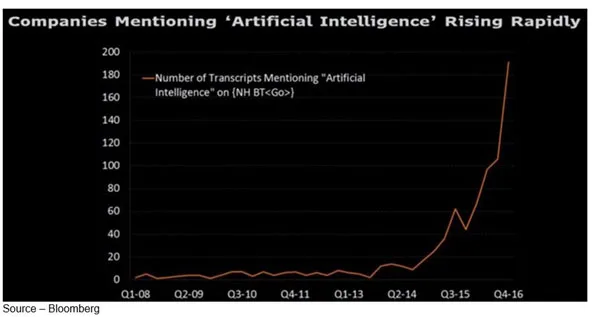

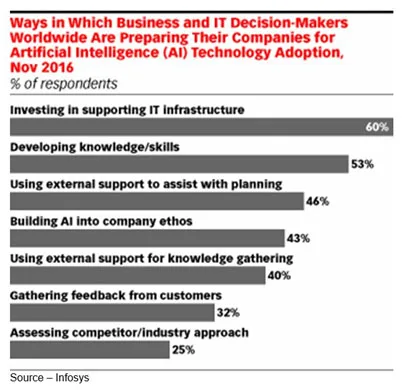

The greatest initial growth for AI has been in IT (Information Technology) because we’ve been gathering data from everything and everyone so fast that people can’t figure out what it means or what to do with it.

How does it impact/help day-to-day business activity?

A recent Tata Consultancy Services (TCS) survey found that 84 percent of executives feel that AI use is critical to their business and 50 percent said it will transform their business.

But only eight percent of the executives were using AI and that was in IT – detecting security intrusions, user issues and delivering automation.

If we’re going to have 50B IoT (Internet of Things) points, AI is going to have to work to help people in such activities as finance, strategic planning, corporate development, HR, marketing and customer service.

Working together, the AI/human team can accomplish things more quickly and less stressfully, so they both get to go home happy and early (hey, even 1s and 0s need to rest).

And while the AI/human team is relaxing, another AI solution is digging around in the data to figure out what new show, what new content they want to watch that they didn’t even know they wanted to watch.

To meet that insatiable demand for more and more audio/video content, indie filmmakers will be using their AI tools to see what people have an unrealized desire to watch.

And organizations like Comcast, Sky, Rogers, Netflix, Amazon Prime, Hotstar, Baidu and Youku will be serving up the refreshing content to keep you glued to your whatever screen.

The companies with the best AI/human teams at work and at home will grow and prosper.

In other words, it will be great … until it’s not.

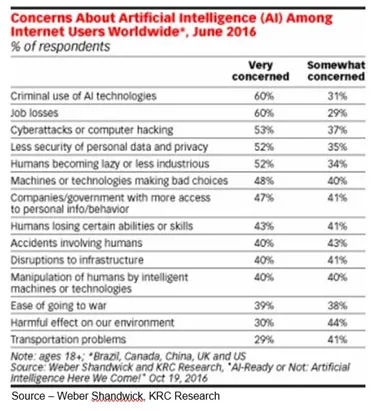

There are concerns about the AI/human team as well.

There are major concerns about security and privacy, but if you give Google or Amazon all of your information and share your personal life/data on Facebook, Twitter, Instagram, Weibo, WeChat, Africanzone, Blueworld, Partyflock, Baboo and thousands of other global sites, how concerned are you?

Really?

Yes, some jobs will be lost and new ones will emerge.

That happened when we were hunters/foragers, agrarians, pick-and-ax miners/lumberjacks and pencil pushers/assembly line workers.

Humankind has done a fairly decent job of adapting since we began walking on two legs, and especially in the 2,500 years since we developed Go; so why should the game suddenly be over?

Well, there is a concern.

A huge one … Programming Bias!

For example, there’s Tay, an innocuous Twitter bot designed for 18- to 24-year-olds to enjoy the world around them, which morphed into a foul-mouthed racist and Holocaust denier that said women should die and burn in hell.

Or Google Photos, which tagged African-American users as gorillas.

Or Siri not knowing how to respond to female health questions.

Or the video game industry’s continual issue of harassment of and discrimination against women.

AI/programs are nothing more than 1s and 0s strung together by people (sorry Alexa and Siri) who can be consciously or unconsciously racist, biased and prejudiced.

Right now, most of those code writers are not female, not African-American and not Latino, so we can expect the prejudices to arise.

Yes, the programmer(s) can panic, jump in and correct it … for now. But in a few years, we’ll have code writing code that is biased or does harm to a certain segment of society.

That’s gonna suck … Big Time!

The people who are developing, testing and rolling out AI programs that interact with humans should probably follow Google’s early motto … don’t be evil.

What the heck, they gave up using it once they found out they can print money simply by juggling ads around.

Code writers are going to have other code writers from everywhere testing, retesting, tweaking and refining the AI partner because problems will arise, even if they weren’t planned or intended.

When others look at their programs, the industry and individuals have to constantly keep in mind what Monica said: “I can’t accept this! There is no substitute for your own child!”

And I know my kids are perfect, but yours … you need to work on.

# # #