CORSAIR sent us the AI Workstation 300, a remarkably compact system that is smaller than a shoebox. Designed primarily for AI/machine learning, it is a powerful system using AMD’s Ryzen AI Max PRO Series processor. Although there is no discrete GPU, a pool of 128GB of shared memory allows up to 96GB to be allocated to the integrated GPU.

For comparison, we benchmarked the even smaller HP Z2 Mini G1a, an ISV-certified workstation with almost identical hardware. We also benchmarked our own high-end consumer PC featuring a Ryzen 9 9950X3D, an RTX 5090, and 192 GB of CORSAIR DDR5 memory at 5800 MT/s. We tested all three systems extensively to see how this seemingly uneven matchup would play out.

Before continuing, it is important to clarify that our PC and the CORSAIR AI Workstation 300 are not workstations in the same sense as the HP Z2 Mini G1a. Both PCs can run most workstation-class tasks but lack ISV certifications. The CORSAIR PC is indeed an AI-first workstation. Both are Creator PCs, ideal for game developers, media and entertainment professionals, and digital creators engaged in 3D rendering, video editing, and graphic design, and especially for running even very large language model (LLM) AI workloads.

In terms of pricing, the CORSAIR AI Workstation 300 as reviewed has an MSRP of $2,499.99. The similarly equipped HP Z2 Mini G1a lists for $4,999.99 but can be purchased at B&H Photo for $3,199.00, and our desktop PC can be self-built for about $6,500.

Since our last review, in which we compared the HP Z2 Mini G1a with our custom PC, we swapped the ASRock Taichi motherboard for an MSI MPG X870E Carbon board and were able to raise our 4×48 GB CORSAIR DDR5 kit from 4400 MT/s to 5800 MT/s (CL30-36-36-76). We also updated the HP Z2 Mini G1a BIOS and all of our benchmarks, and added new ones.

Test Configuration

CORSAIR AI Workstation 300

Supplied by CORSAIR

| CORSAIR AI Workstation 300 | Configuration |
|---|---|
| Processor: | AMD Ryzen AI Max+ PRO 395 (Zen 5, 120 W, 3.0/5.1 GHz base/boost, 64 MB L3 cache, 16 cores / 32 threads) |
| Graphics: | AMD Radeon 8060S (20 × RDNA 3.5 workgroup processors, up to 2.9 GHz) |
| NPU: | AMD Ryzen AI XDNA 2 (up to 50 TOPS) |
| Memory: | 8 × 16 GB Micron LPDDR5X-8000 (128 GB total, effective 8000 MT/s, soldered to motherboard) |
| Storage: | 2 × 2 TB PCIe 4.0 NVMe SSDs (Gen 4 × 4) |
| Front Ports: | 2x USB 3.2 Gen 2 Type-A, 1x USB 4.0 Type-C, headphone/mic combo jack, SD 4.0 card reader |
| Rear Ports: | 2x USB 2.0 Type-A, 1x USB 3.2 Type-A, 1x USB 4.0 Type-C |
| Connectivity: | 2.5G Ethernet, Wi-Fi 6E, Bluetooth 5.2 |
| Audio: | 3.5 mm headset jack (mic/headphone) |
| Power: | 300 W Flex ATX power supply |
| Display: | BenQ EW3270U 32″ 4K UHD 60 Hz FreeSync |

Z2 Mini G1a

Supplied by HP

| Z2 Mini G1a | Configuration |
|---|---|
| Processor: | AMD Ryzen AI Max+ PRO 395 (Zen 5, 120 W, 3.0/5.1 GHz base/boost, 64 MB L3 cache, 16 cores / 32 threads) |
| Graphics: | AMD Radeon 8060S (20 × RDNA 3.5 workgroup processors, up to 2.9 GHz) |
| NPU: | AMD Ryzen AI XDNA 2 (up to 50 TOPS) |
| Memory: | 8 × 16 GB Micron LPDDR5X-8533 ECC (128 GB total, effective 8000 MT/s, soldered to motherboard) |
| Storage: | 2 × Kioxia XG8 1 TB PCIe 4.0 NVMe SSDs (Gen 4 × 4) |
| Front/Top Ports: | 1 × USB-A 10 Gbps, 1 × USB-C 10 Gbps |
| Rear Ports: | 2 × Thunderbolt 4, 1 × USB-A 10 Gbps, 2 × USB-A 2.0 (480 Mbps), 2 × Mini DP 2.1, 1 × RJ-45 Ethernet, 2 × Flex I/O options (dual USB-A 10 Gbps / dual USB-C 10 Gbps / 1, 2.5, and 10 GbE plus 1 GbE fiber) |
| Connectivity: | MediaTek MT7925 Wi-Fi 7 + Bluetooth 5.4 |
| Audio: | 3.5 mm headset jack |
| Power: | HP 300 W PSU (internal) |
| Display: | BenQ EW3270U 32″ 4K UHD 60 Hz FreeSync |

Custom PC

Supplied by AMD / ASRock / CORSAIR / NVIDIA

| Custom PC | Configuration |
|---|---|
| Processor: | AMD Ryzen 9 9950X3D |
| Motherboard: | MSI MPG X870E CARBON WIFI (BIOS v7E49v1A70, PBO −0.3 mV, temperature limit 85 °C, SVM disabled) |
| Memory: | 4 × 48 GB CORSAIR Vengeance DDR5-6000 @ 5800 MT/s (CL30-36-36-76) |
| Storage: | Lexar NM1090 Pro 2 TB PCIe 5.0 SSD (C:); TEAMGROUP MP44 4 TB PCIe 4.0 SSD (storage) |
| GPU: | NVIDIA RTX 5090 Founders Edition |
| Cooling: | DeepCool Castle 360EX AIO |
| PSU: | Super Flower Leadex SE 1200 W 80+ Platinum |
| Case: | CORSAIR 5000D |
| Display: | LG C1 48″ 4K 120 Hz |

Software Stack

- Windows 11 Professional 64-bit 24H2

- NVIDIA Studio driver 581.29 for applications (577.00 for PCMark 10 only); 581.29 Game Ready Driver (GRD) for games and 3DMark. All drivers, games, and apps were updated.

- UL 3DMark Professional, courtesy of UL

- UL Procyon Suite, courtesy of UL

- UL PCMark 10 Professional, courtesy of UL

- SPEC Workstation 4.0

- SPECviewperf 15

- PugetBench for DaVinci Resolve, courtesy of Puget Systems

- DaVinci Resolve, courtesy of Blackmagic Design

- Blackmagic Disk Speed Test

- AIDA64 Engineer, courtesy of FinalWire

- 7-Zip

- CrystalDiskMark

- Blender Benchmark 4.5

- Cinebench 2024

- LuxMark v3.1 & 4.0

- CPU-Z Benchmark

- Geekbench CPU

- Geekbench AI

- HWiNFO64

- LM Studio 0.3.31 – OpenAI gpt-oss 20B & 120B

- MLPerf Client 1.5 – Phi 3.5 Mini Instruct / Phi 4 Reasoning 14B / Llama 3.1 8B Instruct / Llama 2 7B Chat

- MaxxMem2

- PassMark PerformanceTest

- Novabench

- Vovsoft RAM Benchmark

- V-Ray CPU Benchmark

- y-cruncher

- Five games – Cyberpunk 2077, Indiana Jones and the Great Circle, Total War: Pharaoh Dynasties & Warhammer III, and Sid Meier’s Civilization VII AI benchmark.

Let’s look closely at the CORSAIR AI Workstation 300.

Unboxing and First Impressions

CORSAIR includes a two-year warranty with the AI Workstation 300, which arrives ready to use with a separate power cord. Just add a keyboard, a mouse, and a DisplayPort or HDMI cable, then plug it into your monitor.

The system ships with Windows 11 Professional preinstalled and ready to go. There is minimal preinstalled software, but a linked online AI center lets you select your use cases based on AI recommendations and helps you download the models. Jan.AI comes preinstalled, with access to dozens of free, compatible, open-source LLMs from Hugging Face that run completely offline; many offer privacy, uncensored prompting, and even NSFW options.

In addition, AMUSE.AI is available as a free AI art and video generation tool, developed by TensorStack and optimized for AMD systems. It primarily uses Stable Diffusion so you can generate and work with images from text prompts without needing an internet connection, account, or subscription.

Llama 4 Scout is a Mixture-of-Experts (MoE) model with 17 billion active parameters, 16 experts, and 109 billion parameters in total. It can process text and images and produce multilingual text or code output. All of these readily available models run through a GUI, so there is no need to learn the command line (CLI); you can start working with local AI immediately.

The CORSAIR AI Workstation 300’s rear HDMI, DisplayPort, and Type-C ports can drive up to three 4K displays simultaneously (the Type-C port via an adapter or a Type-C to HDMI/DisplayPort cable), providing strong multi-monitor flexibility despite its compact form.

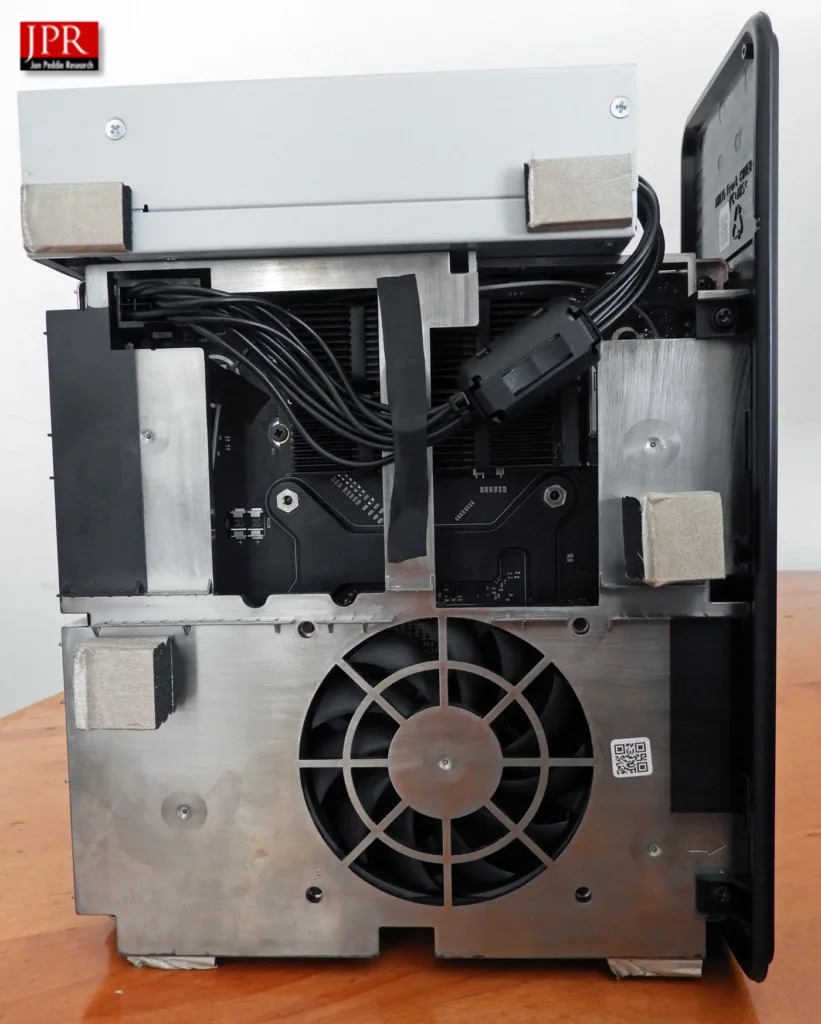

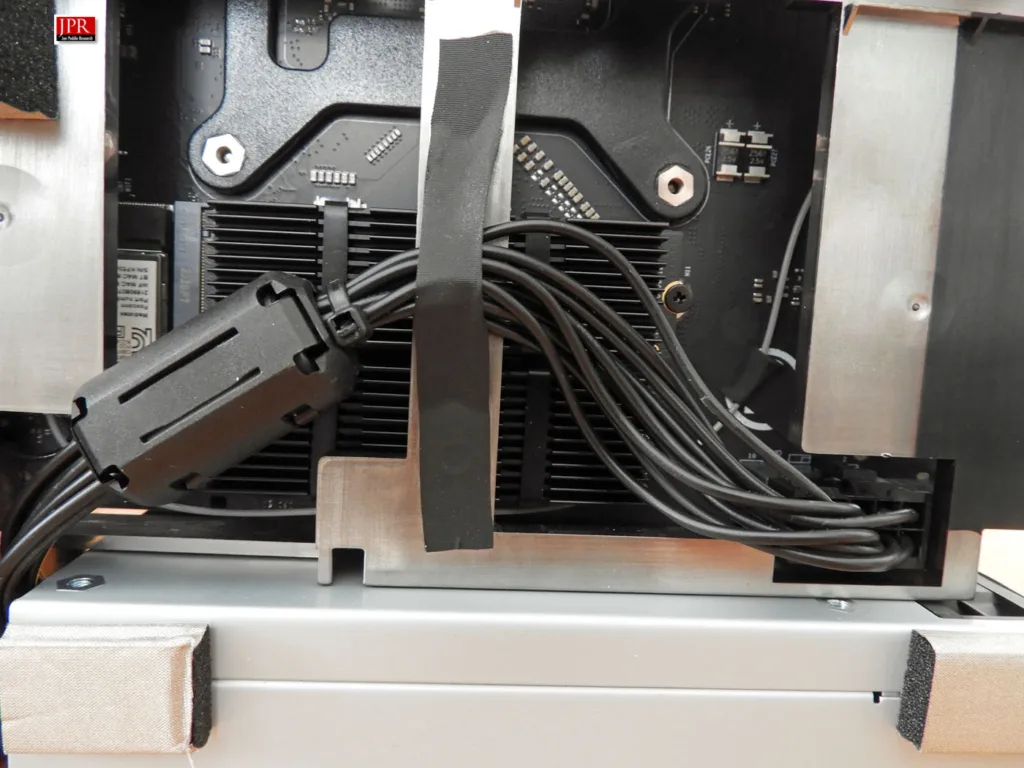

A key highlight: there’s no external power brick. The 300W PSU is built directly into the chassis, which opens by removing nine screws for maintenance or for switching out SSDs.

The two 2 TB SSDs sit under the SSD heatsinks and are easily replaced.

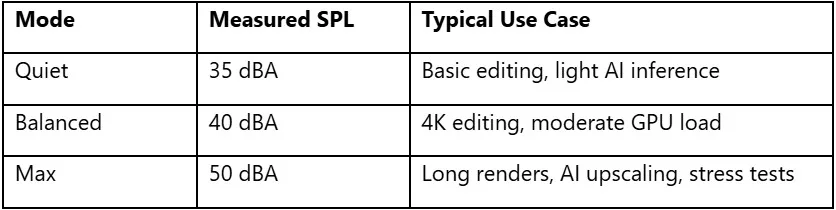

Two squirrel-cage fans direct airflow to cool the internals. Under normal loads, the CORSAIR AI Workstation 300 operates almost silently. At full load, the fans are audible with the system sitting on the desktop, though not nearly as loud as the HP Mini’s.

By default, the system runs in maximum performance mode, but fan RPM can be manually turned down for quieter operation with minimal performance loss, simply by pressing the white button on the front of the PC.

These are CORSAIR’s internal measurements, which roughly matched our own SPL readings taken with a dB meter.

The AI Workstation 300 features a sleek, professional design and a robust case. The top button is for power, while the white button changes the fan speed setting, which is shown on the display as the button is pressed.

CORSAIR thoughtfully included an SD 4.0 card reader, a headphone/mic jack, 2x USB 3.2 Gen 2 Type-A ports, and 1x USB 4.0 Type-C port on the front panel.

The back of the unit has nine easily removable screws. After removing the back panel, the internal chassis slides out of the case for access to the SSDs. The rear panel accepts the power cord, 2x USB 3.2 Gen 2 Type-A, 1x USB 4.0 Type-C, and 2.5G Ethernet ports. Wireless connectivity is handled by Wi-Fi 6E and Bluetooth 5.2.

It looks good from any angle and the case is well-ventilated for cooling.

A label on the bottom provides the serial number, model identification, and regulatory information, while the rubber feet protect your desktop.

The size comparison image shows the CORSAIR AI Workstation 300 next to the HP Mini and a 32″ 4K display. The CORSAIR AI Workstation 300 is about 3 inches taller, half an inch wider, and slightly less deep than the HP workstation.

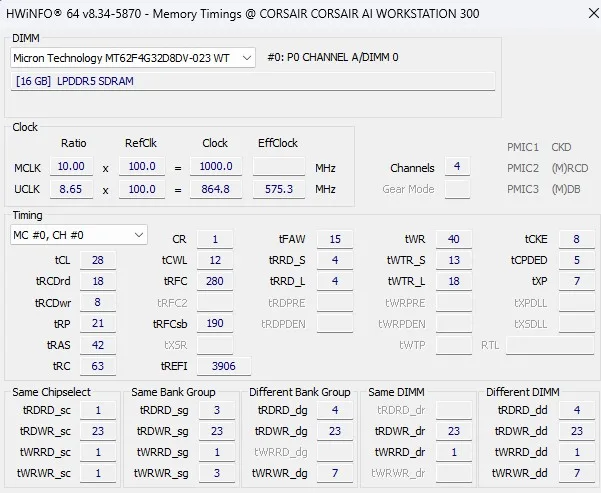

HWiNFO64

Let us take a closer look at the CORSAIR AI Workstation 300’s system specifications and performance metrics using HWiNFO64. The HWiNFO64 system summary provides a detailed breakdown of the hardware configuration.

The memory clock speed registers correctly at 4000 MHz, and as it is DDR (Double Data Rate), data transfers occur twice per clock cycle—yielding an effective rate of 8000 MT/s (megatransfers per second). The installed LPDDR5X memory maintains strong bandwidth with modest latency, as shown below.
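The clock-to-bandwidth arithmetic can be sketched in a few lines of Python. This is a theoretical-peak estimate only, and it rests on assumptions not stated in the review: a 256-bit LPDDR5X interface on the Ryzen AI Max+ PRO 395 (AMD’s published bus width) and a standard dual-channel, 128-bit DDR5 configuration on the desktop. The helper names are our own, and measured AIDA64 results will land below these ceilings.

```python
def effective_rate_mts(memory_clock_mhz: float) -> float:
    """DDR memory moves data on both clock edges: two transfers per cycle."""
    return memory_clock_mhz * 2


def peak_bandwidth_gbs(rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak in GB/s (decimal): transfers per second x bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return rate_mts * bytes_per_transfer / 1000  # MB/s -> GB/s


# Assumed bus widths: 256-bit LPDDR5X on the APU, 2 x 64-bit DDR5 channels on AM5
apu_rate = effective_rate_mts(4000)             # 8000 MT/s
apu_peak = peak_bandwidth_gbs(apu_rate, 256)    # 256.0 GB/s
desktop_peak = peak_bandwidth_gbs(5800, 128)    # about 92.8 GB/s

if __name__ == "__main__":
    print(f"APU: {apu_rate:.0f} MT/s, {apu_peak:.1f} GB/s peak")
    print(f"Desktop DDR5-5800: {desktop_peak:.1f} GB/s peak")
```

On paper, the wider bus gives the APU well over twice the peak of dual-channel DDR5-5800; how much of that the CPU cores can actually reach is what the AIDA64 results capture.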

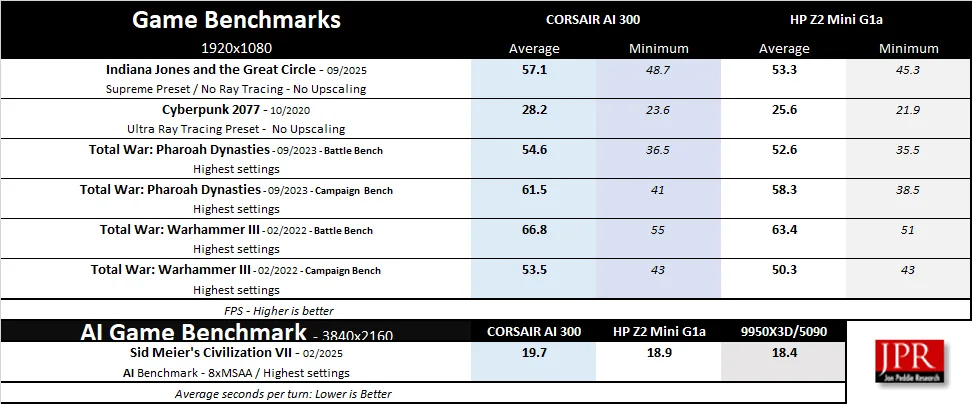

Gaming Performance Summary Chart & 3DMark Physics

Although gaming is not the AI Workstation 300’s primary focus, we benchmarked five modern, CPU- and GPU-intensive titles, plus the 3DMark physics test, to illustrate its gaming capabilities. All tests were run at 1920×1080 except the Civilization VII AI benchmark, which was run at 3840×2160 to compare average turn times against the HP Mini and the RTX 5090-equipped PC.

The CORSAIR AI Workstation 300 is foremost an AI workstation, yet its RDNA 3.5 integrated graphics are among the most capable on the market. It can handle modern games at 1080p, and even 1440p with upscaling, though ultra ray tracing remains out of reach. It is generally faster than the HP Mini, although it trailed both other PCs in the Civilization VII AI benchmark.

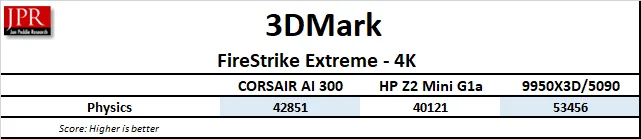

3DMark Physics

As expected, the Ryzen 9 9950X3D outperformed the Ryzen AI Max+ PRO 395 APUs in both compact PCs in this synthetic workload, even though those APUs also feature 16 cores and 32 threads. However, the CORSAIR AI Workstation 300 outperformed the HP Z2 Mini G1a.

Next, we turn to non-gaming synthetic benchmarks designed to evaluate memory bandwidth, storage performance, GPU compute, and CPU efficiency across our test systems.

Synthetic Benchmark Analysis

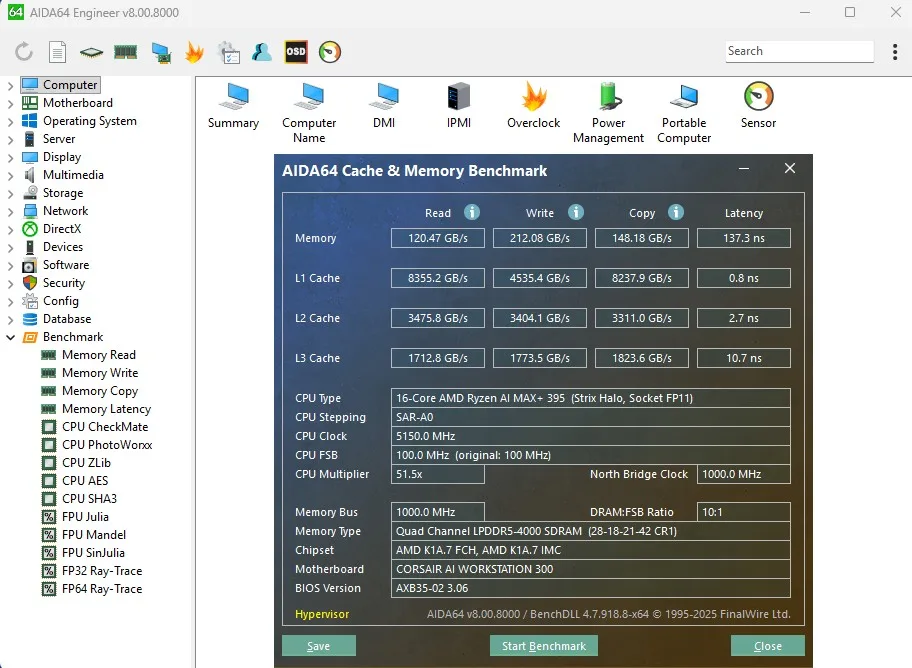

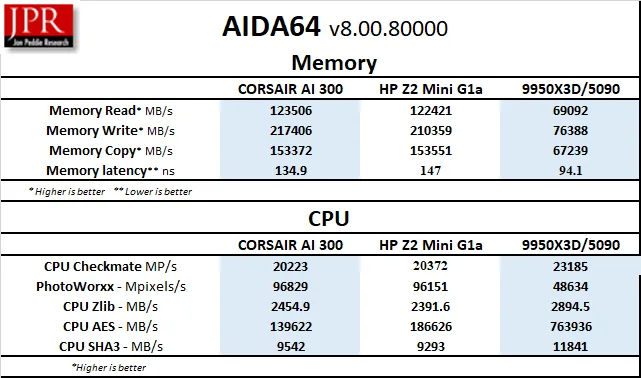

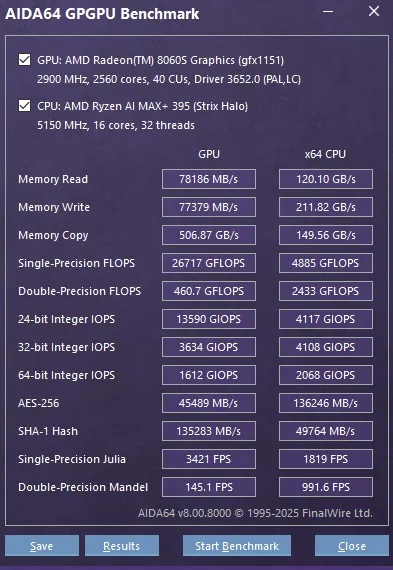

AIDA64 Engineer v8.00.80000

AIDA64 is an important industry tool for benchmarkers. Its memory bandwidth benchmarks (Memory Read, Memory Write, and Memory Copy) measure the maximum available memory data transfer bandwidth. AIDA64’s benchmark routines are written in assembly language and are well optimized. We use the full Engineer edition of AIDA64, courtesy of FinalWire. AIDA64 is free to try for 30 days.

The Memory Latency test in AIDA64 measures the delay between issuing a read command and the data arriving in the CPU’s integer registers. It also evaluates read/write/copy bandwidth and cache performance, providing a full view of system memory behavior.

Below are the AIDA64 Cache & Memory benchmark results for the CORSAIR AI Workstation 300.

The chart below compares all three PCs, emphasizing CPU benchmarks sensitive to memory speed and latency.

The CORSAIR AI Workstation 300’s LPDDR5X-8000 memory delivers exceptional bandwidth, trading blows with the HP Mini. Although its latency is higher, the Ryzen AI Max+ PRO 395 also leads the Ryzen 9 9950X3D in several benchmarks.

Below are the AI Workstation 300’s AIDA64 GPGPU results, highlighting compute performance across CPU and integrated GPU workloads.

Both the CPU and integrated GPU show strong compute throughput, reaffirming the CORSAIR AI Workstation 300’s efficiency despite its compact design.

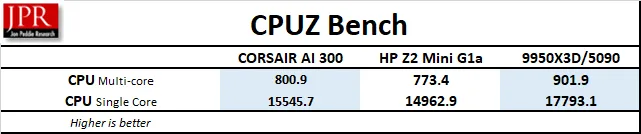

CPU-Z Benchmark

The CPU-Z benchmark provides a quick synthetic test that measures both single-threaded and multi-threaded CPU performance, allowing direct comparison across processor generations.

Although CPU-Z is included here primarily for context, the AI Workstation 300 performed well, staying ahead of the HP Mini and remaining competitive with the higher-clocked Ryzen 9 9950X3D.

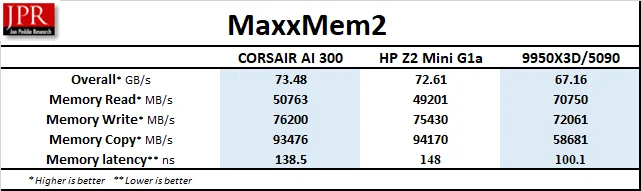

MaxxMem² v3.00.24.109

MaxxMem² is a lightweight, free memory benchmarking utility used to measure bandwidth and latency performance.

The results aligned closely with AIDA64’s findings, validating the AI Workstation 300’s fast memory throughput and efficient cache design.

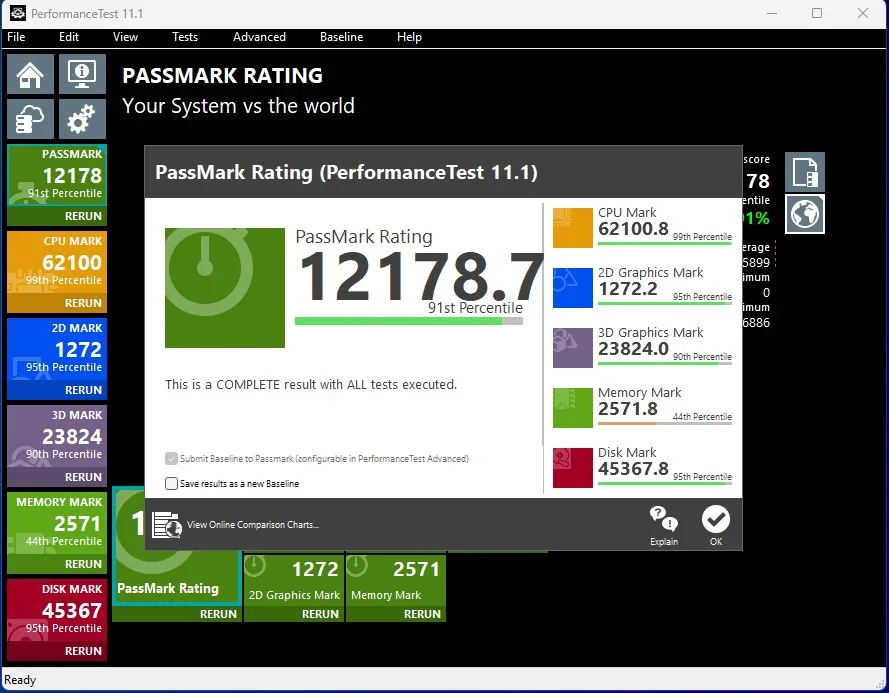

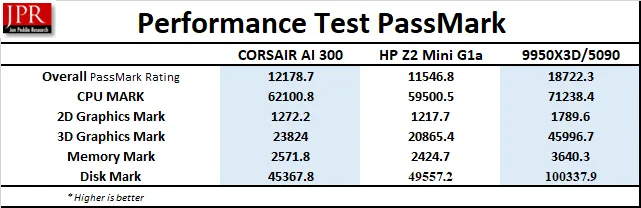

PassMark Software’s PerformanceTest

PassMark Software’s PerformanceTest tool evaluates system-wide performance through composite tests including Memory Mark, Graphics Mark, and Disk Mark.

Although the CORSAIR AI Workstation 300 scores higher across the board than the HP Z2 Mini G1a, it cannot compete with the RTX 5090-equipped desktop, which also has a Gen 5 SSD. Beyond the overall score, we also wanted to compare SSD performance between our desktop and the two compact PCs.

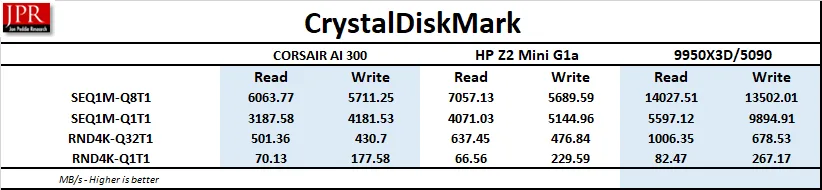

CrystalDiskMark

CrystalDiskMark measures sequential and random read/write throughput of installed drives, making it an industry-standard tool for SSD benchmarking.

The desktop’s Lexar Gen 5 SSD (rated 14,000/13,500 MB/s) is clearly the fastest, while the HP Mini’s Kioxia XG8 SSDs show solid Gen 4 ×4 NVMe performance, although their 2× 1 TB capacity is very limiting for large workloads. Fortunately, the AI Workstation 300 includes 2× 2 TB Gen 4 ×4 SSDs, although they are somewhat slower.
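To see why the Gen 5 drive can pull so far ahead, here is a rough Python sketch of the theoretical one-direction bandwidth of a PCIe ×4 link. It assumes the standard per-lane rates of 16 GT/s (PCIe 4.0) and 32 GT/s (PCIe 5.0) with 128b/130b encoding, and it ignores packet and protocol overhead, so real drives top out somewhat lower. The function name is our own.

```python
def pcie_link_gbs(gigatransfers_per_s: float, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in GB/s (decimal).

    Each transfer carries one bit per lane; PCIe 3.0 and later use
    128b/130b encoding, so 128 of every 130 bits are payload.
    Protocol overhead (packet headers, flow control) is ignored.
    """
    encoding_efficiency = 128 / 130
    gigabits_per_s = gigatransfers_per_s * encoding_efficiency * lanes
    return gigabits_per_s / 8


gen4_x4 = pcie_link_gbs(16, 4)  # roughly 7.9 GB/s
gen5_x4 = pcie_link_gbs(32, 4)  # roughly 15.8 GB/s

if __name__ == "__main__":
    print(f"PCIe 4.0 x4: {gen4_x4:.2f} GB/s")
    print(f"PCIe 5.0 x4: {gen5_x4:.2f} GB/s")
```

Under these assumptions, a 14,000 MB/s rating fits under the Gen 5 ×4 ceiling but could never be reached on a Gen 4 ×4 link, which caps out below 8 GB/s.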

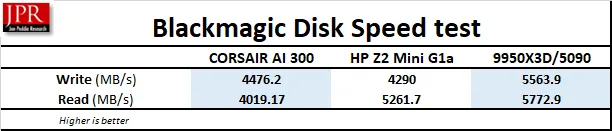

Blackmagic Disk Speed Test

The Blackmagic Disk Speed Test is a free tool that measures the storage performance of SSDs, primarily for video workflows. It performs read and write tests using large blocks of data to determine if a drive is fast enough for tasks like real-time playback and recording of high-quality video. Here are our results.

The CORSAIR AI Workstation 300’s SSD is faster at writes but slower at reads than the HP Mini’s, and both are eclipsed by the desktop’s Gen 5 SSD. However, all of them are suitable for real-time playback and recording of high-quality video.

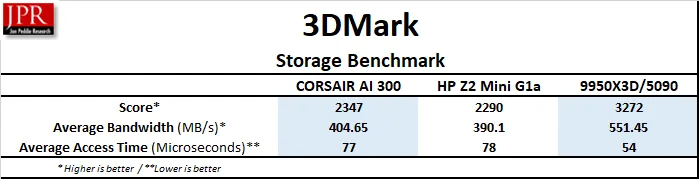

3DMark Storage Benchmark

Although UL’s 3DMark Storage Benchmark is designed primarily for gaming, it can evaluate and compare SSD performance by giving average bandwidth and average access times.

The SSDs in both compact PCs perform very similarly, with the CORSAIR AI Workstation 300 edging out the HP Mini.

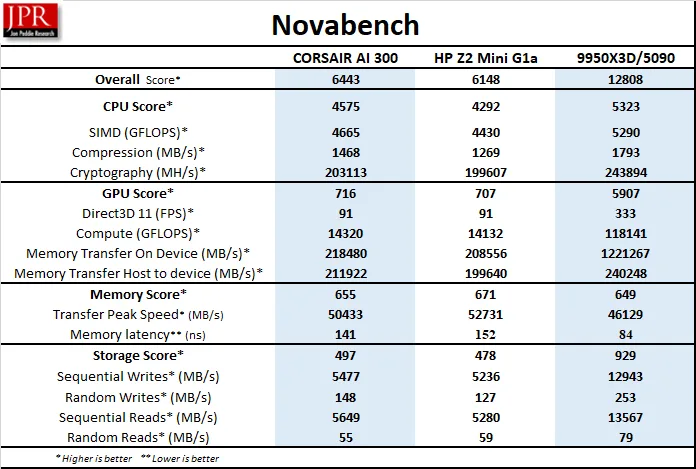

Novabench Summary

Novabench provides a rapid system benchmark that scores CPU, GPU, memory, and storage performance. Here is its summary:

The desktop leads in GPU score and storage thanks to the RTX 5090 and Gen 5 SSD, while the AI Workstation 300 generally scores higher than the Z2 Mini G1a.

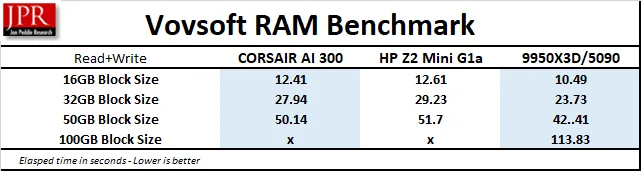

Vovsoft RAM Benchmark

Vovsoft RAM Benchmark simulates real-world memory utilization by testing large, user-defined data blocks. Multiple block sizes were tested; an ‘x’ denotes configurations too large for the system memory to process.

The desktop’s 192 GB DDR5 kit handled blocks up to 100 GB, while both compact PCs remained competitive across all other tested sizes, demonstrating impressive stability and memory throughput.

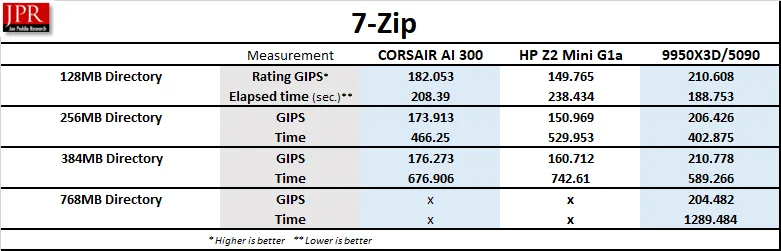

7-Zip Compression Benchmark

The 7-Zip built-in benchmark is a synthetic benchmark that tests LZMA and LZMA2 algorithms compression/decompression and gives a rating in GIPS (giga or billion instructions per second), which is calculated from the measured speed.

Increasing the dictionary size revealed memory scalability limits. Even with nearly all 128 GB allocated to system memory on both compact PCs, only the desktop’s 192 GB configuration could process the largest 768 MB dictionary test. However, the AI Workstation 300 was consistently faster than the Z2 Mini G1a.
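The effect of dictionary size can be explored with Python’s standard `lzma` module, which exposes LZMA2’s `dict_size` filter option. This is a minimal sketch, not 7-Zip’s benchmark: it runs single-threaded, it does not compute GIPS ratings, and the sample data and dictionary sizes are our own choices. The underlying point carries over, though: the LZMA2 encoder’s memory use grows with the dictionary size (and, in a multi-threaded run like 7-Zip’s, roughly with the thread count as well), which is how large dictionaries can exhaust even 128 GB.

```python
import lzma
import time


def lzma2_roundtrip(data: bytes, dict_size: int):
    """Compress, then decompress, with LZMA2 at a given dictionary size.

    Returns (compressed_bytes, restored_bytes, compress_s, decompress_s).
    """
    filters = [{"id": lzma.FILTER_LZMA2, "dict_size": dict_size}]
    start = time.perf_counter()
    packed = lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)
    middle = time.perf_counter()
    restored = lzma.decompress(packed)
    end = time.perf_counter()
    return packed, restored, middle - start, end - middle


def sweep(data: bytes, dict_sizes):
    """Return rows of (dict_size, compressed_size, compress_MB_s, decompress_MB_s)."""
    megabytes = len(data) / 1e6
    rows = []
    for size in dict_sizes:
        packed, restored, t_comp, t_decomp = lzma2_roundtrip(data, size)
        if restored != data:
            raise ValueError(f"round trip failed at dict_size={size}")
        rows.append((size, len(packed),
                     megabytes / max(t_comp, 1e-9),     # guard against a zero timer delta
                     megabytes / max(t_decomp, 1e-9)))
    return rows


if __name__ == "__main__":
    sample = b"CORSAIR AI Workstation 300 " * 40_000  # about 1 MB of repetitive text
    for size, packed_len, comp, decomp in sweep(sample, [1 << 20, 8 << 20]):
        print(f"dict {size >> 20} MiB: {packed_len} bytes, "
              f"{comp:.1f} MB/s compress, {decomp:.1f} MB/s decompress")
```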

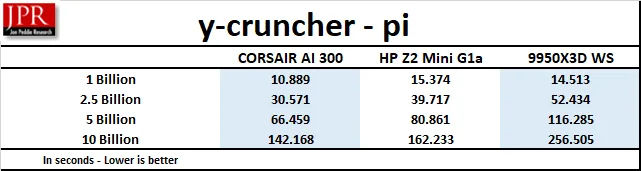

Y-cruncher Benchmark

y-cruncher is a free, fully multi-threaded synthetic benchmark that tests a system’s raw computational power by calculating Pi, pushing the CPU and RAM to their limits. We chose four tests, from 1 billion to 10 billion digits, and kept each test running entirely in RAM, with no swapping to disk allowed.
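y-cruncher computes Pi with the Chudnovsky series by default. The sketch below evaluates that same series with binary splitting in pure Python integer arithmetic. It is a standard textbook-style formulation and nothing like y-cruncher’s optimized, multi-threaded implementation, but it shows why the workload is dominated by multiplications of enormous integers, which stress both the CPU and memory as the digit count grows.

```python
import math


def chudnovsky_pi(digits: int) -> int:
    """Return Pi * 10**digits as an integer (the last digit may be off by one).

    Uses the Chudnovsky series evaluated with binary splitting:
    pi = 426880 * sqrt(10005) * Q(0, N) / T(0, N).
    """
    C = 640320
    C3_OVER_24 = C ** 3 // 24

    def split(a: int, b: int):
        """Combine series terms a..b-1 into integers (P, Q, T)."""
        if b - a == 1:
            if a == 0:
                p = q = 1
            else:
                p = (6 * a - 5) * (2 * a - 1) * (6 * a - 1)
                q = a * a * a * C3_OVER_24
            t = p * (13591409 + 545140134 * a)
            if a & 1:
                t = -t  # the series alternates in sign
            return p, q, t
        m = (a + b) // 2
        p1, q1, t1 = split(a, m)
        p2, q2, t2 = split(m, b)
        return p1 * p2, q1 * q2, q2 * t1 + p1 * t2

    # Each term contributes roughly 14.18 decimal digits
    digits_per_term = math.log10(C3_OVER_24 / 6 / 2 / 6)
    terms = int(digits / digits_per_term + 1)
    _, q, t = split(0, terms)
    one = 10 ** digits
    sqrt_c = math.isqrt(10005 * one * one)  # sqrt(10005) scaled by 10**digits
    return (q * 426880 * sqrt_c) // t


if __name__ == "__main__":
    print(chudnovsky_pi(50))
```

Running `chudnovsky_pi(50)` prints an integer beginning 31415926535897932384; the binary-splitting structure is what lets real Pi programs replace many small operations with a few huge multiplications.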

The AI Workstation 300 is faster than the Z2 Mini G1a, and both machines decisively outperformed the desktop in y-cruncher, demonstrating the advantage of ultra-fast LPDDR5X-8000 memory in compute-intensive tasks.

With synthetic testing complete, we now move on to semi-real-world workloads that simulate practical performance across productivity and AI applications.

Semi-Real World Benchmarks

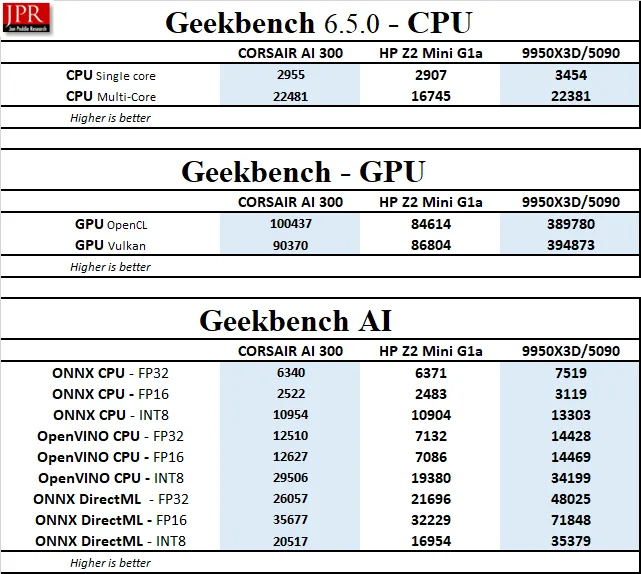

Geekbench 6 CPU and Geekbench AI Benchmarks

Geekbench 6 is a well-established cross-platform benchmark that evaluates CPU performance by timing the completion of diverse, real-world workloads.

Both Geekbench and Geekbench AI straddle the line between synthetic and real-world testing. They simulate practical workloads such as image compression, machine learning inference, and object recognition.

Below is a summary comparison of all three systems’ results in Geekbench’s CPU, GPU, and AI benchmarks:

Again, the CORSAIR AI Workstation 300 consistently outscored the HP Z2 Mini G1a, sometimes by a significant margin, although the desktop consistently scored the highest.

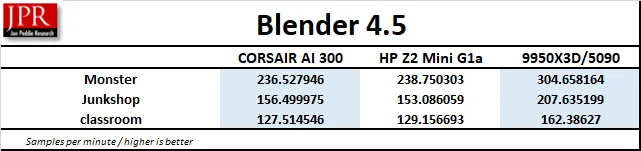

Blender 4.5.0 Benchmark

Blender 4.5.0 serves as a real-world benchmark by rendering multiple professional-grade 3D scenes. While rendering is just one part of the full workflow, it offers a practical performance snapshot for creators.

We ran the latest Blender 4.5.0 benchmark, focusing on CPU rendering performance. The benchmark automatically renders each scene multiple times and reports results in samples per minute. It is available as a free download from Blender Open Data.

Below is the performance summary.

There is very little difference in the Blender rendering speeds between the compact PCs.

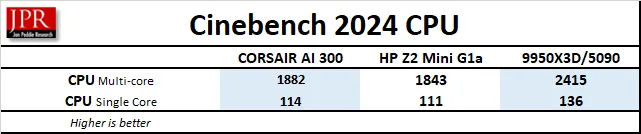

Cinebench 2024 (MAXON Redshift Engine)

Cinebench 2024 is based on MAXON’s professional 3D content creation suite, Cinema 4D. The latest version uses real-world rendering tasks from the Redshift engine, although it covers only the render/export phase of the workflow. It accurately and automatically provides multi-core and single-core CPU scores (as well as a GPU score), making it an excellent tool for quick comparisons.

Here is the Cinebench 2024 summary chart comparing the tested systems.

The CORSAIR AI Workstation 300 scores higher than the HP Z2 Mini G1a.

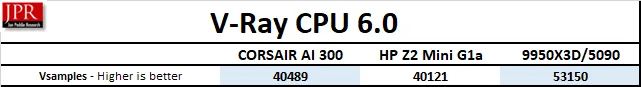

V-Ray CPU Benchmark

The V-Ray benchmark leverages the same rendering engine used by professionals in visual effects and design to measure real-world render performance. We tested CPU-only mode to isolate raw computational throughput.

Again, the AI Workstation 300 is slightly faster than the Mini.

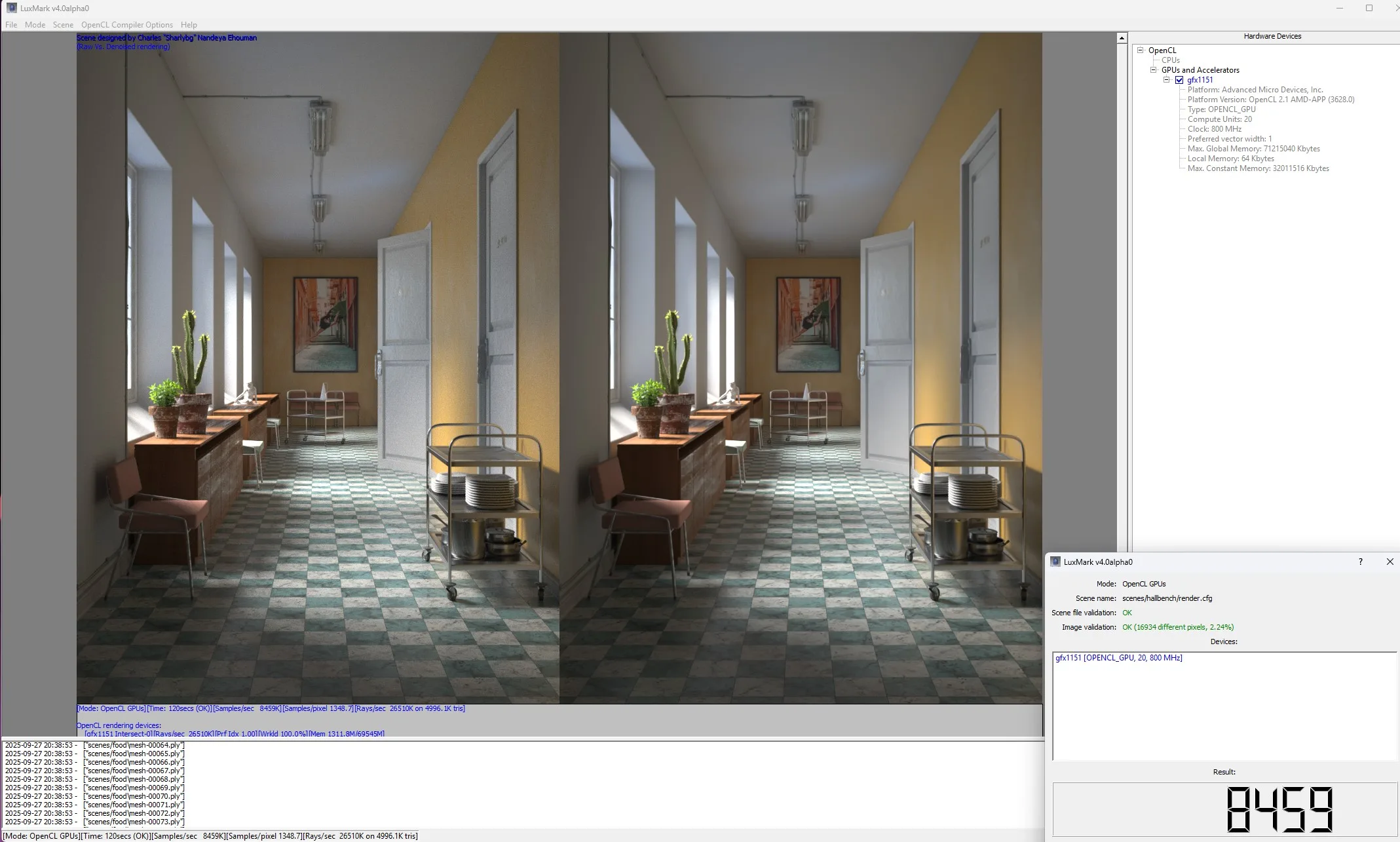

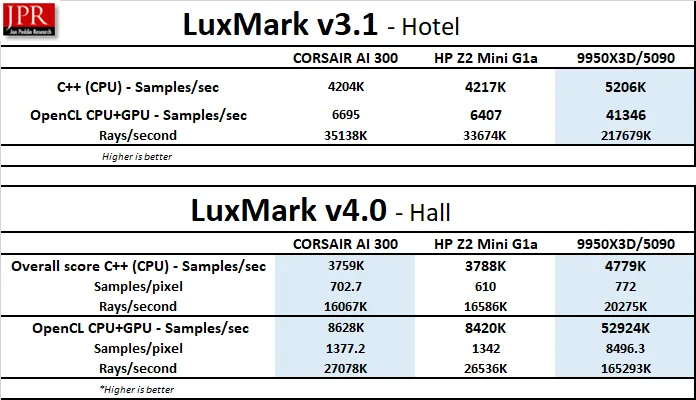

LuxMark Benchmark (v3.1 & v4.0 Alpha)

LuxMark is a GPU benchmark using the LuxCore renderer, which is an open-source ray-tracing renderer used for visual effects and architectural applications. These two benchmarks are relevant for evaluating workstation graphical rendering capabilities, where accurate light simulation is crucial. It measures OpenCL performance, and it is based on Intel Embree. Although narrow in scope since rendering is only one part of the workflow, it’s useful for comparing workstation performance.

We used the older 3.1 version and the newest 4.0 (Alpha). Each version offers 3 separate tests, and we chose the most complex scenes to give our test systems a real workout.

Below are the LuxMark benchmark results for each platform.

The two compact PCs deliver comparable compute results, but unsurprisingly, the RTX 5090’s GPU compute power far exceeds the integrated Radeon 8060S in LuxMark rendering workloads.

Next, we will move on to broader hybrid benchmark suites that incorporate office, AI, and mixed workload testing.

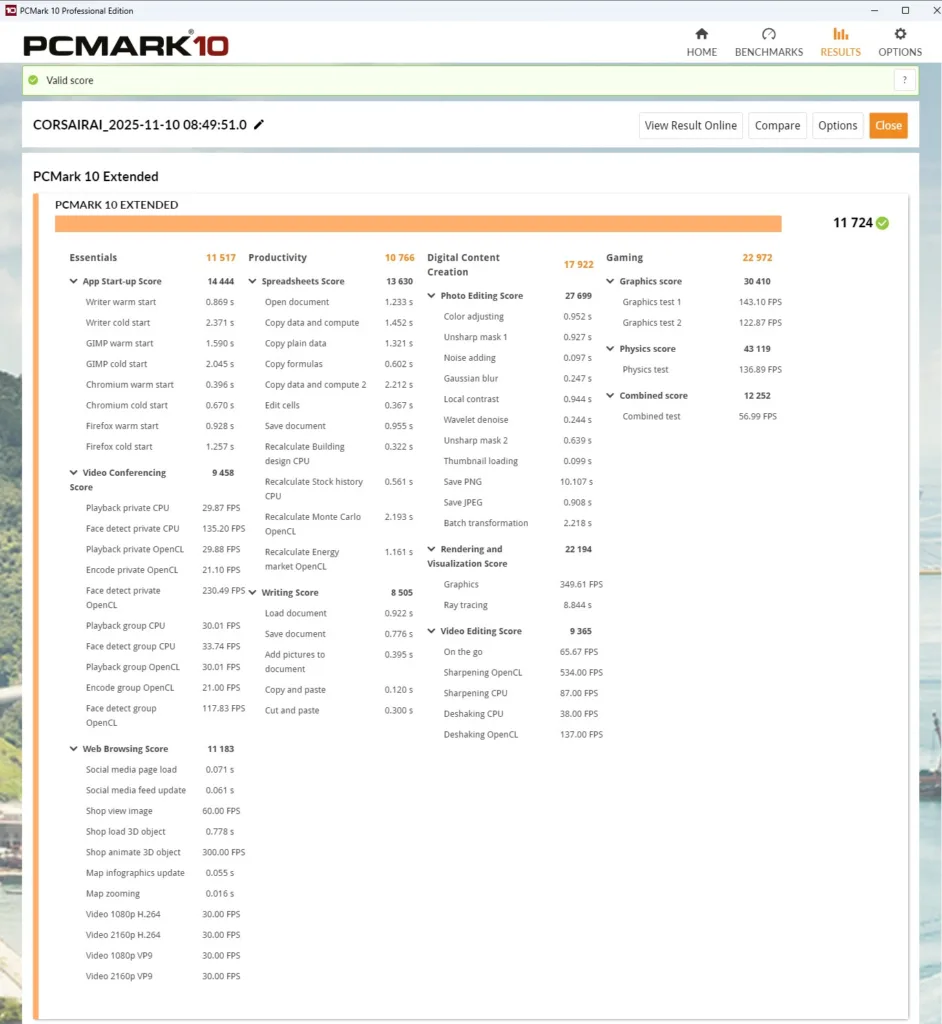

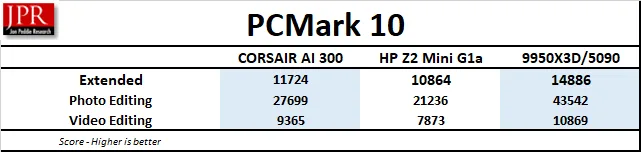

PCMark 10 Professional Edition

PCMark 10 offers a range of real-world timed benchmarks, simulating everyday tasks such as web browsing, video conferencing, photo and video editing, and casual gaming. We used the Extended Suite in this review (courtesy of UL) for a comprehensive evaluation.

Our analysis primarily focuses on overall system performance along with photo and video editing subtests. While UL’s Procyon Office Suite has largely superseded PCMark 10, the benchmark remains fully supported and relevant, especially for systems lacking Adobe Creative Cloud licenses.

Since PCMark 10’s Extended Suite relies on GPU acceleration for certain tasks, the desktop with the RTX 5090 achieved the highest overall scores, although the AI Workstation 300 beat the Mini soundly this time.

UL Procyon Benchmark Suite

UL’s Procyon benchmark suite blends real-world applications and simulated workloads to measure performance across different domains. It includes Office, AI Inference, and Image Generation benchmarks, offering a balanced view of productivity and AI capabilities.

Procyon Office Benchmark

The Procyon Office suite evaluates performance in Microsoft Word, Excel, PowerPoint, and Outlook. The CORSAIR AI Workstation 300 delivered detailed, consistent results across these productivity tasks.

Below is the summary of results:

The Z2 Mini G1a also performed well for all Office tasks, although the AI Workstation 300 retained a solid lead.

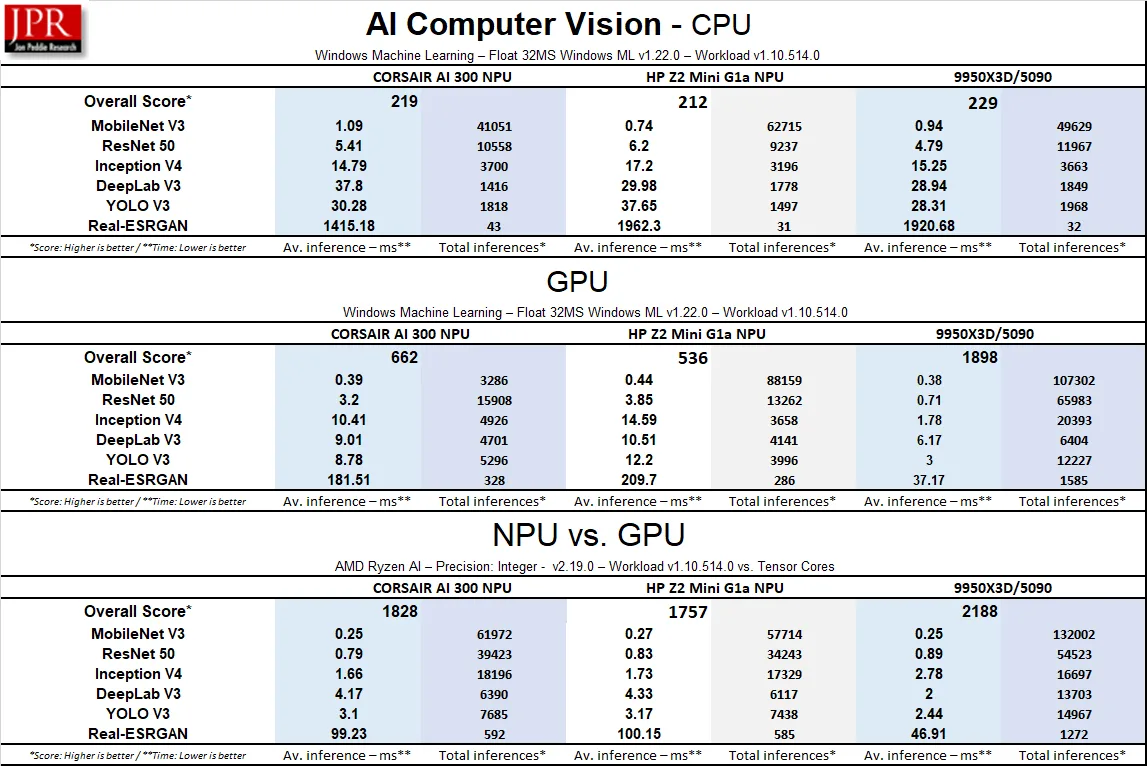

Procyon AI Computer Vision Benchmark

The Procyon AI Computer Vision Benchmark evaluates AI inference performance across five models, using inference engines from several vendors. It includes:

• MobileNet V3 – lightweight image classification

• Inception V3 – deeper image recognition

• ResNet-50 – deep residual network for image classification

• DeepLab V3 – semantic image segmentation (per-pixel classification)

• Real-ESRGAN – intensive upscaling and image enhancement

We first ran the Windows ML Float32 version of the benchmark on our test systems to establish baseline CPU inference performance, then moved on to the GPU, and finally tested each compact system’s NPUs using AMD Ryzen AI versus the RTX 5090’s Tensor Cores.

Performance results were somewhat mixed—each system excelled in different inference workloads, although the CORSAIR AI Workstation 300 was generally faster than the HP Z2 Mini G1a.

The Ryzen AI NPUs performed surprisingly well, achieving results competitive with many discrete GPUs despite their 50 TOPS limit. Notably, they exceed Microsoft’s Copilot+ PC requirement of an NPU capable of 40+ TOPS, confirming their future readiness. But of course, the NVIDIA flagship RTX 5090’s Tensor Cores were significantly faster.

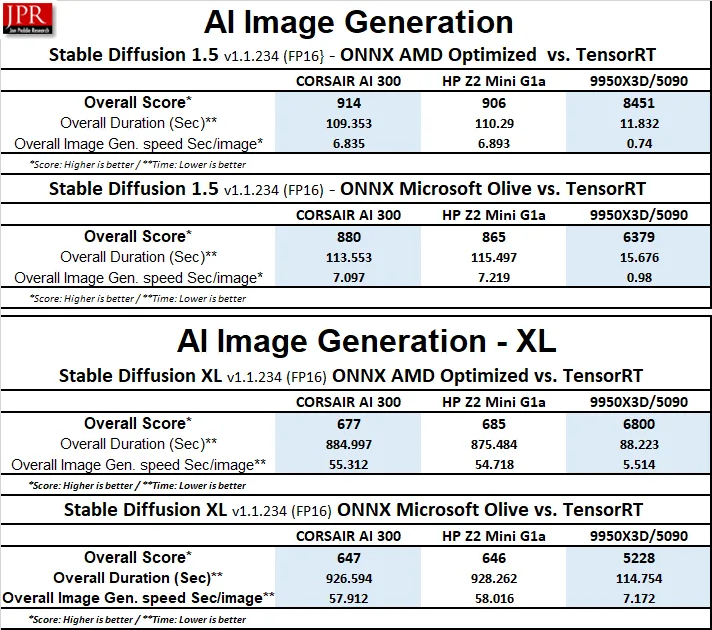

UL Procyon AI Image Generation Benchmark

The Procyon AI Image Generation benchmark measures text-to-image inference performance using Stable Diffusion models. We ran both workloads at FP16, first with AMD-optimized ONNX and then with Microsoft Olive-optimized ONNX, versus TensorRT on the RTX 5090.

• Standard (512×512, batch size 1)

• Stable Diffusion XL (1024×1024, batch size 4)

Although the benchmark is primarily optimized for GPUs, it can also run, very slowly, on the desktop CPU’s integrated graphics, which we did not benchmark.

The RTX 5090 completed these tasks roughly ten times faster thanks to its dedicated Tensor Cores, whereas the AI Workstation 300 traded blows with the Mini.

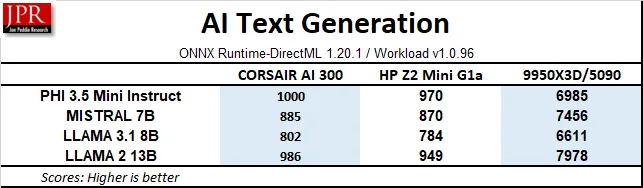

UL Procyon AI Text Generation Benchmark

The Procyon AI Text Generation benchmark evaluates performance across several LLMs. Since it primarily leverages GPU inference, the RTX 5090-equipped PC holds a clear advantage.

The summary results are presented below with the AI Workstation 300 again beating the Mini.

With synthetic and hybrid workloads completed, we now transition to fully real-world application benchmarks, beginning with PugetBench for DaVinci Resolve.

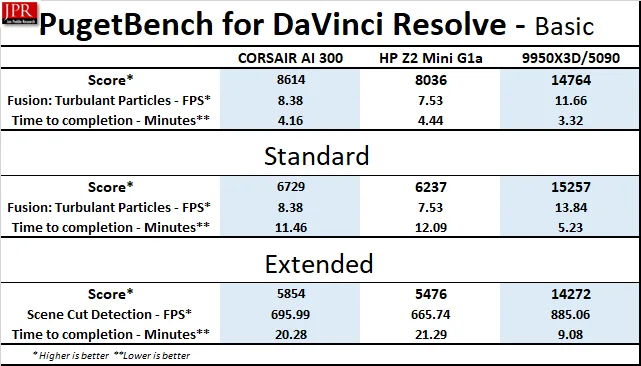

PugetBench for DaVinci Resolve

Unlike many benchmarks that isolate final rendering, PugetBench for DaVinci Resolve evaluates the entire creative workflow — from timeline playback to rendering and export. It runs directly atop DaVinci Resolve, pulling data from real in-app performance. We used the full version of Blackmagic Design’s DaVinci Resolve for testing.

While not coming close to the RTX 5090-equipped desktop in raw performance, the AI Workstation 300 beat the Z2 Mini G1a and performed exceptionally well, delivering smooth, stable results for Resolve workloads.

SPECworkstation 4.0 Benchmark Suite

All the SPECworkstation 4.0 benchmarks are based on professional applications, most of which are in the CAD/CAM or media and entertainment fields. All these benchmarks are free except for vendors of computer-related products and/or services.

The most comprehensive SPECworkstation benchmark is the newly revamped 4.0 version. It is a free-standing benchmark that does not require ancillary software. It measures GPU, CPU, storage, and all other major aspects of workstation performance based on actual applications and representative workloads. It features a new category of tests focusing on AI and ML workloads and has updated many others over the older version.

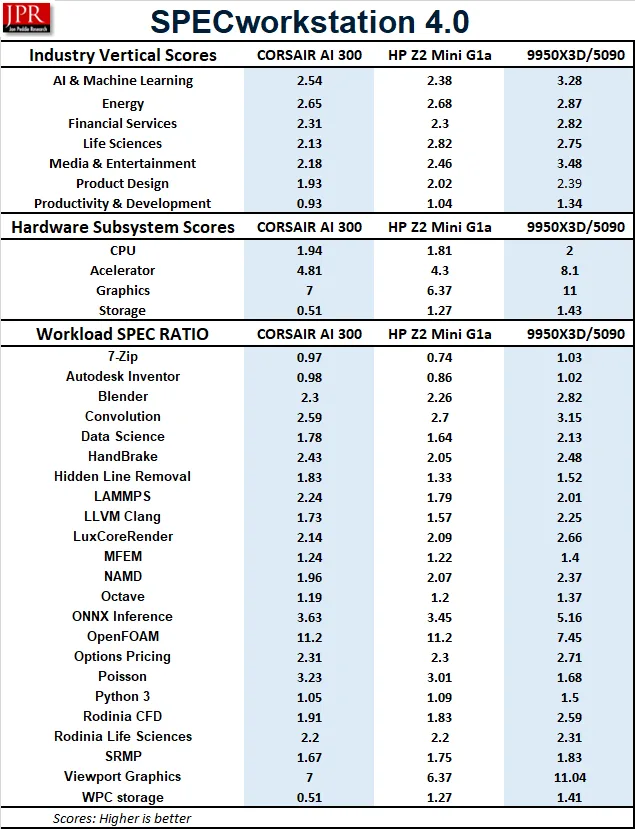

Below is our SPECworkstation 4.0 performance summary:

In most of the subtests, the compact PCs outperformed the official SPEC reference workstation, affirming their abilities to perform workstation tasks. In a few cases they performed close to the desktop’s scores — and even outperformed it in OpenFOAM and Poisson. Generally, the CORSAIR AI Workstation 300 performed better than the HP Z2 Mini G1a.

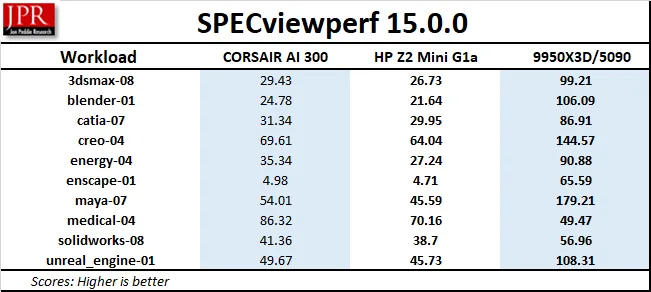

SPECviewperf 15 Benchmark Suite

SPECviewperf 15, a major revision of the 2020 edition, benchmarks professional visualization workloads that leverage both system RAM and GPU resources. It simulates large data manipulation and rendering typical of engineering, architecture, and media workflows.

The RTX 5090-equipped desktop was clearly superior in GPU-heavy visualization tasks, falling behind only in the medical-04 test. However, the compact PCs proved capable of daily professional workloads, including CAD, BIM, and general visualization, with the CORSAIR AI Workstation 300 faster than the HP Z2 Mini G1a.

While the Radeon 8060S excels at handling large memory-bound models, it cannot match discrete GPUs in raw rendering throughput. In heavy 3D projects, viewport navigation may slow down.

Beyond conventional workstation tasks, the integrated GPUs in the AI Workstation 300 and Z2 Mini can access up to 96 GB of shared system memory, enabling them to run very large AI models, significantly larger than what the RTX 5090 can fit in its 32 GB of VRAM. AMD claims that the Ryzen AI Max PRO architecture can handle LLMs of up to 128 billion parameters, effectively offering ChatGPT 3.0-scale performance locally, without the cloud!

With that in mind, we moved on to testing Large Language Model performance using LM Studio.

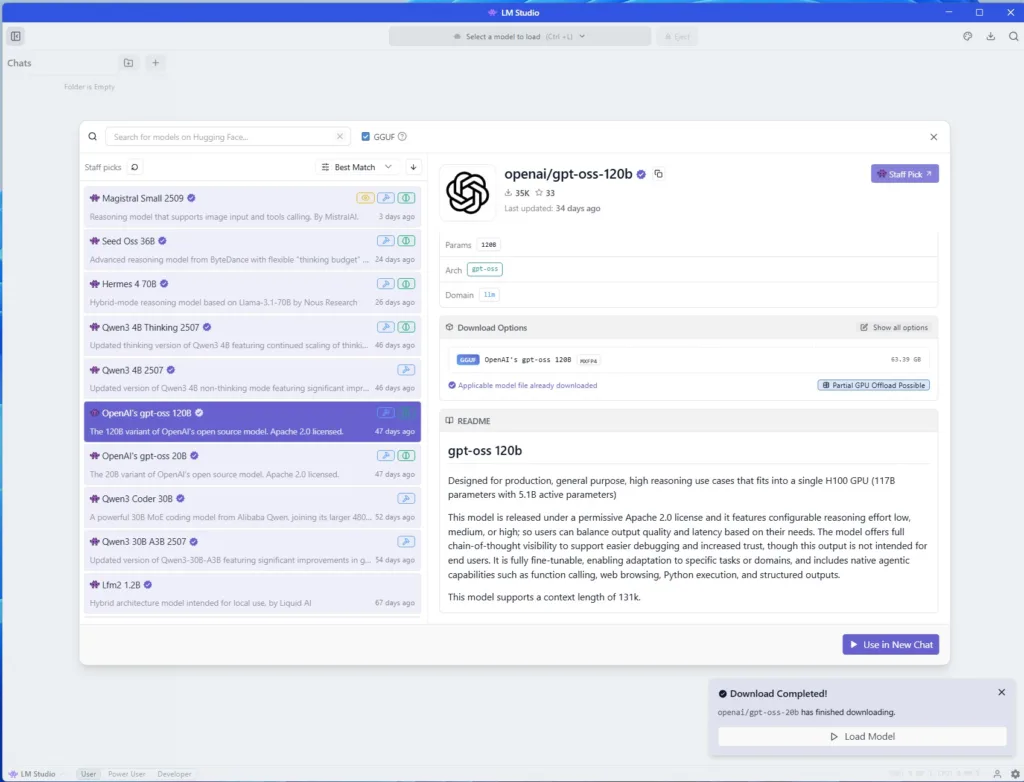

LM Studio (Local AI Inference Testing)

LM Studio is a powerful, free desktop application that enables users to download, run, and experiment with large language models (LLMs) locally. It is ideal for those prioritizing data privacy and offline inference, unlike cloud-based platforms such as ChatGPT, which require subscriptions and transmit user prompts to remote servers. Once a model is downloaded, LM Studio can operate entirely offline, though search and API-dependent features are then unavailable.

The OpenAI GPT-OSS 120B model stands out for its natural language fluency, context retention, and coherent generation. It is also highly optimized for efficiency: MXFP4 quantization reduces its Mixture-of-Experts (MoE) weights to 4.25 bits per parameter, enabling excellent inference performance even on lower-end hardware. According to AMD and OpenAI, the model’s 4-bit deployment runs smoothly on the Ryzen AI Max+ PRO 395 with minimal quality loss.
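The 4.25-bit figure follows from the MXFP4 layout in the Open Compute Project (OCP) Microscaling specification: each block of 32 four-bit E2M1 values shares one 8-bit power-of-two scale, so each parameter costs 4 + 8/32 = 4.25 bits. The Python sketch below quantizes a single block under those assumptions. It is a simplified illustration (ties round to the smaller magnitude, and the scale’s exponent range is not clamped), not OpenAI’s or any runtime’s actual kernel; all names are our own.

```python
import math

# Magnitudes representable by an FP4 E2M1 element (OCP Microscaling spec)
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_EMAX = 2      # 6.0 = 1.5 * 2**2, the largest E2M1 exponent
BLOCK_SIZE = 32    # elements that share one scale in MXFP4
ELEMENT_BITS = 4   # FP4 E2M1
SCALE_BITS = 8     # E8M0 shared power-of-two scale


def bits_per_parameter(block_size: int = BLOCK_SIZE) -> float:
    """Storage cost per element: 4 bits plus its share of the 8-bit scale."""
    return ELEMENT_BITS + SCALE_BITS / block_size


def nearest_e2m1(value: float) -> float:
    """Round to the nearest E2M1 value, clamping to +/-6 (ties -> smaller magnitude)."""
    sign = -1.0 if value < 0 else 1.0
    magnitude = min(abs(value), 6.0)
    best = min(E2M1_MAGNITUDES, key=lambda q: abs(q - magnitude))
    return sign * best


def quantize_block(block):
    """Return (shared_exponent, fp4_values) for one block of floats."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0.0] * len(block)
    # Shared exponent: floor(log2(max|x|)) minus the element type's emax
    shared_exp = math.floor(math.log2(max_abs)) - E2M1_EMAX
    scale = 2.0 ** shared_exp
    return shared_exp, [nearest_e2m1(x / scale) for x in block]


def dequantize_block(shared_exp, fp4_values):
    """Multiply each FP4 value back by the block's power-of-two scale."""
    scale = 2.0 ** shared_exp
    return [v * scale for v in fp4_values]


if __name__ == "__main__":
    print(bits_per_parameter())  # 4.25
    block = [0.8, -2.3, 5.1, 0.05] + [0.0] * 28
    exp, codes = quantize_block(block)
    print(exp, dequantize_block(exp, codes)[:4])  # 0 [1.0, -2.0, 6.0, 0.0]
```

The example output makes the trade-off visible: values snap to a coarse grid (5.1 becomes 6.0), which is the “minimal quality loss” the model’s training and calibration are designed to absorb.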

However, when loading the 63 GB GPT-OSS 120B model on our RTX 5090 desktop, LM Studio displayed a “Partial GPU Offload Possible” warning — indicating memory constraints for full VRAM allocation.

The model is too large to fit into the RTX 5090’s 32 GB of VRAM, and good performance requires well over 96 GB of system RAM, which our desktop’s 192 GB provides. When a model is too big for a GPU’s VRAM, LM Studio offloads part of it to system RAM, which then acts like a “swap file”; the constant data transfers between the two create a performance bottleneck, and without enough RAM the compromises become even more severe.
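A back-of-the-envelope Python sketch shows why the 120B model overflows 32 GB of VRAM but fits in a 96 GB iGPU allocation. It assumes a nominal 120 billion parameters stored uniformly at 4.25 bits (in reality not every tensor is MXFP4), uses the 63 GB model size from our LM Studio testing for placement, and treats `overhead_gb` as a hypothetical placeholder for KV cache and runtime buffers. These functions are our own simplification, not LM Studio’s offload logic.

```python
def weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB (decimal): params x bits / 8."""
    return params_billion * bits_per_param / 8


def split_placement(model_gb: float, gpu_budget_gb: float, overhead_gb: float = 0.0):
    """Return (gb_on_gpu, gb_in_system_ram) under a simple fill-the-GPU-first model.

    overhead_gb is a hypothetical reserve for KV cache, activations, and
    driver/runtime buffers; real runtimes account for these differently.
    """
    usable = max(gpu_budget_gb - overhead_gb, 0.0)
    on_gpu = min(model_gb, usable)
    return on_gpu, model_gb - on_gpu


if __name__ == "__main__":
    print(f"Estimated 120B weights at 4.25 bits: {weights_gb(120, 4.25):.2f} GB")
    print(f"128B params at 4.25 bits: {weights_gb(128, 4.25):.2f} GB")
    for label, budget in [("RTX 5090 (32 GB VRAM)", 32), ("iGPU (96 GB allocation)", 96)]:
        gpu, ram = split_placement(63, budget)
        print(f"{label}: {gpu:.0f} GB on GPU, {ram:.0f} GB in system RAM")
```

Under these assumptions, roughly half of the model must live in system RAM on the RTX 5090 desktop, while the full model fits in the iGPU’s allocation. The 128B line applies the same 4.25-bit storage to AMD’s 128-billion-parameter claim; AMD does not specify the quantization behind that claim.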

Since we last ran these benchmarks, we have learned to optimize the desktop PC better, getting much better results by forcing Model Expert Weights onto the CPU.

Limited RAM is not an issue with the CORSAIR AI Workstation 300 since it has 128GB of memory which is shared between the CPU and the GPU. It is not unified memory since Windows treats CPU and GPU memory as separate banks; however, the AMD driver allows GPU-dedicated memory to be configured up to 96 GB and we had no issues loading the OpenAI 120B model.

Unfortunately, the issues with the HP Mini continued even after a BIOS update, and we could not load the 120B model with 96GB dedicated to the GPU. Dedicating 64GB of memory allowed the model to load, but its performance was no different from using 32GB of dedicated GPU memory and allowing the driver to allocate memory to the GPU on the fly. The Mini’s performance continues to suffer compared to the CORSAIR AI Workstation 300 and the RTX 5090-equipped desktop.

LM Studio loads models with a small default context window of 4,096 tokens, which limits how much text the model can draw on while generating responses. However, by selecting “manually load advanced settings” and “show advanced settings” and turning on “Flash Attention”, we were able to use a 128,000-token window.
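One reason a larger window costs memory is the key/value (KV) cache, which stores attention state for every token in context and grows linearly with context length. The Python sketch below applies the standard size formula for a dense-attention transformer. The layer count, KV-head count, and head size are hypothetical illustration values, not GPT-OSS 120B’s published configuration, and features such as sliding-window attention or KV-cache quantization shrink the real figure.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_element: int = 2) -> int:
    """Size of a dense-attention KV cache in bytes.

    Factor of 2: one key vector and one value vector are stored per
    KV head, per layer, per token. bytes_per_element=2 assumes FP16/BF16.
    """
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_element


# Hypothetical model shape, for illustration only
LAYERS, KV_HEADS, HEAD_DIM = 36, 8, 64

if __name__ == "__main__":
    for tokens in (4_096, 128_000):
        size_gb = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens) / 1e9
        print(f"{tokens:>7} tokens -> {size_gb:.2f} GB of KV cache")
```

With these made-up dimensions, going from 4,096 to 128,000 tokens grows the cache about 31-fold, from roughly 0.3 GB to roughly 9.4 GB, memory that must fit alongside the model weights.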

Afterward, the AI responded much like ChatGPT 3.0, or perhaps even 3.5. In-depth discussions with the AI continued for hours without running out of context or having to pay for the tokens used.

GPT-OSS 120B is an outstanding, fully customizable transformer model offering excellent reasoning for research, tool use, problem-solving, and agentic workflows. It is a top industry model for local inference; because it does not require the cloud, it helps ensure data privacy and manage costs compared with using GPT-5. Even complex production workloads are supported.

There are three effort presets: High, Medium, and Low. The High preset shows the model “thinking”, and for our second prompt it took an average of seven minutes because it worked to produce an accurate response of exactly 300 words, a requirement the Low preset ignored.

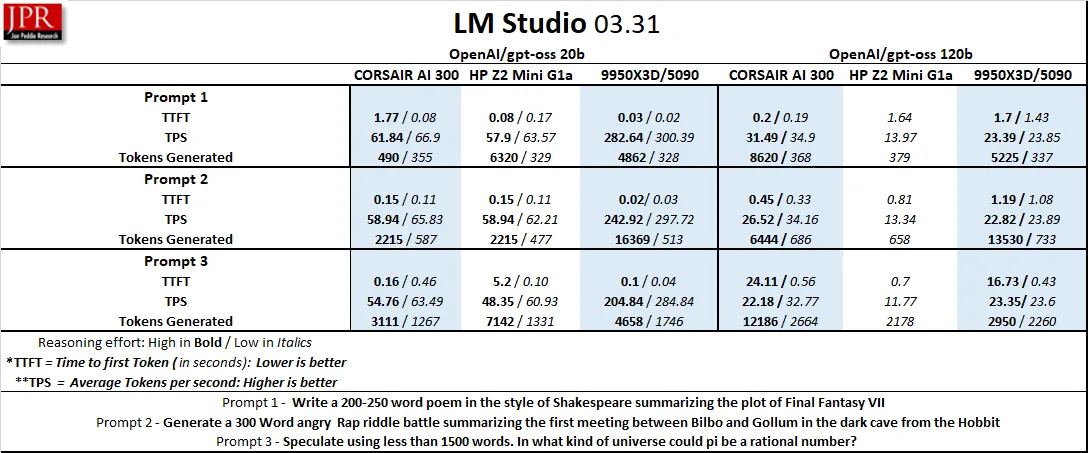

Below are our results using OpenAI GPT-OSS 20B and 120B, comparing the low-effort preset (in italics) with the high-effort preset (in bold), which enables deeper multi-step reasoning and produces more complex answers. Simple prompts should use low-effort reasoning, which needs less computation and responds much faster. There is also a medium-effort setting that we did not test, as its performance should fall between the high and low presets.

OpenAI GPT-OSS 20B, which activates 3.6B parameters per token, gives less complex answers, responds far more quickly, and is at times somewhat less accurate than the 120B model, which activates 5.1B parameters per token. For our purposes, the 120B model is better for information gathering and research. Although the RTX 5090 is incredibly fast with the 20B model, the fifty-plus tokens per second produced by the AI Workstation 300 and the Z2 Mini G1a are significantly faster than the average human can read.
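A quick conversion shows how comfortably fifty tokens per second outpaces a reader. This sketch assumes about 0.75 words per token and a typical adult silent-reading rate of about 250 words per minute; both are commonly cited ballpark figures we chose for illustration, not measurements from our testing.

```python
# Compare LLM generation speed with human reading speed.
# ASSUMPTIONS: ~0.75 words per token; ~250 words/min typical silent reading.
WORDS_PER_TOKEN = 0.75
READING_WPM = 250


def tokens_per_s_to_wpm(tps: float) -> float:
    """Convert a tokens-per-second rate into approximate words per minute."""
    return tps * WORDS_PER_TOKEN * 60


for tps in (20, 50):
    wpm = tokens_per_s_to_wpm(tps)
    print(f"{tps} tok/s ~ {wpm:,.0f} words/min, {wpm / READING_WPM:.1f}x reading speed")
```

At 50 tokens per second this works out to about 2,250 words per minute, roughly nine times the assumed reading rate; even 20 tokens per second is about 900 words per minute.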

When moving to the 120B model, the AI Workstation 300 was generally faster than the RTX 5090 desktop. We consider anything above 20 tokens per second quite acceptable, especially for the complex toy-universe-building responses, full of advanced math formulas, that our third prompt generated with high reasoning effort. Unfortunately, the HP Mini fell short, possibly because of a driver issue that still affects some of these devices.
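One plausible contributor to the 120B result is memory capacity: the RTX 5090 has 32GB of VRAM, while the AI Workstation 300 can dedicate up to 96GB to its integrated GPU. The rough sketch below estimates weight memory as parameters × bits per parameter ÷ 8. It assumes about 117B total parameters for GPT-OSS 120B and about 21B for GPT-OSS 20B, and applies the roughly 4.25 bits per parameter of MXFP4 (used for the MoE weights that make up most of these models) to every parameter, which is a simplification. It ignores the KV cache, runtime buffers, and any layers kept at higher precision, so real usage is higher.

```python
# Rough weight-memory estimate: parameters x bits per parameter / 8.
# ASSUMPTIONS: ~117B params (GPT-OSS 120B), ~21B params (GPT-OSS 20B),
# ~4.25 bits/param (MXFP4) applied to all weights. KV cache and runtime
# buffers are ignored, so actual memory use is higher.
GB = 1e9


def weights_gb(params_billion: float, bits_per_param: float = 4.25) -> float:
    """Approximate size of the model weights in gigabytes."""
    return params_billion * 1e9 * bits_per_param / 8 / GB


budgets_gb = {"RTX 5090 VRAM": 32, "Ryzen AI Max iGPU allocation": 96}
for name, params in [("GPT-OSS 20B", 21), ("GPT-OSS 120B", 117)]:
    need = weights_gb(params)
    fits = ", ".join(f"{k}: {'fits' if need < v else 'spills'}" for k, v in budgets_gb.items())
    print(f"{name}: ~{need:.1f} GB of weights -> {fits}")
```

Under these assumptions the 20B model needs about 11GB and sits entirely in VRAM, while the 120B model needs about 62GB, more than the RTX 5090 can hold but well within a 96GB iGPU allocation.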

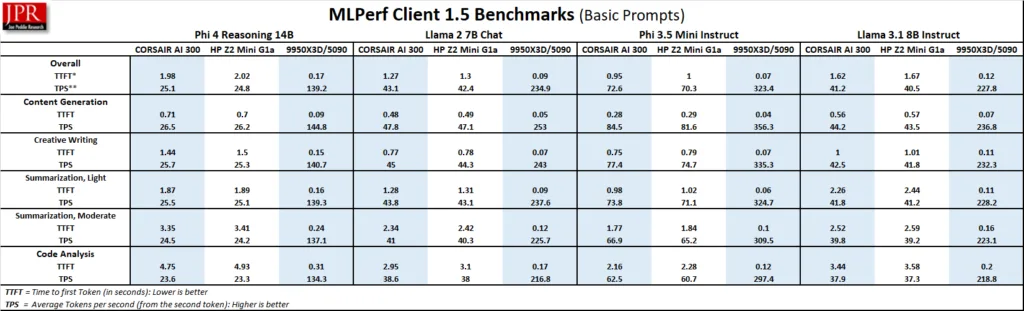

MLPerf Client 1.5 (LLM and AI Inference Testing)

The LLM tests in MLPerf Client v1.5 use several different language models and are also considered real-world benchmarks. The benchmark suite was updated yesterday from v1.0 to v1.5 with significant improvements, and we were privileged to download a preview build last week.

The new v1.5 AI PC benchmark adds Windows ML support for improved GPU/NPU performance, expanded platform support, and experimental power/energy measurements. Fortunately for us, the GUI is out of beta and has worked flawlessly, a clear improvement over v1.0. Kudos to MLCommons for continuing to develop and expand its AI benchmarking suite; we look forward to great things from them in the future.

The basic benchmark measures and compares Code Analysis, Content Generation, Creative Writing, and Light and Moderate Summarization. Just like LM Studio, it can be run from either the command line or the GUI.

Although there are many more runtimes and models to choose from, we picked ORT GenAI GPU because that runtime works on both AMD and NVIDIA GPUs. Below are the MLPerf Client 1.5 basic benchmark results for four models.

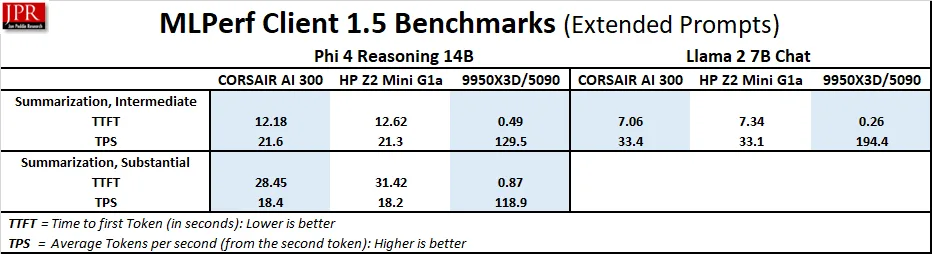

The new v1.5 suite also includes extended tests that go beyond Moderate Summarization for some models.

In all the benchmarks we ran, the RTX 5090 desktop was, as expected, significantly faster than either the AI Workstation 300 or the Z2 Mini G1a, yet the small PCs delivered very good output: well above 20 tokens per second, often above 40, and sometimes much higher. All the models that MLPerf Client 1.5 provides fit easily into the RTX 5090's 32GB of VRAM, giving it a clear performance advantage.

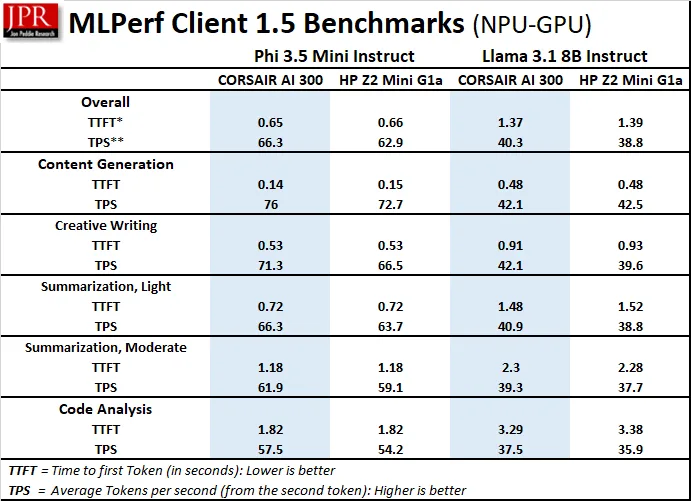

We were unable to run the NPU benchmarks with v1.0 but had no issues running them with the latest version. The desktop PC does not have a dedicated NPU.

Both NPUs achieved excellent results, although the AI Workstation 300 edged out the HP Mini in almost all the MLPerf Client 1.5 benchmarks. So, how well did the CORSAIR AI Workstation 300 do overall? Let us head to our analysis and conclusion.

Final Thoughts

The CORSAIR AI Workstation 300 is an excellent AI workstation for professionals, businesses, and individuals who work with AI and want full privacy without paying subscription prices. It offers excellent performance even with very large LLMs, and its AI-centric design makes models easy to acquire and use, even for those with no prior experience. There is no need to learn the command line to start leveraging AI almost immediately with the preconfigured and customizable CORSAIR AI Software Stack.

CORSAIR offers a two-year warranty with excellent support, whereas if a self-built creator PC fails, the user is responsible for its troubleshooting and downtime. Although $2,500 is expensive, it is less than an AIB RTX 5090 card costs by itself! Compared with our massive, power-hungry desktop PC, the CORSAIR AI Workstation 300 represents a more efficient and reliable AI-first alternative. While our desktop creator PC executes many workloads faster, it also costs roughly 2.6 times as much.

Compared with the HP Z2 Mini G1a, which is about $700 more expensive, the AI Workstation 300 is faster at most of the tasks we benchmarked. Each PC fills a different role, although both leverage the AMD Ryzen AI Max+ PRO 395 with 128GB of memory. The HP Mini is an ISV-certified workstation using ECC memory for an extra layer of protection against memory errors in fields such as medical imaging, where 100% accuracy is essential. However, if you are looking primarily to work with AI and LLMs, the CORSAIR AI Workstation 300 is an unbeatable value.
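The price comparisons above can be checked quickly against the figures quoted in our pricing section: the CORSAIR MSRP of $2,499.99, the HP Z2 Mini G1a at $3,199.00 from BHPhoto, and about $6,500 for our self-built desktop. Prices change often, so treat this as a snapshot.

```python
# Price comparison using the prices quoted earlier in this review (a snapshot).
corsair = 2_499.99    # CORSAIR AI Workstation 300 MSRP as reviewed
hp_street = 3_199.00  # HP Z2 Mini G1a at BHPhoto
desktop = 6_500.00    # approximate cost of our self-built desktop

print(f"HP premium over CORSAIR: ${hp_street - corsair:,.2f}")
print(f"Desktop vs CORSAIR: {desktop / corsair:.2f}x the price")
```

The HP premium works out to $699.01, and the desktop costs about 2.6 times as much as the CORSAIR system.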

If you are primarily an elite gamer who maxes out every setting in AAA games at 4K, and perhaps also make a living as a 3D artist or in other creative work, then the Ryzen 9 9950X3D/RTX 5090/192GB DDR5 combination may be best for your deep pockets. It can also run very large LLMs and other AI workflows locally, although even the mighty RTX 5090 can run out of VRAM and must then fall back on much slower system RAM.

As an AI-first workstation, the Ryzen AI Max platform in the CORSAIR AI Workstation 300 excels, filling a specific niche: a very compact, power-sipping package that handles work once exclusive to large workstations with professional-grade GPUs. Highly recommended!

Pros & Cons

Pros

- Professional look and solid build quality

- Excellent CPU performance

- Powerful GPU with shared memory pool

- 128GB of super-fast RAM

- 50 TOPS NPU adds serious AI power to a small package

- Handles very large LLMs efficiently

- Outstanding AI Software Stack

- Two-year warranty with excellent CORSAIR support

Cons

- Somewhat limited I/O ports

- Integrated graphics hold it back from real-time rendering or GPU-accelerated production work

- High MSRP

We are proud to present the BTR Editor’s Choice Award to the CORSAIR AI Workstation 300, in recognition of its outstanding performance in a compact design as a very capable AI-first workstation!