NVIDIA and OpenAI have announced a major collaboration that’s set to change the game for on-device AI. They are bringing the new gpt-oss family of open-weight models to consumers, allowing the kind of advanced, state-of-the-art AI that was once confined to massive cloud data centers to run with incredible speed on your RTX-powered PC.

This is a big win for local inference: it opens the door to a new generation of faster, smarter AI applications accelerated by GeForce RTX and RTX PRO GPUs.

The New Models

Image credit: NVIDIA

The launch includes two new models designed for a range of uses:

- gpt-oss-20b: This model is optimized for NVIDIA RTX AI PCs with at least 16GB of VRAM. It’s built for speed, delivering up to 250 tokens per second on an RTX 5090 GPU. This is the model most PC gamers and tech enthusiasts will want to check out.

- gpt-oss-120b: A larger, more powerful model designed for professional workstations and accelerated by NVIDIA RTX PRO GPUs.

Both models are the first to support MXFP4 precision on NVIDIA RTX hardware. MXFP4 is a 4-bit microscaling floating-point format that, according to NVIDIA, improves model quality and accuracy at no added performance cost compared with older low-precision methods. Both models also support context lengths of up to 131,072 tokens, which makes them well suited to long-input tasks like in-depth research and code analysis.
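To see why 4-bit weights matter for a 16GB card, a rough back-of-the-envelope estimate helps. The sketch below assumes the MXFP4 layout described in the OCP Microscaling specification (4-bit elements sharing one 8-bit scale per block of 32 values). As a simplification, it treats every parameter as MXFP4 and uses round parameter counts of 20 and 120 billion taken from the model names. Real checkpoints may keep some tensors in higher precision and also need memory for the KV cache and activations, so actual usage will differ.

```python
# Rough weight-memory estimate for MXFP4 models (illustrative assumptions only).

def mxfp4_bits_per_param(element_bits=4, scale_bits=8, block_size=32):
    """Effective bits per parameter: each block of `block_size` 4-bit
    elements shares one 8-bit scale (layout per the OCP Microscaling spec)."""
    return element_bits + scale_bits / block_size


def weight_gib(num_params, bits_per_param):
    """Approximate size of the weights in GiB."""
    return num_params * bits_per_param / 8 / 2**30


# Assumed round parameter counts taken from the model names, not exact figures.
MODELS = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 120e9}

for name, params in MODELS.items():
    mxfp4 = weight_gib(params, mxfp4_bits_per_param())
    sixteen_bit = weight_gib(params, 16)
    print(f"{name}: ~{mxfp4:.1f} GiB in MXFP4 vs ~{sixteen_bit:.1f} GiB in 16-bit")
```

Under these assumptions the gpt-oss-20b weights come to roughly 10 GiB, which lines up with the 16GB VRAM guidance, while the same weights at 16 bits per parameter would not fit on any single consumer card.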

How to Get Started

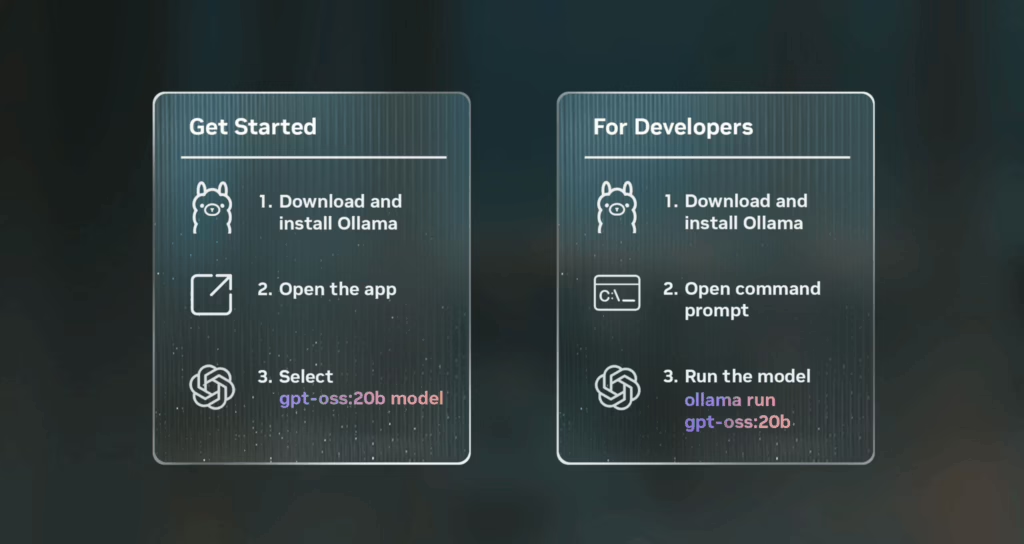

NVIDIA has a few ways for AI enthusiasts and developers to get these new models running on their RTX systems right away.

- Ollama App: The easiest method for most users is the new Ollama app. Its user interface now includes out-of-the-box support for the gpt-oss models, all fully optimized for RTX GPUs. You can find more details on how to get started on the Ollama website.

- llama.cpp: For developers who prefer to work with open-source frameworks, NVIDIA is collaborating with the open-source community to optimize performance on RTX GPUs, with recent contributions such as CUDA Graphs support to reduce overhead. You can find the latest code and information at the llama.cpp GitHub repository.

- Microsoft AI Foundry Local: Windows developers can also access the models via Microsoft AI Foundry Local, currently in public preview. Getting started is as simple as installing Foundry Local and running `foundry model run gpt-oss-20b` in a terminal.
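Once a model is running locally, applications can talk to it over HTTP. As a minimal sketch of the Ollama route, the snippet below sends a prompt to Ollama's local REST endpoint using only the Python standard library. It assumes Ollama is running on its default port (11434) and that the model was pulled under the tag `gpt-oss:20b`; both are assumptions to check on your own machine, and the helper names `build_request` and `ask_local_model` are made up for this example.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and the model
# was pulled under the tag "gpt-oss:20b" (run `ollama list` to confirm the
# tag on your machine).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gpt-oss:20b"


def build_request(prompt, model=MODEL_TAG, url=OLLAMA_URL):
    """Build a non-streaming generate request for Ollama's REST API."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, timeout=120):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the Ollama server running, a call like `ask_local_model("Summarize what MXFP4 is in one sentence.")` returns the model's reply as a string. Nothing leaves the machine, since the request goes to localhost.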

This move democratizes access to powerful AI, empowering developers and users to build and run advanced applications right on their desktops.

As NVIDIA founder and CEO Jensen Huang put it:

“The gpt-oss models let developers everywhere build on that state-of-the-art open-source foundation, strengthening U.S. technology leadership in AI — all on the world’s largest AI compute infrastructure.”

For a deeper dive, check out the official RTX AI Garage blog post from NVIDIA or the NVIDIA company blog for more information on the collaboration.